Author: Denis Avetisyan

A new review synthesizes the rapidly evolving landscape of AI agents designed to assist medical professionals and improve patient care.

This paper presents a seven-dimensional taxonomy for empirically evaluating LLM-based agents in healthcare, based on a systematic review of 49 research publications.

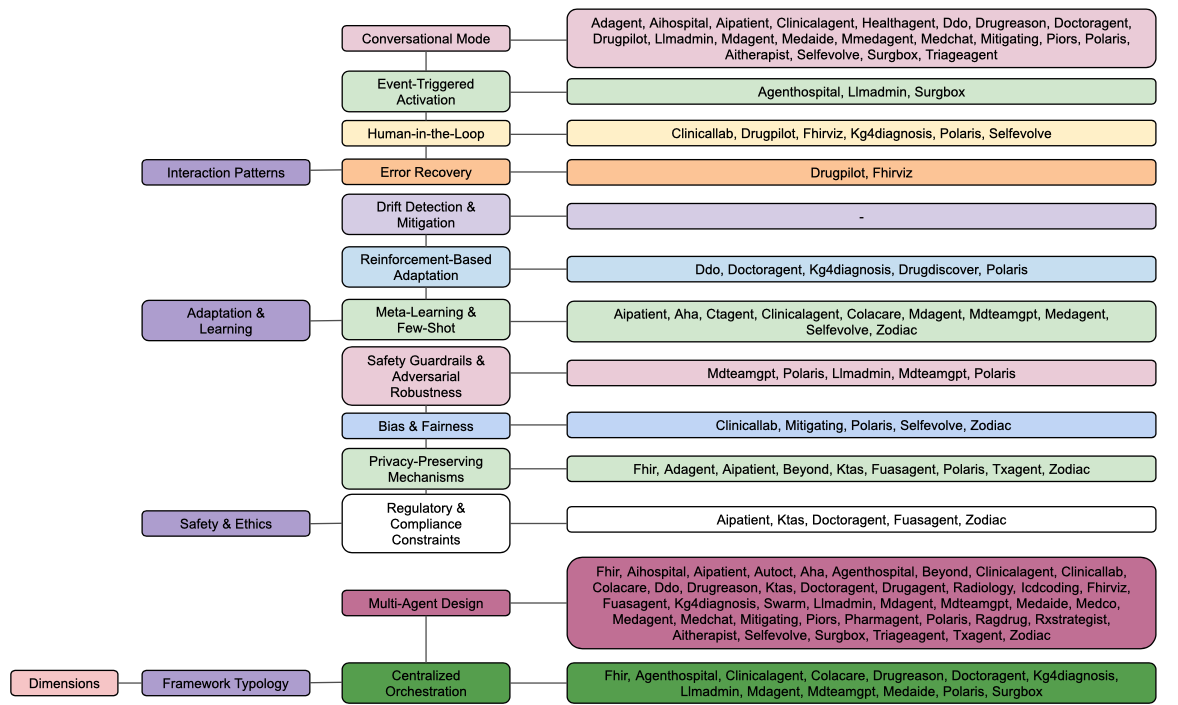

Despite increasing claims of competence, evaluations of Large Language Model (LLM)-based agents in healthcare lack a unified analytical framework. This paper, ‘Agentic AI in Healthcare & Medicine: A Seven-Dimensional Taxonomy for Empirical Evaluation of LLM-based Agents’, addresses this gap through a systematic review of 49 studies, mapped against a novel taxonomy encompassing cognitive capabilities, knowledge management, safety, and task-specific implementation. Our analysis reveals significant asymmetries – strong performance in external knowledge integration but limited progress in areas like event-triggered activation and adaptive learning – highlighting substantial opportunities for targeted development. What architectural and functional advancements are needed to fully realize the potential of agentic AI for truly impactful clinical applications?

The Impending Strain on Clinical Resources: A Systemic Challenge

The healthcare landscape is undergoing a period of intense strain, characterized by aging populations, rising chronic disease prevalence, and persistent workforce shortages. These converging factors are creating unprecedented demands on already limited resources, threatening the sustainability of current care models and potentially compromising patient outcomes. Consequently, there is a growing imperative to explore and implement innovative solutions, with artificial intelligence emerging as a particularly promising avenue. AI-driven tools offer the potential to automate administrative tasks, enhance diagnostic accuracy, personalize treatment plans, and ultimately improve the efficiency with which healthcare is delivered – not as a replacement for human expertise, but as a powerful means of augmenting it and ensuring that quality care remains accessible to all.

Large Language Models (LLMs) represent a significant leap in artificial intelligence, demonstrating an exceptional capacity to parse and produce human language with remarkable fluency. However, translating this capability into trustworthy clinical tools demands more than simply applying these models to healthcare data. Unlike general language tasks, medical applications require unwavering accuracy and a deep understanding of nuanced terminology, potential biases within datasets, and the critical implications of even minor errors. Successful adaptation necessitates rigorous fine-tuning with carefully curated medical corpora, the implementation of robust validation procedures to assess performance across diverse patient populations, and the development of mechanisms to ensure LLMs operate within established clinical guidelines and safety protocols. Only through such meticulous preparation can the promise of LLMs be realized, transforming them from powerful language tools into reliable partners in patient care.

The convergence of Large Language Models and agent-based systems holds considerable potential to reshape healthcare delivery by automating tasks and augmenting the capabilities of medical professionals. These intelligent agents, powered by LLMs, can potentially handle administrative burdens, assist in diagnosis through data analysis, and even personalize patient communication, freeing up clinicians to focus on complex cases and direct patient care. However, realizing this promise demands rigorous attention to safety and reliability; LLMs, while adept at language, are prone to errors and biases that, if unchecked, could lead to misdiagnosis or inappropriate treatment recommendations. Consequently, ongoing research focuses on developing robust validation frameworks, incorporating explainability features, and implementing fail-safe mechanisms to ensure these AI-driven agents consistently provide accurate, trustworthy, and ethically sound support within the demanding healthcare landscape.

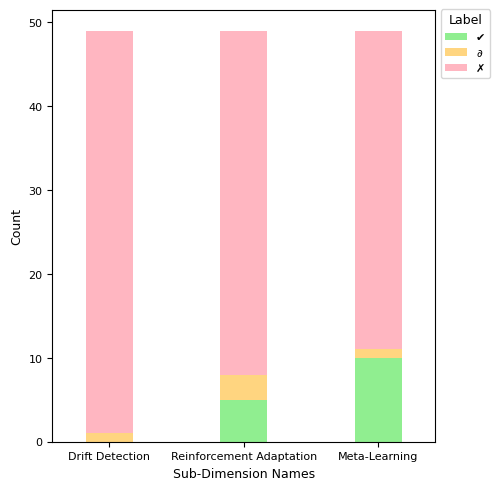

Adaptive Knowledge: A Prerequisite for Long-Term Reliability

Maintaining knowledge accuracy in large language model (LLM)-based agents requires continuous updating and, crucially, the ability to forget outdated or irrelevant information. Without these dynamic knowledge management techniques, LLMs risk basing decisions on inaccurate data, potentially leading to suboptimal outcomes. Current research indicates a significant implementation gap; a survey of existing literature reveals that only 2% of papers address both dynamic updates and forgetting mechanisms within LLM agents, suggesting a critical area for future development to ensure long-term reliability and clinical validity.
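To make the idea of dynamic updating plus forgetting concrete, here is a minimal sketch. It assumes a simple key-value memory with a time-based retention window; the `KnowledgeStore` class, the `max_age` parameter, and the example keys are hypothetical and are not taken from the reviewed papers.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Fact:
    value: str
    updated_at: float  # timestamp (seconds) of the last update


class KnowledgeStore:
    """Toy memory with dynamic updates and time-based forgetting."""

    def __init__(self, max_age: float):
        # Hypothetical retention window, in seconds.
        self.max_age = max_age
        self._facts = {}

    def update(self, key: str, value: str, now: float) -> None:
        # A newer entry overwrites the older one for the same key.
        self._facts[key] = Fact(value, now)

    def forget_stale(self, now: float) -> list:
        # Drop every fact that has not been refreshed within max_age.
        stale = [k for k, f in self._facts.items()
                 if now - f.updated_at > self.max_age]
        for k in stale:
            del self._facts[k]
        return stale

    def get(self, key: str) -> Optional[str]:
        fact = self._facts.get(key)
        return fact.value if fact else None
```

A production agent would more plausibly forget based on guideline versioning or provenance than on age alone; the time window is used here only because it is the simplest policy to show.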

Meta-learning and few-shot learning techniques address the limitations of traditional machine learning approaches that require substantial datasets for each new task. Meta-learning enables a model to learn how to learn, allowing it to quickly generalize to novel clinical scenarios with minimal examples. Few-shot learning specifically focuses on achieving high performance with only a handful of training instances, often leveraging pre-trained models and transfer learning to adapt existing knowledge. This is particularly valuable in healthcare where obtaining large, labeled datasets for every possible condition or procedure is often impractical or cost-prohibitive. By reducing the reliance on extensive re-training, these methods enable LLM-based agents to be deployed more efficiently and adapt to evolving clinical needs with greater agility.

Reinforcement-based adaptation in LLM agents utilizes a feedback mechanism, typically involving reward signals, to iteratively improve performance on specific tasks. This process allows the agent to learn an optimal policy through trial and error, adjusting its decision-making process to maximize cumulative rewards. In clinical applications, rewards can be designed to reflect positive patient outcomes, adherence to best practices, or efficiency gains. Algorithms such as Q-learning and policy gradients are employed to train the agent, enabling it to refine its actions based on the received feedback and ultimately optimize clinical decision-making and improve patient care. The technique is particularly valuable in complex scenarios where explicit programming of optimal behavior is impractical or impossible.
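The Q-learning update mentioned above can be sketched in its classical tabular form. This is an illustration of the update rule only, under the assumption of a small discrete state and action space; the state names, action names, reward value, and the `alpha` and `gamma` settings are hypothetical, and an LLM-based agent would not normally use a lookup table.

```python
from collections import defaultdict


def q_update(q, state, action, reward, next_state, actions,
             alpha=0.5, gamma=0.9):
    """One tabular Q-learning step.

    Q(s, a) <- Q(s, a) + alpha * (r + gamma * max_a' Q(s', a') - Q(s, a))
    """
    best_next = max(q[(next_state, a)] for a in actions)
    td_target = reward + gamma * best_next
    q[(state, action)] += alpha * (td_target - q[(state, action)])
    return q[(state, action)]


# Hypothetical toy setting: two actions, all Q-values start at zero.
actions = ["wait", "escalate"]
q = defaultdict(float)

# The same rewarded transition observed twice moves Q toward the reward.
first = q_update(q, "abnormal_vitals", "escalate", 1.0, "stable", actions)
second = q_update(q, "abnormal_vitals", "escalate", 1.0, "stable", actions)
```

With these settings the estimate for escalating on abnormal vitals rises from 0.5 after the first update to 0.75 after the second, which is the trial-and-error refinement the paragraph describes.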

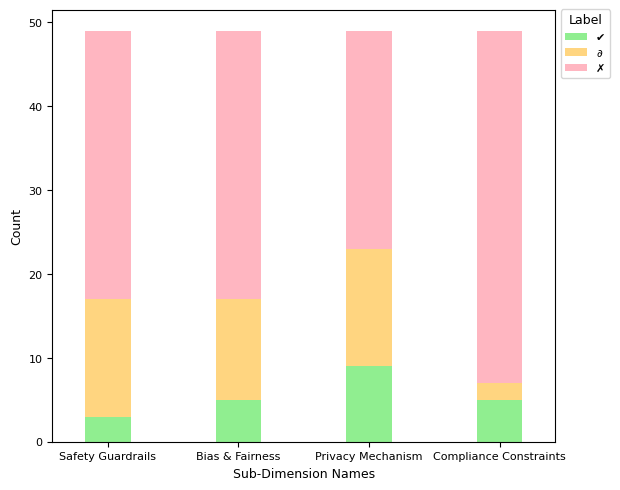

Guarding Against Clinical Error: A Matter of Rigorous Validation

Safety guardrails and adversarial robustness are critical components in deploying AI agents within clinical settings, designed to prevent operation outside of predefined, safe parameters and to resist manipulation via malicious or unexpected inputs. These safeguards function by establishing boundaries for agent behavior and incorporating mechanisms to detect and neutralize potentially harmful input data. A recent survey of published research indicates a significant gap in implementation; only 6% of papers demonstrate full implementation of these necessary security measures. This low percentage highlights a substantial need for increased focus on developing and integrating robust safety protocols to ensure the reliable and secure operation of AI agents in healthcare applications.
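As a rough sketch of what such boundaries can look like in code, the example below pairs an input screen with an output range check. The blocked phrases, the placeholder drug name `drug_a`, and its dose limits are all hypothetical values chosen for illustration, not clinical guidance, and a simple pattern list is far weaker than a real adversarial-robustness defense.

```python
import re
from dataclasses import dataclass

# Hypothetical injection phrases and dose limits, for illustration only.
BLOCKED_PATTERNS = [
    r"ignore (all )?previous instructions",
    r"disregard .* safety",
]
DOSE_LIMITS_MG = {"drug_a": (1.0, 10.0)}


@dataclass
class GuardrailResult:
    allowed: bool
    reason: str


def check_input(text: str) -> GuardrailResult:
    # Reject inputs that match a known manipulation pattern.
    for pattern in BLOCKED_PATTERNS:
        if re.search(pattern, text, flags=re.IGNORECASE):
            return GuardrailResult(False, "suspected prompt injection")
    return GuardrailResult(True, "ok")


def check_dose(drug: str, dose_mg: float) -> GuardrailResult:
    # Reject recommendations for unknown drugs or out-of-range doses.
    limits = DOSE_LIMITS_MG.get(drug)
    if limits is None:
        return GuardrailResult(False, "unknown drug")
    low, high = limits
    if not (low <= dose_mg <= high):
        return GuardrailResult(False, "dose outside allowed range")
    return GuardrailResult(True, "ok")
```

Both checks fail closed: anything not explicitly recognized as safe is rejected rather than passed through.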

Error recovery mechanisms in clinical AI agents are designed to identify and address operational failures as they occur, preventing the propagation of inaccuracies that could lead to adverse patient outcomes. These mechanisms typically involve real-time monitoring of agent performance against predefined criteria, coupled with automated or human-guided corrective actions. Detection strategies include anomaly detection, consistency checks against known medical knowledge, and validation of outputs against expected ranges. Correction methods range from re-executing specific modules with adjusted parameters to triggering alerts for human review and intervention. Effective error recovery is crucial because even minor initial errors can compound through sequential decision-making, potentially resulting in significant and harmful clinical consequences.
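The detect, retry, and escalate pattern described above might be sketched as follows. The function name `run_with_recovery`, the retry limit, and the result dictionary layout are assumptions made for this example rather than a documented interface.

```python
def run_with_recovery(step, validate, max_retries=2):
    """Run `step`, validate its output, retry on failure, then escalate.

    `step` receives the 1-based attempt number so it can adjust its
    parameters between attempts; `validate` returns True for acceptable
    output.
    """
    attempts = 0
    last_error = None
    while attempts <= max_retries:
        attempts += 1
        try:
            output = step(attempts)
        except Exception as exc:  # treat a crash as a recoverable failure
            last_error = str(exc)
            continue
        if validate(output):
            return {"status": "ok", "output": output, "attempts": attempts}
        last_error = f"validation failed for {output!r}"
    # All attempts exhausted: hand the case to a human reviewer.
    return {"status": "needs_human_review", "output": None,
            "attempts": attempts, "error": last_error}


# Hypothetical usage: a heart-rate extraction step that misreads once.
def extract_heart_rate(attempt):
    return 400 if attempt == 1 else 72


def plausible_heart_rate(bpm):
    return 20 <= bpm <= 250


result = run_with_recovery(extract_heart_rate, plausible_heart_rate)
```

Validating every intermediate output, rather than only the final answer, is what keeps a single early error from compounding through later steps.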

Bias and fairness mitigation techniques are crucial in clinical AI systems due to the potential for algorithmic perpetuation of existing health disparities. These techniques address biases stemming from skewed or unrepresentative training data, which can lead to inaccurate diagnoses or inappropriate treatment recommendations for specific demographic groups. Common mitigation strategies include data augmentation to balance representation, algorithmic adjustments to minimize disparate impact, and fairness-aware machine learning models designed to optimize for equitable outcomes across different patient subgroups. Evaluation metrics beyond overall accuracy, such as equal opportunity and predictive parity, are essential to quantitatively assess and ensure fairness in clinical applications; neglecting these considerations can exacerbate inequalities in healthcare access and quality.
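To illustrate the two metrics named above, the following sketch computes per-group true positive rate (the quantity compared under equal opportunity) and positive predictive value (compared under predictive parity), then reports the largest gap between groups. The function names and the tiny dataset are made up for the example.

```python
def rates(y_true, y_pred):
    """Return (true positive rate, positive predictive value) for binary labels."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    tpr = tp / (tp + fn) if tp + fn else float("nan")
    ppv = tp / (tp + fp) if tp + fp else float("nan")
    return tpr, ppv


def fairness_gaps(y_true, y_pred, groups):
    """Largest between-group difference in TPR and in PPV."""
    by_group = {}
    for g in sorted(set(groups)):
        idx = [i for i, x in enumerate(groups) if x == g]
        by_group[g] = rates([y_true[i] for i in idx],
                            [y_pred[i] for i in idx])
    tprs = [v[0] for v in by_group.values()]
    ppvs = [v[1] for v in by_group.values()]
    return {
        "equal_opportunity_gap": max(tprs) - min(tprs),
        "predictive_parity_gap": max(ppvs) - min(ppvs),
    }


# Made-up labels for two patient subgroups, A and B.
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
gaps = fairness_gaps(y_true, y_pred, groups)
```

In this toy data the model finds every true case in group A but only half of those in group B, so the equal opportunity gap is 0.5 even though overall accuracy (6 of 8 correct) looks acceptable.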

Protecting patient privacy requires implementation of several mechanisms to comply with regulations like HIPAA in the United States, GDPR in Europe, and similar legislation globally. These mechanisms include data encryption both in transit and at rest, de-identification and anonymization techniques to remove personally identifiable information (PII), and access controls to restrict data access to authorized personnel only. Differential privacy, federated learning, and homomorphic encryption are increasingly employed to enable data analysis while minimizing privacy risks. Regular audits and adherence to data governance policies are also essential components of a robust privacy-preserving strategy, alongside secure data storage and transmission protocols.
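Of the techniques listed, differential privacy is the easiest to show in a few lines. The sketch below applies the standard Laplace mechanism to a counting query, assuming a sensitivity of 1 (adding or removing one patient changes the count by at most one). The function names and the example values are illustrative, and a real deployment would also need to track the cumulative privacy budget.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    # The difference of two i.i.d. exponential variables with mean `scale`
    # follows a Laplace distribution centered at 0 with that scale.
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(true_count: int, epsilon: float, rng: random.Random,
                  sensitivity: float = 1.0) -> float:
    """Release a count with epsilon-differential privacy (Laplace mechanism)."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    return true_count + laplace_noise(sensitivity / epsilon, rng)


# Hypothetical usage: release how many patients matched a query.
rng = random.Random(0)
noisy = private_count(100, epsilon=1.0, rng=rng)
```

Smaller values of `epsilon` give stronger privacy but noisier answers, since the noise scale is `sensitivity / epsilon`.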

Orchestrating Intelligent Support: A Multi-Agent Paradigm

Multi-agent design is the prevailing approach in advanced clinical support systems, appearing in 82% of the surveyed papers. This approach moves beyond monolithic applications by constructing a network of specialized agents, each dedicated to a specific task – such as medication monitoring, symptom analysis, or patient education. By distributing responsibilities, these systems achieve a more nuanced understanding of individual patient needs and offer correspondingly tailored interventions. This modularity not only enhances the depth of support provided, but also facilitates easier updates and improvements to individual components without disrupting the entire system, promising a more adaptable and robust framework for future healthcare solutions.

Effective clinical support increasingly relies on multi-agent systems, yet simply deploying numerous specialized agents isn’t enough. Centralized orchestration, a system for coordinating these agents, is crucial for maximizing efficiency and preventing potentially harmful conflicts in patient care. While a majority of surveyed research (57%) incorporates this approach, adoption remains partial, largely categorized as Δ, suggesting a focus on incremental improvements rather than fully integrated systems. This indicates that while the benefit of coordination is recognized, substantial work remains to establish robust, comprehensive orchestration frameworks capable of seamlessly managing complex interactions between agents and ensuring consistently reliable clinical support.
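A minimal sketch of centralized orchestration is shown below, assuming each specialized agent is a plain function that returns a recommendation with a severity level. The `Orchestrator` class, the severity scale, the two toy agents, and the "most severe recommendation wins" conflict policy are all assumptions made for this example.

```python
# Hypothetical severity scale used to rank conflicting recommendations.
SEVERITY = {"routine": 0, "urgent": 1, "emergency": 2}


class Orchestrator:
    """Central coordinator that queries every agent and resolves conflicts."""

    def __init__(self):
        self._agents = {}

    def register(self, name, agent):
        self._agents[name] = agent

    def handle(self, case):
        # Collect one recommendation per registered agent.
        recs = [{"agent": name, **agent(case)}
                for name, agent in self._agents.items()]
        # Assumed policy: the most severe recommendation wins.
        final = max(recs, key=lambda r: SEVERITY[r["level"]])
        conflict = len({r["level"] for r in recs}) > 1
        return {"final": final, "conflict": conflict, "all": recs}


# Two toy agents with different views of the same case.
def symptom_agent(case):
    level = "urgent" if case.get("chest_pain") else "routine"
    return {"level": level, "note": "symptom analysis"}


def medication_agent(case):
    return {"level": "routine", "note": "no interaction flagged"}


orchestrator = Orchestrator()
orchestrator.register("symptom", symptom_agent)
orchestrator.register("medication", medication_agent)
decision = orchestrator.handle({"chest_pain": True})
```

Routing every recommendation through one coordinator makes disagreements between agents visible (the `conflict` flag) instead of letting each agent act on the patient record independently.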

A robust evaluation of multi-agent systems in clinical settings relies heavily on benchmarking and simulation, with a striking 80% of recent research utilizing standardized evaluation methods. This emphasis on rigorous testing allows developers to move beyond theoretical performance and assess agent capabilities in realistic, yet controlled, environments. Simulations recreate complex clinical scenarios, enabling the identification of potential weaknesses and areas for improvement without risking patient safety. Benchmarking, through the use of common datasets and metrics, facilitates comparison between different agent designs and promotes the development of best practices. This commitment to quantifiable performance is crucial for building trust in these systems and paving the way for responsible clinical integration, ultimately ensuring that these tools demonstrably enhance, rather than hinder, patient care.

Responsible deployment of AI-driven clinical support systems fundamentally hinges on strict adherence to regulatory frameworks and compliance standards. These systems, handling sensitive patient data and influencing critical healthcare decisions, necessitate robust safeguards to ensure privacy, security, and ethical operation. Compliance isn’t merely a legal obligation; it’s vital for cultivating and maintaining patient trust, a cornerstone of effective healthcare. Demonstrating alignment with regulations like HIPAA, GDPR, and emerging AI-specific guidelines provides a demonstrable commitment to patient well-being and data protection. Furthermore, transparent documentation of data provenance, algorithmic bias mitigation strategies, and audit trails are increasingly expected by both regulatory bodies and healthcare providers, solidifying the foundation for widespread adoption and sustained public confidence.

The Trajectory of Intelligent Healthcare Agents

Event-triggered activation, in which shifts in a patient’s condition or the occurrence of specific clinical events initiate a response, is one of the areas where the review finds limited progress so far, and it marks a clear direction for moving beyond scheduled check-ins. This proactive approach moves beyond reactive care, allowing agents to analyze real-time data – such as vital sign fluctuations, lab result anomalies, or medication adherence patterns – and intervene before conditions escalate. Consequently, these agents can alert medical staff to potential crises, suggest adjustments to treatment plans, or even initiate automated interventions within pre-defined safety parameters. By continuously monitoring for specific triggers, the system enhances responsiveness, reduces delays in care, and ultimately contributes to improved patient outcomes and a more efficient healthcare delivery system.
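Event-triggered activation can be sketched as a set of rules evaluated whenever a new observation arrives. The `Trigger` structure, the trigger names, the action labels, and the numeric thresholds below are hypothetical placeholders, not clinical cut-offs.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Trigger:
    name: str
    condition: Callable[[dict], bool]  # returns True when the event fires
    action: str                        # label of the response to initiate


# Hypothetical thresholds, for illustration only.
TRIGGERS = [
    Trigger("low_spo2", lambda v: v.get("spo2", 100) < 90, "alert_nurse"),
    Trigger("tachycardia", lambda v: v.get("heart_rate", 0) > 130,
            "alert_physician"),
]


def on_event(vitals: dict, triggers=TRIGGERS) -> list:
    """Return (trigger name, action) for every trigger the reading fires."""
    return [(t.name, t.action) for t in triggers if t.condition(vitals)]


# A reading with low oxygen saturation activates only the first trigger.
fired = on_event({"spo2": 88, "heart_rate": 80})
```

The agent stays idle until a reading fires at least one trigger, which is the difference from a scheduled check-in that runs regardless of the patient's state.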

Intelligent healthcare agents, despite their potential to revolutionize patient care, necessitate robust oversight to guarantee responsible implementation. A “human-in-the-loop” approach ensures that critical decisions aren’t solely entrusted to algorithms, but are instead subject to validation by qualified medical professionals. This collaborative framework allows clinicians to review agent recommendations, particularly in complex or ambiguous cases, preventing errors and upholding ethical standards. Such oversight isn’t merely a safety net; it also facilitates continuous learning for the agent itself, as human feedback refines its algorithms and improves future performance. By integrating human expertise, this system fosters trust in AI-driven healthcare, paving the way for widespread adoption and ultimately, better patient outcomes.

Intelligent healthcare agents are not meant to operate in isolation; their true potential lies in seamlessly incorporating external knowledge sources. These agents actively access and process a continuously updating stream of information, including the latest peer-reviewed research, evolving clinical guidelines, and comprehensive drug databases. This dynamic integration surpasses the limitations of static, pre-programmed knowledge, allowing the agent to adapt to new discoveries and best practices in real-time. By leveraging external knowledge, these agents can offer more accurate diagnoses, personalized treatment plans, and proactively identify potential risks, ultimately improving patient outcomes and supporting clinicians with evidence-based insights. The ability to synthesize information from diverse sources ensures the agent remains at the forefront of medical advancements, fostering a continuous learning cycle and enhancing the quality of care provided.
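One common way to integrate external knowledge is to retrieve relevant documents at query time and pass them to the agent. The sketch below assumes a tiny in-memory corpus and ranks documents by word overlap (Jaccard similarity); the document identifiers and texts are invented topic labels, and real systems typically use embedding-based search over much larger sources.

```python
import re


def tokenize(text: str) -> set:
    # Lowercase the text and keep alphanumeric word tokens.
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, documents: dict, k: int = 1) -> list:
    """Return ids of the top-k documents by Jaccard overlap with the query."""
    q = tokenize(query)
    scored = []
    for doc_id, text in documents.items():
        d = tokenize(text)
        union = q | d
        score = len(q & d) / len(union) if union else 0.0
        scored.append((score, doc_id))
    # Highest score first; ties are broken alphabetically by id.
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [doc_id for score, doc_id in scored[:k] if score > 0]


# Invented corpus: the entries are topic labels, not medical statements.
documents = {
    "guideline_htn": "hypertension guideline first line treatment options",
    "drug_db_metformin": "metformin dosing renal impairment contraindications",
}
hits = retrieve("metformin dosing in renal impairment", documents)
```

Because the corpus is consulted at query time, updating a guideline only requires replacing the stored document, not retraining the underlying model.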

Intelligent healthcare agents are poised to revolutionize numerous clinical workflows, extending their capabilities across a spectrum of essential tasks. These agents are not envisioned as replacements for medical professionals, but rather as powerful tools to augment their expertise, beginning with initial patient triage and the formulation of potential diagnoses – a process known as differential diagnosis. Beyond initial assessment, these systems can assist in complex diagnostic reasoning, sifting through vast amounts of patient data to identify patterns and insights. Crucially, agents are being developed to support treatment planning and prescription, offering evidence-based recommendations tailored to individual patient needs, while also facilitating continuous patient interaction and monitoring through remote sensors and virtual assistants – ultimately enabling more proactive and personalized care.

The presented taxonomy of agentic AI in healthcare demands a rigorous approach to evaluating LLM-based agents, one that recalls a central concern of Claude Shannon’s information theory: conveying a message efficiently and reliably in the presence of noise. This concern echoes throughout the analysis of cognitive capabilities and knowledge management detailed in the research. Every parameter, every data point utilized by these agents, must serve a defined purpose, minimizing noise and maximizing the clarity of clinical decision support. The pursuit of provable accuracy, rather than merely functional performance, aligns with this emphasis on efficient and reliable information transmission, ensuring patient safety and ethical considerations are not obscured by superfluous complexity.

What’s Next?

The proliferation of LLM-based agents in healthcare, as evidenced by this review of nearly fifty studies, reveals a field characterized more by enthusiastic exploration than rigorous validation. The seven-dimensional taxonomy offers a useful descriptive framework, yet it simultaneously highlights the enduring challenge: demonstrating genuine, provable improvement beyond superficial performance metrics. Many proposed agents excel at mimicking clinical reasoning, but few offer mathematically demonstrable superiority to established methods. The pursuit of ‘agentic’ capabilities, while conceptually appealing, risks obscuring the fundamental need for verifiable accuracy and robustness.

Future work must prioritize formal verification techniques. The current emphasis on empirical testing, while pragmatic, remains insufficient. A system that correctly diagnoses 95% of cases is interesting; a system whose diagnostic process can be mathematically proven to be optimal, even under uncertainty, is fundamentally different. The field needs to move beyond assessing what these agents achieve, and focus on how they achieve it, establishing clear boundaries of applicability and limitations with mathematical precision.

In the chaos of data, only mathematical discipline endures. The promise of AI in healthcare hinges not on the ability to generate plausible outputs, but on the capacity to create systems whose internal logic is as sound and unassailable as the principles of medicine itself. Until that standard is met, these agents will remain clever approximations, not true advancements.

Original article: https://arxiv.org/pdf/2602.04813.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-05 23:59