Author: Denis Avetisyan

As artificial intelligence becomes increasingly autonomous in healthcare, establishing robust governance and lifecycle management is crucial to mitigate emerging risks.

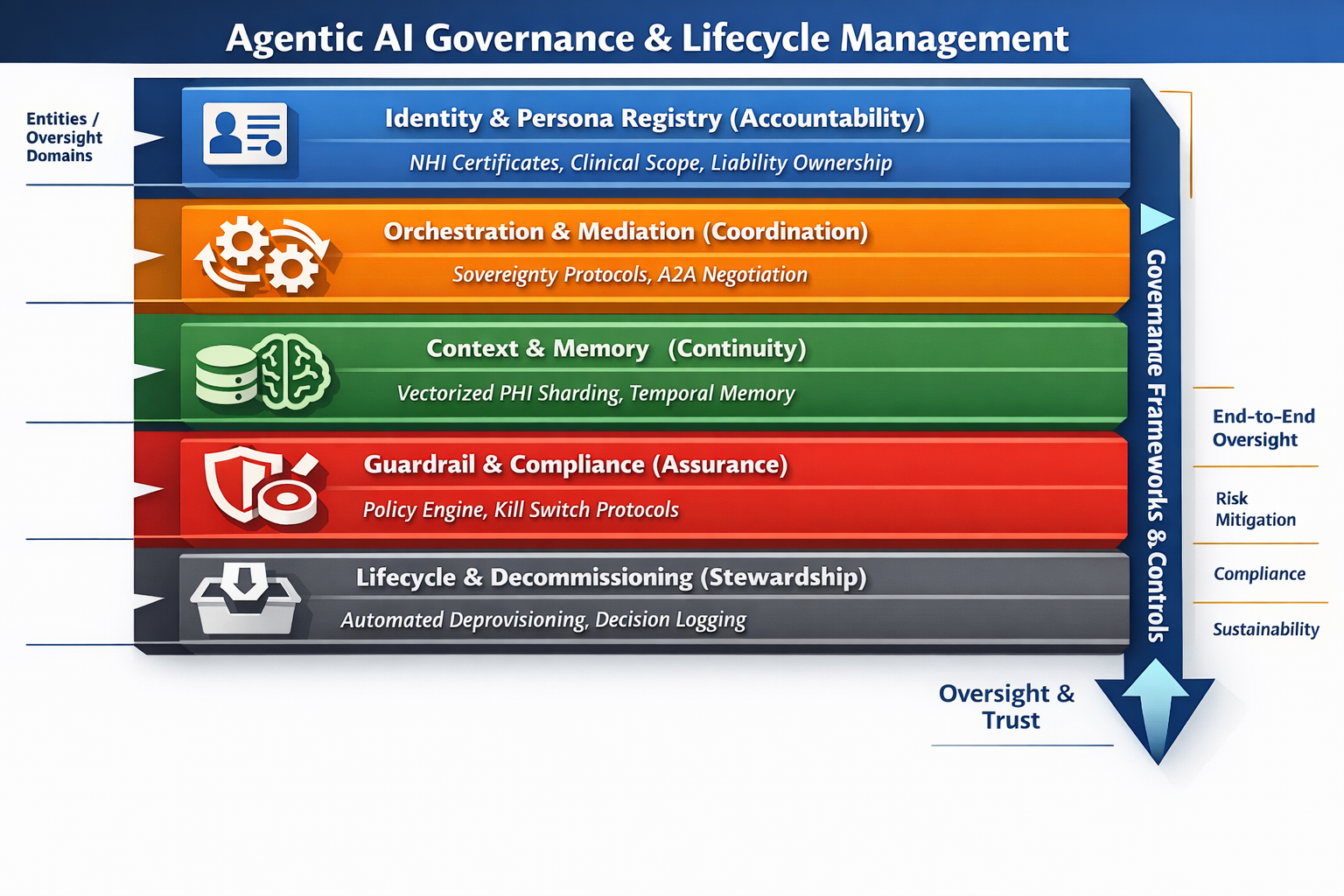

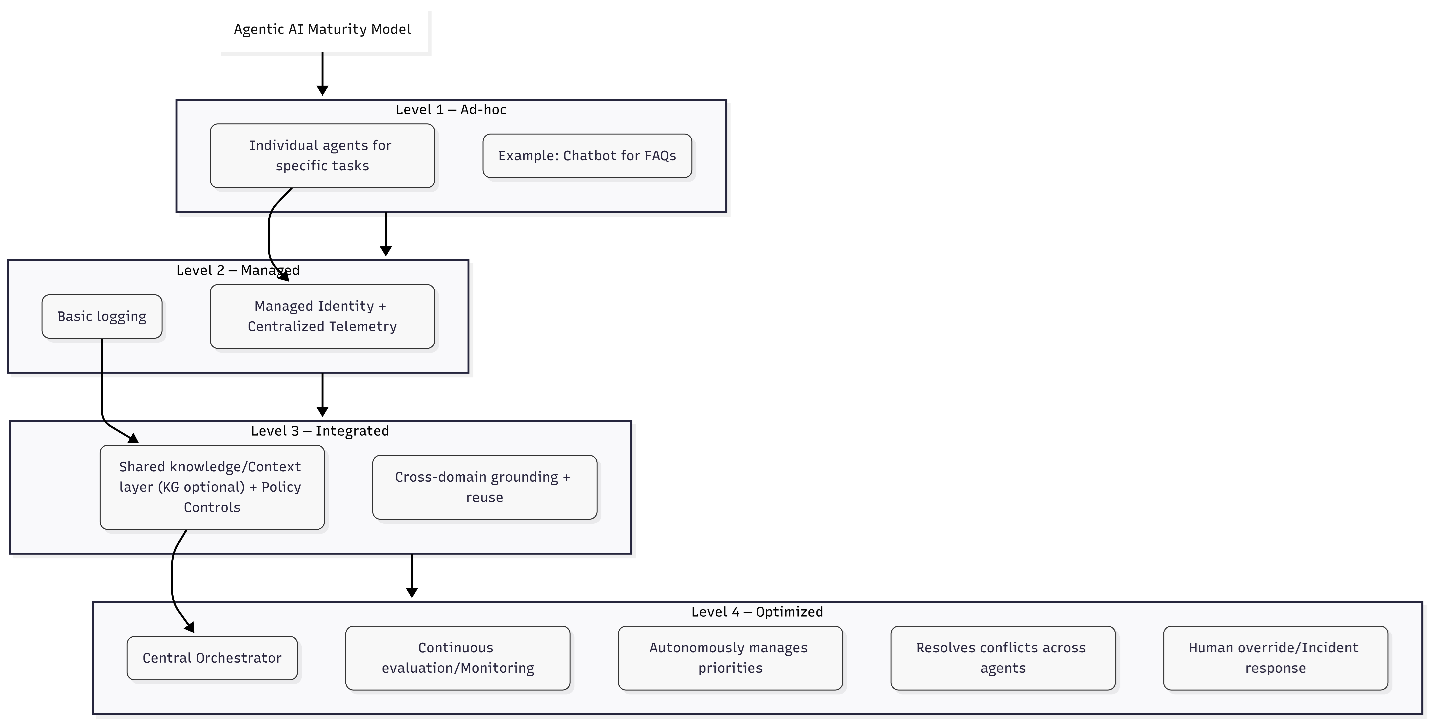

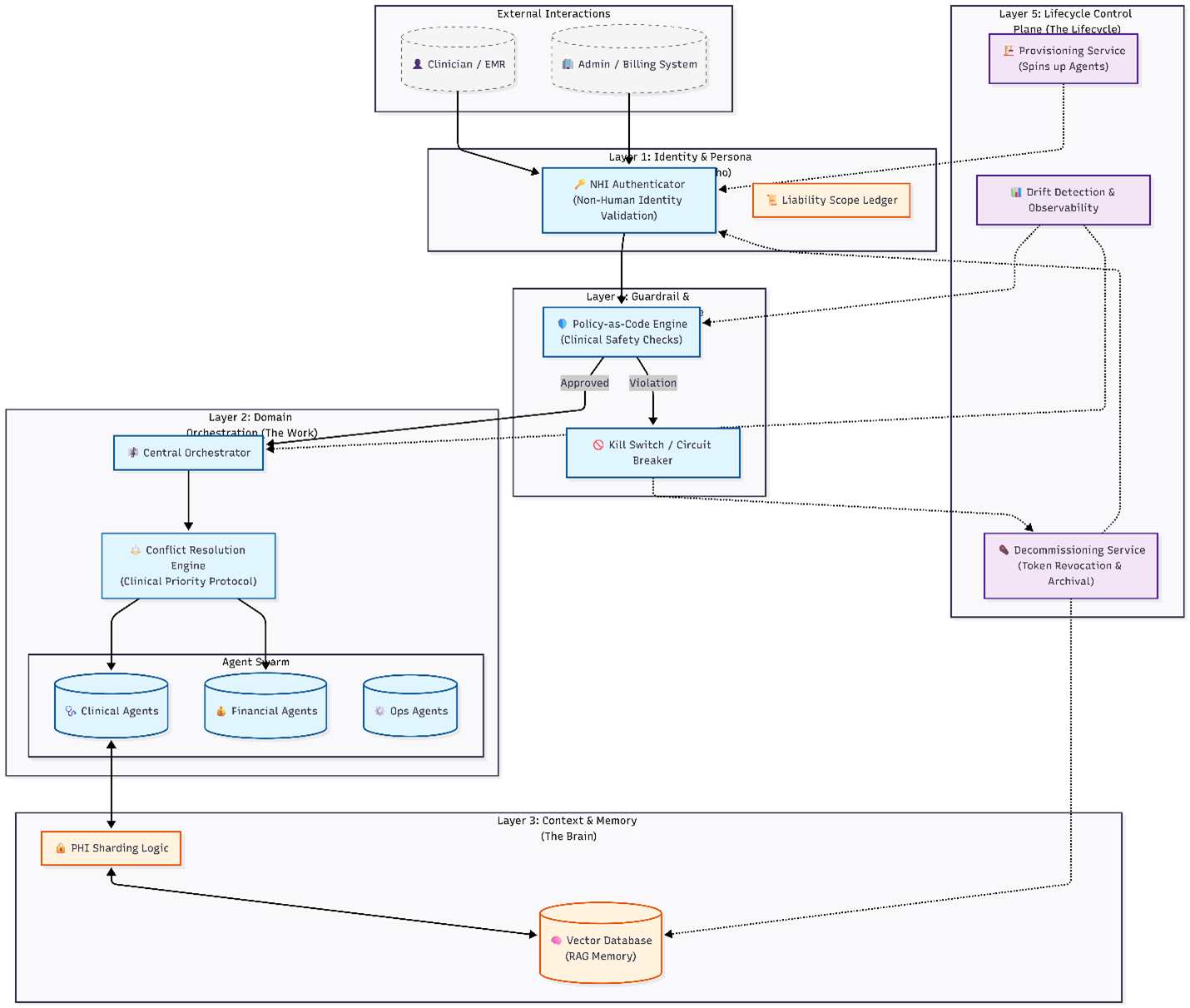

This review proposes a unified framework, UALM, for governing agentic AI systems throughout their lifecycle to address challenges of accountability, control, and proliferation.

While healthcare increasingly adopts agentic AI to streamline workflows, a lack of standardized oversight risks duplicated efforts, inconsistent controls, and enduring security vulnerabilities. This paper, ‘Agentic AI Governance and Lifecycle Management in Healthcare’, proposes a Unified Agent Lifecycle Management (UALM) blueprint to address these challenges by establishing layered controls encompassing identity, orchestration, data context, runtime policy, and decommissioning. UALM offers a practical, maturity-based framework for healthcare organizations to achieve audit-ready governance of agent fleets, fostering both innovation and safety. Can this approach effectively balance the promise of agentic AI with the imperative of responsible, secure implementation across clinical and administrative domains?
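Because the blueprint treats decommissioning as a control layer, each agent has to move through explicit lifecycle stages. The following is a minimal sketch, assuming a hypothetical set of states (`PROPOSED`, `REGISTERED`, `ACTIVE`, `SUSPENDED`, `DECOMMISSIONED`) and a transition table that are not taken from the paper. It only shows how an illegal move, such as reviving a decommissioned agent, can be rejected in code.

```python
from enum import Enum


class AgentState(Enum):
    """Hypothetical lifecycle stages; UALM's actual stages may differ."""
    PROPOSED = "proposed"
    REGISTERED = "registered"          # identity issued, owner assigned
    ACTIVE = "active"                  # orchestrated, runtime policy enforced
    SUSPENDED = "suspended"            # temporarily halted pending review
    DECOMMISSIONED = "decommissioned"  # terminal: credentials revoked


# Allowed transitions (hypothetical). DECOMMISSIONED has no exits.
ALLOWED = {
    AgentState.PROPOSED: {AgentState.REGISTERED, AgentState.DECOMMISSIONED},
    AgentState.REGISTERED: {AgentState.ACTIVE, AgentState.DECOMMISSIONED},
    AgentState.ACTIVE: {AgentState.SUSPENDED, AgentState.DECOMMISSIONED},
    AgentState.SUSPENDED: {AgentState.ACTIVE, AgentState.DECOMMISSIONED},
    AgentState.DECOMMISSIONED: set(),
}


def transition(current: AgentState, target: AgentState) -> AgentState:
    """Return the new state, or raise if the move is not permitted."""
    if target not in ALLOWED[current]:
        raise ValueError(f"illegal transition {current.value} -> {target.value}")
    return target
```

For example, `transition(AgentState.ACTIVE, AgentState.DECOMMISSIONED)` succeeds, while `transition(AgentState.DECOMMISSIONED, AgentState.ACTIVE)` raises `ValueError`.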

The Ascendancy of Autonomous Systems in Clinical Operations

Healthcare AI is moving beyond forecasting future health events and toward systems that can manage clinical operations on their own. Historically, AI in medicine focused on predictive analytics: identifying patients at risk or suggesting potential diagnoses. Recent advances, however, enable AI to execute complex, multi-stage tasks autonomously, such as optimizing hospital bed allocation, scheduling surgeries, and even adjusting medication dosages within predefined parameters. This shift from insight to proactive, self-directed action promises to relieve healthcare professionals of routine burdens, improve efficiency, and ultimately enhance patient care. The implications extend beyond logistics and point to a future in which AI agents collaboratively manage aspects of patient wellbeing, freeing clinicians to focus on the uniquely human elements of care.

Agentic AI departs from traditional clinical technologies, which largely offer predictions or alerts that require human intervention, and moves toward systems capable of independent action. These are not simply algorithms that flag potential issues. They are autonomous entities designed to formulate plans made up of multiple sequential steps and then execute those plans to achieve specific healthcare objectives. This turns AI from a passive analytical tool into an active participant in care delivery, potentially handling tasks such as medication reconciliation, appointment scheduling, or coordinating aspects of chronic disease management without constant human oversight. The implications are profound: AI would no longer just inform clinical decisions but actively implement them. That would reshape the operational landscape of healthcare, with the promise of greater efficiency and potentially better patient outcomes.

Regulatory Imperatives for Autonomous Clinical Agents

The application of Agentic AI within healthcare is subject to stringent regulatory oversight, chiefly through established frameworks such as the Health Insurance Portability and Accountability Act (HIPAA) Security Rule in the United States and, increasingly, the European Union AI Act. The HIPAA Security Rule mandates administrative, physical, and technical safeguards for electronic Protected Health Information (ePHI). Agentic AI systems that touch patient data must therefore demonstrate that they preserve its confidentiality, integrity, and availability. The EU AI Act takes a risk-based approach. It categorizes AI systems, including Agentic AI, and imposes requirements proportional to the risk they pose to health, safety, and fundamental rights; high-risk applications must undergo conformity assessments before deployment. These regulations shape the design, development, and operation of Agentic AI systems and call for robust data governance, audit trails, and mechanisms for consent. Where EU data protection law applies, they also require mechanisms for honoring data subject rights.
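An organization can approximate a risk-based regime like this with a pre-deployment gate in its release pipeline. The sketch below is illustrative only. The `RiskTier` values mirror the EU AI Act's broad categories, but the `DeploymentRequest` fields and the rules in `deployment_blockers` are hypothetical. Classifying a real system into a tier is a legal determination, not something code can decide.

```python
from dataclasses import dataclass
from enum import Enum


class RiskTier(Enum):
    # Broad EU AI Act categories; assigning a tier is a legal judgment.
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


@dataclass
class DeploymentRequest:
    # Hypothetical fields an internal review board might collect.
    agent_id: str
    tier: RiskTier
    handles_phi: bool
    conformity_assessed: bool = False
    audit_logging_enabled: bool = False


def deployment_blockers(req: DeploymentRequest) -> list[str]:
    """Return reasons the request should not proceed (empty list = no blockers found)."""
    blockers = []
    if req.tier is RiskTier.UNACCEPTABLE:
        blockers.append("prohibited practice")
    if req.tier is RiskTier.HIGH and not req.conformity_assessed:
        blockers.append("high-risk system lacks conformity assessment")
    if req.handles_phi and not req.audit_logging_enabled:
        blockers.append("PHI access without audit trail")
    return blockers
```

Returning a list of reasons, rather than a single boolean, gives reviewers an explanation they can record alongside the decision.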

The NIST AI Risk Management Framework (AI RMF), a voluntary framework, is designed to support risk management across the entire lifecycle of an AI system, from initial design and data acquisition through development, deployment, and ongoing monitoring. This lifecycle approach aligns well with regulatory expectations. The EU AI Act requires providers of high-risk systems to maintain a risk management system throughout the system's lifecycle. The HIPAA Security Rule requires covered entities to conduct, and periodically update, a risk analysis for electronic protected health information. The AI RMF organizes this work around four core functions (Govern, Map, Measure, and Manage) to identify, assess, and mitigate risks to trustworthiness characteristics such as safety, explainability, and robustness. By aligning with the AI RMF, organizations can demonstrate due diligence in addressing potential harms and build AI systems that meet regulatory expectations and promote responsible innovation.
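To make the four functions concrete, a risk register can tag each entry with the AI RMF function under which it is handled. This is a minimal sketch that assumes a hypothetical 1 to 5 likelihood and impact scale and a likelihood-times-impact score. The AI RMF itself does not prescribe this scoring scheme.

```python
from dataclasses import dataclass

# The four core functions named in the NIST AI RMF.
RMF_FUNCTIONS = ("Govern", "Map", "Measure", "Manage")


@dataclass
class RiskEntry:
    description: str
    function: str        # AI RMF function under which the risk is handled
    characteristic: str  # e.g. "safety", "explainability", "robustness"
    likelihood: int      # 1-5, hypothetical ordinal scale
    impact: int          # 1-5, hypothetical ordinal scale

    def __post_init__(self):
        if self.function not in RMF_FUNCTIONS:
            raise ValueError(f"unknown AI RMF function: {self.function}")
        if not (1 <= self.likelihood <= 5 and 1 <= self.impact <= 5):
            raise ValueError("likelihood and impact must be in 1..5")

    @property
    def score(self) -> int:
        return self.likelihood * self.impact


def prioritize(register: list[RiskEntry]) -> list[RiskEntry]:
    """Highest score first (a common convention, not an AI RMF requirement)."""
    return sorted(register, key=lambda r: r.score, reverse=True)
```

Validating the function name at construction time keeps every entry traceable to one of the four functions.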

Adhering to risk management frameworks such as the NIST AI RMF, and complying with regulations such as HIPAA and the EU AI Act, involves more than legal obligation: it puts the core principles of responsible AI deployment in sensitive domains into practice. Failure to prioritize patient safety and data protection can cause tangible harm and erode public confidence in Agentic AI systems. Proactively implementing these frameworks demonstrates a commitment to mitigating risks related to bias, accuracy, security, and transparency, and it fosters trust among patients, healthcare providers, and regulators. That trust is critical for the widespread adoption and effective use of Agentic AI in healthcare, and ultimately for better patient outcomes and a more reliable healthcare system.

Multimodal Intelligence and Automated Clinical Workflows

Medical foundation models, such as the text-based Med-PaLM 2 and the multimodal Med-Flamingo, support Agentic AI, and their multimodal variants can process and integrate information beyond text. Multimodal models are designed to accept and synthesize inputs such as medical images, including X-rays, CT scans, and MRIs. When embedded in agent pipelines, they can also draw on data from patient monitoring systems and electronic health records. This allows an AI agent to build a more comprehensive picture of a situation than a text-only LLM can, supporting better-informed and more autonomous decision-making in complex scenarios.

Nuance DAX Copilot uses automatic speech recognition and natural language processing to generate clinical documentation directly from patient-physician conversations. The system drafts progress notes, discharge summaries, and other required paperwork, reducing the time clinicians spend on administrative tasks. Reported deployments cite reductions in documentation time of up to 50%, giving physicians more time for direct patient care and potentially reducing burnout. The system integrates with existing Electronic Health Record (EHR) systems, streamlining workflows and improving data accuracy by minimizing manual input.

Combining multimodal large language models with automation tools makes it possible to optimize healthcare delivery through autonomous agents. These agents synthesize information from varied sources, including medical images, patient records, and real-time data feeds. They then use that synthesis to automate tasks previously performed by clinicians, such as documentation and preliminary diagnosis. This automation reduces administrative burdens, lets healthcare professionals focus on direct patient care, and enables faster responses to evolving patient needs. The resulting system aims to increase overall efficiency, improve resource allocation, and deliver more timely and effective care.

The Looming Threat of Agent Sprawl: A Call for Rigorous Governance

The increasing adoption of autonomous agents in healthcare, while promising, introduces a significant challenge known as agent sprawl. Agent sprawl is the uncontrolled proliferation of software entities capable of independent action, and it produces a complex and often chaotic operational landscape. Without careful management, it creates inefficiency through duplicated effort, where several agents perform the same task, and it escalates safety risks through unpredictable interactions between agents. Critically, it also creates substantial compliance problems. Accountability fragments and traceability diminishes, which undermines adherence to stringent healthcare regulations and can jeopardize patient data security. Addressing this concern is essential to realizing the full potential of Agentic AI in healthcare.
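Duplicated effort is one symptom of sprawl that can be checked mechanically, provided agents are registered with a description of what they do. The following is a minimal sketch that assumes each agent record carries hypothetical normalized `capability` and `data_scope` labels. Agents that share both labels are flagged as likely duplicates for human review.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentRecord:
    agent_id: str
    capability: str  # hypothetical normalized task label, e.g. "bed-allocation"
    data_scope: str  # hypothetical label for the data the agent reads


def find_duplicates(agents: list[AgentRecord]) -> dict[tuple[str, str], list[str]]:
    """Group agents that perform the same task over the same data scope."""
    groups: dict[tuple[str, str], list[str]] = defaultdict(list)
    for a in agents:
        groups[(a.capability, a.data_scope)].append(a.agent_id)
    # Keep only groups with more than one agent: candidate duplicates.
    return {key: ids for key, ids in groups.items() if len(ids) > 1}
```

The check is only as good as the labels, so it depends on registration enforcing a shared vocabulary for capabilities and data scopes.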

Navigating the growing complexity of autonomous systems in healthcare requires robust governance, standardization, and centralized management. Unchecked agent sprawl introduces risks to safety, efficiency, and regulatory compliance. The Unified Agent Lifecycle Management (UALM) framework proposes a proactive response centered on accountability. Its core objective is to ensure that every deployed agent has a clearly designated owner responsible for its behavior and performance. This approach does more than track agents; it establishes a chain of responsibility that is vital for auditing, incident response, and maintaining trust in increasingly powerful systems. By prioritizing ownership, UALM seeks to turn agent deployment from a potentially chaotic landscape into a manageable, auditable, and ultimately beneficial part of modern healthcare.
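An ownership requirement like UALM's can be audited by comparing the agent inventory against the current staff directory. The sketch below is hypothetical: the `RegisteredAgent` record and `find_orphans` helper do not come from the paper. It treats an agent as orphaned when it has no owner or when its owner is no longer active, which covers the common case of staff turnover.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class RegisteredAgent:
    agent_id: str
    owner: Optional[str]  # accountable human owner, e.g. a staff ID


def find_orphans(agents: list[RegisteredAgent], active_staff: set[str]) -> list[str]:
    """IDs of agents with no owner, or whose owner is no longer active staff."""
    return [
        a.agent_id
        for a in agents
        if a.owner is None or a.owner not in active_staff
    ]
```

Running this check on a schedule means an owner's departure surfaces as an orphaned agent instead of going unnoticed.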

The sustained integration of autonomous systems into healthcare hinges on managing agent sprawl before it takes hold. Realizing the promised benefits of these technologies (improved efficiency, better patient care, and reduced costs) demands meticulous governance and accountability. One core goal is eliminating ‘orphan agents’, meaning agents that lack a designated owner responsible for their actions and outcomes. In addition, every interaction an agent has with an external tool, known as a ‘tool call’, must be accompanied by a documented policy decision justifying it. This requirement ensures transparency and adherence to ethical and regulatory standards. The dual focus on ownership and justification is not merely about control. It fosters trust and establishes a framework for responsible innovation, paving the way for widespread, beneficial adoption of Agentic AI in healthcare.
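The requirement that every tool call carry a documented policy decision can be pictured as a gateway that first decides, then records the decision, and only then executes. The following is a minimal sketch that assumes a hypothetical per-agent allow-list and owner map. A real deployment would use a dedicated policy engine and a durable, access-controlled audit store instead of an in-memory list.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Callable


@dataclass(frozen=True)
class PolicyDecision:
    agent_id: str
    tool: str
    allowed: bool
    reason: str
    timestamp: str  # UTC, ISO 8601


@dataclass
class ToolGateway:
    permissions: dict[str, set[str]]  # hypothetical: agent_id -> allowed tools
    owners: dict[str, str]            # hypothetical: agent_id -> owner
    log: list[PolicyDecision] = field(default_factory=list)

    def call(self, agent_id: str, tool: str, fn: Callable[[], object]) -> object:
        # Orphan agents are denied outright, whatever their permissions say.
        if agent_id not in self.owners:
            allowed, reason = False, "orphan agent: no registered owner"
        elif tool in self.permissions.get(agent_id, set()):
            allowed, reason = True, "tool on agent allow-list"
        else:
            allowed, reason = False, "tool not on agent allow-list"
        # Record the decision before acting on it, so denials are logged too.
        self.log.append(PolicyDecision(agent_id, tool, allowed, reason,
                                       datetime.now(timezone.utc).isoformat()))
        if not allowed:
            raise PermissionError(reason)
        return fn()
```

Because the decision is appended before a denial raises, blocked calls leave an audit record as well. That record is what makes the log useful for incident review.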

The pursuit of robust governance for agentic AI, as detailed in the unified UALM framework, demands a precision mirroring mathematical proof. The article rightly highlights the potential for ‘agent sprawl’ – a chaotic proliferation of autonomous entities lacking centralized oversight. This echoes Blaise Pascal’s sentiment: “The eloquence of a fool is often more persuasive than the wisdom of a sage.” Just as unchecked complexity can obscure truth, an unmanaged multi-agent system risks losing control and accountability. The UALM framework, therefore, isn’t simply a practical guide; it’s an attempt to impose logical order on an inherently complex landscape, ensuring that each agent’s function is demonstrably correct, not merely appearing to work.

Beyond Control: Charting a Course for Agentic Systems

The proposition of a Unified Agent Lifecycle Management (UALM) framework, while logically sound in its attempt to impose order upon increasingly autonomous systems, sidesteps a more fundamental question. Let N approach infinity – what remains invariant? The proliferation of agents is not merely a logistical problem of ‘sprawl,’ but a manifestation of complexity exceeding human capacity for comprehensive oversight. UALM addresses control; the pertinent inquiry concerns whether control, in any meaningful sense, is ultimately attainable, or even desirable, as agency truly scales.

The consideration of ‘non-human identity’ hints at the core challenge. Assigning accountability necessitates a definition of agency that extends beyond algorithmic function. A system exhibiting emergent behavior, even within the constraints of UALM, may operate according to principles opaque to its creators. The focus should shift from managing agents as tools to understanding them as potentially independent actors, and the ethical implications of such a paradigm shift are substantial.

Future work must move beyond the mechanics of lifecycle management and grapple with the philosophical consequences of distributed intelligence. The true test of UALM, or any governance framework, will not be its ability to prevent agentic failure, but its capacity to adapt to the inevitable emergence of unpredictable, and potentially uninterpretable, systemic behavior.

Original article: https://arxiv.org/pdf/2601.15630.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-01-24 22:19