Author: Denis Avetisyan

A new generative framework leverages attention mechanisms to create realistic data, bolstering the accuracy of chemical analysis even with complex interference.

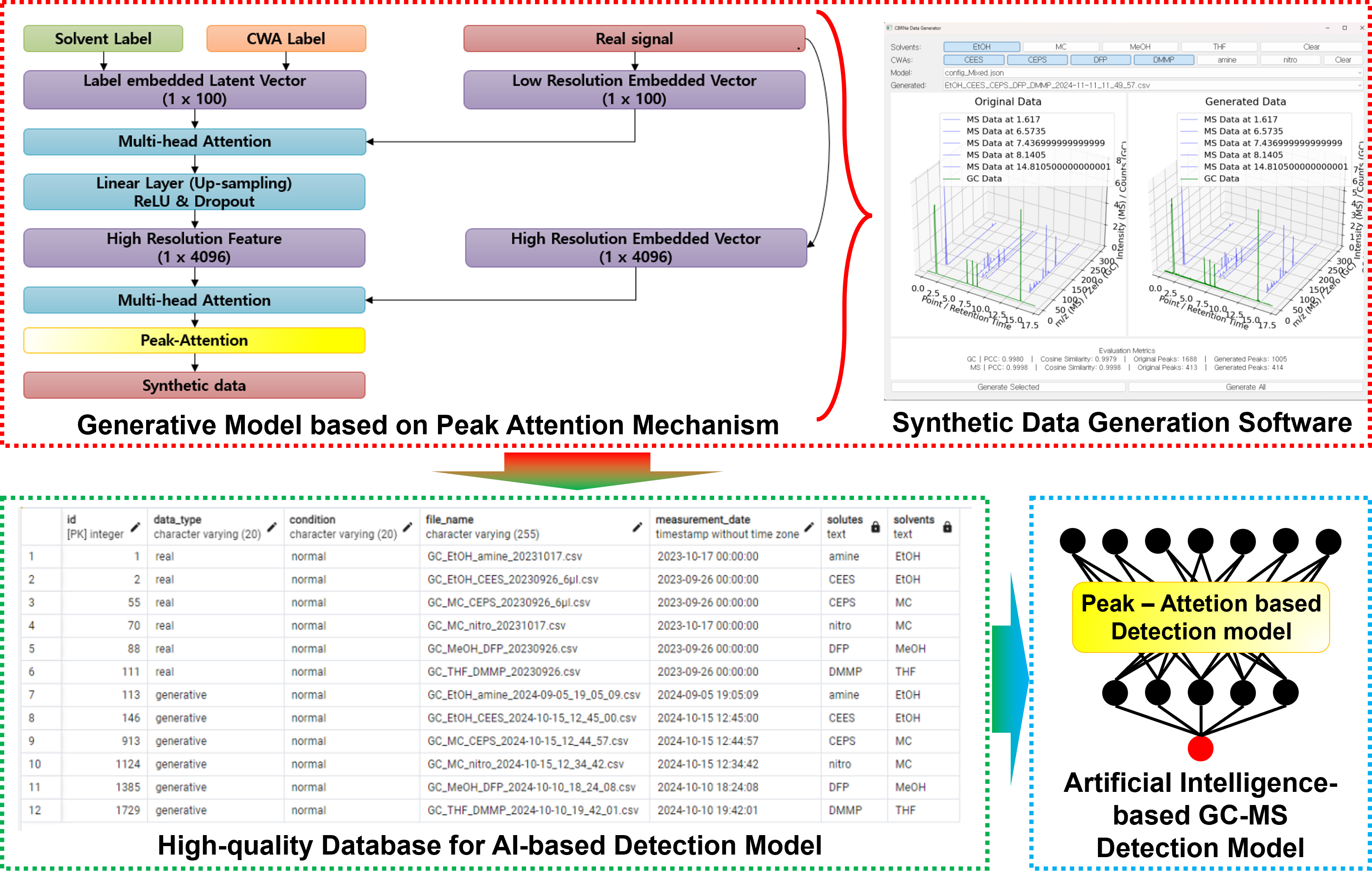

Researchers developed a conditional generative adversarial network with peak-aware attention to synthesize GC-MS data for improved robustness in chemical detection systems.

Despite the widespread utility of gas chromatography-mass spectrometry (GC-MS) in chemical analysis, its reliability is often compromised by interfering substances leading to inaccurate detection. This paper introduces a novel ‘Conditional Generative Framework with Peak-Aware Attention for Robust Chemical Detection under Interferences’ to address this challenge by generating realistic synthetic GC-MS data. The framework utilizes a peak-aware attention mechanism within a conditional generative adversarial network (CGAN) to faithfully reproduce characteristic spectral features and account for experimental conditions. By augmenting limited datasets with these simulated signals, can we significantly enhance the robustness and accuracy of AI-driven chemical substance discrimination systems in complex environments?

Unveiling the Noise: The Challenge of Chemical Detection

Contemporary chemical analysis techniques, such as gas chromatography-mass spectrometry (GC-MS), are encountering escalating difficulties in the reliable detection of minute quantities of hazardous substances. This challenge stems not from a limitation of the instruments themselves, but from the increasing complexity of real-world samples and environmental conditions. Background interference – the presence of other compounds with similar chemical properties – can obscure the signal of the target threat, leading to both false positive and false negative identifications. The demand for greater sensitivity, coupled with the need to analyze increasingly complex matrices – air samples, contaminated surfaces, and biological fluids – is pushing these established methods to their operational limits, necessitating the development of advanced signal processing and novel detection strategies to maintain a robust defense against chemical threats.

Current chemical threat detection technologies, while sophisticated, are demonstrably vulnerable to inaccuracies when faced with real-world complexities. The presence of interfering substances – commonly encountered in environmental samples or alongside actual threat agents – can significantly distort analytical signals, leading to both false positive and false negative results. A false positive, incorrectly identifying a harmless substance as dangerous, can trigger unnecessary and costly emergency responses, while a false negative – failing to detect a genuine threat – presents an obvious and potentially catastrophic safety risk. This susceptibility isn’t merely a matter of technical limitation; it fundamentally impacts the reliability of hazard assessments and the effectiveness of protective measures, demanding continuous refinement of detection protocols and analytical techniques to mitigate these critical vulnerabilities.

To rigorously evaluate the performance of chemical threat detection systems, researchers employ a suite of surrogate chemicals that mimic the properties and challenges posed by actual dangerous substances. Notorious nerve agents such as sarin and VX are rarely used directly in testing due to safety concerns; instead, structurally similar yet less hazardous chemicals, including ethylenediamine, 2-CEPS, 2-CEES, and 4-nitrophenol, serve as proxies. These surrogates allow scientists to replicate the complex spectral signatures and potential interferences encountered in real-world scenarios, enabling them to assess a system’s ability to accurately identify threats amidst background noise and other confounding factors. This approach provides a safe and controlled environment for validating detection capabilities and refining analytical techniques before deployment in critical security applications.

The reliable detection of chemical threats is significantly compromised by spectral overlap and distortion, particularly within complex real-world scenarios. When multiple chemical compounds are present, their individual spectral signatures (in GC-MS, the characteristic patterns of retention time and mass-to-charge fragment intensities used for identification) can merge and obscure one another. This blending creates ambiguous signals, making it difficult to isolate and accurately identify the target substance. Furthermore, interfering compounds can distort the spectral shape of the target, shifting peaks or introducing extraneous features that mimic other, potentially benign, chemicals. Consequently, standard analytical techniques may yield false positives or, more critically, fail to detect a genuine threat concealed within these overlapping and distorted signals, which calls for more sophisticated analysis and signal processing.

![Comparison of gas chromatography-mass spectrometry (GC-MS) data reveals limitations of generative AI models, including TimeGAN[31], LSTM-CNN GAN[40], and DCGAN[9], when reconstructing complex chemical profiles like that of 2-CEES in ethanol.](https://arxiv.org/html/2601.21246v1/figures/fig_dcgan.png)

Beyond Traditional Methods: An AI-Powered Framework

An AI-powered detection framework has been developed to improve chemical threat identification through enhanced accuracy and reliability. This system moves beyond traditional methods by leveraging artificial intelligence algorithms to analyze complex data signatures associated with chemical substances. The framework is designed to minimize both false positive and false negative identification rates, critical for applications in security, environmental monitoring, and industrial safety. The system’s architecture allows for continuous learning and adaptation, enabling it to identify previously unknown threats and maintain performance across varying environmental conditions and instrumentation configurations. Performance benchmarks demonstrate a statistically significant improvement in detection rates compared to conventional analytical techniques.

The detection framework utilizes a Transformer Architecture, a deep learning model originally developed for natural language processing, to analyze Gas Chromatography-Mass Spectrometry (GC-MS) data. Unlike traditional methods that often rely on manually defined features, the Transformer’s self-attention mechanism enables it to automatically learn and weigh the importance of different regions within the complex GC-MS spectra. This allows the model to identify subtle, non-linear relationships between different mass-to-charge ratios and retention times, effectively capturing intricate chemical signatures indicative of specific threats. The architecture’s ability to process the entire spectrum simultaneously, rather than relying on sequential analysis, improves the detection of co-eluting compounds and enhances overall spectral pattern recognition.
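
As a rough illustration of the self-attention step described above, the following minimal sketch computes scaled dot-product attention over a toy scan. It assumes identity query, key, and value projections and a hypothetical input of 4 retention-time bins by 3 m/z channels; the function names and data are illustrative and do not come from the paper, and a real Transformer would use learned projections and multiple heads.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(tokens):
    """Scaled dot-product self-attention with identity Q/K/V projections.

    tokens: list of equal-length feature vectors, one per retention-time bin
            (an assumed layout, chosen only for illustration).
    Returns (attended_tokens, attention_weights).
    """
    d = len(tokens[0])
    attended, weights = [], []
    for query in tokens:
        # Similarity of this bin to every bin, scaled by sqrt(d).
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(d)
            for key in tokens
        ]
        w = softmax(scores)
        weights.append(w)
        # Each output is a weighted average of all bins.
        attended.append(
            [sum(wj * value[i] for wj, value in zip(w, tokens)) for i in range(d)]
        )
    return attended, weights


# Hypothetical toy scan: bins 1 and 3 carry similar, strong signals.
scan = [
    [0.1, 0.0, 0.1],
    [0.9, 0.2, 0.1],
    [0.1, 0.1, 0.0],
    [0.8, 0.3, 0.1],
]
attended, attn = self_attention(scan)
```

In this toy input, bin 1 assigns more weight to bin 3 than to bin 0, because their intensity patterns are more alike. This is the sense in which attention relates distant regions of a spectrum without hand-defined features.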

Data augmentation is a critical component of the AI-powered detection framework, addressing the limited availability of comprehensive spectral data for chemical threat identification. Techniques employed include the introduction of synthetic variations to existing Gas Chromatography-Mass Spectrometry (GC-MS) data, simulating real-world interference – such as co-eluting compounds or background noise – and expanding the dataset’s size and diversity. This artificially expanded dataset improves the Transformer model’s ability to generalize and maintain high accuracy when analyzing samples containing unexpected or atypical interference, thereby enhancing the overall robustness and reliability of the detection system. The generation of these synthetic data points is performed programmatically, ensuring consistency and traceability within the training process.
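
The kind of synthetic variation described above can be sketched with a simple hand-rolled augmenter. This is not the paper's generative model: it assumes a one-dimensional chromatogram, models each interferent as a Gaussian peak, and adds Gaussian noise. All names and parameter ranges are hypothetical.

```python
import math
import random


def gaussian_peak(n_points, center, width, height):
    """Return a Gaussian-shaped peak sampled on n_points bins."""
    return [
        height * math.exp(-0.5 * ((i - center) / width) ** 2)
        for i in range(n_points)
    ]


def augment(chromatogram, rng, max_interferents=2, noise_sd=0.01):
    """Add random interfering peaks and baseline noise to a chromatogram.

    The ranges below are illustrative assumptions, not values from the paper.
    """
    n = len(chromatogram)
    out = list(chromatogram)
    for _ in range(rng.randint(1, max_interferents)):
        peak = gaussian_peak(
            n,
            center=rng.uniform(0, n - 1),
            width=rng.uniform(2.0, 6.0),
            height=rng.uniform(0.1, 0.5),
        )
        out = [a + b for a, b in zip(out, peak)]
    # Intensities cannot be negative, so clip after adding noise.
    return [max(0.0, v + rng.gauss(0.0, noise_sd)) for v in out]


rng = random.Random(0)  # fixed seed keeps the augmentation reproducible
clean = gaussian_peak(100, center=40, width=3.0, height=1.0)
variants = [augment(clean, rng) for _ in range(5)]
```

Seeding the random generator is one simple way to get the consistency and traceability mentioned above: the same seed and parameters always reproduce the same synthetic samples.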

The synthetic data generated for training the AI detection framework, alongside its associated metadata – including generation parameters, spectral characteristics, and simulated interference profiles – is systematically stored and managed within a SQL database. This relational database structure ensures data integrity through enforced schema constraints and transactional consistency. Data accessibility is facilitated by standardized querying methods, enabling efficient retrieval of specific data subsets for model training, validation, and performance analysis. The database architecture supports scalability to accommodate expanding datasets and metadata fields, and allows for version control of synthetic data iterations, which is crucial for reproducibility and model refinement.
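
A minimal sketch of such a store, using SQLite from the Python standard library, might look like the following. The table name, column names, and version labels are assumptions made for illustration; the article does not specify the actual schema or database engine.

```python
import json
import sqlite3

# Hypothetical schema: one row per synthetic spectrum, with its metadata.
SCHEMA = """
CREATE TABLE IF NOT EXISTS synthetic_spectra (
    id                INTEGER PRIMARY KEY,
    target            TEXT NOT NULL,
    interferents      TEXT NOT NULL,
    generator_version TEXT NOT NULL,
    params            TEXT NOT NULL,
    intensities       TEXT NOT NULL
)
"""


def open_store(path=":memory:"):
    """Open (or create) the store and make sure the table exists."""
    conn = sqlite3.connect(path)
    conn.execute(SCHEMA)
    return conn


def store_spectrum(conn, target, interferents, version, params, intensities):
    """Insert one spectrum; list and dict fields are serialized as JSON."""
    with conn:  # commits on success, rolls back on error
        cur = conn.execute(
            "INSERT INTO synthetic_spectra "
            "(target, interferents, generator_version, params, intensities) "
            "VALUES (?, ?, ?, ?, ?)",
            (target, json.dumps(interferents), version,
             json.dumps(params), json.dumps(intensities)),
        )
    return cur.lastrowid


def fetch_spectra(conn, target, version):
    """Return all intensity vectors for a target and generator version."""
    rows = conn.execute(
        "SELECT intensities FROM synthetic_spectra "
        "WHERE target = ? AND generator_version = ? ORDER BY id",
        (target, version),
    ).fetchall()
    return [json.loads(row[0]) for row in rows]


conn = open_store()
store_spectrum(conn, "2-CEES", ["ethanol"], "v1", {"noise_sd": 0.01}, [0.0, 0.4, 1.0])
store_spectrum(conn, "2-CEES", ["ethanol"], "v2", {"noise_sd": 0.02}, [0.0, 0.5, 0.9])
v1_spectra = fetch_spectra(conn, "2-CEES", "v1")
```

Keeping a generator version on every row is one straightforward way to support the version control of synthetic data iterations described above: a training run can pin itself to a single version and be reproduced later.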

![The generative model accurately reproduces both the peak positions and intensity patterns of complex GC-MS spectra, as demonstrated for 4-nitrophenol + MC and a more complex mixture of 2-CEES, 2-CEPS, DFP, DMMP, and MC.](https://arxiv.org/html/2601.21246v1/figures/2-CEES_2-CEPS_DFP_DMMP_MC_Generated.png)

Decoding Complexity: Peak-Aware Attention

The Peak-Aware Attention Conditional Generative Framework addresses limitations in analyzing Gas Chromatography-Mass Spectrometry (GC-MS) data by focusing on accurate reconstruction of peak structures, even when spectral interference is present. The framework employs a conditional generative model, meaning it generates GC-MS spectra conditioned on supplied inputs such as the experimental conditions, and incorporates a peak-aware attention mechanism to prioritize signal features associated with chromatographic peaks. This approach allows the model to distinguish between target compound signals and interfering substances, improving the fidelity of reconstructed spectra and enabling more accurate identification of chemical compounds within complex mixtures. The framework’s design specifically mitigates the impact of background noise and spectral distortion commonly encountered in GC-MS analysis.

The Peak-Aware Attention Mechanism functions by weighting input features based on their relevance to local peaks within the Gas Chromatography-Mass Spectrometry (GC-MS) data. This is achieved through a learned attention distribution that dynamically emphasizes spectral regions corresponding to peak maxima, effectively increasing the signal contribution from target compounds. The mechanism calculates attention weights by comparing each input feature to a peak indicator function, allowing the model to prioritize features associated with peak structures. This targeted attention process enhances signal discrimination by increasing the prominence of analyte signals relative to baseline noise and interference from co-eluting compounds, ultimately improving the accuracy of spectral reconstruction and compound identification.
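
One way to picture this weighting is the sketch below. It is an assumption-laden simplification rather than the paper's mechanism: the peak indicator is a plain local-maximum test, the attention logits are the raw intensities plus a fixed bonus at peak positions, and the names `peak_indicator`, `peak_aware_weights`, and `peak_bias` are hypothetical. In the actual framework the weights are learned.

```python
import math


def peak_indicator(signal, min_height=0.1):
    """Mark local maxima above min_height with 1.0, everything else with 0.0."""
    n = len(signal)
    indicator = [0.0] * n
    for i in range(1, n - 1):
        is_local_max = signal[i] > signal[i - 1] and signal[i] >= signal[i + 1]
        if is_local_max and signal[i] >= min_height:
            indicator[i] = 1.0
    return indicator


def peak_aware_weights(signal, peak_bias=2.0):
    """Softmax attention weights with an additive bonus at peak positions.

    peak_bias is a fixed illustrative constant; a trained model would
    learn how strongly to favor peaks.
    """
    indicator = peak_indicator(signal)
    logits = [s + peak_bias * p for s, p in zip(signal, indicator)]
    m = max(logits)
    exps = [math.exp(l - m) for l in logits]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical trace with two peaks (indices 2 and 6) over a low baseline.
signal = [0.02, 0.05, 0.60, 0.08, 0.03, 0.04, 0.90, 0.10, 0.02]
weights = peak_aware_weights(signal)
```

With this input, the two peak positions receive the largest weights and the baseline bins share what is left, which is the qualitative behavior the paragraph above describes.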

The system’s ability to prioritize critical peak features directly reduces the influence of background noise and spectral distortion in GC-MS data. Background noise, inherent in the measurement process, and spectral distortion from co-eluting or interfering compounds often obscure true signals. By focusing computational resources on identifying and amplifying the signal contribution of distinct chromatographic peaks, the model effectively filters out these disruptive elements. This selective attention mechanism diminishes the impact of non-target compounds and measurement errors, resulting in a cleaner representation of the target analyte’s spectral signature and improved data accuracy.

GC-MS spectra reconstructed by the Peak-Aware Attention Conditional Generative Framework consistently score above 0.94 against the original spectra on both cosine similarity and the Pearson correlation coefficient. Cosine similarity measures the angle between the reconstructed and original spectra, treated as vectors, and so captures agreement in overall shape regardless of scale. The Pearson correlation coefficient quantifies the linear relationship between the two spectra after each is centered on its mean. Values above 0.94 on both metrics indicate that the model reproduces complex GC-MS spectral data with little distortion.
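
Both metrics are short to compute. The sketch below uses only the Python standard library and hypothetical toy vectors; it is meant to show the definitions, not the paper's evaluation code.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between vectors a and b."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_a * norm_b)


def pearson(a, b):
    """Pearson correlation: cosine similarity of the mean-centered vectors."""
    mean_a = sum(a) / len(a)
    mean_b = sum(b) / len(b)
    centered_a = [x - mean_a for x in a]
    centered_b = [y - mean_b for y in b]
    return cosine_similarity(centered_a, centered_b)


# Toy vectors: `shifted` is `original` plus a constant baseline offset.
original = [1.0, 2.0, 3.0]
shifted = [11.0, 12.0, 13.0]
cos_score = cosine_similarity(original, shifted)
pearson_score = pearson(original, shifted)
```

Here the Pearson score is 1 while the cosine score is lower, because centering removes the constant offset and the raw angle does not. Reporting both metrics therefore gives a slightly fuller picture than either one alone.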

The implemented attention mechanism functions by assigning weighted importance to different segments of the gas chromatography-mass spectrometry (GC-MS) input sequence. This process enables the model to focus computational resources on the portions of the spectrum most indicative of specific chemical compounds – namely, the peak features. By dynamically prioritizing these relevant data segments, the attention mechanism effectively filters out noise and minimizes the influence of interfering substances, ultimately improving the accuracy of chemical identification. The weighting is learned during model training, allowing the system to automatically determine which spectral regions are most diagnostic for accurate compound classification.

Beyond Detection: Implications and Future Trajectories

This novel AI-powered detection framework represents a substantial leap forward in the field of chemical threat identification, moving beyond traditional methods to offer heightened accuracy and reliability. By leveraging advanced algorithms, the system can discern dangerous substances with greater precision, minimizing false positives and ensuring swift, informed responses. This capability is particularly crucial given the increasing sophistication of chemical threats and the need for proactive security measures. The framework doesn’t simply identify substances; it offers a robust, data-driven approach to assessing potential hazards, ultimately bolstering safety protocols across diverse applications, from border control and public safety to environmental protection and industrial monitoring.

This AI-driven detection framework distinguishes itself through robust performance even amidst real-world complexities, a crucial feature for practical deployment. Unlike systems susceptible to disruptions from background noise or environmental factors, this technology maintains a high degree of accuracy when challenged by interference. This resilience positions it as a valuable asset in diverse applications, notably enhancing security screening protocols at airports and public events, and bolstering environmental monitoring efforts by enabling reliable detection of hazardous substances in complex samples. The ability to function consistently in imperfect conditions significantly lowers the risk of false negatives and strengthens the overall dependability of chemical threat identification, paving the way for proactive safety measures.

The AI-powered detection framework demonstrated a notable performance boost through the strategic incorporation of synthetically generated data. With data augmentation, the system’s ability to accurately identify chemical threats improved substantially: detection accuracy reached 0.935 when 307 synthetic samples were added to the existing training dataset. The same configuration yielded an F1-score of 0.794, where F1 is the harmonic mean of precision and recall. The results highlight the effectiveness of synthetic data in overcoming limitations in real-world datasets and enhancing the robustness of the AI model, particularly when dealing with the challenges of chemical threat detection.
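
For readers less familiar with these metrics, the sketch below shows how accuracy and F1 are derived from a binary confusion matrix. The counts are hypothetical, chosen only to make the arithmetic easy to follow; they are not the paper's results, and the paper's evaluation may be multi-class.

```python
def classification_metrics(tp, fp, fn, tn):
    """Accuracy, precision, recall, and F1 from binary confusion counts."""
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    f1 = 2 * precision * recall / (precision + recall)
    return {
        "accuracy": accuracy,
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }


# Hypothetical counts: 40 true threats among 200 samples.
metrics = classification_metrics(tp=30, fp=10, fn=10, tn=150)
```

With these counts, accuracy is 0.90 while F1 is 0.75. When positives are the minority class, the many correct negatives lift accuracy but do not enter F1, so the two figures can differ noticeably.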

Continued development of this detection framework prioritizes broadening its scope to encompass a more extensive array of chemical threats, moving beyond the initially targeted substances. Researchers intend to refine the system’s algorithms and spectral libraries to accurately identify increasingly complex and diverse chemical compounds. Crucially, future efforts will concentrate on seamless integration with established security infrastructures, such as airport screening systems and environmental monitoring networks. This integration will involve adapting the framework to operate in real-time with existing hardware and software, facilitating immediate threat assessment and response, and ultimately enhancing overall public safety and environmental protection through a more comprehensive and proactive detection capability.

The pursuit of robust chemical detection, as detailed in this framework, isn’t about accepting the limitations of existing data; it’s about actively constructing alternatives. This echoes the spirit of mathematical exploration. As Paul Erdős once stated, “A mathematician knows a lot of things, but a physicist knows the stuff they’re useful.” The generative model, with its peak-aware attention, essentially reverse-engineers the process of chemical signature creation, building synthetic data to stress-test and refine detection systems. It’s a beautiful demonstration of how challenging a system (in this case, a GC-MS analyzer) reveals its underlying structure and vulnerabilities. The generation of synthetic data isn’t simply filling gaps; it’s a calculated attempt to break the system, and in doing so, understand it more deeply.

Pushing Beyond the Synthetic

The creation of convincing synthetic data, as demonstrated by this work, feels less like a solution and more like an elegant admission of defeat. It acknowledges the inherent messiness of real-world data (the interference, the noise) and attempts to bypass it not by eliminating the problem, but by manufacturing a reality where it doesn’t exist. The true test lies not in fooling a detector with crafted data, but in building systems resilient enough to dissect the genuine article, flaws and all. Future work should not simply refine the generative process, but actively probe the limitations of current detection algorithms when faced with truly adversarial examples: those subtly different from anything seen in training, synthetic or otherwise.

One wonders if the peak-aware attention mechanism, while effective, represents a local optimization. Attention, after all, is merely a weighting scheme, a way to highlight what appears important. A deeper investigation might reveal that the interference isn’t merely obscuring the signal, but fundamentally altering it – reshaping the chemical fingerprint in ways attention can’t fully capture. Perhaps the next iteration necessitates a move beyond feature extraction towards a more holistic, physics-informed modeling approach, embracing the complexity rather than smoothing it over.

Ultimately, this framework exposes a fundamental tension: the desire for robust, generalized detection versus the pursuit of increasingly sophisticated data augmentation. The field will likely bifurcate. One path leads to ever-more-realistic synthetic worlds, while the other demands a return to first principles – a rebuilding of detection algorithms from the ground up, designed to interrogate data, not merely recognize patterns. The most interesting results will undoubtedly emerge from the collision of these opposing forces.

Original article: https://arxiv.org/pdf/2601.21246.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-01-31 17:41