Author: Denis Avetisyan

A new approach leverages the power of mixture-of-experts to significantly improve robotic surgery policies, even with limited training data.

This work introduces MoE-ACT, a supervised Mixture-of-Experts architecture for action transformers that enables robust and data-efficient learning of minimally-invasive surgical tasks from vision-based control.

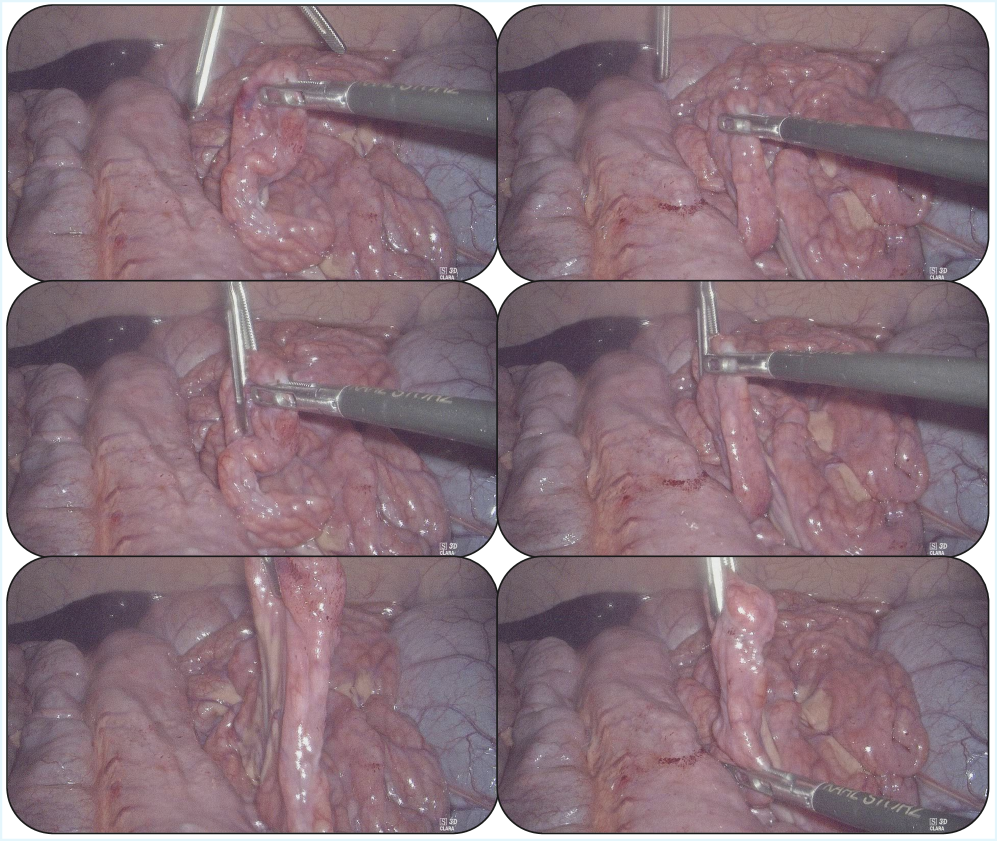

Despite advances in robotic manipulation, applying imitation learning to minimally-invasive surgery remains challenging due to limited data and the need for exceptional safety. This work, ‘MoE-ACT: Improving Surgical Imitation Learning Policies through Supervised Mixture-of-Experts’, introduces a supervised Mixture-of-Experts architecture for action transformer policies, enabling robust and data-efficient learning of surgical tasks from limited visual input. We demonstrate significant performance gains over state-of-the-art methods in collaborative surgical tasks, specifically bowel grasping and retraction, and achieve zero-shot transfer to ex vivo porcine tissue. Could this approach pave the way for more adaptable and reliable surgical robots capable of assisting surgeons in complex procedures?

The Inevitable Complexity of Surgical Skill

Minimally-invasive surgery, while offering patients faster recovery and reduced scarring, presents a significant dexterity challenge for surgeons. These procedures require incredibly precise movements within a confined space, often relying on long instruments that diminish tactile feedback and amplify even minor tremors. Compounding this technical difficulty is a growing shortage of skilled surgical staff, placing increased demands on existing personnel. Certain tasks, like maintaining tissue retraction to provide a clear surgical field or delicately manipulating the bowel during complex procedures, are particularly arduous and time-consuming, increasing the risk of complications and surgical fatigue. Consequently, there’s a critical need to develop technologies that can augment surgical precision and alleviate the burden on medical professionals, ensuring consistent, high-quality patient care in the face of these evolving challenges.

The established pathway to surgical proficiency demands considerable resources, presenting a significant challenge for healthcare systems globally. Becoming a skilled surgeon traditionally involves years of intensive training, requiring dedicated mentorship from experienced practitioners, access to specialized facilities, and substantial patient exposure for practice. This model is not only costly in terms of time and finances, but also faces limitations due to the availability of qualified mentors and the increasing demands on surgical staff. Consequently, there is growing interest in developing automated assistance technologies – robotic systems and advanced simulation tools – capable of augmenting surgical training, providing repetitive practice opportunities, and potentially reducing the burden on experienced surgeons while ensuring consistent skill development for the next generation.

The translation of artificial intelligence into practical surgical assistance is hindered by a significant generalization problem. Current machine learning models, often trained on simulated data or limited datasets, frequently fail when confronted with the unpredictable variability inherent in real surgical environments. Factors like differing tissue types, patient anatomy, and the dynamic nature of procedures create a vast ‘reality gap’ that existing algorithms struggle to bridge. Consequently, novel learning strategies – including techniques like transfer learning, meta-learning, and reinforcement learning with robust simulation – are crucial to develop systems capable of adapting to unforeseen circumstances and performing reliably across a diverse range of surgical scenarios. Overcoming this limitation isn’t merely about improving accuracy; it’s about building trust and ensuring patient safety by creating AI collaborators that can function effectively in the complex and often chaotic world of the operating room.

Specialization as a Path to Surgical Automation

The Surgical Robotics Transformer is a policy developed for execution of complex surgical procedures requiring dexterity and sequential actions. This policy is specifically designed to address the challenges of multi-step tasks common in surgery, such as those involving tissue manipulation, instrument tracking, and precise tool application. Unlike broadly applicable robotic policies, the Surgical Robotics Transformer is tailored to the surgical domain, prioritizing performance on tasks representative of surgical workflows and utilizing a transformer-based architecture to model task dependencies and facilitate robust action planning.

The Surgical Robotics Transformer diverges from generalist foundation models by prioritizing specialization in surgical skills. This focused approach allows the policy to achieve robust performance with significantly less training data compared to models requiring extensive datasets for broad applicability. By concentrating on the specific kinematics, constraints, and objectives of surgical tasks, the system minimizes the need for large-scale pre-training, enabling efficient development and deployment in resource-constrained environments. This data efficiency is achieved through a tailored architecture and training regimen optimized for the nuances of surgical manipulation, rather than attempting to generalize across diverse domains.

Precise robotic control during experimentation was maintained through the utilization of the Universal Robots UR5e Robotic Arm, configured with a Remote-Center-of-Motion (RCM) constraint. This RCM functionality allows for manipulation of instruments within the OpenHELP Phantom surgical simulator while minimizing unintended movement of the insertion point, crucial for surgical precision. The UR5e’s six degrees of freedom, combined with the RCM constraint, facilitated complex maneuvers and stable instrument control throughout the evaluation of the Surgical Robotics Transformer policy. The OpenHELP Phantom provided a realistic, yet controlled, environment for assessing the system’s performance under conditions mirroring surgical procedures.
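The geometric idea behind a Remote-Center-of-Motion constraint can be illustrated with a short sketch: the instrument shaft should always pass through the fixed insertion point, so one natural error measure is the distance from that point to the shaft's axis. The function name `rcm_error`, the coordinate convention, and the example values are illustrative assumptions; this is not the controller used in the paper or an API of the UR5e.

```python
import math


def rcm_error(tip, tail, trocar):
    """Distance from the trocar (insertion) point to the instrument axis.

    tip, tail: two 3D points on the instrument shaft (hypothetical inputs).
    trocar:    the 3D remote center of motion.
    A value of 0 means the shaft passes exactly through the trocar.
    """
    axis = [t - s for s, t in zip(tail, tip)]     # shaft direction
    rel = [p - s for s, p in zip(tail, trocar)]   # tail -> trocar
    # The norm of the cross product equals |axis| * distance-to-line.
    cx = axis[1] * rel[2] - axis[2] * rel[1]
    cy = axis[2] * rel[0] - axis[0] * rel[2]
    cz = axis[0] * rel[1] - axis[1] * rel[0]
    axis_len = math.sqrt(sum(c * c for c in axis))
    if axis_len == 0.0:
        raise ValueError("tip and tail must be distinct points")
    return math.sqrt(cx * cx + cy * cy + cz * cz) / axis_len


# Shaft along the z-axis; a trocar offset 1 cm sideways gives a 1 cm error.
error = rcm_error(tip=(0.0, 0.0, -0.1), tail=(0.0, 0.0, 0.2), trocar=(0.01, 0.0, 0.0))
```

A controller enforcing the constraint would keep this error near zero while still allowing the instrument to pivot about, and slide through, the insertion point.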

Deconstructing Complexity: The Illusion of Control

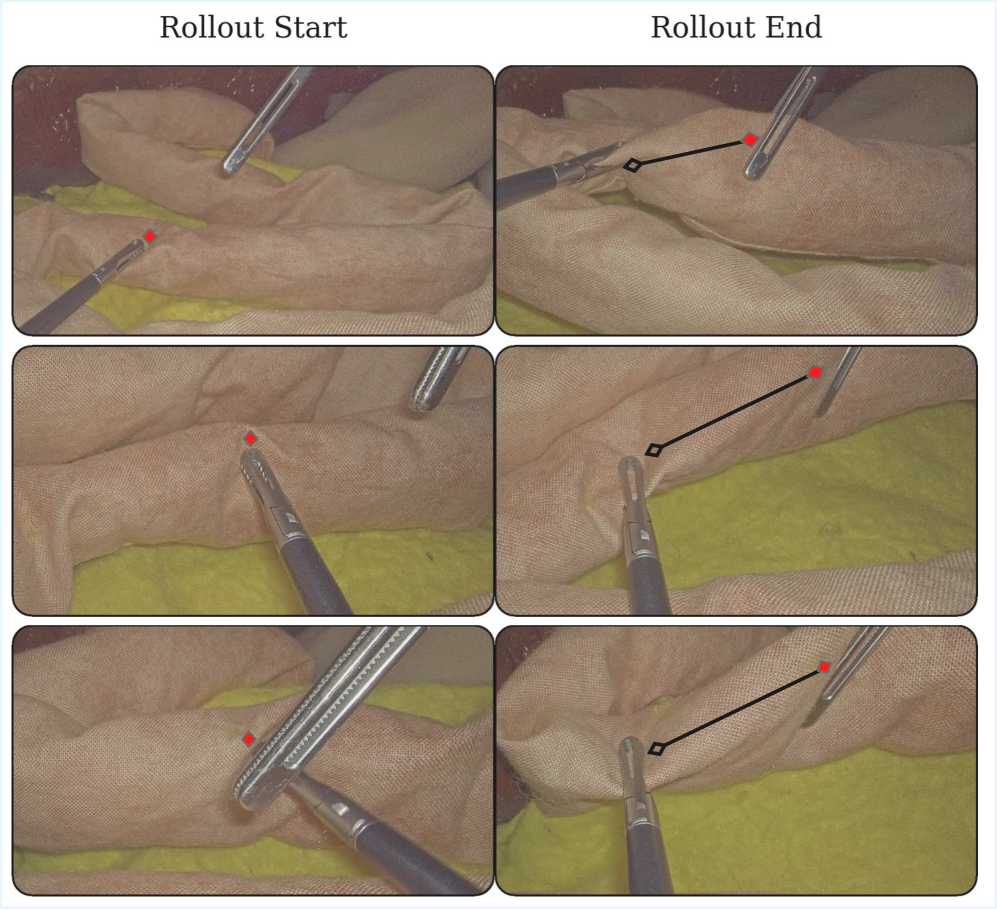

Action Chunking Transformers (ACT) are an imitation learning policy architecture that, according to this work, outperforms existing Vision-Language-Action (VLA) models on the evaluated surgical tasks. Rather than predicting a single action at each time step, the policy predicts a short sequence of future actions, an “action chunk”, from the current visual observation. Training uses a variational framework: the model learns to reconstruct the action chunks seen in demonstrations, conditioned on the observed input. Framing the problem as reconstruction of demonstrated chunks helps the policy capture temporal dependencies within a surgical maneuver and reduces the number of decisions at which errors can accumulate, which is particularly beneficial in data-limited scenarios.
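To make the notion of an action chunk concrete, the sketch below turns one demonstration into training pairs, each consisting of an observation and the next `chunk_size` actions. The helper `make_chunks`, the padding scheme (repeating the last action and flagging it), and the toy data are assumptions for illustration, not the paper's data pipeline.

```python
def make_chunks(observations, actions, chunk_size):
    """Pair each observation with the following `chunk_size` actions.

    Near the end of the demonstration the chunk is padded by repeating
    the final action; `is_pad` marks padded entries so that a training
    loss could ignore them.
    """
    if len(observations) != len(actions):
        raise ValueError("need one action per observation")
    if chunk_size < 1:
        raise ValueError("chunk_size must be at least 1")
    pairs = []
    for t, obs in enumerate(observations):
        chunk = list(actions[t:t + chunk_size])
        is_pad = [False] * len(chunk)
        while len(chunk) < chunk_size:
            chunk.append(actions[-1])
            is_pad.append(True)
        pairs.append((obs, chunk, is_pad))
    return pairs


# Toy demonstration: three frames, one scalar action per frame.
pairs = make_chunks(["frame0", "frame1", "frame2"], [0.0, 0.1, 0.2], chunk_size=2)
```

With a chunk size of 2, the first pair targets the actions at steps 0 and 1, while the last pair contains one real action and one padded entry.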

Action Chunking Transformers address the challenge of limited data availability in surgical training and robotic assistance by extending the established transformer architecture. This modification enables effective learning with fewer examples, a critical factor given the difficulty and cost of acquiring large-scale labeled surgical datasets. The integration of Endoscopic Visual Feedback further enhances performance; endoscopic video provides a readily available source of visual data that complements action chunking, allowing the model to correlate observed surgical maneuvers with corresponding visual inputs. This synergistic effect improves the model’s ability to generalize from limited data and accurately predict or assist with surgical tasks.

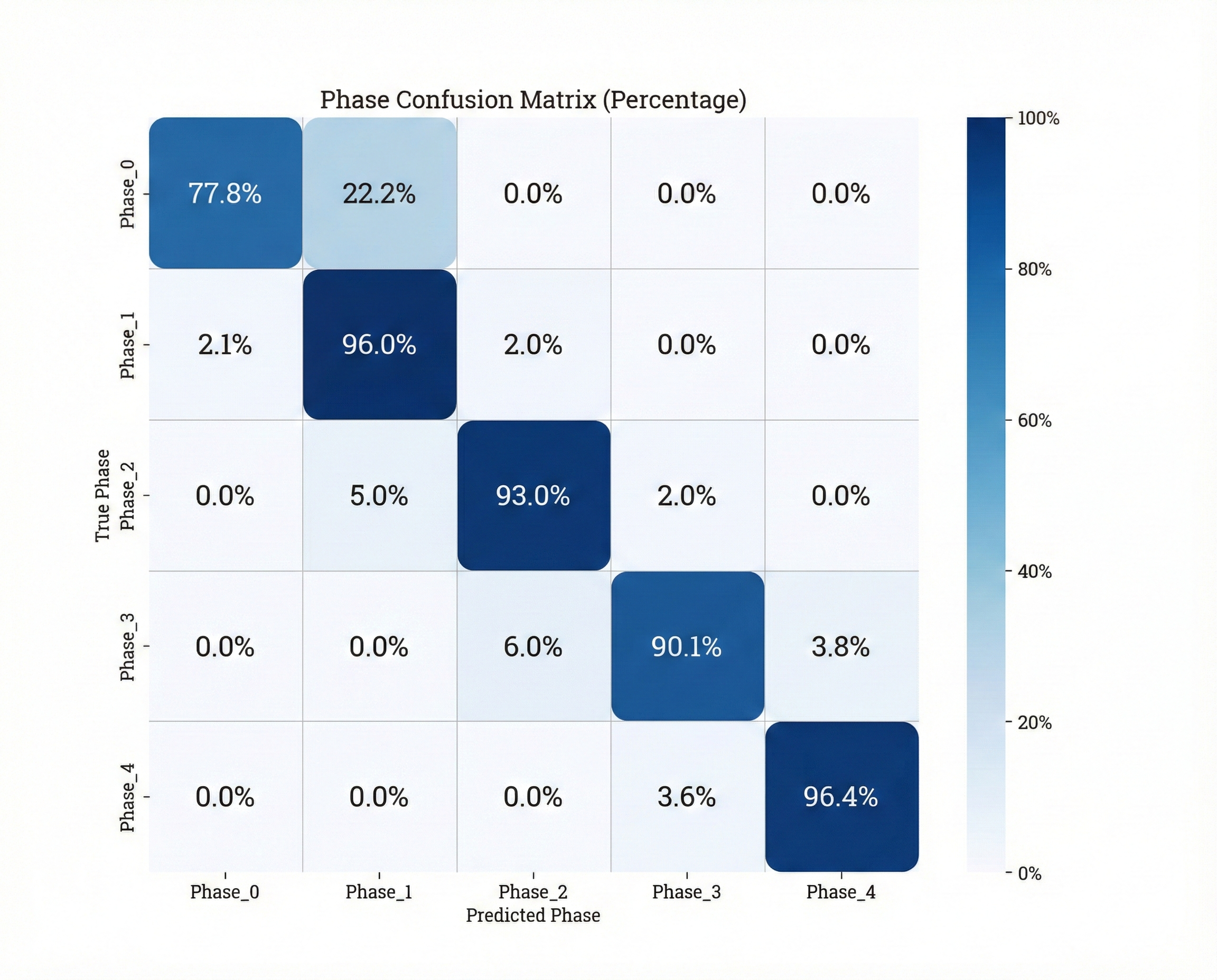

Implementation of a Mixture-of-Experts (MoE) architecture in conjunction with Action Chunking Transformers resulted in an 85% success rate during evaluation of the surgical bowel retraction task. This performance represents a 70% relative improvement compared to the baseline Action Chunking Transformer (ACT) model. The MoE architecture enables specialization within the model, allowing different ‘expert’ networks to focus on specific aspects of the surgical task, thereby increasing overall accuracy and efficiency in action prediction and execution.
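A minimal sketch of how a supervised Mixture-of-Experts output might be computed is given below. It assumes a gate that produces one logit per expert, a soft (softmax-weighted) combination of expert actions, and a cross-entropy loss that supervises the gate with a phase label. These choices, along with the names `moe_action` and `gate_loss`, are illustrative assumptions; the article does not specify how MoE-ACT routes or trains its experts.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def moe_action(gate_logits, expert_actions):
    """Blend the experts' action vectors using the gate probabilities."""
    weights = softmax(gate_logits)
    dim = len(expert_actions[0])
    return [
        sum(w * action[i] for w, action in zip(weights, expert_actions))
        for i in range(dim)
    ]


def gate_loss(gate_logits, phase_label):
    """Cross-entropy between the gate distribution and a labeled phase.

    Using phase labels as gate supervision is an assumption of this sketch.
    """
    return -math.log(softmax(gate_logits)[phase_label])


# Two hypothetical experts (e.g. "grasp" and "retract") with 2D actions.
blended = moe_action([0.0, 0.0], [[1.0, 0.0], [0.0, 1.0]])
loss = gate_loss([0.0, 0.0], phase_label=0)
```

With equal gate logits the two expert actions are averaged; as one logit grows, the output approaches that expert's action alone, which is the sense in which each expert can specialize on part of the task.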

The Fragile Promise of In Vivo Intelligence

A novel surgical assistance system, leveraging the Mixture-of-Experts architecture, was successfully implemented during in vivo porcine surgery, marking a significant step toward real-world clinical application. This approach allows the system to dynamically select and combine specialized “expert” networks tailored to different tissue types and surgical conditions encountered during the procedure. The in vivo validation demonstrated the system’s ability to function reliably within the complex and unpredictable environment of live tissue, paving the way for future development of intelligent tools that can augment a surgeon’s capabilities. By effectively navigating the challenges of a live surgical setting, this research highlights the potential of advanced machine learning to improve surgical precision, efficiency, and ultimately, patient outcomes.

The system’s capacity for zero-shot transfer was powerfully demonstrated through an 80% success rate on ex vivo porcine tissue. This signifies the model’s ability to generalize learned features from training datasets – initially focused on distinct image sources – to a completely new biological context without any additional training or fine-tuning on porcine data. Such performance is critical for practical surgical applications, where acquiring extensive labeled datasets for every anatomical variation or surgical scenario is impractical. The successful transfer highlights the robustness of the learned representations and suggests a fundamental understanding of tissue characteristics, enabling reliable performance even when presented with previously unseen biological samples. This adaptability represents a significant step towards creating surgical assistance tools that can operate effectively in diverse and unpredictable clinical environments.

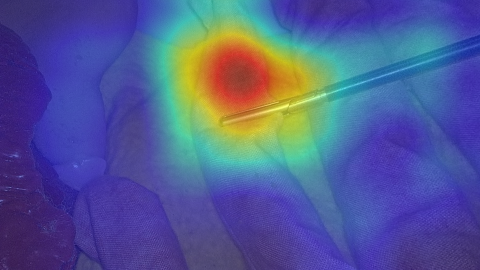

To understand how the system arrives at its classifications, researchers applied AblationCAM to the Phase Classifier, visualizing the learned feature representations. The technique ablates, or zeroes out, individual feature maps inside the network one at a time and measures how much the classification score drops; feature maps whose removal hurts the score most receive the largest weight in the final heatmap. The resulting heatmaps reveal which image regions and tissue features – such as textural characteristics or subtle boundary definitions – are most influential in determining the identified surgical phase. By pinpointing these critical visual cues, AblationCAM doesn’t simply confirm the system’s accuracy, but offers a window into its ‘reasoning’ – a crucial step towards building trust and ensuring responsible implementation of AI-assisted surgical tools, and potentially identifying previously unappreciated indicators of surgical progress.
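The core computation of Ablation-CAM, as described in its original publication, operates on the feature maps of a convolutional layer: each map is zeroed in turn, the relative drop in the class score becomes that map's weight, and the heatmap is the rectified weighted sum of the maps. The sketch below shows this on tiny nested lists. The function `ablation_cam`, the toy `score_fn`, and the example feature maps are hypothetical stand-ins, not the paper's Phase Classifier.

```python
def ablation_cam(feature_maps, score_fn):
    """Compute Ablation-CAM style weights and a heatmap.

    feature_maps: list of K maps, each a 2D list of floats (same shape).
    score_fn:     maps a list of feature maps to a scalar class score
                  (stands in for the rest of the network).
    """
    base = score_fn(feature_maps)
    if base == 0:
        raise ValueError("baseline score must be non-zero")
    weights = []
    for k in range(len(feature_maps)):
        # Zero out map k while leaving all other maps untouched.
        ablated = [
            [[0.0] * len(row) for row in fmap] if i == k else fmap
            for i, fmap in enumerate(feature_maps)
        ]
        weights.append((base - score_fn(ablated)) / base)
    height, width = len(feature_maps[0]), len(feature_maps[0][0])
    heatmap = [
        [
            max(0.0, sum(w * fmap[r][c] for w, fmap in zip(weights, feature_maps)))
            for c in range(width)
        ]
        for r in range(height)
    ]
    return weights, heatmap


# Toy example: the score depends three times more strongly on map 0.
maps = [[[1.0, 0.0], [0.0, 0.0]], [[0.0, 0.0], [0.0, 2.0]]]
toy_score = lambda m: 3.0 * sum(map(sum, m[0])) + sum(map(sum, m[1]))
weights, heatmap = ablation_cam(maps, toy_score)
```

In practice the heatmap is upsampled to the input resolution and overlaid on the endoscopic image; that step is omitted here.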

The pursuit of robust surgical imitation learning, as detailed in this work, resembles tending a garden rather than constructing a fortress. One anticipates imperfections, adjustments, and emergent behaviors. G. H. Hardy observed, “The essence of mathematics lies in its simplicity, and the art lies in knowing what to leave out.” Similarly, this research demonstrates that complex tasks benefit from carefully chosen expertise – the ‘experts’ within the Mixture-of-Experts architecture – allowing the system to focus on essential elements. It’s not about forcing a solution, but allowing the system to grow into competence, even from limited data, acknowledging that every refinement is merely a temporary reprieve from inevitable entropy.

What’s Next?

The pursuit of surgical imitation learning, even through architectures as nuanced as Mixture-of-Experts, merely delays the inevitable confrontation with irreducible complexity. This work demonstrates a refinement of policy, a more efficient mapping from observation to action, but it does not, and cannot, solve surgery. The system learns to navigate a local optimum of demonstrated skill, a temporary reprieve from the chaos inherent in biological tissue and unforeseen circumstance. Each successful imitation is a cache, storing a fleeting order between outages.

Future efforts will undoubtedly focus on extending this architecture to more complex procedures, larger datasets, and ultimately, unsupervised learning. However, the true challenge lies not in scaling the system, but in acknowledging its fundamental limitations. The promise of autonomous surgery is not the elimination of error, but the graceful management of it. There are no best practices, only survivors. The field must begin to embrace the inevitable failures, to design systems that recover from them, and to view each intervention not as a perfect execution, but as an improvisation within an unyielding system.

Architecture is, at its core, how one postpones chaos. The elegance of MoE-ACT lies not in its performance gains, but in its implicit recognition that perfect imitation is an illusion. The next generation of surgical robots will not be defined by their ability to replicate human skill, but by their capacity to learn from their mistakes, and to adapt to the unpredictable realities of the operating room.

Original article: https://arxiv.org/pdf/2601.21971.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-01-31 19:16