Author: Denis Avetisyan

This review explores how carefully crafted ‘skill frameworks’ unlock the potential of smaller language models for complex tasks in real-world industrial settings.

Research demonstrates that skill-based architectures let moderately sized language models approach the performance of larger models, with significant gains in VRAM efficiency, particularly for code-specialized models.

While large language models have demonstrated impressive capabilities, their deployment in resource-constrained industrial settings is often limited by cost and data security concerns. This work, ‘Agent Skill Framework: Perspectives on the Potential of Small Language Models in Industrial Environments’, investigates whether an Agent Skill framework, originally designed to enhance the performance of larger models, also benefits smaller language models (SLMs). Our findings reveal that moderately sized SLMs benefit substantially from this approach and achieve performance comparable to closed-source baselines, with code-specialized variants in particular offering improved GPU efficiency. Can this framework unlock the potential of SLMs for complex tasks, enabling robust and cost-effective AI solutions in practical industrial applications?

The Inevitable Bottleneck: Scaling Beyond Limits

Large Language Models (LLMs) have rapidly advanced natural language processing, exhibiting proficiency in tasks ranging from text generation to complex problem-solving. However, how much information they can use at once is bounded by the ‘context window’, the amount of text the model considers when generating a response. Processing long windows demands substantial memory and compute, creating a significant bottleneck as models and desired context lengths grow. Standard self-attention makes this worse because it scales quadratically: its computational cost grows with the square of the context length, so long documents and extended dialogues become increasingly difficult and expensive to handle. Consequently, research is actively focused on more efficient attention mechanisms and alternative architectures that mitigate these costs and let LLMs reach their potential without prohibitive expense.
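
To make the quadratic growth concrete, the sketch below counts the memory a single layer would need to materialize its raw attention-score matrices. It assumes a plain (non-fused) attention implementation, 32 heads and 2-byte fp16 scores. These figures are illustrative and not taken from the paper. Fused kernels avoid storing the full matrix, but their compute still grows with the square of the context length.

```python
def attention_score_bytes(context_len: int, num_heads: int = 32, bytes_per_elem: int = 2) -> int:
    """Bytes needed to hold one layer's n x n attention-score matrices.

    Assumes every head materializes a full (context_len x context_len)
    score matrix in fp16 (2 bytes per element).
    """
    return context_len * context_len * num_heads * bytes_per_elem


for n in (4_096, 8_192, 32_768):
    gib = attention_score_bytes(n) / 2**30
    print(f"context {n:>6} tokens: {gib:5.1f} GiB per layer")
```

Under these assumptions, doubling the context from 4,096 to 8,192 tokens quadruples the figure from 1 GiB to 4 GiB, and 32,768 tokens reach 64 GiB per layer.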

While Retrieval-Augmented Generation (RAG) has become a standard approach to enhancing Large Language Models with external knowledge, its effectiveness diminishes as the volume of retrieved context grows. The core issue is maintaining coherence and relevance amid increasingly long inputs: models struggle to pinpoint the most critical information in a sea of data, which leads to diluted or inaccurate responses. This isn’t simply a matter of computational cost. Even with sufficient processing power, attention becomes less focused, and the model fails to connect the query with the relevant contextual details. Complex reasoning, which demands synthesis and inference across multiple sources, is therefore particularly susceptible to degradation. This exposes the limits of scaling traditional context engineering to genuinely intricate problems.

Beyond Fixed Windows: The Rise of Dynamic Skills

The Agent Skill Framework departs from traditional large language model architectures that rely on fixed-size context windows. Instead, it implements a dynamic system composed of discrete, reusable skills. Each skill encapsulates a specific capability, such as information retrieval, data analysis, or logical inference. These skills are not merely prompts, but modular components designed to perform focused tasks. By representing knowledge and functionality as individual skills, the framework allows for selective application of relevant capabilities, avoiding the limitations imposed by a static context window and enabling adaptation to varying task requirements and input complexities.
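
Here is a minimal sketch of this idea. It assumes each skill is a named unit with a short description and a focused handler. The `Skill` and `SkillRegistry` classes are hypothetical. The keyword-overlap selector is only a runnable stand-in for the LLM-driven selection the framework relies on.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Skill:
    """Hypothetical skill: a short description plus a focused handler."""
    name: str
    description: str
    keywords: set[str]
    run: Callable[[str], str]


@dataclass
class SkillRegistry:
    skills: dict[str, Skill] = field(default_factory=dict)

    def register(self, skill: Skill) -> None:
        self.skills[skill.name] = skill

    def select(self, query: str) -> Skill | None:
        """Pick the skill whose keywords overlap the query most (toy heuristic).

        Returns None when no skill shares any keyword with the query.
        """
        words = set(query.lower().split())
        best = max(self.skills.values(), key=lambda s: len(s.keywords & words), default=None)
        if best is None or not best.keywords & words:
            return None
        return best


registry = SkillRegistry()
registry.register(Skill("retrieve", "Look up documents", {"find", "search", "lookup"}, lambda q: f"retrieved: {q}"))
registry.register(Skill("analyze", "Aggregate figures", {"average", "sum", "total"}, lambda q: f"analyzed: {q}"))

chosen = registry.select("compute the average revenue")
print(chosen.name)  # analyze
```

Only the selected skill's handler runs, so capabilities that are irrelevant to the query never enter the computation.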

The Agent Skill Framework builds upon In-Context Learning (ICL) by organizing learning capabilities into a structured, skill-based architecture. This approach moves beyond simply providing examples within a context window; instead, it decomposes complex tasks into smaller, manageable skills, each representing a specific function or knowledge domain. These skills are modular and reusable, allowing the agent to apply previously learned capabilities to new situations without retraining. The skill-based structure facilitates efficient task decomposition, as the agent can select and combine relevant skills to address a given problem, promoting knowledge transfer and reducing the need for extensive, task-specific ICL examples.
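
The decomposition step can be sketched as chaining small, reusable skills. The sketch assumes each skill maps a text input to a text output. The skill names and the plan format are hypothetical. In the framework an LLM would produce the plan; here it is written by hand.

```python
from typing import Callable

# Hypothetical atomic skills; each maps text in -> text out.
SKILLS: dict[str, Callable[[str], str]] = {
    # Keep tokens that are non-negative decimal numbers.
    "extract_numbers": lambda text: " ".join(
        t for t in text.split() if t.replace(".", "", 1).isdigit()
    ),
    "sum_numbers": lambda text: str(sum(float(t) for t in text.split())),
    "format_report": lambda text: f"Total: {text}",
}


def run_plan(plan: list[str], task_input: str) -> str:
    """Execute a decomposed task by chaining reusable skills in order."""
    result = task_input
    for step in plan:
        result = SKILLS[step](result)
    return result


# A new task reuses the same skills without retraining; only the plan changes.
print(run_plan(["extract_numbers", "sum_numbers", "format_report"],
               "Q1 revenue 12.5 and Q2 revenue 7.5"))  # Total: 20.0
```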

The Agent Skill Framework enhances contextual reasoning by dynamically managing the information utilized for each task. Instead of processing a fixed context window, the framework applies specific skills that retrieve and filter only the relevant data needed for the current objective. This active context management reduces redundancy by preventing the processing of irrelevant information, and maximizes relevance by prioritizing data directly applicable to the task at hand. Consequently, performance is improved through focused processing, and computational costs are reduced by minimizing the volume of data that requires analysis.
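
The sketch below shows one way a skill could filter its context before anything reaches the model. It keeps only chunks relevant to the query, most relevant first, until a token budget is spent. Two things are assumptions for illustration: relevance is a toy word-overlap score, and tokens are approximated by whitespace-separated words. A real system would use embeddings and the model's tokenizer.

```python
def select_context(query: str, chunks: list[str], token_budget: int) -> list[str]:
    """Return relevant chunks, highest overlap first, within a token budget."""
    q = set(query.lower().split())
    scored = [(len(q & set(c.lower().split())), c) for c in chunks]
    # Drop irrelevant chunks (score 0); sorting is stable, so ties keep input order.
    relevant = sorted((sc for sc in scored if sc[0] > 0), key=lambda sc: sc[0], reverse=True)
    kept, used = [], 0
    for _, chunk in relevant:
        cost = len(chunk.split())  # crude token estimate
        if used + cost <= token_budget:
            kept.append(chunk)
            used += cost
    return kept


chunks = [
    "revenue grew 10 percent in q3",
    "the office moved to a new building",
    "q3 revenue was driven by cloud sales",
]
print(select_context("q3 revenue growth", chunks, token_budget=20))
```

The office-move chunk is never passed on. With a tighter budget (10 words), only the first relevant chunk fits.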

Formalizing Intelligence: A Mathematical Foundation

The Agent Skill Framework leverages Partially Observable Markov Decision Processes (POMDPs) to provide a formal mathematical foundation for modeling agent behavior, particularly in scenarios involving incomplete information. A POMDP is defined by a tuple [latex](S, A, O, T, R, \Omega, \gamma)[/latex], where:

- S is the state space.
- A is the action space.
- O is the observation function [latex]O(o \mid s', a)[/latex], the probability of observing o after action a leads to state s'.
- T is the transition function.
- R is the reward function.
- Ω is the set of possible observations.
- γ is the discount factor.

By formulating skill acquisition and execution within this framework, the system explicitly models the agent’s belief state, a probability distribution over possible world states that is updated after each action and observation. This gives a rigorous representation of information-seeking behavior and supports analysis and optimization of decision-making under uncertainty. It also provides a formal way to evaluate how observations affect the agent’s behavior.
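
The belief update can be sketched as the standard discrete Bayes filter, [latex]b'(s') \propto O(o \mid s', a) \sum_{s} T(s' \mid s, a)\, b(s)[/latex]. Here O is the observation function, following the usual POMDP convention. This is a textbook sketch, not the paper's implementation. The two-state "document found / missing" world and its probabilities are hypothetical.

```python
Dist = dict[str, float]


def belief_update(belief: Dist, action: str, observation: str,
                  T: dict[tuple[str, str], Dist], O: dict[tuple[str, str], Dist]) -> Dist:
    """One step of the discrete POMDP belief update.

    b'(s') is proportional to O(o | s', a) * sum_s T(s' | s, a) * b(s).
    T[(s, a)] maps next states to probabilities; O[(s', a)] maps observations
    to probabilities. The state set is taken from the keys of `belief`.
    """
    states = list(belief)
    unnormalized = {}
    for s_next in states:
        # Prediction: probability of reaching s_next under the action.
        predicted = sum(T[(s, action)].get(s_next, 0.0) * belief[s] for s in states)
        # Correction: weight by how likely the observation is in s_next.
        unnormalized[s_next] = O[(s_next, action)].get(observation, 0.0) * predicted
    total = sum(unnormalized.values())
    if total == 0.0:
        raise ValueError("observation has zero probability under this model")
    return {s: p / total for s, p in unnormalized.items()}


# Hypothetical example: is the needed document in the index?
T = {("found", "search"): {"found": 1.0}, ("missing", "search"): {"missing": 1.0}}
O = {("found", "search"): {"hit": 0.8, "miss": 0.2},
     ("missing", "search"): {"hit": 0.3, "miss": 0.7}}
posterior = belief_update({"found": 0.5, "missing": 0.5}, "search", "hit", T, O)
print(posterior)  # found is about 0.727, missing about 0.273
```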

The Skill Reference Mechanism within the Agent Skill Framework is mathematically defined as a Markov kernel, denoted [latex]T(s'|s,a)[/latex], which specifies the probability of transitioning to a new state [latex]s'[/latex] given a current state [latex]s[/latex] and the execution of a specific skill [latex]a[/latex]. This formalization allows skills to condition their execution on the outputs of other skills, effectively sharing information and enabling compositional reasoning. Specifically, the kernel models the dependency between skills by representing the probability distribution over subsequent states, given the outcome of a prior skill’s execution. This probabilistic linkage enables the system to reason about the reliability and potential consequences of skill combinations, and to plan accordingly. The Markov property ensures that the future state depends only on the present state and action, simplifying the modeling of complex skill interactions.
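
Because skills are Markov kernels, running one skill after another is kernel composition (the Chapman-Kolmogorov equation). For two skills this is [latex]P(s'' \mid s) = \sum_{s'} T(s' \mid s, a_1)\, T(s'' \mid s', a_2)[/latex]. The sketch below assumes small discrete state sets. The "parse" and "answer" skills and their success rates are hypothetical. The composed kernel shows how the reliability of a skill chain can be read off directly.

```python
Kernel = dict[tuple[str, str], dict[str, float]]  # (state, skill) -> {next_state: prob}


def compose(T: Kernel, first: str, second: str, states: list[str]) -> dict[str, dict[str, float]]:
    """Two-step kernel for running skill `first` then `second`:
    P(s2 | s) = sum over s1 of T(s1 | s, first) * T(s2 | s1, second)."""
    return {
        s: {
            s2: sum(T[(s, first)].get(s1, 0.0) * T[(s1, second)].get(s2, 0.0) for s1 in states)
            for s2 in states
        }
        for s in states
    }


states = ["raw", "parsed", "answered"]
T: Kernel = {
    # Hypothetical "parse" skill: succeeds 90% of the time on raw input.
    ("raw", "parse"): {"parsed": 0.9, "raw": 0.1},
    ("parsed", "parse"): {"parsed": 1.0},
    ("answered", "parse"): {"answered": 1.0},
    # Hypothetical "answer" skill: needs parsed input, succeeds 80% of the time.
    ("raw", "answer"): {"raw": 1.0},
    ("parsed", "answer"): {"answered": 0.8, "parsed": 0.2},
    ("answered", "answer"): {"answered": 1.0},
}
two_step = compose(T, "parse", "answer", states)
print({k: round(v, 2) for k, v in two_step["raw"].items()})
# {'raw': 0.1, 'parsed': 0.18, 'answered': 0.72}
```

Under these assumed rates, the chain reaches an answer from raw input 72% of the time. A planner could compare that figure against alternative skill sequences.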

The Agent Skill Framework’s functionality rests on formalized Skill Descriptors and Skill Policies. Skill Descriptors specify a skill’s inputs, outputs, preconditions, and effects, allowing static analysis of skill compatibility and potential interactions. Skill Policies, in turn, define the agent’s decision-making while it executes a skill. A policy maps the agent’s belief state and the skill inputs to a probability distribution over possible actions, [latex] \pi(a|b,s) [/latex]. This formalization enables automated verification, confirming that skill compositions meet specified safety or performance criteria. It also enables optimization through techniques such as reinforcement learning or automated planning, so the system can learn and refine complex behaviors with less manual intervention.
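
A minimal sketch of the static check that descriptors make possible follows. It assumes preconditions and effects are symbolic facts with add-only, STRIPS-style semantics. The `SkillDescriptor` fields and both example skills are hypothetical, not the framework's actual schema.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SkillDescriptor:
    """Hypothetical descriptor: inputs/outputs plus symbolic preconditions and effects."""
    name: str
    inputs: frozenset[str]
    outputs: frozenset[str]
    preconditions: frozenset[str] = frozenset()
    effects: frozenset[str] = frozenset()


def verify_sequence(seq: list[SkillDescriptor], initial: set[str]) -> tuple[bool, str]:
    """Check, without executing anything, that each skill's inputs and
    preconditions are available when it runs; outputs and effects are
    accumulated as facts for later skills."""
    facts = set(initial)
    for skill in seq:
        missing = (skill.inputs | skill.preconditions) - facts
        if missing:
            return False, f"{skill.name} is missing {sorted(missing)}"
        facts |= skill.outputs | skill.effects
    return True, "ok"


load = SkillDescriptor("load_filing", inputs=frozenset({"ticker"}), outputs=frozenset({"filing_text"}))
tag = SkillDescriptor("tag_entities", inputs=frozenset({"filing_text"}),
                      outputs=frozenset({"entity_tags"}), preconditions=frozenset({"taxonomy_loaded"}))
print(verify_sequence([load, tag], {"ticker"}))
# (False, "tag_entities is missing ['taxonomy_loaded']")
```

The composition is rejected before any model call is made. Adding `"taxonomy_loaded"` to the initial facts makes it pass.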

Real-World Impact: Efficiency and Adaptability

The Agent Skill Framework offers a marked improvement in video random access memory (VRAM) efficiency compared with conventional large language model (LLM) deployments. The gain comes from decoupling task execution: the system loads only the components needed for each operation instead of holding everything at once. This reduces both the computational burden and the memory footprint, which makes the framework well suited to resource-constrained environments such as edge devices or systems with limited GPU memory. It also extends LLM applications beyond high-performance computing centers to more accessible platforms and to use cases where minimizing resource consumption is paramount.
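
One way to read this claim is through the KV cache, which grows linearly with the number of tokens in context. The reading assumes the skill catalogue follows a progressive-disclosure pattern: short metadata for every skill stays in context, and a skill's full instructions load only when it is selected. The model dimensions below (36 layers, 8 KV heads, head size 128, fp16) and the catalogue sizes are hypothetical.

```python
def kv_cache_bytes(tokens: int, layers: int = 36, kv_heads: int = 8,
                   head_dim: int = 128, bytes_per_elem: int = 2) -> int:
    """KV-cache size: one key and one value vector per token, per layer, per KV head."""
    return 2 * layers * kv_heads * head_dim * bytes_per_elem * tokens


def context_tokens(skills: list[tuple[int, int]], selected: set[int], lazy: bool) -> int:
    """Tokens of skill material held in context.

    Each skill is (metadata_tokens, body_tokens). Eager loading keeps every
    body resident; lazy loading keeps all metadata but only selected bodies.
    """
    total = 0
    for i, (meta, body) in enumerate(skills):
        total += meta
        if not lazy or i in selected:
            total += body
    return total


catalogue = [(50, 2_000)] * 100  # hypothetical: 100 skills, 50-token summary, 2,000-token body
eager = context_tokens(catalogue, selected={7}, lazy=False)
lazy = context_tokens(catalogue, selected={7}, lazy=True)
for label, toks in (("eager", eager), ("lazy", lazy)):
    print(f"{label}: {toks} tokens, {kv_cache_bytes(toks) / 2**30:.2f} GiB KV cache")
```

With these placeholder sizes, eager loading holds 205,000 tokens (about 28 GiB of KV cache). Lazy loading holds 7,000 tokens (under 1 GiB).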

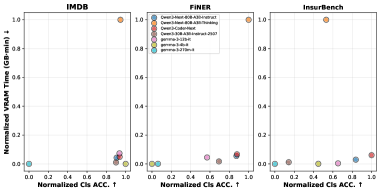

The Agent Skill Framework is model-agnostic: it can run on different large language models (LLMs) as its backbone. This modularity permits deploying either instruction-tuned LLMs, which are proficient at general conversational tasks, or code-specialized LLMs, which excel at programming and logical reasoning. Notably, evaluations show that code-specialized LLMs achieve the lowest VRAM time, indicating greater computational efficiency during code-related operations. This suggests the backbone can be chosen to fit the task, balancing speed and resource use, which is a substantial advantage where computational resources are limited.
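
The sketch below assumes "VRAM time" means memory occupancy integrated over wall-clock time (GB·s). That definition is an illustrative guess, not the paper's. The code integrates sampled memory readings with the trapezoidal rule, then picks the lowest-VRAM-time model that meets a quality floor. Model names and all numbers are placeholders, not measurements from the paper.

```python
from __future__ import annotations


def vram_time(samples: list[tuple[float, float]]) -> float:
    """Integrate (timestamp_s, vram_gb) samples with the trapezoidal rule -> GB*s."""
    return sum((t1 - t0) * (m0 + m1) / 2 for (t0, m0), (t1, m1) in zip(samples, samples[1:]))


def pick_model(candidates: dict[str, tuple[float, float]], min_score: float) -> str | None:
    """Return the model with the lowest VRAM time among those scoring >= min_score."""
    eligible = {name: vt for name, (score, vt) in candidates.items() if score >= min_score}
    return min(eligible, key=eligible.get) if eligible else None


# Placeholder data: name -> (task score, VRAM time in GB*s).
runs = {
    "model_a": (0.62, vram_time([(0, 40.0), (30, 42.0), (60, 41.0)])),
    "model_b": (0.60, vram_time([(0, 30.0), (20, 31.0), (40, 30.0)])),
}
print(pick_model(runs, min_score=0.55))  # model_b
```

Raising `min_score` above what a model achieves removes it from consideration regardless of its efficiency.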

Evaluation on the LangChain DeepAgent platform confirms the substantial benefits of this framework: skill-selection accuracy reached 100% on the complex tasks tested. Notably, the Qwen3-80B-Instruct model achieved a score of 0.654 on the FiNER dataset, a financial information-extraction benchmark, when integrated within this system. Without Agent Skills, the same setup scored 0.198. This more-than-threefold improvement highlights the framework’s capacity to improve the precision of complex task completion and knowledge retrieval.
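
Skill-selection accuracy is the share of tasks where the agent picked the intended skill. The helper below computes it; its name is hypothetical. The last lines restate the FiNER figures reported above as absolute and relative gains.

```python
def selection_accuracy(predicted: list[str], expected: list[str]) -> float:
    """Fraction of tasks where the chosen skill matches the intended one."""
    if not expected or len(predicted) != len(expected):
        raise ValueError("need non-empty lists of equal length")
    return sum(p == e for p, e in zip(predicted, expected)) / len(expected)


# FiNER scores reported above for Qwen3-80B-Instruct.
baseline, with_skills = 0.198, 0.654
print(f"absolute gain: {with_skills - baseline:.3f}")  # 0.456
print(f"relative gain: {with_skills / baseline:.2f}x")  # 3.30x
```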

Towards Robust Intelligence: A Future of Dynamic Skills

The pursuit of efficient artificial intelligence has led researchers to investigate smaller language models within an agent skill framework. This approach prioritizes task completion not through sheer model size but through a curated selection of specialized skills. The results discussed here indicate that moderately sized models, when integrated strategically, can approach the performance of significantly larger counterparts while sharply reducing computational demands and costs. Skill-based reasoning lets agents decompose complex problems into manageable components that play to the strengths of compact models. This paves the way for more accessible and sustainable AI systems and offers a viable path to deploying intelligent agents on resource-constrained platforms.

The architecture could be extended further with Hierarchical Multi-Agent Systems, which tackle increasingly intricate challenges through distributed cognition. This approach moves beyond single-agent problem-solving toward collaboration. Specialized agents, each with a focused skill set, decompose complex tasks into manageable sub-problems and work on them in concert. Organizing agents into hierarchies, with higher-level agents coordinating lower-level specialists, could help the system navigate ambiguity and adapt to dynamic environments more efficiently. Such a system mirrors the collaborative intelligence observed in natural systems. It points toward agents that not only execute predefined tasks but also address novel situations through collective reasoning.
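
A minimal sketch of such a hierarchy follows. A coordinator splits a task into sub-problems and routes each one to a specialist. Because the coordinator is itself callable, it can serve as a specialist inside another coordinator. The `Coordinator` class, the plan format `"domain: subtask; ..."` and the specialists are hypothetical. A real coordinator would ask an LLM to produce the plan.

```python
from typing import Callable

Specialist = Callable[[str], str]


class Coordinator:
    """Hypothetical higher-level agent that delegates sub-problems to specialists."""

    def __init__(self, specialists: dict[str, Specialist]) -> None:
        self.specialists = specialists

    def decompose(self, task: str) -> list[tuple[str, str]]:
        # Toy plan format "domain: subtask; domain: subtask".
        plan = []
        for part in task.split(";"):
            domain, _, subtask = part.partition(":")
            if subtask.strip():
                plan.append((domain.strip(), subtask.strip()))
        return plan

    def __call__(self, task: str) -> str:
        results = []
        for domain, subtask in self.decompose(task):
            worker = self.specialists.get(domain)
            results.append(worker(subtask) if worker else f"[unhandled {domain}] {subtask}")
        return " | ".join(results)


# A lower-level coordinator nested inside a higher-level one.
analytics = Coordinator({"stats": lambda t: f"stats({t})", "charts": lambda t: f"charts({t})"})
top = Coordinator({"finance": lambda t: f"finance({t})", "analytics": analytics})
print(top("finance: extract revenue; analytics: charts: plot trend; legal: review terms"))
# finance(extract revenue) | charts(plot trend) | [unhandled legal] review terms
```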

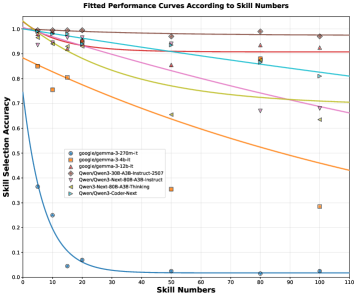

Research indicates a strong correlation between model size and the capacity for robust skill-based reasoning. Models surpassing 12 billion parameters consistently maintained high accuracy in skill selection, even when presented with a diverse set of 100 distinct skills. Conversely, smaller models exhibited a rapid decline in performance as the number of skills increased, suggesting a limited capacity for effectively managing and applying a broad repertoire of capabilities. This finding underscores the potential of scaling the skill-based reasoning process – by leveraging larger models – to cultivate genuinely adaptable and intelligent agents. Such agents would not only be capable of tackling complex challenges but also of continuously learning and innovating by integrating new skills without sacrificing overall performance or reliability.
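
The dependence on the number of skills can be probed with a small harness. It grows the candidate list offered to a selector and tracks how often the correct skill is chosen. The function name, task format and oracle selector are hypothetical. A real evaluation would replace the oracle with a call to the model under test. This is not the paper's evaluation code.

```python
import random
from typing import Callable

# selector(query, candidate_skill_names) -> chosen name; in practice an LLM call.
Selector = Callable[[str, list[str]], str]


def accuracy_vs_catalogue_size(selector: Selector, tasks: list[tuple[str, str]],
                               all_skills: list[str], sizes: list[int],
                               seed: int = 0) -> dict[int, float]:
    """For each catalogue size k, offer the target skill plus k-1 random
    distractors and record how often the selector picks the target."""
    rng = random.Random(seed)
    curve = {}
    for k in sizes:
        if not 1 <= k <= len(all_skills):
            raise ValueError(f"catalogue size {k} out of range")
        hits = 0
        for query, target in tasks:
            distractors = rng.sample([s for s in all_skills if s != target], k - 1)
            candidates = distractors + [target]
            rng.shuffle(candidates)
            hits += selector(query, candidates) == target
        curve[k] = hits / len(tasks)
    return curve


skills = [f"skill_{i}" for i in range(100)]
tasks = [(f"please run skill_{i}", f"skill_{i}") for i in range(0, 100, 10)]
oracle = lambda query, candidates: next(s for s in candidates if s in query.split())
print(accuracy_vs_catalogue_size(oracle, tasks, skills, [10, 50, 100]))
# {10: 1.0, 50: 1.0, 100: 1.0}
```

A model-backed selector would show whether accuracy stays flat as the catalogue grows, or drops as reported above for smaller models.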

The pursuit of capable agents within constrained environments echoes a natural order. This work, examining small language models and agent skill frameworks, isn’t about building intelligence, but rather cultivating it. The findings, that comparable performance can be achieved with significantly reduced VRAM, suggest that a system’s true strength lies not in brute force but in efficient adaptation. As the line often attributed to Peter Drucker has it, “The best way to predict the future is to create it.” Here, the creation isn’t a monolithic structure but a flexible framework, growing toward capability through skillful context engineering and model selection. Every refinement to this framework begins as a prayer for efficiency and ends in repentance as new limitations emerge, a testament to the system simply growing up.

What Lies Ahead?

The demonstrated efficacy of smaller language models within an agent skill framework does not signal a triumph over scale, but rather a realignment of expectations. The pursuit of ever-larger models resembles a protracted attempt to brute-force intelligence – a strategy inevitably constrained by diminishing returns and escalating resource demands. The current work suggests that focused specialization, particularly within code, offers a more viable, though not necessarily simpler, path. One anticipates, however, that the challenges inherent in defining, decomposing, and selecting appropriate skills will prove far more persistent than any VRAM constraint.

The framework, by its very nature, acknowledges that complete foresight is impossible. Each skill chosen, each context engineered, represents a pre-commitment to a particular mode of failure. Stability, in this context, is merely an illusion that caches well. The true metric of progress will not be performance on curated benchmarks, but rather the system’s capacity to gracefully degrade – to recognize and adapt to the inevitable emergence of unanticipated states.

The ultimate limitation isn’t computational, but epistemological. A guarantee is just a contract with probability. The system, as a complex adaptive ecosystem, will always exceed the predictive power of its creators. Chaos isn’t failure – it’s nature’s syntax. Future work should prioritize methods for observing, interpreting, and learning from these emergent behaviors, rather than attempting to suppress them through increasingly elaborate control mechanisms.

Original article: https://arxiv.org/pdf/2602.16653.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/


2026-02-19 22:52