Author: Denis Avetisyan

As household robots become more commonplace, understanding how families balance convenience with data privacy is crucial for responsible design and adoption.

This review examines multi-person households’ privacy preferences for shared robots and offers design recommendations for manufacturers to address concerns regarding data security and accommodate diverse user needs.

As household robots become increasingly integrated into daily life, a paradox emerges: these helpful devices collect significantly more personal data than traditional domestic appliances. This research, titled ‘“It’s like a pet…but my pet doesn’t collect data about me”: Multi-person Households’ Privacy Design Preferences for Household Robots’, investigates how families perceive privacy risks associated with shared robotic devices and collaboratively designs solutions to mitigate them. Findings reveal that participants prioritize user control over data, accessible privacy settings, and customizable features to accommodate diverse household member preferences. How can robot manufacturers translate these insights into designs that foster trust and respect user privacy in the evolving landscape of multi-user homes?

The Home as Ecosystem: Privacy in a Connected World

The modern home is rapidly evolving into a digitally connected ecosystem, with household robots transitioning from futuristic concepts to everyday realities. These devices, encompassing robotic vacuum cleaners, lawnmowers, and even companion robots, are increasingly designed to automate tasks and offer assistance with daily living. Beyond simple automation, advancements in artificial intelligence and machine learning are enabling robots to perform more complex functions, such as monitoring home security, providing elder care support, and even offering companionship. This growing integration promises significant convenience and improved quality of life for many, but also signals a fundamental shift in how individuals interact with their living spaces and the technologies within them. The proliferation of these robotic helpers highlights a move towards proactive, rather than reactive, home management, with devices anticipating needs and adapting to routines.

The increasing presence of smart devices within the home environment generates substantial privacy concerns centered around the collection and potential misuse of personal data. These devices, ranging from voice assistants to security cameras and automated appliances, continuously gather information about occupants’ habits, routines, and even intimate details of daily life. This data, often transmitted and stored remotely, creates vulnerabilities to security breaches, unauthorized surveillance, and potential profiling. Beyond simple data harvesting, the aggregation of information from multiple devices paints an incredibly detailed picture of household activity, raising questions about who has access to this information and how it’s being utilized – concerns that extend beyond traditional data privacy frameworks designed for less pervasive technologies. The sheer volume and sensitivity of data collected necessitates a re-evaluation of current privacy protections to ensure individuals maintain control over their personal information within the increasingly connected home.

Much of this data is gathered without explicit user awareness, and it extends beyond basic usage patterns to potentially sensitive details such as sleep schedules, dietary preferences, and social interactions. Its volume and variety complicate both security and responsible use, raising the risk of misuse, unauthorized access, and the erosion of personal boundaries within the home. Data minimization, transparency, and robust security measures are therefore crucial to protecting user privacy as these technologies evolve.

Current privacy regulations, largely designed for data transmitted across networks or collected by stationary devices, prove inadequate when applied to the dynamic environment of a shared home populated by robots. This study’s qualitative research, involving in-depth interviews with households integrating robotic assistance, reveals a nuanced understanding of these challenges; participants expressed discomfort not with data collection itself, but with the ambiguity surrounding how that data, gathered continuously within intimate spaces, is utilized and potentially shared. The research highlights a key disconnect: existing frameworks focus on informing users about data practices, yet households consistently report a feeling of powerlessness to meaningfully control data flows from devices operating within their private lives, particularly as robots navigate and learn from shared spaces and interpersonal interactions. This suggests a need for privacy regulations specifically tailored to the unique characteristics of robotic data collection within the home, moving beyond simple transparency to emphasize user agency and contextual control.

Designing for Resilience: A Multifaceted Approach to Privacy

Effective privacy design necessitates a holistic approach extending beyond purely technical implementations. While encryption, secure data storage, and access controls are vital, usability considerations are equally critical; complex or unintuitive privacy settings can lead to user frustration and, ultimately, the disabling of protective features or acceptance of default, potentially less private, configurations. Therefore, privacy-respecting systems must prioritize clear communication of data collection practices, transparent control mechanisms, and user interfaces designed to facilitate informed decision-making regarding personal information. Ignoring the user experience introduces significant risk, as even the most robust technical safeguards are ineffective if users cannot easily understand or utilize them.

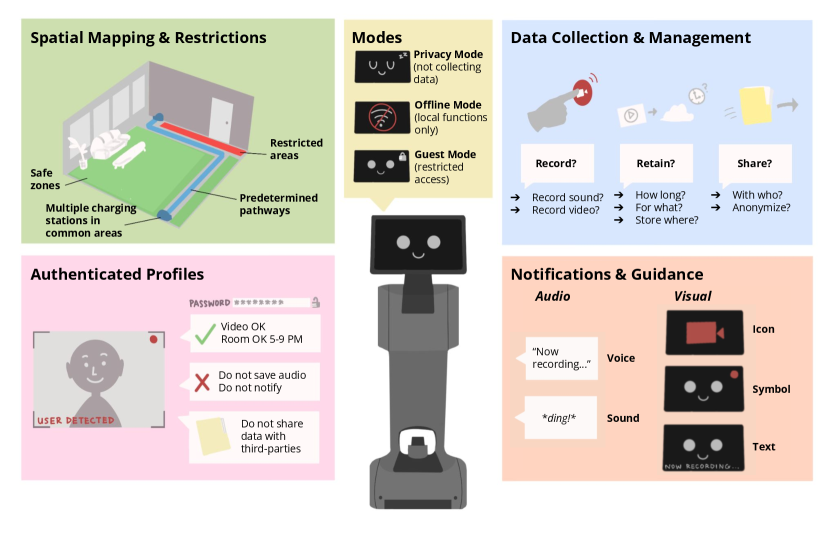

Effective implementation of user control necessitates providing granular privacy settings, allowing individuals to specify preferences for data collection, processing, and sharing. This includes clear and accessible dashboards for managing permissions, the ability to review and modify collected data, and options to opt-out of specific data uses. Furthermore, systems should support data portability, enabling users to easily transfer their information to other services. Transparency regarding data practices is crucial; users require understandable explanations of how their data is used and with whom it is shared to make informed decisions about their privacy. Robust mechanisms for revoking consent and initiating data deletion, adhering to applicable regulations like GDPR and CCPA, are also essential components of user control.

Robust user authentication relies on verifying claimed identities to prevent unauthorized system access and data breaches. Multifactor authentication (MFA), combining two or more verification factors – something the user knows (password), something the user has (security token or mobile device), or something the user is (biometrics) – significantly enhances security compared to single-factor methods. Password policies should enforce complexity and regular updates, while biometric authentication requires careful consideration of data privacy and potential vulnerabilities. Secure storage of authentication credentials, utilizing hashing algorithms and salting techniques, is critical to protect against compromise. Furthermore, adaptive authentication, which adjusts security requirements based on user behavior and risk factors, provides a dynamic layer of protection.
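To make the point about salted hashing concrete, here is a minimal sketch using only Python's standard library. It is not taken from the study: the function names `hash_password` and `verify_password`, the 16-byte salt, and the iteration count are illustrative assumptions, and a production system would choose its own parameters.

```python
import hashlib
import hmac
import os
from typing import Optional, Tuple

# Assumed work factor for illustration; tune for the target hardware.
ITERATIONS = 600_000


def hash_password(password: str, salt: Optional[bytes] = None) -> Tuple[bytes, bytes]:
    """Return (salt, derived_key) using PBKDF2-HMAC-SHA256."""
    if salt is None:
        salt = os.urandom(16)  # fresh random salt for each stored credential
    key = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return salt, key


def verify_password(password: str, salt: bytes, expected_key: bytes) -> bool:
    """Re-derive the key with the stored salt and compare in constant time."""
    _, key = hash_password(password, salt)
    return hmac.compare_digest(key, expected_key)
```

Only the salt and the derived key would be stored, never the password itself. Because each credential gets its own salt, two household members who pick the same password still end up with different stored values.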

Data management protocols require a tiered approach to security, encompassing encryption at rest and in transit, as well as implementation of strict access controls based on the principle of least privilege. Responsible access necessitates detailed audit logging of all data interactions, including user access and system modifications, to facilitate accountability and detect potential breaches. Furthermore, organizations must establish clear procedures for users to exercise their right to data deletion, ensuring complete and verifiable removal of personal information from all systems, including backups, within a defined timeframe and in compliance with relevant data protection regulations like GDPR and CCPA. These protocols should be regularly reviewed and updated to address evolving threats and technological advancements.
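A rough sketch of how least privilege and audit logging might fit together is shown below. The roles, permission names, and the `AuditedStore` class are hypothetical and chosen only for illustration; they do not describe any particular robot platform.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical roles, each granted only the permissions it needs.
ROLE_PERMISSIONS = {
    "owner": {"read_map", "view_camera", "delete_data"},
    "member": {"read_map"},
    "guest": set(),
}


@dataclass
class AuditedStore:
    """Checks every request against the role table and records the outcome."""
    audit_log: list = field(default_factory=list)

    def access(self, user: str, role: str, action: str) -> bool:
        # Unknown roles get no permissions at all (deny by default).
        allowed = action in ROLE_PERMISSIONS.get(role, set())
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "role": role,
            "action": action,
            "allowed": allowed,
        })
        return allowed
```

Denied requests are logged as well as granted ones, since refused attempts are often the earliest sign of misuse.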

The Social Home: Privacy in a Multi-User Ecosystem

Household robots, unlike personal computing devices, function within shared living spaces occupied by multiple individuals, each with potentially differing privacy expectations and concerns. Consequently, privacy-by-design frameworks must extend beyond individual user models to encompass a Multi-User Perspective. This requires consideration of how the robot collects, processes, and shares data not only in relation to a primary user, but also concerning all members of the household, including guests. Failing to account for these complex social dynamics can lead to unintentional privacy violations, erosion of trust, and ultimately, hinder the successful integration of robots into domestic environments. Effective privacy design, therefore, necessitates identifying and addressing the privacy needs of all stakeholders within the robot’s operational environment.
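One way to picture a multi-user model is a rule that merges each person's preferences into a single effective setting. The sketch below assumes a "most restrictive preference wins" policy. That policy, the `Level` scale, and the function name are assumptions made for illustration, not a recommendation drawn from the study.

```python
from enum import IntEnum
from typing import Dict


class Level(IntEnum):
    OFF = 0    # do not collect this data type
    LOCAL = 1  # collect, but keep on the device
    CLOUD = 2  # collect and upload


def effective_settings(household: Dict[str, Dict[str, Level]]) -> Dict[str, Level]:
    """Merge per-person preferences; the most restrictive level wins.

    A person who states no preference for a data type is treated as
    having no opinion on it (an assumption of this sketch).
    """
    result: Dict[str, Level] = {}
    for prefs in household.values():
        for data_type, level in prefs.items():
            result[data_type] = min(result.get(data_type, level), level)
    return result


household = {
    "ana": {"audio": Level.CLOUD, "video": Level.LOCAL},
    "ben": {"audio": Level.OFF, "video": Level.CLOUD},
}
settings = effective_settings(household)
```

Here `settings` disables audio collection entirely and keeps video on the device, because each is the stricter of the two stated preferences. Other policies, such as per-room or per-person overrides, would need a different merge rule.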

Robot mobility within a home environment introduces specific spatial privacy concerns due to the robot’s capacity to physically access and observe various areas. Unlike stationary devices, a mobile robot can traverse multiple rooms, potentially collecting data – including audio, video, and environmental sensor readings – from spaces considered private, such as bedrooms or bathrooms. This movement necessitates consideration of not only where data is collected, but also how the robot’s navigation impacts the perception of privacy within the home. The robot’s ability to map and remember the layout of a home further exacerbates these concerns, creating a persistent record of spatial access and potentially enabling retroactive analysis of activity within those spaces.
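Spatial concerns of this kind are sometimes handled with "private zones" in which sensing is switched off. The following is a minimal sketch that assumes rectangular zones on a flat floor plan measured in metres; the `Zone` class, the coordinates, and `sensors_allowed` are hypothetical names introduced for illustration.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class Zone:
    """Axis-aligned rectangle on the home map (coordinates in metres)."""
    name: str
    x_min: float
    y_min: float
    x_max: float
    y_max: float

    def contains(self, x: float, y: float) -> bool:
        # Boundaries count as inside, so the robot errs toward privacy.
        return self.x_min <= x <= self.x_max and self.y_min <= y <= self.y_max


# Example layout, assumed for illustration only.
PRIVATE_ZONES = (
    Zone("bedroom", 0.0, 0.0, 4.0, 3.0),
    Zone("bathroom", 4.0, 0.0, 6.0, 2.0),
)


def sensors_allowed(x: float, y: float, zones: Sequence[Zone] = PRIVATE_ZONES) -> bool:
    """Return True only when the position lies outside every private zone."""
    return not any(zone.contains(x, y) for zone in zones)
```

A check like this governs only live sensing at the robot's current position. It does not address the stored map itself, which the paragraph above identifies as a separate, persistent concern.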

Effective privacy policies for household robots require explicit detailing of all data collection, storage, and usage practices. These policies must extend beyond legal compliance to provide accessible explanations for all household members, regardless of technical expertise. Specifically, the policies should outline what data is collected (e.g., audio, video, location), how that data is used (e.g., for robot functionality, service improvement, third-party access), with whom it is shared, and the mechanisms available for data access, modification, or deletion. Transparency regarding data retention periods and security measures is also crucial for building trust and enabling informed consent amongst all users within the household.

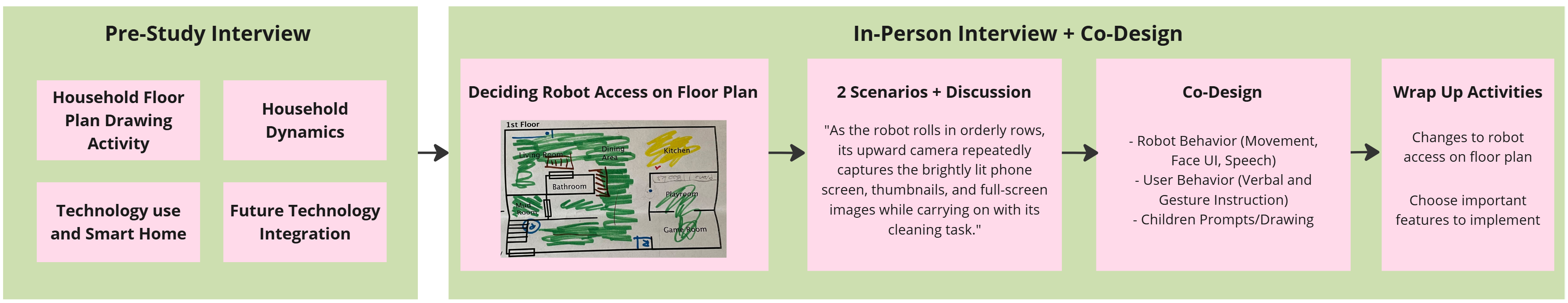

The Co-Design method offers a user-centered approach to the development of household robots by actively incorporating the perspectives of all potential users throughout the design process. This study demonstrates the value of this methodology through qualitative insights gathered from direct user involvement, allowing for the identification of nuanced privacy needs and preferences often overlooked in traditional development cycles. By facilitating collaborative sessions and iterative prototyping with household members, developers can ensure that the resulting robot functionalities and privacy features align with real-world usage scenarios and address the specific concerns of those sharing the domestic environment. This proactive engagement is crucial for building trust and fostering acceptance of robotic technologies within the home.

The Evolving Contract: Long-Term Trust in a Connected Future

The perception of privacy isn’t static; research demonstrates a longitudinal shift in how individuals evaluate and adjust their privacy settings over time. This ongoing evaluation isn’t simply about reacting to breaches, but reflects a dynamic interplay between technological understanding, evolving social norms, and personal experiences. The study indicates users don’t adopt a ‘set it and forget it’ approach, instead continually reassessing risks and benefits as devices integrate further into daily life. Consequently, successful smart home ecosystems necessitate adaptable privacy controls: interfaces that facilitate regular review and adjustment, empowering individuals to maintain agency over their personal data and reinforcing a sense of ongoing control rather than a single initial configuration.

The convenience of remote access to smart home devices is inextricably linked to heightened security vulnerabilities and privacy risks. Enabling external connections inherently expands the potential attack surface for malicious actors, creating opportunities for unauthorized entry and data breaches. While manufacturers implement encryption and authentication protocols, these measures are not foolproof and can be circumvented through sophisticated exploits or simple misconfigurations. Furthermore, the data transmitted during remote access sessions – including audio, video, and operational commands – becomes susceptible to interception and misuse. Addressing these concerns necessitates robust security practices, including regular software updates, strong password policies, and multi-factor authentication, alongside transparent data handling policies that empower users to understand and control how their information is accessed and utilized.

Sustaining user confidence in increasingly interconnected technologies demands more than simply adhering to existing privacy regulations; it necessitates a commitment to open dialogue and agile adaptation. Research indicates that perceptions of privacy are not static, but rather evolve alongside technological advancements and shifting societal norms. Therefore, organizations must actively solicit feedback, transparently communicate data handling practices, and promptly address emerging concerns. This proactive approach fosters a sense of control and demonstrates a genuine respect for user autonomy, thereby strengthening the crucial bond of trust. Ignoring evolving privacy expectations risks eroding confidence and hindering the widespread adoption of beneficial technologies, while responsiveness cultivates lasting relationships built on mutual understanding and shared values.

A truly user-centric approach to smart home technology demands privacy be considered not as a feature, but as an integral component throughout the entire device lifecycle – from initial design and development, through deployment and regular updates, to eventual decommissioning. This proactive stance moves beyond simply responding to privacy concerns, and instead anticipates evolving user preferences – as demonstrated by recent longitudinal studies revealing the fluidity of individual privacy perceptions. By embedding privacy-enhancing technologies and transparent data handling practices at every stage, manufacturers can cultivate a relationship of trust with consumers, fostering a future where smart home devices seamlessly integrate into daily life without compromising personal autonomy or creating undue security risks. Ultimately, prioritizing privacy isn’t merely about compliance; it’s about building a sustainable and mutually beneficial relationship between technology and the individuals it serves.

The study illuminates a fundamental truth about complex systems: their behavior isn’t dictated by design, but emerges from interaction. It’s a precarious balance, this attempt to predict user needs within a multi-person household. As a maxim usually credited to the logician Carveth Read has it, “It is better to be vaguely right than exactly wrong.” This resonates deeply with the research’s findings; rigid privacy settings, while appearing precise, often fail to accommodate the nuanced preferences of cohabitating users. The ecosystem of a home demands adaptability, a willingness to embrace the ‘vague’ in service of genuine usability and sustained trust. The challenge isn’t to build a privacy solution, but to cultivate one that can evolve alongside the household it serves.

The Looming Shadow of the Shared Home

This exploration of privacy within multi-person robot households reveals, not solutions, but the inevitable expansion of existing tensions. Each accommodation for diverse user preference is, in effect, a formalized agreement on which freedoms will be sacrificed. The study highlights a desire for flexibility, but forgets that flexibility is merely deferred complexity. A system designed to adapt to every user will, eventually, be adapted by the most demanding user – or the most skillfully malicious.

The true challenge isn’t data security, but data negotiation. Households aren’t seeking impenetrable fortresses, but functional compromises. Future work must move beyond asking ‘how do we protect data?’ and instead ask ‘how do we choreograph its inevitable flow, and who arbitrates the resulting conflicts?’. Any architecture promising seamless integration ignores the fundamental truth: order is just a temporary cache between failures, and every shared resource becomes a site of potential contention.

The ‘pet-like’ framing is particularly telling. We imbue these devices with a simulacrum of agency, then act surprised when they reflect our own messy, conflicting desires. The next generation of research won’t focus on controlling these systems, but on understanding how they, in turn, begin to shape the spaces – and the relationships – within them.

Original article: https://arxiv.org/pdf/2602.16975.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-20 08:53