Author: Denis Avetisyan

A new approach combines sensor data and machine learning to accurately recognize human activities while safeguarding patient privacy in resource-constrained healthcare settings.

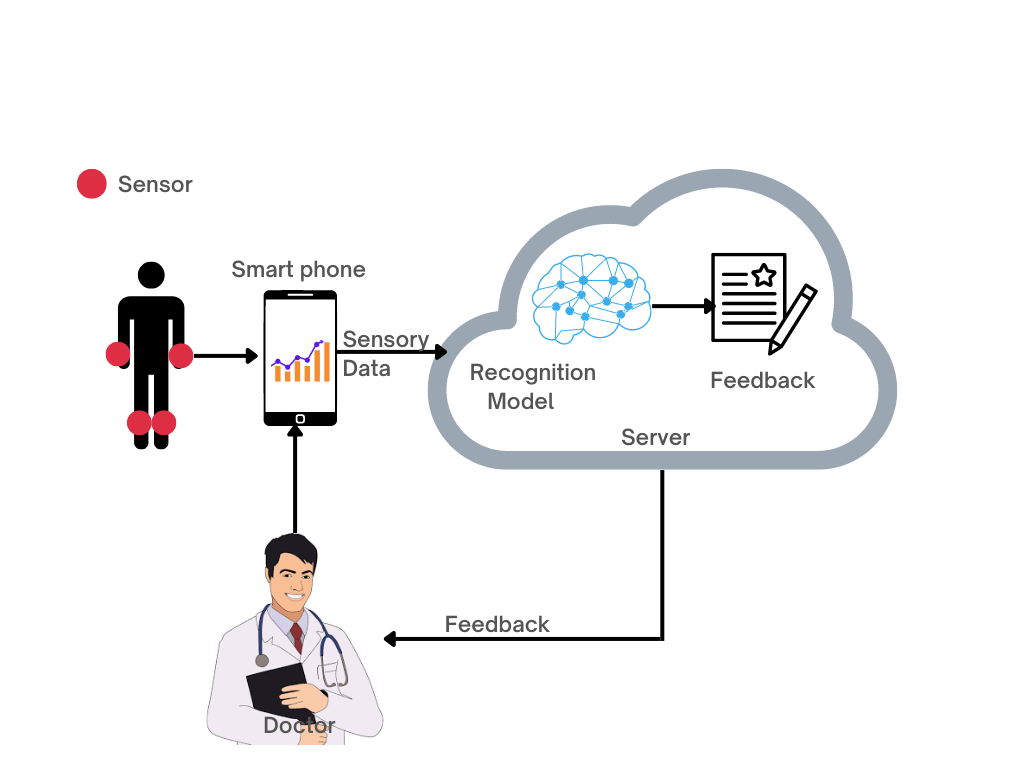

This review details a privacy-preserving human activity recognition system utilizing wearable sensors, Support Tensor Machines, and federated learning techniques for improved accuracy and data security.

Limited access to consistent medical care calls for innovative remote monitoring solutions, particularly for vulnerable populations. This is addressed in ‘Privacy-Preserving Sensor-Based Human Activity Recognition for Low-Resource Healthcare Using Classical Machine Learning’, which proposes a low-cost framework that uses wearable sensors and machine learning for automated human activity recognition. Experimental results show that a Support Tensor Machine (STM) outperforms traditional classifiers, reaching 96.67% test accuracy, by capturing spatio-temporal motion dynamics, while federated learning preserves data privacy. Could such a scalable system fundamentally reshape healthcare delivery in resource-constrained settings and beyond?

Deconstructing Movement: The Why of Human Activity Recognition

The precise recognition of human activity, encompassing everything from fundamental actions like ambulation and rest to more intricate maneuvers such as stair negotiation, underpins a surprisingly broad spectrum of technological advancements. Beyond simple step counting or fitness tracking, accurate activity identification is becoming essential for applications in healthcare – enabling remote patient monitoring, fall detection, and personalized rehabilitation programs. Furthermore, this capability fuels the development of smart home environments that adapt to occupant behavior, enhances security systems through anomaly detection, and informs the design of more intuitive human-computer interfaces. The ability to discern what a person is doing, not just that they are moving, is therefore pivotal for creating technologies that are both responsive and genuinely beneficial to daily life.

Existing methods for identifying human activity often falter when faced with the subtle variations in how individuals perform even the simplest tasks. A person’s gait, for example, is shaped by walking speed, stride length, and even emotional state, producing a highly individual signature that traditional systems struggle to decipher. This variability, compounded by differences in body size, clothing, and environment, calls for more robust systems that can learn from and generalize across diverse movement patterns. Such advances matter because interpreting human motion accurately depends not only on what an action is but also on how it is performed, which demands models that account for the nuanced complexity of real-world behavior.

Accurate classification extends even to seemingly passive states such as ‘Lying’. Distinguishing ‘Lying’ from other low-motion postures, such as sitting, is particularly important for prolonged health assessments and for recognizing subtle changes that may indicate a medical condition. Together with the recognition of dynamic activities, this capability supports health monitoring, fall detection, and behavioral analysis, as well as more intuitive human-computer interfaces, personalized assistive technologies, and environmental monitoring systems that respond intelligently to human behavior, ultimately enhancing safety, wellbeing, and quality of life.

Sensor Webs: The Foundation of Behavioral Mapping

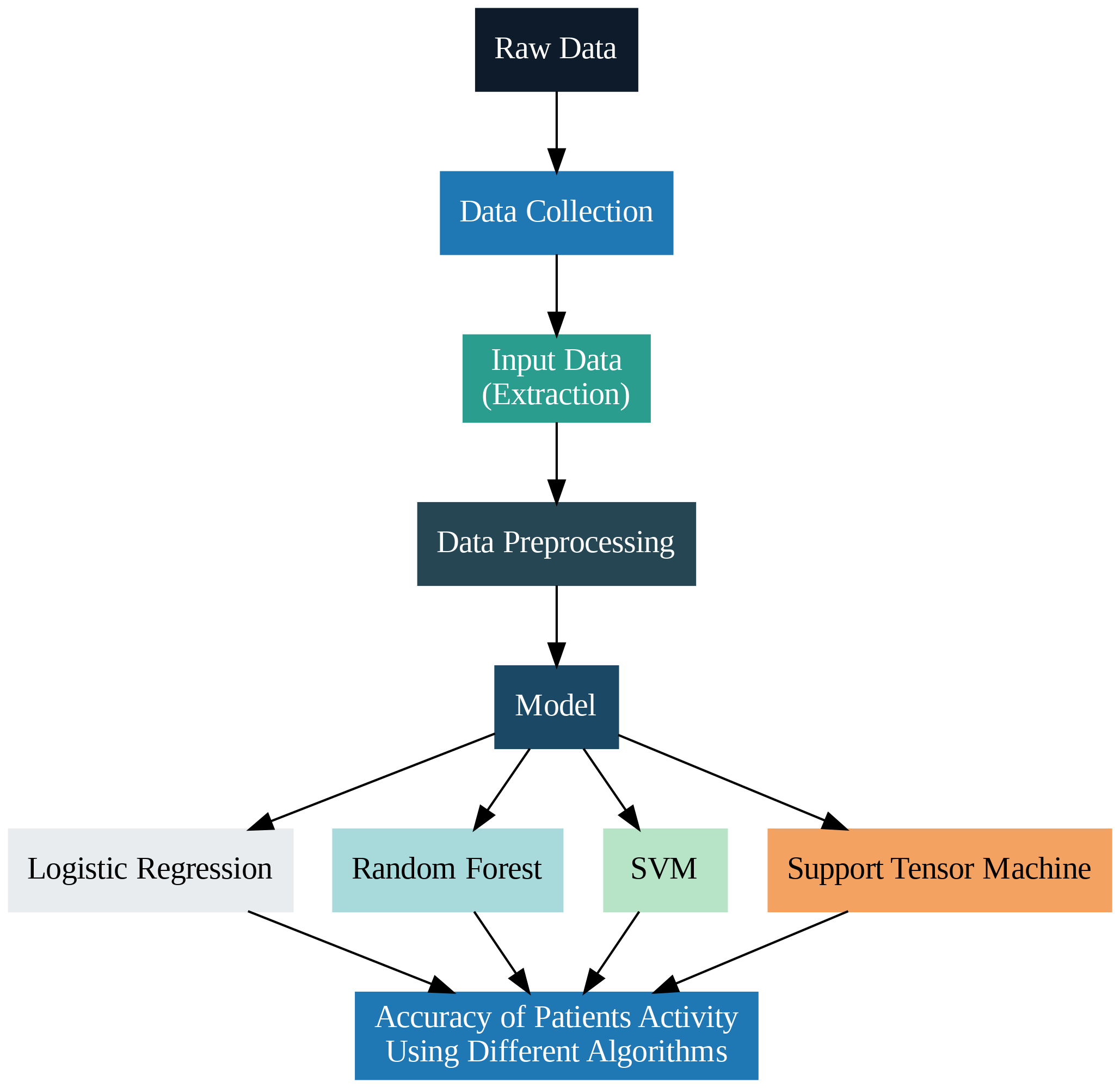

Wearable sensors form the foundational data acquisition component of sensor-based Human Activity Recognition (HAR) systems. These devices, typically including accelerometers and gyroscopes, are strategically positioned on the body – commonly the torso, limbs, or feet – to capture kinematic data reflecting human movement. The portability of these sensors enables data collection in real-world environments, unlike laboratory-based motion capture systems. Furthermore, the decreasing cost of micro-electromechanical systems (MEMS) technology has made wearable sensor solutions economically viable for large-scale deployments and continuous monitoring applications. Common sensor data outputs include tri-axial acceleration and angular velocity, providing a comprehensive representation of body motion for subsequent analysis.
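Before features can be computed, the continuous tri-axial stream is usually cut into fixed-length windows. The sketch below is a minimal illustration, not the paper's pipeline: the function name `sliding_windows` and all window parameters are assumptions. The usage lines assume a 50 Hz stream split into 128-sample windows with 50% overlap, a common HAR convention that the article does not confirm for this study.

```python
from typing import List, Sequence, Tuple

# One tri-axial reading (x, y, z), e.g. from an accelerometer.
Sample = Tuple[float, float, float]


def sliding_windows(stream: Sequence[Sample], size: int, step: int) -> List[List[Sample]]:
    """Split a sensor stream into fixed-length windows that start every `step` samples.

    When step < size, consecutive windows overlap; trailing samples that cannot
    fill a complete window are dropped.
    """
    if size <= 0 or step <= 0:
        raise ValueError("size and step must be positive")
    return [list(stream[start:start + size])
            for start in range(0, len(stream) - size + 1, step)]


# Hypothetical usage: 300 samples (6 s at an assumed 50 Hz), 128-sample windows, 50% overlap.
stream = [(0.0, 0.0, 9.81)] * 300
windows = sliding_windows(stream, size=128, step=64)
print(len(windows))  # 3 windows, starting at samples 0, 64 and 128
```

Dropping the incomplete tail keeps every window the same shape, which matters for classifiers that expect fixed-size inputs.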

Activity classification is the process of interpreting data acquired from wearable sensors to determine the specific human activity being performed. Algorithms employed for this purpose typically utilize machine learning techniques, trained on labeled datasets of sensor readings corresponding to known activities – such as walking, running, sitting, or lying down. These algorithms analyze features extracted from the raw sensor data, including statistical measures like mean, variance, and signal magnitude area, to identify patterns indicative of each activity. The performance of activity classification is commonly evaluated using metrics such as accuracy, precision, recall, and F1-score, comparing the algorithm’s predictions against ground truth labels.
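The statistical features named above can be computed per window in a few lines. This is a sketch under stated assumptions: it uses population variance and one common definition of signal magnitude area (SMA), the mean of |x| + |y| + |z| over the window. The paper's exact feature set and definitions may differ, and `window_features` is a hypothetical name.

```python
from statistics import fmean, pvariance
from typing import Dict, Sequence, Tuple

Sample = Tuple[float, float, float]  # one tri-axial reading (x, y, z)


def window_features(window: Sequence[Sample]) -> Dict[str, float]:
    """Per-axis mean and population variance, plus signal magnitude area (SMA)."""
    if not window:
        raise ValueError("window must contain at least one sample")
    features: Dict[str, float] = {}
    for axis, name in enumerate("xyz"):
        values = [sample[axis] for sample in window]
        features[f"mean_{name}"] = fmean(values)
        features[f"var_{name}"] = pvariance(values)
    # SMA (assumed definition): mean over the window of |x| + |y| + |z|.
    features["sma"] = fmean(abs(x) + abs(y) + abs(z) for x, y, z in window)
    return features


window = [(1.0, 0.0, -1.0), (3.0, 0.0, 1.0)]
print(window_features(window))
```

Each window is reduced to a fixed-length feature vector, which is what classical classifiers such as Logistic Regression or Random Forest consume.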

Data smoothing and refinement in Human Activity Recognition (HAR) systems commonly rely on techniques such as the Moving Average Filter and the Kalman Filter to improve classification accuracy. The Moving Average Filter replaces each sample with the mean of the samples in a sliding window, suppressing noise and short-term fluctuations at the cost of blurring sharp transitions. The Kalman Filter, by contrast, is a recursive estimator: it uses a model of the system to predict the next state, then corrects that prediction with each new measurement, weighting the two by their estimated uncertainties. For linear systems with Gaussian noise, this minimizes the mean squared estimation error. Both filters address the noise inherent in sensor data, which stems from sensor limitations or external interference and can significantly degrade the performance of activity classification algorithms. By improving signal quality, these filters enable more reliable feature extraction and, consequently, more accurate activity identification.
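Both filters can be sketched for a single sensor channel. The moving average here is a trailing window (shorter at the start of the signal), and the Kalman filter assumes the simplest possible model, a locally constant value that drifts as a random walk plus Gaussian measurement noise. The parameters `process_var` and `meas_var` are hypothetical tuning values, not taken from the paper; real IMU pipelines typically use richer state models.

```python
from typing import List, Sequence


def moving_average(signal: Sequence[float], window: int) -> List[float]:
    """Trailing moving average: each output is the mean of the last `window` samples
    (or of all samples seen so far, near the start of the signal)."""
    if window <= 0:
        raise ValueError("window must be positive")
    out: List[float] = []
    for i in range(len(signal)):
        recent = signal[max(0, i - window + 1):i + 1]
        out.append(sum(recent) / len(recent))
    return out


def kalman_1d(signal: Sequence[float], process_var: float, meas_var: float,
              initial_estimate: float = 0.0, initial_var: float = 1.0) -> List[float]:
    """Scalar Kalman filter for an assumed random-walk (locally constant) state model."""
    estimate, var = initial_estimate, initial_var
    out: List[float] = []
    for z in signal:
        # Predict: the state is assumed unchanged, but its uncertainty grows.
        var += process_var
        # Update: the Kalman gain weights the measurement by the relative uncertainties.
        gain = var / (var + meas_var)
        estimate += gain * (z - estimate)
        var *= 1.0 - gain
        out.append(estimate)
    return out


noisy = [1.0, 1.4, 0.7, 1.2, 0.9, 1.1, 0.8, 1.3]
print(moving_average(noisy, window=3))
print(kalman_1d(noisy, process_var=1e-4, meas_var=0.05, initial_estimate=noisy[0]))
```

A small `process_var` relative to `meas_var` makes the Kalman estimate smoother but slower to follow genuine changes, the same trade-off that window length controls in the moving average.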

Algorithmic Decoding: Extracting Intent from Motion

Machine Learning (ML) techniques form the core of contemporary Human Activity Recognition (HAR) systems by automating the identification of patterns in sensor data. Activity recognition traditionally relied on manually engineered rules and thresholds; ML algorithms instead learn directly from labeled datasets, adapting to variations in individual behavior and sensor noise. This data-driven approach removes the need to program activity signatures explicitly and, given sufficiently diverse training data, helps systems generalize to new users and environments. Most HAR systems use supervised learning: algorithms are trained on pre-classified data and then predict activity labels from incoming sensor readings, enabling automatic and robust activity classification.
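To make the supervised-learning loop concrete, the sketch below swaps in one of the simplest possible learners, a nearest-centroid classifier, rather than any model used in the paper. It learns the mean feature vector of each labeled activity during training and assigns a new window to the closest mean at inference time. The feature vectors and labels in the usage lines are made up for illustration.

```python
from math import dist
from typing import Dict, List, Sequence, Tuple

Vector = Tuple[float, ...]


def fit_centroids(features: Sequence[Vector], labels: Sequence[str]) -> Dict[str, Vector]:
    """Training: average the feature vectors of each labeled activity."""
    sums: Dict[str, List[float]] = {}
    counts: Dict[str, int] = {}
    for x, y in zip(features, labels):
        if y not in sums:
            sums[y] = [0.0] * len(x)
            counts[y] = 0
        for i, v in enumerate(x):
            sums[y][i] += v
        counts[y] += 1
    return {y: tuple(s / counts[y] for s in sums[y]) for y in sums}


def predict(centroids: Dict[str, Vector], x: Vector) -> str:
    """Inference: return the activity whose centroid is closest (Euclidean distance)."""
    return min(centroids, key=lambda label: dist(centroids[label], x))


# Hypothetical two-feature windows and labels, invented for illustration.
train_x = [(0.1, 0.01), (0.2, 0.02), (1.5, 0.80), (1.7, 0.90)]
train_y = ["lying", "lying", "walking", "walking"]
model = fit_centroids(train_x, train_y)
print(predict(model, (1.6, 0.7)))  # walking
```

The same fit-then-predict split applies to stronger models; only what is learned from the labeled data changes.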

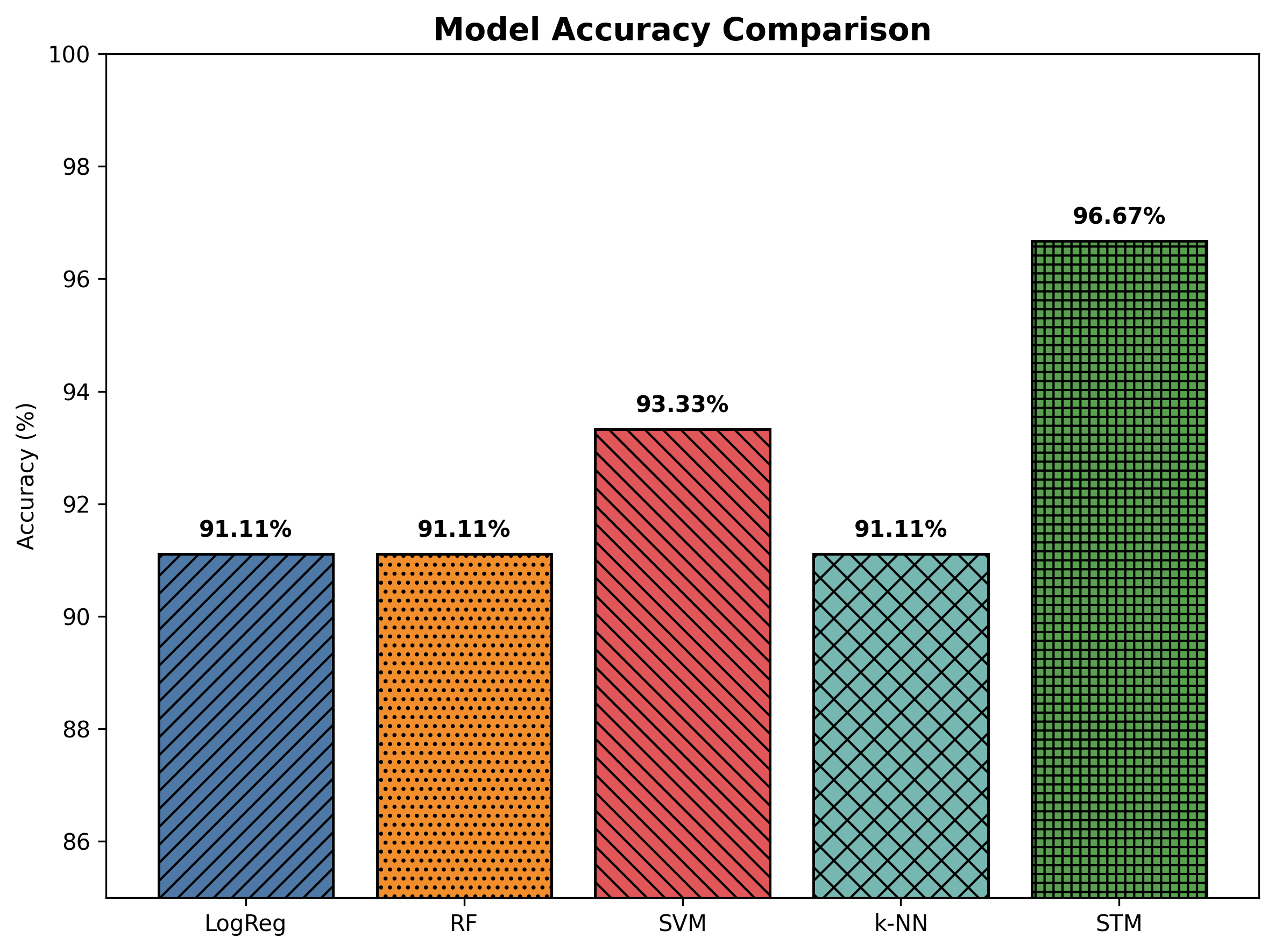

Several machine learning algorithms are commonly used for human activity recognition (HAR), each with different strengths in processing and classifying sensor data. Logistic Regression, a linear model, provides a computationally efficient baseline for classification. Random Forest, an ensemble of many decision trees, is robust and handles high-dimensional data effectively. Support Tensor Machines (STMs), an extension of Support Vector Machines, work directly on tensor representations of the data instead of flattening them into a single vector, which lets them capture relationships across dimensions; they can achieve higher accuracy than conventional SVMs, particularly on multivariate time-series data from inertial measurement units (IMUs).
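The core idea of a Support Tensor Machine can be sketched for second-order tensors (matrices). Instead of flattening a window X (assumed here to have rows = time steps and columns = sensor channels) into one long vector, the classic rank-one formulation learns a decision function f(X) = uᵀXv + b and fits u and v alternately, each step reducing to an ordinary linear SVM. This is a minimal, unoptimized sketch, not the paper's implementation: the inner SVM is a plain full-batch subgradient solver, and all function names, hyperparameters, and the toy data are assumptions.

```python
from typing import List, Sequence, Tuple

Matrix = List[List[float]]  # one window: rows = time steps, columns = sensor channels (assumed layout)


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def matvec(X: Matrix, v: Sequence[float]) -> List[float]:
    return [dot(row, v) for row in X]            # X v


def vecmat(u: Sequence[float], X: Matrix) -> List[float]:
    return [dot(u, col) for col in zip(*X)]      # X^T u


def train_linear_svm(xs: List[List[float]], ys: List[int], lam: float = 0.01,
                     lr: float = 0.05, epochs: int = 300) -> Tuple[List[float], float]:
    """Linear SVM: full-batch subgradient descent on L2-regularized hinge loss (labels +1/-1)."""
    n, w, b = len(xs), [0.0] * len(xs[0]), 0.0
    for _ in range(epochs):
        grad_w, grad_b = [lam * wj for wj in w], 0.0
        for x, y in zip(xs, ys):
            if y * (dot(w, x) + b) < 1:          # margin violated: hinge term is active
                grad_w = [g - y * xj / n for g, xj in zip(grad_w, x)]
                grad_b -= y / n
        w = [wj - lr * g for wj, g in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b


def train_stm(windows: List[Matrix], ys: List[int], rounds: int = 5):
    """Rank-one STM, f(X) = u^T X v + b, fitted by alternating between u and v."""
    if rounds < 1:
        raise ValueError("rounds must be at least 1")
    v = [1.0] * len(windows[0][0])
    for _ in range(rounds):
        u, b = train_linear_svm([matvec(X, v) for X in windows], ys)   # fix v, learn u
        v, b = train_linear_svm([vecmat(u, X) for X in windows], ys)   # fix u, learn v
    return u, v, b


def stm_decision(u: Sequence[float], v: Sequence[float], b: float, X: Matrix) -> float:
    return dot(u, matvec(X, v)) + b


# Toy 2x2 "windows": class +1 concentrates energy at (0, 0), class -1 at (1, 1).
pos = [[[2.0, 0.0], [0.0, 0.0]], [[1.8, 0.1], [0.1, 0.0]], [[2.2, 0.0], [0.0, 0.2]]]
neg = [[[0.0, 0.0], [0.0, 2.0]], [[0.1, 0.0], [0.2, 1.9]], [[0.0, 0.1], [0.0, 2.1]]]
u, v, b = train_stm(pos + neg, [1, 1, 1, -1, -1, -1])
print(stm_decision(u, v, b, [[1.9, 0.0], [0.0, 0.1]]) > 0)  # True
```

The rank-one weight uvᵀ has rows + columns parameters instead of rows × columns, which is one reason tensor methods can generalize well from small datasets.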

Model performance in human activity recognition (HAR) systems is rigorously evaluated with techniques such as k-fold cross-validation, which partitions the data into k subsets (folds), trains on k-1 of them, tests on the remaining one, and repeats until every fold has served once as the test set. The cross-validation accuracy is the percentage of correctly classified instances averaged over all folds, giving a more reliable estimate of performance on unseen data than a single train-test split. Cross-validation does not by itself prevent overfitting, but it exposes it: a model that merely memorizes its training data scores poorly on the held-out folds. Using this methodology, the Support Tensor Machine (STM) achieved a cross-validation accuracy of 98.50%, indicating consistent predictive performance across different data partitions.
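The cross-validation procedure can be sketched independently of any particular classifier. This minimal version assumes the samples are already shuffled and uses contiguous folds; practical HAR work often shuffles, stratifies by activity, or splits by subject so that windows from the same person do not land in both training and test folds. The `fit`/`predict` callables and function names are hypothetical, and the article does not state how many folds the paper used.

```python
from statistics import fmean
from typing import Any, Callable, List, Sequence


def k_fold_indices(n: int, k: int) -> List[List[int]]:
    """Partition indices 0..n-1 into k contiguous folds whose sizes differ by at most one."""
    if not 2 <= k <= n:
        raise ValueError("need 2 <= k <= n")
    folds, start = [], 0
    for i in range(k):
        size = n // k + (1 if i < n % k else 0)
        folds.append(list(range(start, start + size)))
        start += size
    return folds


def cross_val_accuracy(xs: Sequence[Any], ys: Sequence[Any], k: int,
                       fit: Callable[[List[Any], List[Any]], Any],
                       predict: Callable[[Any, Any], Any]) -> float:
    """Train on k-1 folds, score accuracy on the held-out fold, and average over all k folds."""
    scores = []
    for held_out in k_fold_indices(len(xs), k):
        held = set(held_out)
        train = [i for i in range(len(xs)) if i not in held]
        model = fit([xs[i] for i in train], [ys[i] for i in train])
        correct = sum(predict(model, xs[i]) == ys[i] for i in held_out)
        scores.append(correct / len(held_out))
    return fmean(scores)


# Usage with a deliberately weak model that always predicts label 0.
xs = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0]
ys = [0, 0, 0, 1, 1, 1]
print(cross_val_accuracy(xs, ys, k=3, fit=lambda X, Y: None, predict=lambda m, x: 0))  # 0.5
```

The per-fold scores here are 1.0, 0.5 and 0.0; their spread is itself informative, since a large spread signals that the estimate depends heavily on which data ends up in the test fold.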

The Weighted Support Tensor Machine (WSTM) demonstrated superior performance in human activity recognition, achieving a test accuracy of 96.67%. This result surpasses the accuracy attained by both Logistic Regression, which scored 91.11%, and Support Vector Machines (SVM), which achieved 93.33% under the same testing conditions. The WSTM’s improved performance indicates its effectiveness in discerning patterns within activity data compared to these established machine learning algorithms, suggesting a potential benefit for applications requiring high-precision activity classification.

Beyond Prediction: Implications for Life and Wellbeing

Human Activity Recognition technology is poised to revolutionize healthcare through proactive and personalized monitoring systems. These systems move beyond simple step counting, offering the potential to detect falls in real-time, triggering immediate alerts to caregivers or emergency services – a critical feature for elderly or mobility-impaired individuals. Beyond immediate safety, HAR facilitates continuous patient monitoring, tracking activity levels and identifying deviations from established baselines that could indicate deteriorating health or the need for intervention. Furthermore, the technology supports rehabilitation efforts by providing objective data on patient progress, allowing therapists to tailor exercise programs and track recovery with greater precision, ultimately improving outcomes and quality of life.

Human Activity Recognition systems are rapidly becoming foundational to the development of truly intelligent smart home environments. These systems move beyond simple automation, enabling homes to proactively respond to the needs and behaviors of their occupants. By accurately identifying activities like cooking, relaxing, or sleeping, a smart home can adjust lighting, temperature, and entertainment systems to optimize comfort and energy efficiency. Furthermore, HAR facilitates personalized assistance; for example, detecting a fall or prolonged inactivity could trigger automated alerts to caregivers or emergency services, enhancing safety and providing peace of mind. This level of responsiveness transforms the home from a static structure into a dynamic, supportive environment tailored to the well-being of its inhabitants.

Recent advances in machine learning also address a critical challenge in human activity recognition: data privacy. Federated learning (FL) enables collaborative model training across multiple devices or institutions without exchanging sensitive user data. Each device trains a local model, and only model updates, not the raw data, are shared with a central server for aggregation. This decentralized approach reduces the exposure of raw sensor data, although model updates can still leak some information about the data they were trained on. Privacy need not come at the expense of accuracy: a reported implementation attained a federated model accuracy of 98.69%, suggesting that FL can support broader applications of HAR while respecting strict privacy requirements.
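The aggregation step can be sketched with federated averaging (FedAvg), a widely used scheme in which the server averages client weights in proportion to each client's number of training samples. The article does not say which aggregation rule the paper uses, so FedAvg here is an assumption, and `local_train` is a hypothetical stand-in for whatever on-device training each client runs.

```python
from typing import Callable, List, Sequence, Tuple

Weights = List[float]


def federated_average(updates: Sequence[Tuple[Weights, int]]) -> Weights:
    """Average client weight vectors, weighting each client by its local sample count."""
    if not updates:
        raise ValueError("need at least one client update")
    total = sum(n for _, n in updates)
    if total <= 0:
        raise ValueError("total sample count must be positive")
    dim = len(updates[0][0])
    aggregate = [0.0] * dim
    for weights, n in updates:
        if len(weights) != dim:
            raise ValueError("all clients must share the same model shape")
        for j, w in enumerate(weights):
            aggregate[j] += w * n / total
    return aggregate


def federated_round(global_weights: Weights, client_datasets: Sequence[Sequence],
                    local_train: Callable[[Weights, Sequence], Weights]) -> Weights:
    """One communication round: clients train locally on their own data and the
    server receives only the resulting weights and sample counts, never the raw data."""
    updates = [(local_train(list(global_weights), data), len(data))
               for data in client_datasets]
    return federated_average(updates)


print(federated_average([([1.0, 2.0], 1), ([3.0, 4.0], 3)]))  # [2.5, 3.5]
```

Weighting by sample count keeps a client with very little data from pulling the global model as strongly as one with many recordings; in practice several rounds are run, each starting from the previous aggregate.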

Per-class results highlight how the Support Tensor Machine (STM) handles nuanced human activities. The STM achieved higher recall on complex movements: it correctly identified 96% of ‘Walking Upstairs’ instances, compared with 93% for Support Vector Machines (SVM), and 95% of ‘Walking Downstairs’ instances, compared with 87% for the SVM. These results suggest that the STM’s capacity to integrate spatial and temporal information provides a more robust framework for interpreting the intricacies of human motion, potentially leading to more reliable applications in areas such as health monitoring and assisted living.
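Recall for a class is the fraction of that class's true instances that the model labels correctly, TP / (TP + FN); a 96% recall for ‘Walking Upstairs’ means that 96 of every 100 true upstairs windows were recognized as such. A minimal sketch of the per-class computation follows; the labels in the usage lines are invented, not the paper's data.

```python
from collections import Counter
from typing import Dict, Hashable, Sequence


def per_class_recall(y_true: Sequence[Hashable],
                     y_pred: Sequence[Hashable]) -> Dict[Hashable, float]:
    """Recall per class: correctly predicted instances / all true instances of that class."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    support = Counter(y_true)                                       # TP + FN per class
    hits = Counter(t for t, p in zip(y_true, y_pred) if t == p)     # TP per class
    return {label: hits[label] / count for label, count in support.items()}


y_true = ["upstairs"] * 4 + ["downstairs"] * 2
y_pred = ["upstairs", "upstairs", "upstairs", "downstairs", "downstairs", "upstairs"]
print(per_class_recall(y_true, y_pred))  # {'upstairs': 0.75, 'downstairs': 0.5}
```

Per-class recall exposes confusions, such as upstairs versus downstairs walking, that a single overall accuracy figure can hide.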

The pursuit of accurate human activity recognition, as detailed in this study, demands a willingness to challenge existing methodologies. This research does not simply accept the limitations of traditional machine learning; it works around them with techniques such as Support Tensor Machines and federated learning. As Grace Hopper once said, “It’s easier to ask forgiveness than it is to get permission.” The sentiment captures the spirit of inquiry shown here: a drive to explore novel approaches, even when that means departing from established norms, to achieve robust and privacy-preserving analysis of sensor data. The system’s focus on low-resource healthcare settings reinforces this pragmatic, results-oriented approach, which prioritizes functionality over rigid adherence to convention.

What Lies Beyond?

The pursuit of recognizing human activity from sensor data, while seemingly solved at a superficial level, consistently reveals deeper architectural challenges. This work, employing Support Tensor Machines and federated learning, represents not an endpoint, but a carefully constructed perturbation. The achieved accuracy, impressive as it is, merely sharpens the edges of the unresolved questions – questions regarding the true dimensionality of ‘activity’ itself. Is ‘walking’ a singular state, or a complex manifold woven from subtle variations in gait, intention, and environmental context? The system performs well, yet performance is a distraction from understanding.

The focus on privacy, laudable as it is, implicitly acknowledges a fundamental tension. To truly know an individual’s activity is, inevitably, to relinquish a degree of their privacy. Federated learning offers a palliative, not a cure. Future work must interrogate the very premise of ‘privacy-preserving’ machine learning – is it a technical problem to be solved, or a philosophical boundary to be continually renegotiated? The real innovation will not lie in concealing data, but in fundamentally altering the relationship between observation and knowledge.

Ultimately, this research highlights the inherent instability of categorization. ‘Walking,’ ‘sitting,’ ‘stair climbing’ are convenient labels, but reality is fluid. The next phase demands systems capable of not merely recognizing activities, but of anticipating them, contextualizing them, and, perhaps, even questioning their definition. Chaos is not an enemy, but a mirror of architecture reflecting unseen connections.

Original article: https://arxiv.org/pdf/2601.22265.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/


2026-02-02 23:44