Author: Denis Avetisyan

A new method helps robots better understand images and language by restoring crucial spatial relationships between objects, leading to more effective manipulation.

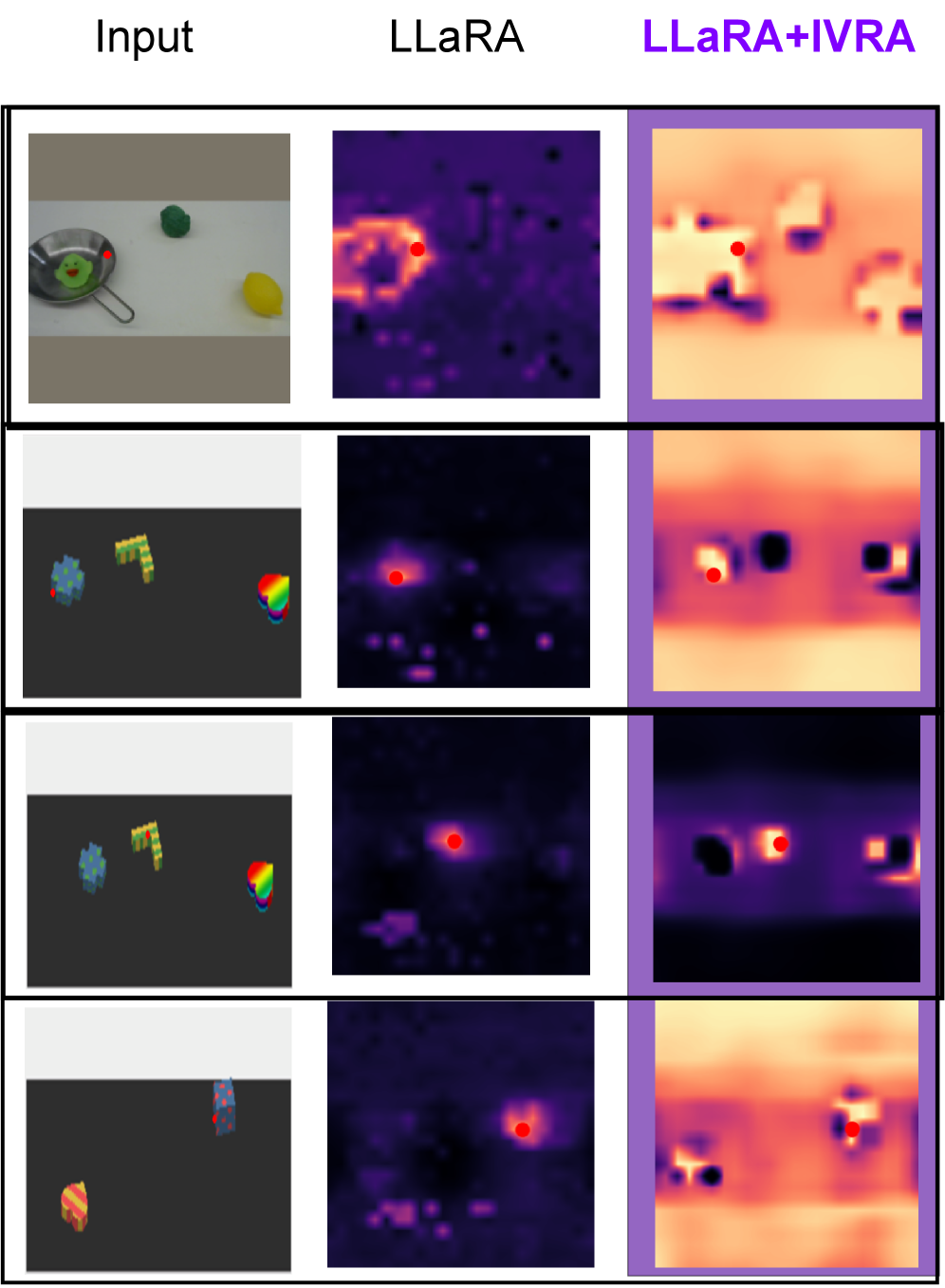

IVRA improves robot action policy by injecting training-free affinity hints derived from the vision encoder to enhance visual-token relations.

Flattening image patches into token sequences for vision-language-action (VLA) models often weakens crucial 2D spatial understanding needed for precise robot manipulation. To address this, we introduce IVRA-Improving Visual-Token Relations for Robot Action Policy with Training-Free Hint-Based Guidance-a lightweight, training-free method that enhances spatial reasoning by selectively injecting affinity hints from the vision encoder into the language model. This inference-time intervention realigns visual-token interactions, improving performance across diverse VLA architectures and benchmarks-including gains even when baseline accuracy is high. Can this simple yet effective approach unlock more robust and geometrically-aware robot action policies in increasingly complex environments?

The Erosion of Spatial Memory in Vision-Language Systems

Vision-Language-Action (VLA) models represent a pivotal advancement in the field of robotic control, promising machines the ability to interpret natural language instructions and execute them within a visual environment. However, a fundamental challenge arises from the standard practice of converting visual input – images – into a flattened sequence of tokens for processing. This process, while computationally efficient, critically discards the inherent spatial relationships between objects within the scene. Consequently, the model loses vital contextual information regarding object positions, orientations, and relative distances, hindering its capacity for accurate object manipulation and complex reasoning – abilities essential for successful navigation and interaction within real-world environments. The erosion of spatial understanding therefore presents a significant bottleneck in the development of truly intelligent and adaptable robotic systems.

The diminished spatial understanding within vision-language models directly impedes their capacity for precise object manipulation and sophisticated scene reasoning, ultimately affecting performance in embodied artificial intelligence tasks. When a model fails to accurately perceive the relationships between objects – their positions, orientations, and relative distances – it struggles to plan and execute actions within a physical environment. This deficiency manifests as errors in grasping, placing, or navigating around obstacles, as the model lacks the necessary contextual awareness to predict the outcomes of its actions. Consequently, tasks requiring dexterity, foresight, or an understanding of spatial constraints become significantly more challenging, limiting the potential of these models in real-world robotic applications and hindering the development of truly intelligent, embodied agents.

The prevalent use of global pooling techniques within vision-language models inadvertently erodes their capacity to interpret spatial relationships between objects. Global pooling, designed to condense visual information into a fixed-length vector, effectively discards crucial positional data – the very information that defines how objects relate to one another within a scene. This simplification, while computationally efficient, results in the model treating all visual features as equally important and independent, hindering its ability to discern, for example, whether an object is on top of, beside, or behind another. Consequently, tasks requiring precise object manipulation or complex reasoning about scene layouts – fundamental to embodied artificial intelligence – suffer significant performance degradation, as the model struggles to accurately contextualize objects within their environment.

Restoring Spatial Context: The Mechanics of IVRA

IVRA (Injection-based Restoration of Affinity) is a training-free technique designed to mitigate spatial loss in Vision-Language Action (VLA) models. Spatial loss occurs when the model fails to adequately represent the relationships between different regions of an image, hindering its ability to understand and interpret visual information. IVRA addresses this by directly injecting spatial context into the VLA model without requiring any parameter updates or additional training. This is achieved by leveraging information about the relative positions and relationships of image patches, thereby improving the model’s understanding of the spatial arrangement of visual elements and enhancing performance on tasks requiring spatial reasoning.

IVRA utilizes an affinity map generated from the visual language model’s encoder to quantify the relationships between different image patches. This map represents the degree of local similarity; higher values indicate stronger correlations between patches based on feature embeddings extracted by the encoder. Specifically, the affinity map is computed by measuring the cosine similarity between the embeddings of each patch pair, effectively identifying neighboring regions with shared visual characteristics. The resulting map serves as a spatial prior, informing the weighted pooling mechanism to prioritize information from highly correlated patches during context restoration.

The core of IVRA’s spatial context restoration lies in a weighted average pooling mechanism. Utilizing the derived affinity map, the system calculates weights representing the similarity between each visual token and its neighboring patches. These weights are then applied during a pooling operation, effectively blending the token’s features with contextual information from adjacent regions. This process allows the model to propagate spatial relationships, mitigating information loss inherent in Vision Language Action (VLA) models and resulting in demonstrated performance improvements of up to 30% on evaluated real-world robotic tasks.

Empirical Validation: Performance Gains on Embodied AI Benchmarks

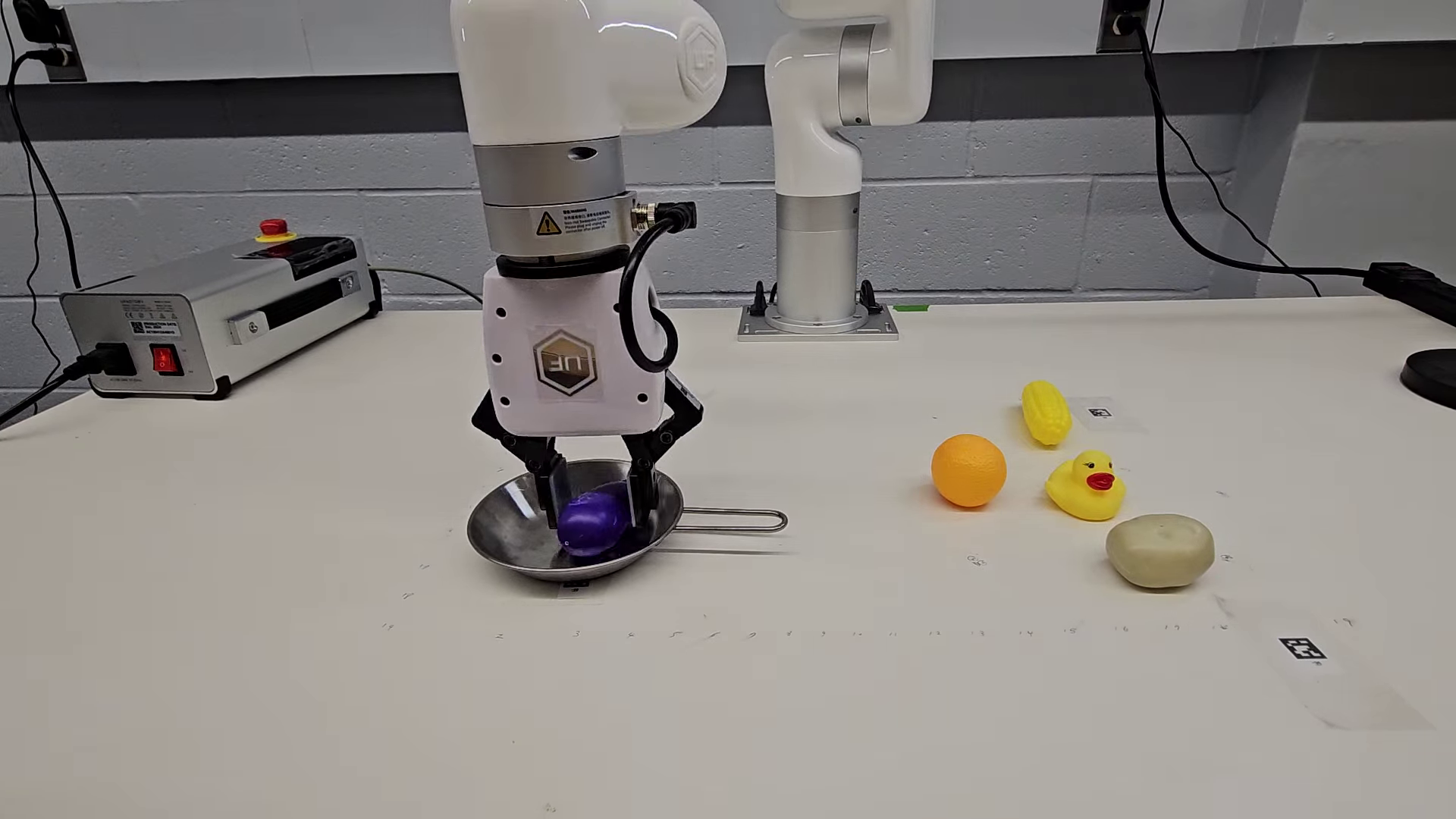

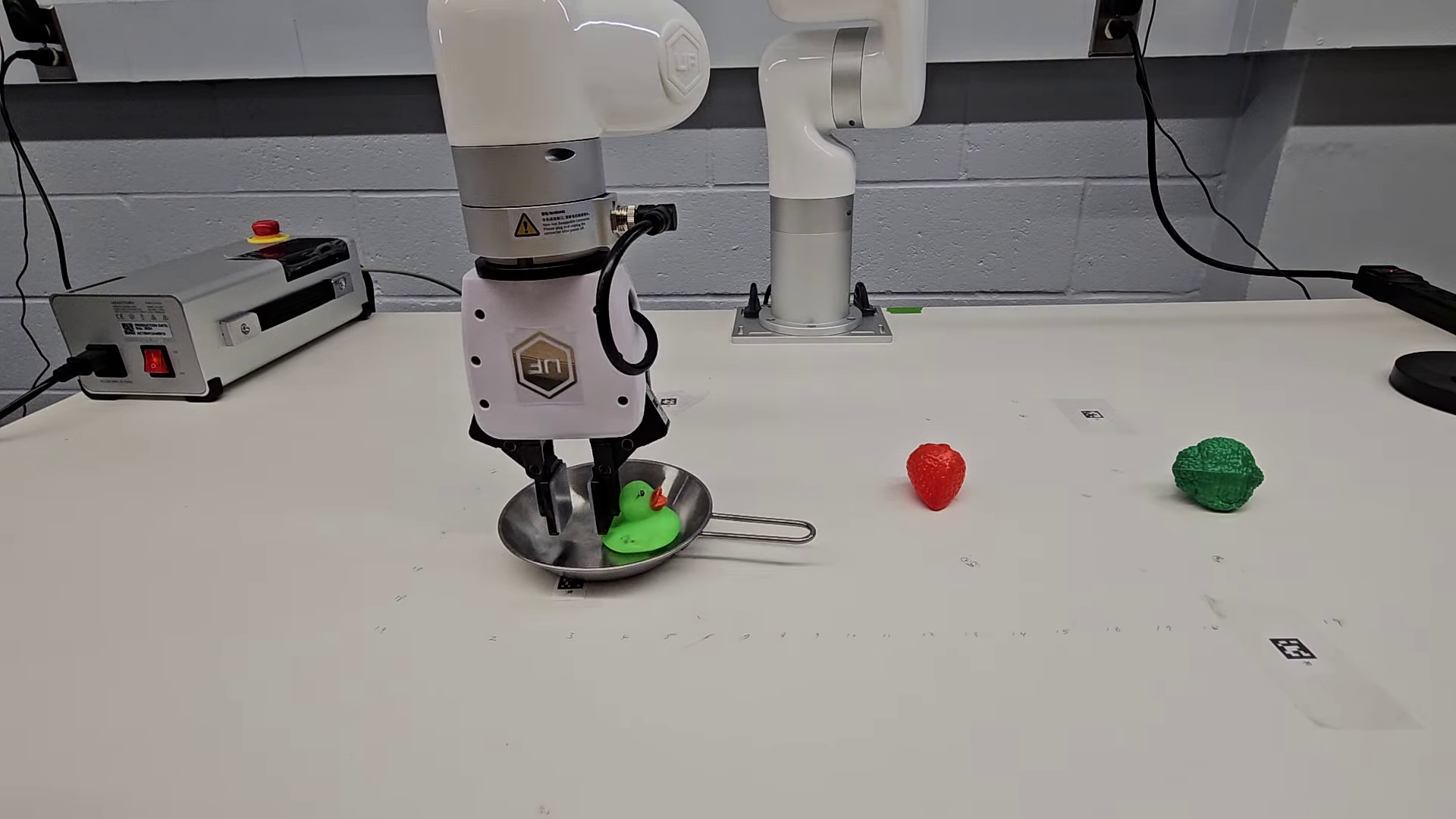

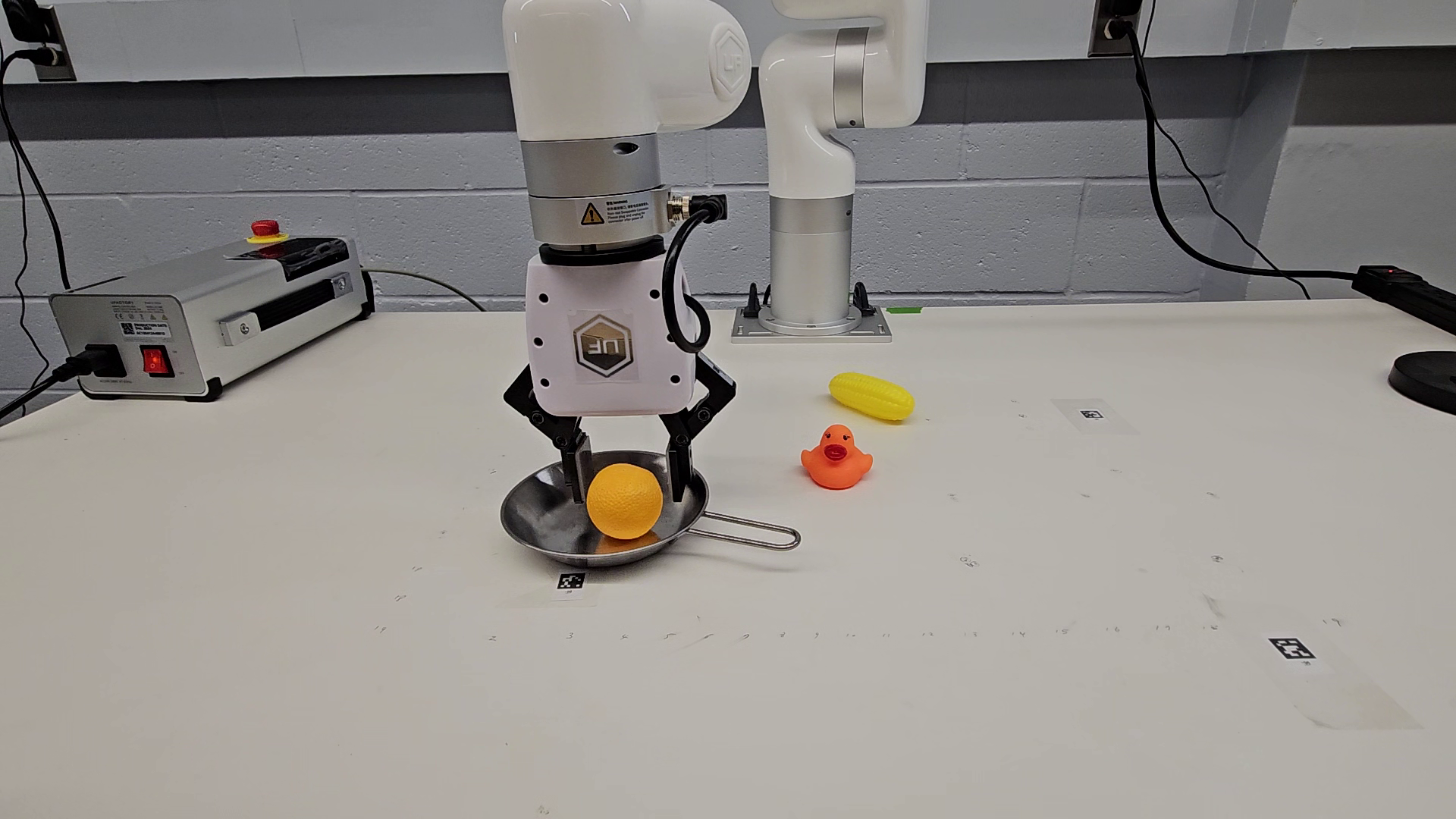

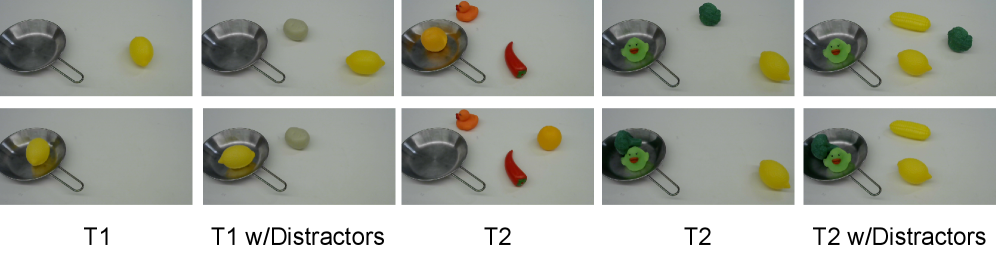

Quantitative evaluation demonstrates that IVRA achieves improved performance on established embodied AI benchmarks. Specifically, IVRA exhibits a 5.0% absolute increase in success rate on the VIMA benchmark when compared to the OpenVLA baseline. Furthermore, performance on the LIBERO benchmark improves by an average of 1.1% using IVRA, indicating a consistent advantage across diverse manipulation tasks. These gains are based on standardized evaluation protocols for both VIMA and LIBERO, assessing the model’s ability to successfully complete specified manipulation objectives.

Within the VIMA benchmark suite, the IVRA model demonstrated enhanced capabilities in object manipulation tasks specifically focused on precise placement. Quantitative results indicate a 3.5% improvement in success rate on Object Placement tasks when compared to the OpenVLA baseline. This improvement suggests that IVRA exhibits superior performance in scenarios demanding accurate spatial reasoning and fine motor control for manipulating objects within a defined environment. The observed gains are directly attributable to the model’s ability to more effectively interpret and execute instructions related to positioning objects according to specific criteria.

IVRA demonstrates enhanced performance in open-vocabulary detection tasks through integration with RegionCLIP and Grounding DINO. Empirical results show a 30% improvement in success rate on the Real World Object Color Matching task compared to the LLaRA baseline. Similarly, IVRA achieves a 30% higher success rate on the Cluttered Localization task when contrasted with LLaRA, indicating a substantial advancement in the model’s ability to identify and locate objects in complex, real-world environments using open-vocabulary approaches.

Towards Robust Intelligence: Implications and Future Directions

Recent advancements in embodied artificial intelligence leverage visual language models (VLMs), but their performance often degrades in unpredictable, real-world settings. To address this, researchers have developed In-View Representation Alignment (IVRA), a technique that explicitly preserves spatial context during processing. This allows VLMs to maintain a more accurate understanding of an agent’s surroundings, leading to significantly improved robustness in complex environments. Notably, IVRA achieves this enhanced performance with a minimal computational overhead, adding only a 3% increase in latency – a crucial factor for real-time applications like robotics and autonomous navigation. By anchoring language understanding to a stable spatial framework, IVRA represents a key step towards creating AI agents capable of reliably operating and adapting within the inherent complexities of the physical world.

The development of embodied artificial intelligence stands to benefit significantly from agents capable of generalizing beyond their training data, and recent advancements are laying the groundwork for precisely that. By improving an agent’s ability to understand and react to previously unseen environments and circumstances, researchers are moving beyond systems limited to narrow, pre-defined tasks. This enhanced adaptability isn’t simply about recognizing new objects; it’s about constructing a flexible internal model of the world that allows for robust performance even when faced with unexpected configurations or challenges. Consequently, future AI systems promise a greater capacity to operate effectively in dynamic, real-world settings, opening doors to applications ranging from autonomous robotics to personalized assistance and beyond, all while demanding less specialized training for each new environment.

Researchers anticipate a significant leap in embodied intelligence through the synergistic combination of Interval Visual Representation Augmentation (IVRA) with models like RT-2. This integration aims to move beyond simply perceiving an environment to actively reasoning about it and generating more effective action plans. By leveraging RT-2’s capabilities in translating visual inputs into robotic commands, and augmenting these inputs with IVRA’s preserved spatial context, future systems promise improved adaptability and robustness. This approach doesn’t merely enhance action generation; it allows agents to anticipate consequences, navigate complex scenarios, and ultimately exhibit a more human-like understanding of their surroundings, fostering truly generalizable intelligence in robotic systems.

The presented work acknowledges an inherent fragility within complex systems, mirroring the inevitable decay observed in natural processes. Much like erosion subtly alters landscapes over time, ‘technical debt’ accumulates in robotic systems, diminishing performance. This research, through IVRA, attempts to mitigate this decay by reinforcing the vital link between visual perception and action-restoring spatial reasoning where it might otherwise degrade. As Bertrand Russell observed, “The difficulty lies not so much in developing new ideas as in escaping from old ones.” IVRA offers a pathway to escape reliance on computationally expensive retraining, instead leveraging existing visual information to achieve robust action policies, much like restoring an aging structure rather than rebuilding it entirely.

What Lies Ahead?

The introduction of IVRA represents a pragmatic step, a localized repair within the inevitable entropy of vision-language-action systems. The method skillfully addresses the loss of spatial reasoning-a common symptom of encoding-but it does not, and cannot, resolve the fundamental disconnect between continuous perception and discrete action. Versioning, in this context, is a form of memory, patching deficits as they emerge. The true challenge resides not in restoring what is lost, but in designing systems resilient to loss from the outset.

Future iterations will likely focus on the propagation of these ‘affinity hints.’ Currently, the method operates as a corrective measure. A more elegant solution might involve integrating such spatial awareness directly into the encoding process – a proactive rather than reactive approach. This necessitates revisiting the foundational assumption that effective representation can be achieved through purely perceptual means; perhaps a degree of symbolic grounding is inescapable.

Ultimately, the arrow of time always points toward refactoring. Each improvement, each restored spatial relationship, is merely a postponement of inevitable decay. The field must move beyond incremental gains and confront the larger question: can a truly robust vision-language-action system be built, or are these endeavors destined to be perpetually optimized approximations of an unattainable ideal?

Original article: https://arxiv.org/pdf/2601.16207.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

See also:

- MLBB x KOF Encore 2026: List of bingo patterns

- Married At First Sight’s worst-kept secret revealed! Brook Crompton exposed as bride at centre of explosive ex-lover scandal and pregnancy bombshell

- Honkai: Star Rail Version 4.0 Phase One Character Banners: Who should you pull

- Top 10 Super Bowl Commercials of 2026: Ranked and Reviewed

- Gold Rate Forecast

- ‘Reacher’s Pile of Source Material Presents a Strange Problem

- Lana Del Rey and swamp-guide husband Jeremy Dufrene are mobbed by fans as they leave their New York hotel after Fashion Week appearance

- Meme Coins Drama: February Week 2 You Won’t Believe

- eFootball 2026 Starter Set Gabriel Batistuta pack review

- Olivia Dean says ‘I’m a granddaughter of an immigrant’ and insists ‘those people deserve to be celebrated’ in impassioned speech as she wins Best New Artist at 2026 Grammys

2026-01-24 05:27