Author: Denis Avetisyan

A new framework enables robots and humans to safely and efficiently share workspaces by prioritizing conflict resolution through decentralized control.

This review details a distributed Virtual Model Control approach for scalable human-robot collaboration, emphasizing agent-agnostic design and safety-aware force balance.

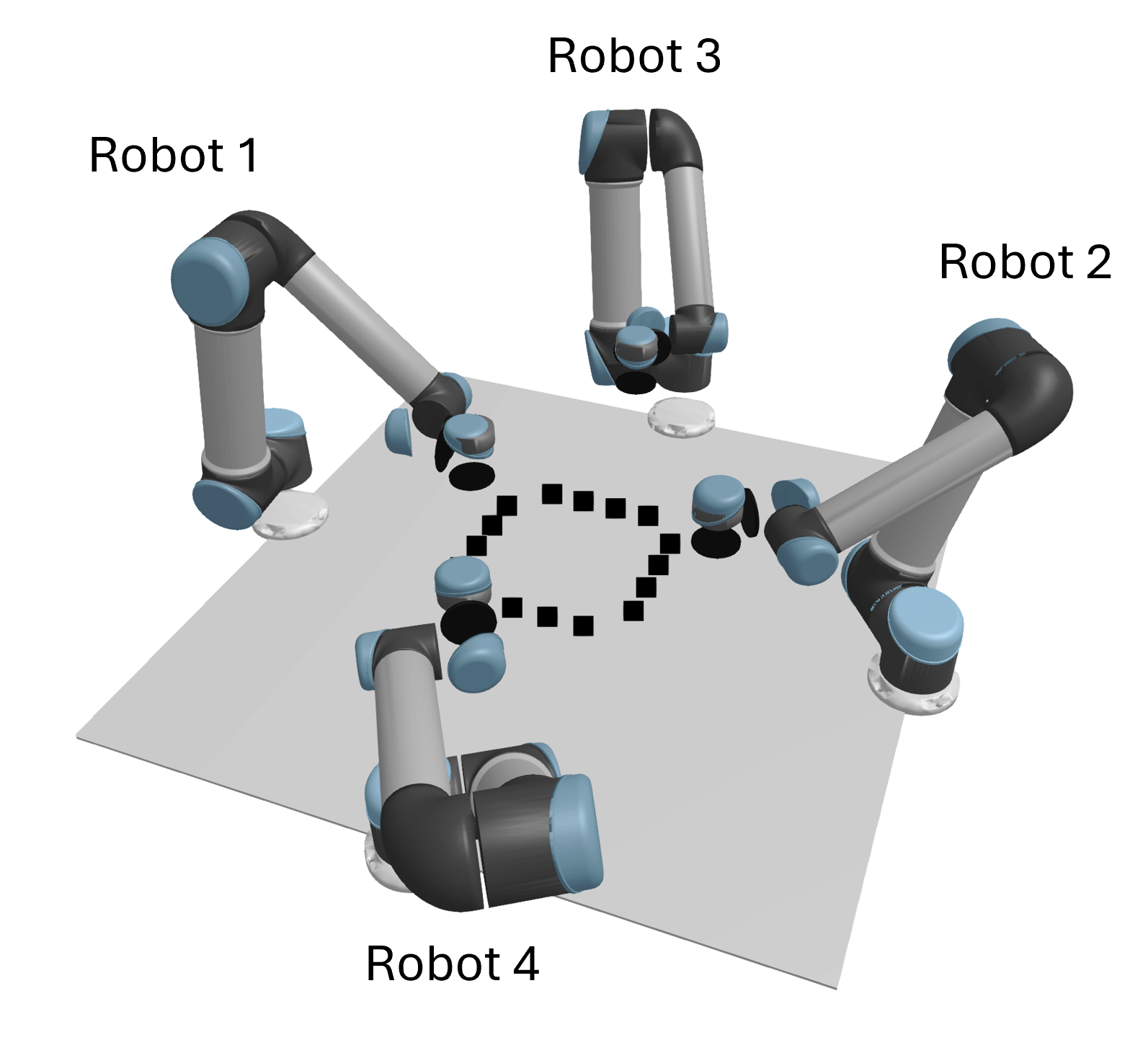

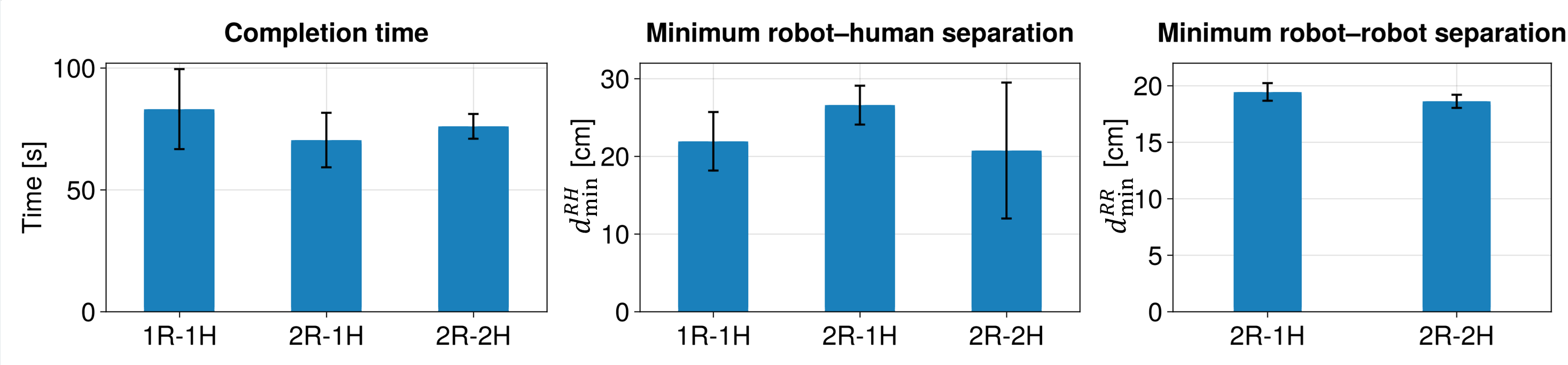

Achieving truly scalable and safe human-robot collaboration remains a challenge due to the complexities of shared workspaces and potential for deadlock. This paper introduces ‘Distributed Virtual Model Control for Scalable Human-Robot Collaboration in Shared Workspace’, a novel decentralized framework leveraging Virtual Model Control to enable intuitive interaction and conflict resolution between humans and robots. By embedding agents within a virtual component-shaped environment and utilizing force-based negotiation, the method achieves deadlock-free operation with up to two robots and two humans, and scales to four robots in simulation, while maintaining consistent inter-agent separation. Could this approach unlock new possibilities for flexible and robust collaborative robotics in complex, real-world environments?

The Inevitable Friction of Shared Space

The evolving landscape of automation witnesses a significant shift: robots are no longer confined to isolated industrial cells but are increasingly integrated into human-centric environments. This burgeoning trend, driven by demands for enhanced productivity and flexibility, necessitates a fundamental rethinking of robotic safety. Traditional approaches, predicated on physical barriers and emergency stops, often prove cumbersome and impede the very collaboration they aim to enable. Consequently, researchers are actively developing novel safety paradigms – encompassing advanced sensing, predictive algorithms, and compliant robot designs – to allow for truly safe and seamless interaction between humans and robots in shared workspaces. This transition demands not just technological innovation, but also a shift in regulatory frameworks and a focus on human-robot trust and acceptance.

Historically, robotic safety has relied on rigid barriers and emergency stops – methods effective at preventing harm, but also severely limiting a robot’s responsiveness and ability to work with humans. These conventional approaches often necessitate large safety zones, restricting the robot’s range of motion and hindering its potential for intricate, collaborative tasks. Consequently, robots have been largely confined to isolated, automated cells, preventing the seamless integration needed for truly shared workspaces. This reliance on static safety measures creates a fundamental tension: maximizing safety often means sacrificing the agility and adaptability essential for effective human-robot collaboration, prompting a shift towards more dynamic and intelligent safety systems.

Preventing collisions and resolving conflicts in shared workspaces represents a significant hurdle in advancing human-robot collaboration. As robots move beyond isolated industrial cages and into environments shared with people, the need for dynamic, responsive safety systems becomes paramount. Traditional methods, such as emergency stops and physical barriers, often compromise a robot’s efficiency and ability to seamlessly assist humans. Instead, researchers are focusing on sophisticated sensing technologies – including vision systems, force sensors, and predictive algorithms – to anticipate potential collisions and proactively adjust robot trajectories. This involves not only detecting obstacles but also understanding human intent and predicting their movements, allowing robots to navigate complex environments while maintaining a safe distance and responding to unexpected changes in real-time. Ultimately, creating truly collaborative robots requires a shift from purely reactive safety measures to proactive, intelligent systems capable of resolving conflicts before they escalate.

The Virtual Loom: Weaving a Predictive Safety Net

Virtual Model Control (VMC) establishes a unified architecture for coordinating human and robotic movements by employing a virtual model representing both the human operator and the robot. This model predicts the consequences of intended motions, enabling pre-emptive adjustments to avoid kinematic singularities or dynamic instabilities. Specifically, VMC utilizes a dynamic model of the human-robot system, incorporating representations of both entities’ physical properties – mass, inertia, and joint limits – to simulate interactions before they occur. This predictive capability allows for the computation of control actions that ensure smooth, coordinated movement and facilitates tasks requiring shared control, such as collaborative assembly or assistance with manipulation. The framework supports a range of control strategies, including impedance control and admittance control, all operating within the predictive environment of the virtual model.

Virtual Model Control (VMC) achieves proactive collision avoidance and conflict resolution by constructing a dynamic model of the human operator and robot, and simulating interaction forces within that model. This allows the system to predict potential contact scenarios before they occur in the physical world. By representing forces – such as those arising from intended movements or unexpected disturbances – as virtual forces acting on the modeled entities, VMC can preemptively adjust robot trajectories or apply assistive forces to guide the human, preventing collisions and resolving conflicting intentions. This predictive capability extends beyond simple obstacle avoidance; VMC can also manage scenarios involving shared control, where both the human and robot contribute to a task, by resolving conflicting inputs before they manifest as physical discrepancies.

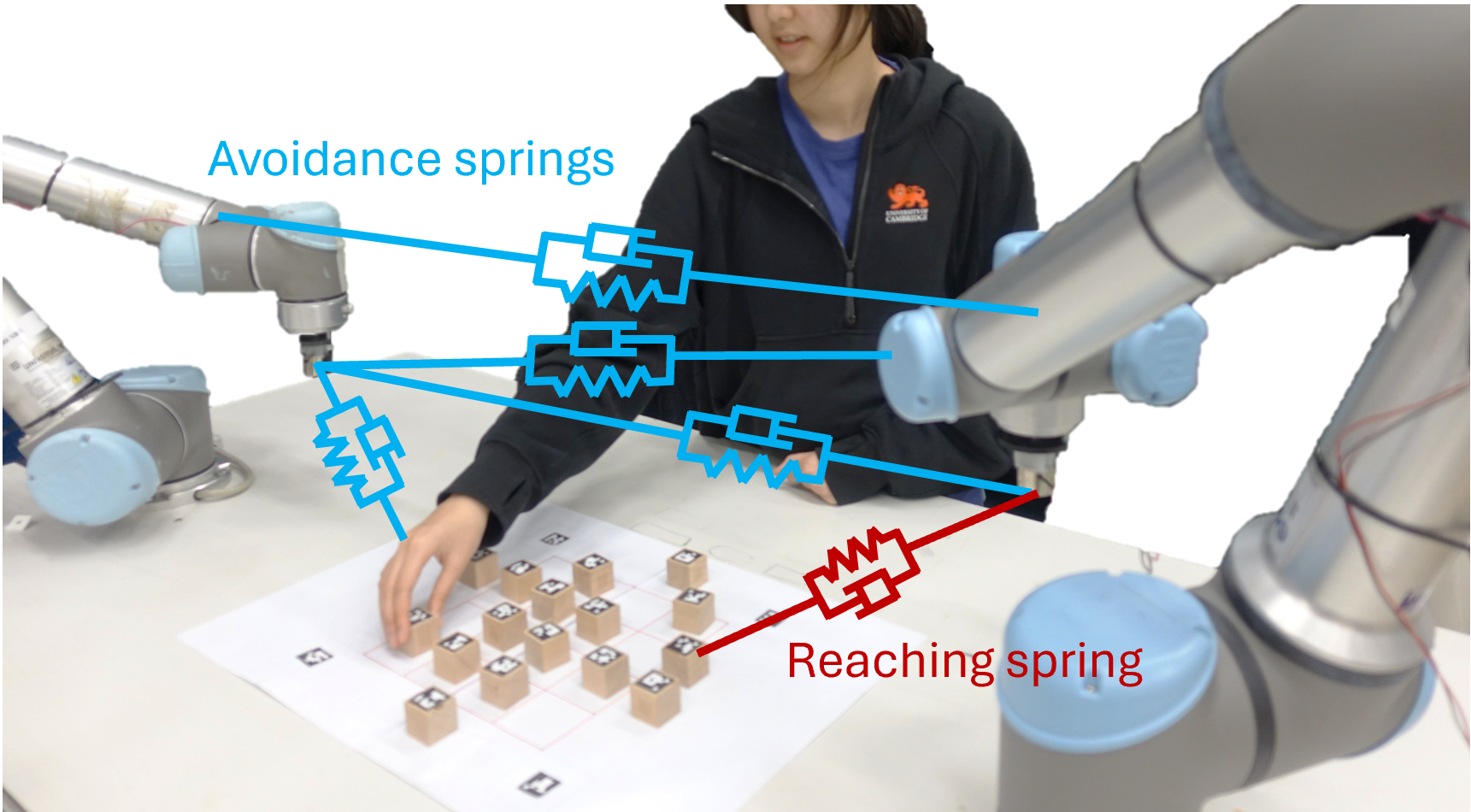

Virtual Model Control utilizes virtual springs and dampers to govern the interaction between a robot and its environment, or between a robot and a human. These virtual elements are mathematical representations of physical properties, allowing for the simulation of force feedback without requiring physical contact. Specifically, a virtual spring produces a restoring force proportional to displacement, [latex]F = -k \cdot x[/latex], while a virtual damper produces a force proportional to velocity, [latex]F = -c \cdot \dot{x}[/latex], where ‘k’ is the spring constant, ‘c’ is the damping coefficient, ‘x’ is displacement, and [latex]\dot{x}[/latex] is velocity. By adjusting the parameters of these virtual components, the system can predictably regulate interaction forces, ensuring stable and safe collaborative movements and preventing excessive forces during contact.
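To make the spring and damper relations concrete, here is a minimal one-dimensional sketch. It is not the paper's implementation: the class name `VirtualSpringDamper`, the helper `simulate`, and all numeric values are assumptions chosen for illustration. It combines both elements in parallel and integrates a point mass attached to them.

```python
from dataclasses import dataclass


@dataclass
class VirtualSpringDamper:
    """A 1-D virtual spring and damper in parallel (illustrative only)."""
    k: float  # spring constant in N/m (assumed value, not from the paper)
    c: float  # damping coefficient in N*s/m (assumed value)

    def force(self, x: float, x_dot: float) -> float:
        # F = -k*x - c*x_dot: the spring opposes displacement,
        # the damper opposes velocity.
        return -self.k * x - self.c * x_dot


def simulate(element, mass, x0, v0, dt=0.001, steps=5000):
    """Semi-implicit Euler integration of a point mass tied to the element."""
    x, v = x0, v0
    for _ in range(steps):
        a = element.force(x, v) / mass
        v += a * dt  # update velocity first
        x += v * dt  # then position, using the new velocity
    return x, v


# Critically damped choice for a 1 kg mass: c = 2 * sqrt(k * m).
element = VirtualSpringDamper(k=100.0, c=20.0)
x_final, v_final = simulate(element, mass=1.0, x0=0.1, v0=0.0)
print(f"displacement after 5 s: {x_final:.2e} m")
```

With these assumed gains the mass settles back to the rest position without oscillating, which is the qualitative behaviour one would want from a compliant virtual coupling.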

Distributed Intelligence: The Swarm’s Silent Agreement

A decentralized control architecture distributes decision-making authority to individual robotic agents, enabling them to perceive and respond to environmental changes without reliance on a central processing unit. This is achieved by equipping each robot with its own sensor suite and local processing capabilities, allowing it to independently assess its surroundings and formulate actions based on pre-programmed rules and algorithms. The system avoids single points of failure inherent in centralized control and enhances responsiveness by minimizing communication latency; each robot can react immediately to local events, contributing to a more adaptable and resilient multi-robot system. This approach necessitates robust algorithms for self-awareness, obstacle avoidance, and inter-agent communication to ensure coordinated behavior emerges from distributed actions.

Multi-agent collaboration is achieved through a distributed system where each robot operates based on local sensing and force balance analysis, eliminating the need for a central coordinating unit. This decentralized approach allows robots to dynamically adjust their actions in response to both environmental changes and the actions of other agents in the group. Coordination arises from the summation of individual robot behaviors, prioritizing actions based on calculated forces and potential collisions. The system allows for efficient task completion as robots can simultaneously pursue objectives and resolve conflicts without communication bottlenecks or single points of failure typically associated with centralized control architectures.
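The idea that coordination emerges from each agent summing the forces it can sense locally can be sketched as below. This is a simplified 2-D illustration under stated assumptions: the function names, the gains `k_goal` and `k_rep`, and the interaction `radius` are hypothetical and are not taken from the paper.

```python
import math


def attraction(pos, goal, k_goal=1.0):
    """Virtual spring pulling an agent toward its own goal."""
    return (k_goal * (goal[0] - pos[0]), k_goal * (goal[1] - pos[1]))


def repulsion(pos, other, k_rep=0.5, radius=1.0):
    """Virtual spring pushing away from a neighbour closer than `radius`."""
    dx, dy = pos[0] - other[0], pos[1] - other[1]
    dist = math.hypot(dx, dy)
    if dist >= radius or dist == 0.0:
        return (0.0, 0.0)
    gain = k_rep * (radius - dist) / dist  # grows as the gap shrinks
    return (gain * dx, gain * dy)


def local_net_force(pos, goal, sensed_neighbours):
    """Sum the forces one agent can compute from its own sensing alone."""
    fx, fy = attraction(pos, goal)
    for other in sensed_neighbours:
        rx, ry = repulsion(pos, other)
        fx, fy = fx + rx, fy + ry
    return (fx, fy)


# Every agent runs the same function on its own view of the workspace;
# no central coordinator is involved.
positions = [(0.0, 0.0), (0.5, 0.0)]
goals = [(2.0, 0.0), (-2.0, 0.0)]
forces = [
    local_net_force(p, g, [q for j, q in enumerate(positions) if j != i])
    for i, (p, g) in enumerate(zip(positions, goals))
]
print(forces)
```

Because each agent evaluates only its own goal and the neighbours it can sense, adding another agent means running one more copy of the same function rather than enlarging a central planner.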

In experiments with up to two robots and two humans, and in simulation with up to four robots, the system consistently avoided deadlock. This was achieved through a force balance analysis, which allowed each robot to detect potential conflicts, followed by a prioritization procedure that determined which robot would yield in ambiguous situations. The absence of deadlocks across the reported runs supports the robustness of these procedures in a multi-agent robotic system and suggests that the approach can scale in dynamic environments.
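One way to picture the stall check and the yielding rule described above is the following sketch. The thresholds, the function names, and in particular the "lowest identifier wins" priority rule are assumptions made for illustration; the paper's actual prioritization procedure may differ.

```python
import math


def is_stalled(net_force, dist_to_goal, force_eps=0.05, goal_eps=0.05):
    """Stalled: forces nearly cancel while the agent is still far from its goal."""
    return math.hypot(*net_force) < force_eps and dist_to_goal > goal_eps


def resolve(agent_id, net_force, goal_force, dist_to_goal, stalled_neighbour_ids):
    """Return the force an agent applies after the conflict check.

    Hypothetical rule: among stalled agents, the lowest id has priority and
    follows its goal force alone; the others keep their balanced net force,
    which means they stay compliant and yield.
    """
    if not is_stalled(net_force, dist_to_goal):
        return net_force
    if all(agent_id < other for other in stalled_neighbour_ids):
        return goal_force
    return net_force


# Two robots meet head-on and their attraction and repulsion cancel out.
cmd_0 = resolve(0, (0.0, 0.0), (1.0, 0.0), 2.0, stalled_neighbour_ids=[1])
cmd_1 = resolve(1, (0.0, 0.0), (-1.0, 0.0), 2.0, stalled_neighbour_ids=[0])
print(cmd_0, cmd_1)
```

In this toy case robot 0 is released to move toward its goal while robot 1 remains compliant, so the symmetric force balance that caused the stall is broken.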

![Implementing a conflict resolution layer enabled robots to recover from a deadlock caused by balanced forces at [latex]t=25[/latex] s by prioritizing one robot's trajectory (blue) and resolving a stall detected at [latex]t=10.87[/latex] s, as demonstrated by the transition from stalled forces to successful navigation at [latex]t=10.95[/latex] s.](https://arxiv.org/html/2602.17415v1/Images/exp2_conflict_showcase/exp/nostuck_prioritized_force_novalue.png)

The Ghost in the Machine: Anticipating Human Intent

The system proactively prevents collisions by incorporating real-time human hand tracking directly into the Virtual Model Control (VMC) framework. This allows the robot to predict potential contact before it occurs, rather than simply reacting to it. By continuously monitoring the position and velocity of human hands, the VMC can adjust the robot’s trajectory, effectively creating a dynamic safety zone. This anticipatory approach is crucial for collaborative robotics, as it enables smoother, more natural interactions and minimizes the risk of accidental impacts, fostering a safer and more intuitive working environment for humans and robots alike.

The system employs unilateral dampers – a control mechanism designed to react solely when a human limb approaches the robot’s workspace. This innovative approach establishes a dynamic safety buffer, effectively preventing collisions without hindering the robot’s intended motions when a human is at a safe distance. Unlike traditional collision avoidance strategies that often impede movement preemptively, these dampers remain passive until an approaching limb triggers a reactive force, gently guiding the human away from potential contact. This targeted response minimizes unnecessary intervention and allows for a more natural and efficient human-robot interaction, prioritizing safety only when and where it’s needed.
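A unilateral damper can be sketched in one dimension as a damper that only produces force while the gap is closing. The function name, the damping gain `c`, the `activation_distance`, and the choice to apply the force to the robot's virtual model are all assumptions for illustration, not details confirmed by the paper.

```python
def unilateral_damper_force(gap, gap_rate, c=30.0, activation_distance=0.4):
    """Force pushing the robot away from a tracked hand (1-D sketch).

    gap      : current hand-to-robot distance in metres
    gap_rate : time derivative of the gap in m/s (negative when closing)

    The damper is one-sided: it acts only when the hand is within the
    activation distance AND the gap is shrinking. A hand that is far away,
    holding still, or retreating produces no force.
    """
    if gap >= activation_distance or gap_rate >= 0.0:
        return 0.0
    return -c * gap_rate  # positive value: directed away from the hand


print(unilateral_damper_force(0.2, -0.5))  # approaching inside the zone
print(unilateral_damper_force(0.2, 0.5))   # retreating: damper is passive
print(unilateral_damper_force(0.6, -0.5))  # approaching but still far away
```

The one-sidedness is the point of the design: an ordinary damper would also resist the hand moving away, which would add unnecessary drag to the robot's motion.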

Recent experiments demonstrate a robust safety system for collaborative robotics, achieving an average minimum distance of 21.8 cm between humans and robots across five trials. This was accomplished by integrating human-aware control with established techniques like impedance control and control barrier functions, allowing the robots to dynamically adjust their movements in response to human proximity. Notably, this safety framework didn’t impede task completion; four robots successfully finished the designated tasks in an average of 88.5 ± 4.8 seconds, indicating a balance between safe operation and efficient performance. The results suggest a viable path towards more intuitive and secure human-robot interactions in shared workspaces, paving the way for increased collaboration and productivity.

The pursuit of scalable human-robot collaboration, as detailed in this work, inevitably reveals the limitations of any centralized architectural vision. This framework, with its emphasis on decentralized control and agent-agnostic design, recognizes that true scalability isn’t about imposing order, but about fostering resilience within a complex system. As Linus Torvalds once observed, “Talk is cheap. Show me the code.” This prioritization scheme, allowing for conflict resolution through a defined hierarchy, isn’t a declaration of absolute control, but a pragmatic acknowledgement that failures will occur; the system’s strength lies in its ability to gracefully navigate those inevitable disruptions. The very notion of a ‘safety-aware control’ acknowledges the inherent chaos and strives for a temporary cache against it.

The Looming Horizon

This work, like all attempts to choreograph interaction, builds a scaffolding destined to be overgrown. The prioritization scheme, a necessary fiction for initial stability, will inevitably encounter scenarios unforeseen in its design – every edge case a testament to the incompleteness of any predictive model. The true measure of this framework won’t be its initial performance, but its capacity to absorb these failures, to yield gracefully to the inevitable entropy of a shared dynamic system. It is not about preventing conflict, but about building a substrate where conflict can be resolved, and even become the mechanism of adaptation.

The claim of agent-agnosticism is particularly intriguing. It suggests a decoupling of control from specific instantiation, a move towards a more generalizable, less brittle architecture. But every dependency is a promise made to the past, and even the most abstract interface will bear the weight of its implementation. The question isn’t whether the system can accommodate new agents, but whether it will do so without subtly shifting the locus of control, recreating the very dependencies it seeks to avoid.

Ultimately, this is a step towards recognizing that systems don’t so much solve problems as defer them. Everything built will one day start fixing itself – or, more accurately, being fixed by the interactions it mediates. The future lies not in more sophisticated control algorithms, but in architectures that cultivate resilience, that allow the system to become its own repair mechanism, a self-regulating ecosystem responding to pressures both anticipated and, inevitably, unknown.

Original article: https://arxiv.org/pdf/2602.17415.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/


2026-02-20 14:00