Author: Denis Avetisyan

Researchers have developed a novel framework that allows multiple robots to cooperatively manipulate objects without grasping, using generative AI and advanced motion planning.

This work introduces GCo, a flow-matching co-generation approach coupled with a new anonymous multi-robot motion planning algorithm (Gspi) for scalable and efficient non-prehensile manipulation.

Coordinating multiple robots to manipulate objects in cluttered spaces remains a challenge due to the complexity of joint planning and the need for scalable solutions. The paper ‘Collaborative Multi-Robot Non-Prehensile Manipulation via Flow-Matching Co-Generation’ introduces a framework that integrates generative modeling with motion planning to address this issue. By co-generating contact formations and trajectories, and by employing a new motion planner (Gspi), the approach enables efficient and coordinated multi-robot manipulation. Could this unified framework pave the way for more robust and adaptable collaborative robotic systems in complex real-world scenarios?

Orchestrating Collective Action: The Promise of Multi-Robot Manipulation

The ability of multiple robots to cooperatively manipulate objects is a cornerstone challenge in robotics, holding significant promise across diverse fields. In modern manufacturing, coordinated robotic teams could assemble complex products with greater efficiency and flexibility than single robots or human workers. In logistics, such systems could autonomously unload, sort, and transport goods in warehouses and distribution centers. Perhaps most compelling are the potential applications in search and rescue operations, where teams of robots could navigate hazardous environments, locate survivors, and deliver essential supplies, tasks often too dangerous or inaccessible for humans. Successfully addressing the complexities of multi-robot manipulation is therefore crucial for realizing a future where robots work alongside, and even with, people in a wide range of critical applications.

The orchestration of multiple robotic manipulators presents a significant computational hurdle as the number of agents increases, quickly exceeding the capabilities of conventional methodologies. This difficulty doesn’t stem simply from tracking more moving parts; rather, it arises from the exponential growth in possible interaction scenarios between the robots themselves and the manipulated object. Each additional robot introduces new potential collisions, force distributions, and synergistic movements that must be accounted for in real time. Traditional planning algorithms, often designed for single robots or simple cooperative tasks, struggle with this combinatorial explosion, becoming computationally intractable and yielding suboptimal or even failed manipulations. The inherent complexity demands innovative approaches that move beyond centralized control and pre-defined roles, seeking decentralized and adaptive strategies to manage the intricate interplay of forces and motions in multi-robot systems.

Many current multi-robot manipulation strategies suffer from inflexibility due to a reliance on predetermined roles or centralized control systems. These methods typically assign each robot a specific task – for example, one robot might be solely responsible for holding an object while another assembles it – or require a central computer to dictate the actions of every robot involved. While effective in highly structured environments, this architecture struggles when faced with unexpected changes or dynamic scenarios. The rigidity hinders the system’s ability to adapt to variations in object shape, position, or unforeseen obstacles, and scaling up to larger teams of robots introduces a computational bottleneck at the central control unit, significantly limiting the overall efficiency and robustness of the collaborative effort.

GCo: A Framework for Co-Generation and Adaptive Planning

GCo is a novel framework designed to address the challenges of collaborative manipulation tasks involving multiple robots. It integrates learned behaviors with traditional motion planning algorithms to enable robots to cooperatively manipulate objects in a shared workspace. Unlike systems that treat learning and planning as separate modules, GCo unifies these processes, allowing the system to leverage the strengths of both approaches. Specifically, GCo utilizes learned generative models to propose potential manipulation strategies, which are then refined and validated by a planning algorithm to ensure feasibility and collision avoidance. This unified approach facilitates robust and adaptable performance in dynamic environments and with varying task requirements.

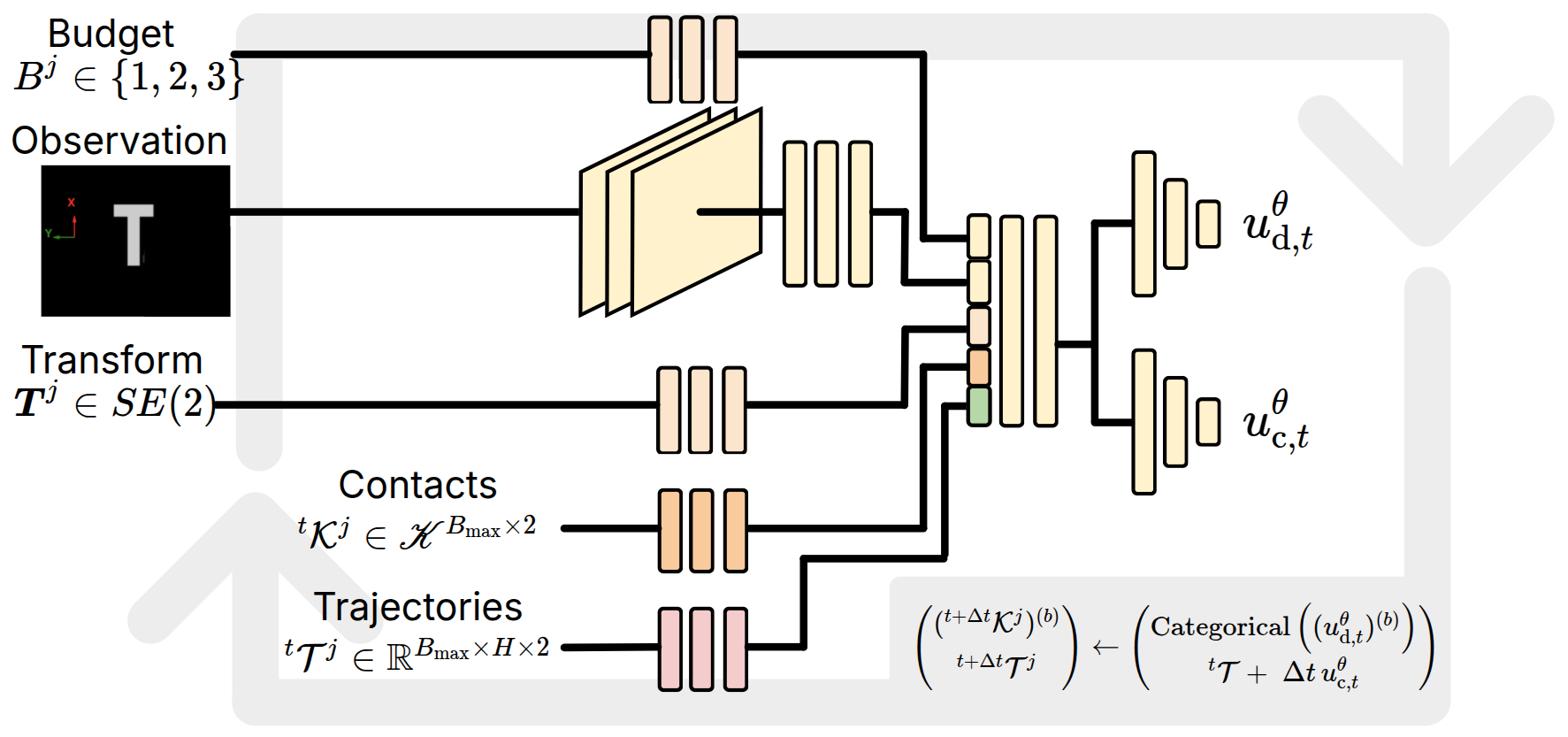

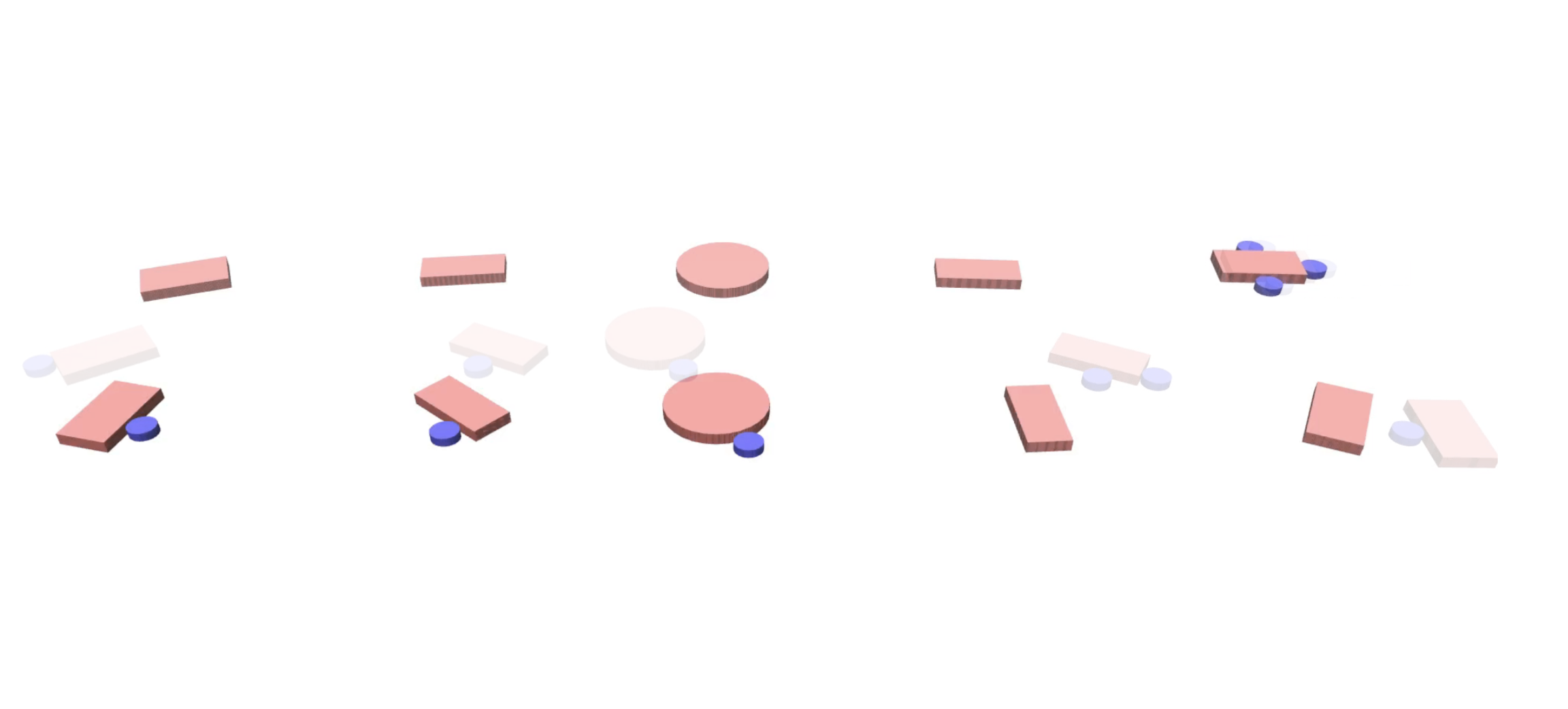

GCo utilizes Flow-Matching Co-Generation, a probabilistic generative modeling technique, to predict feasible contact formations and corresponding manipulation trajectories directly from perceptual input, such as camera images or point clouds. This process involves training a flow-matching model to learn the distribution of successful contact and motion plans observed during training data collection. Given a new perceptual observation, the model generates a distribution over possible contact points on objects and a corresponding trajectory for each robot to achieve manipulation. This generative process effectively proposes a set of potential plans, which are then evaluated and refined by the planning component of the GCo framework, offering a computationally efficient method for exploring the solution space compared to traditional planning approaches.
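To make the generative step concrete, here is a minimal NumPy sketch of the general flow-matching idea. It is not the paper's model. It assumes a straight-line path between noise and data, a single toy demonstration trajectory, and the closed-form velocity field that a trained network would approximate in that one-sample case. All function names and the toy waypoints are illustrative.

```python
import numpy as np

def interpolate(x0, x1, t):
    """Point on the straight path from noise x0 (t=0) to data x1 (t=1)."""
    return (1.0 - t) * x0 + t * x1

def target_velocity(x0, x1):
    """Regression target for a velocity network along that straight path."""
    return x1 - x0

def euler_sample(velocity_fn, x0, steps=50):
    """Turn a noise sample into a data sample by integrating the velocity field."""
    x = np.array(x0, dtype=float)
    dt = 1.0 / steps
    for k in range(steps):
        x = x + dt * velocity_fn(x, k * dt)
    return x

# Toy "demonstration": four planar waypoints of one robot trajectory (made up).
demo = np.array([[0.0, 0.0], [0.5, 0.1], [1.0, 0.3], [1.5, 0.6]])

def ideal_velocity(x, t):
    """Stand-in for a trained network: the exact field when demo is the only sample."""
    return (demo - x) / (1.0 - t)

noise = np.random.default_rng(0).normal(size=demo.shape)
sample = euler_sample(ideal_velocity, noise)
```

In practice the velocity field would be a neural network conditioned on the observation and trained by regressing `target_velocity` at points given by `interpolate`; the sampling loop stays the same.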

The GCo framework pairs this generative model with the Gspi algorithm for anonymous multi-robot motion planning, which is responsible for collision-free execution of the manipulation trajectories. In anonymous planning the robots are interchangeable: goals are not bound to particular robots in advance, so any robot may serve any target location. This helps the approach scale to larger robot teams and tolerate the loss of individual robots. The reported experiments show state-of-the-art performance in both single- and multi-object manipulation tasks, with higher success rates and better efficiency than existing methods. Specifically, GCo is shown to coordinate multiple robots manipulating objects in complex environments while avoiding collisions and keeping execution time low.
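In anonymous motion planning, goals are not tied to specific robots, so part of the problem is deciding which robot goes where. The sketch below shows one simple way to make that choice: a brute-force search for the assignment with the smallest total straight-line distance. It is an illustration of the anonymous setting only, not a description of how Gspi assigns goals, and the function name and coordinates are made up.

```python
from itertools import permutations
from math import dist

def assign_goals(robots, goals):
    """Return (assignment, cost), where assignment[i] is the goal index for robot i.

    Tries every one-to-one assignment, so it is only practical for small teams.
    """
    best, best_cost = None, float("inf")
    for perm in permutations(range(len(goals)), len(robots)):
        cost = sum(dist(robots[i], goals[g]) for i, g in enumerate(perm))
        if cost < best_cost:
            best, best_cost = perm, cost
    return best, best_cost

robots = [(0.0, 0.0), (5.0, 0.0)]
goals = [(6.0, 0.0), (1.0, 0.0)]
assignment, total = assign_goals(robots, goals)  # robot 0 -> goal 1, robot 1 -> goal 0
```

Because the search is factorial in team size, larger teams would need a polynomial-time assignment method such as the Hungarian algorithm (available as `scipy.optimize.linear_sum_assignment`).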

Underlying Mechanisms: Generating and Executing Coordinated Actions

Flow-Matching Co-Generation produces manipulation trajectories in continuous space rather than by sequencing pre-defined motions. In flow matching, a network is trained to predict a velocity field that carries random noise samples toward samples from the training distribution; at inference time, integrating that field over a fixed number of steps turns noise into a complete trajectory. Because the whole trajectory is generated at once, the model can reproduce the smooth, efficient paths found in its training data, which helps keep execution time low and reduces wear on robot joints. This differs from discrete planning methods, which assemble a motion from a sequence of pre-defined primitives.

Discrete-Continuous Generation addresses the complexities of robot manipulation by handling discrete and continuous variables together. Traditional methods often treat contact choices, such as which points on an object's surface a robot pushes against, as discrete decisions made independently of trajectory planning. This limits the solution space and can result in suboptimal or infeasible motions. Discrete-Continuous Generation instead formulates the problem as a mixed one, in which discrete contact selections and continuous motion parameters (such as position, velocity, and acceleration) are determined jointly. This allows the system to explore a wider range of possibilities and to identify trajectories that are both kinematically feasible and paired with good contact strategies. Methods of this kind typically employ techniques like differentiable relaxation or implicit differentiation to enable gradient-based optimization across both discrete and continuous spaces, resulting in smoother and more robust manipulation plans.
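A toy example may help show what a mixed discrete-continuous sample looks like. In the sketch below, one proposal consists of a discrete contact index on the boundary of a disk-shaped object and a continuous push path that starts at that contact. The disk model, the scoring of contacts, and every name here are assumptions made for illustration; the paper's learned model is not reproduced.

```python
import numpy as np

def sample_proposal(center, radius, goal, num_candidates=16, num_waypoints=5, rng=None):
    """Sample (contact index, contact point, push path) for a disk pushed toward goal.

    Assumes goal differs from center so the push direction is well defined.
    """
    rng = np.random.default_rng() if rng is None else rng
    center = np.asarray(center, dtype=float)
    push = np.asarray(goal, dtype=float) - center  # desired object displacement

    # Candidate contacts: evenly spaced points on the disk boundary.
    angles = np.linspace(0.0, 2.0 * np.pi, num_candidates, endpoint=False)
    normals = np.stack([np.cos(angles), np.sin(angles)], axis=1)  # outward unit normals
    candidates = center + radius * normals

    # Discrete part: favour contacts on the side facing away from the goal.
    logits = -4.0 * (normals @ (push / np.linalg.norm(push)))
    probs = np.exp(logits - logits.max())
    probs /= probs.sum()
    idx = int(rng.choice(num_candidates, p=probs))

    # Continuous part: robot waypoints that begin at the chosen contact.
    fractions = np.linspace(0.0, 1.0, num_waypoints)[:, None]
    path = candidates[idx] + fractions * push
    return idx, candidates[idx], path

idx, contact, path = sample_proposal((0.0, 0.0), 1.0, (3.0, 0.0),
                                     rng=np.random.default_rng(0))
```

The point of the toy is the coupling: the path is generated conditioned on the contact, so an awkward contact choice cannot be paired with a trajectory planned for a different one.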

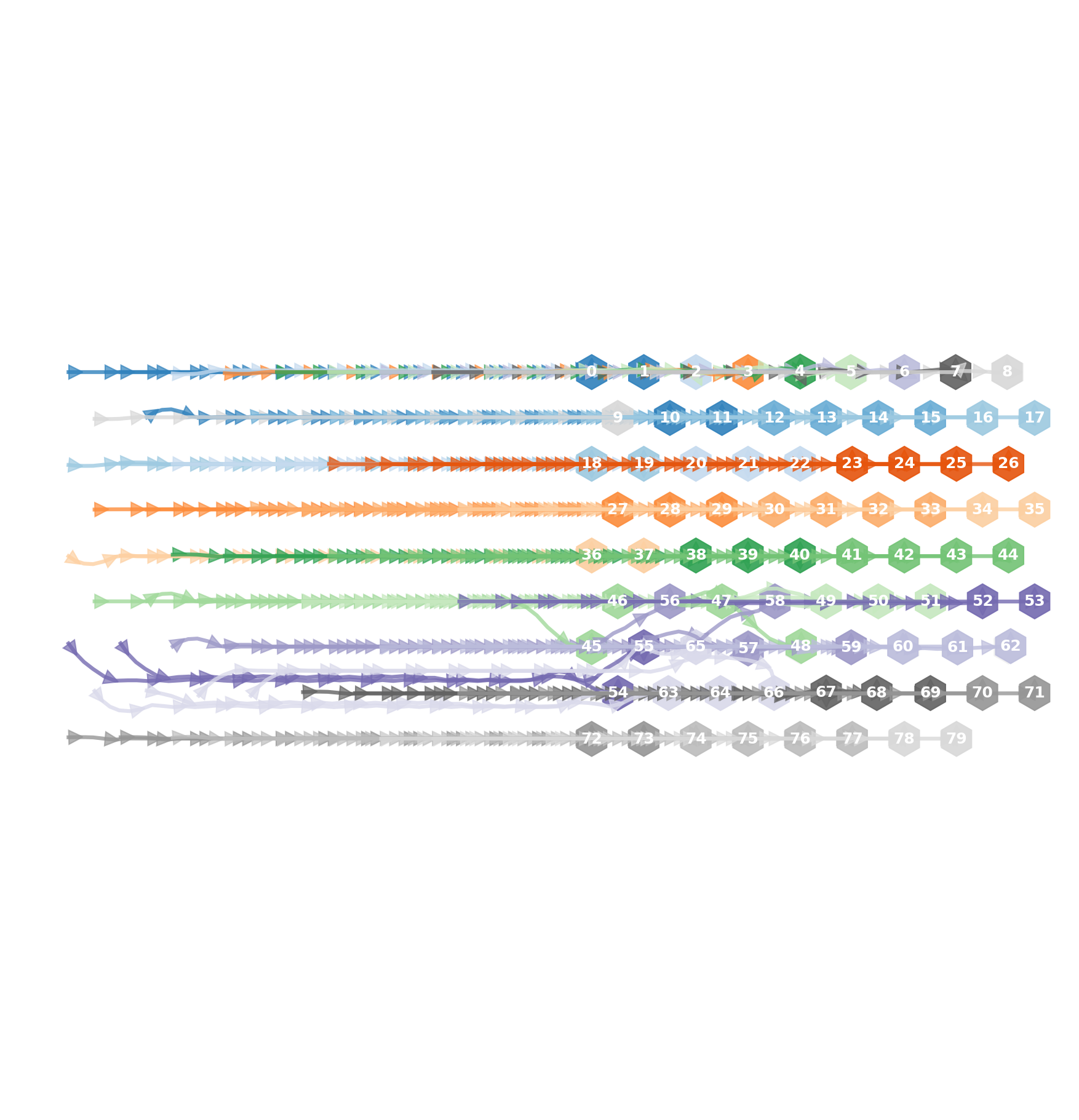

Gspi coordinates multiple robots by using Priority Inheritance to resolve conflicts that arise when several robots want to move through the same space at once. A robot that blocks a higher-priority robot temporarily inherits that priority, which lets it push lower-priority robots out of the way so that the higher-priority move is not blocked indefinitely. Alongside Priority Inheritance, Gspi uses the ORCA (Optimal Reciprocal Collision Avoidance) algorithm for local collision avoidance. In ORCA, each robot picks a velocity close to its preferred one from the set of velocities that avoid collision over a short time horizon, with each robot in a pair taking half the responsibility for avoiding the other. This enables safe navigation among moving robots and obstacles without central coordination of the local avoidance step.
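The priority-inheritance idea can be sketched on a small graph. The code below plans a single timestep in the style of priority inheritance with backtracking: a robot claims its best neighbouring node, and any robot standing there inherits the claimant's priority and must move first. This is a generic illustration, not Gspi itself; the graph, robot names, and function names are hypothetical.

```python
from collections import deque

def bfs_dist(graph, source):
    """Hop distance from source to every reachable node."""
    dist = {source: 0}
    queue = deque([source])
    while queue:
        u = queue.popleft()
        for w in graph[u]:
            if w not in dist:
                dist[w] = dist[u] + 1
                queue.append(w)
    return dist

def plan_step(graph, current, goals, priority_order):
    """Choose the next node for every robot, highest priority first."""
    nxt = {}
    dist = {r: bfs_dist(graph, goals[r]) for r in current}
    at = {v: r for r, v in current.items()}  # which robot occupies which node

    def move(robot, pusher):
        here = current[robot]
        options = sorted([here] + list(graph[here]),
                         key=lambda v: (dist[robot][v], v))
        for v in options:
            if v in nxt.values():  # node already claimed for the next step
                continue
            if pusher is not None and v == current[pusher]:  # no swapping places
                continue
            nxt[robot] = v
            blocker = at.get(v)
            if blocker is not None and blocker not in nxt:
                if not move(blocker, robot):  # blocker inherits this priority
                    continue  # blocker is stuck, try the next option
            return True
        nxt[robot] = here  # nothing worked, so stay put
        return False

    for r in priority_order:
        if r not in nxt:
            move(r, None)
    return nxt

# Corridor 0-1-2 with a side pocket 3 attached to node 1.
graph = {0: [1], 1: [0, 2, 3], 2: [1], 3: [1]}
step = plan_step(graph, {"A": 0, "B": 1}, {"A": 2, "B": 1}, ["A", "B"])
```

Here robot B already sits at its goal, but because it blocks the higher-priority robot A it inherits A's priority and steps aside, so A advances instead of waiting forever.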

Demonstrated Scalability and Impact on Collaborative Robotics

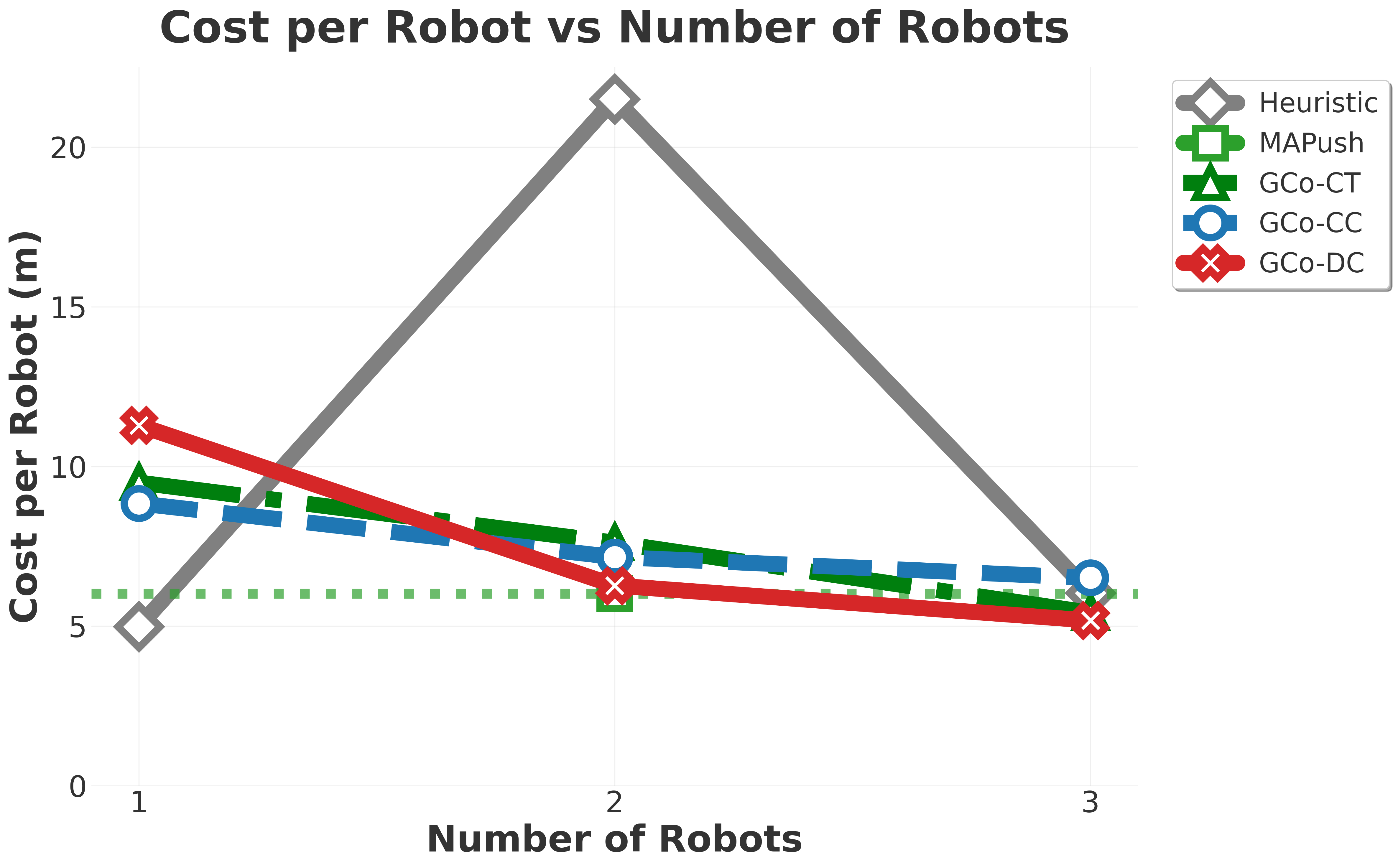

Experiments show that GCo consistently achieves a high success rate on intricate manipulation tasks and outperforms established baseline methods across a variety of complex scenarios. The system reliably completes tasks that demand precise coordination, and its results remain dependable as task complexity increases. This positions GCo as a promising solution for applications requiring dependable robotic manipulation in dynamic and unpredictable environments.

The GCo framework also distinguishes itself through scalability, demonstrated in multi-robot experiments. Unlike many approaches that falter as team size increases, GCo maintains high performance as the number of robotic agents rises. The researchers applied it to multi-robot motion planning (MRMP) problems involving over 100 robots, coordinating large teams without significant performance degradation. This scalability suggests GCo is well suited to deploying large, adaptable robotic workforces in demanding real-world scenarios.

The demonstrated scalability of GCo opens avenues for robotic deployment in previously inaccessible real-world scenarios. Complex logistical operations, such as large-scale warehouse fulfillment or rapid disaster response, often demand coordinated action from numerous robotic agents. GCo’s ability to maintain high performance as robot numbers increase addresses a critical bottleneck in these applications, moving beyond small-scale demonstrations to truly impactful deployments. This enhanced efficiency isn’t merely about speed; it’s about resilience and adaptability, allowing robotic teams to dynamically adjust to changing conditions and unforeseen obstacles, ultimately promising more robust and cost-effective solutions for a wide range of industries and emergency situations.

The pursuit of scalable multi-robot systems, as demonstrated by GCo, echoes a basic principle of communication: a message is only useful if its recipient can understand it. Similarly, GCo aims to establish a shared ‘understanding’ between robots, enabling them to coordinate complex manipulation tasks without explicit pre-planning. The framework’s reliance on generative modeling and the Gspi algorithm isn’t merely about generating possible motions; it’s about generating motions that are coherent within the collaborative context, so that each robot’s actions are predictable to its peers. This approach, prioritizing clarity in action, aligns with the idea that effective systems, much like clear communication, thrive on reducing ambiguity and promoting shared understanding.

Future Directions

The presented framework, while a step toward scalable multi-robot manipulation, reveals the enduring challenge of composing complex behaviors from relatively simple components. This work addresses collision avoidance and co-generation, but the underlying structure still dictates the limits of adaptability. Consider a city’s infrastructure: simply adding more lanes to a highway does not solve fundamental urban planning flaws. Similarly, improving motion planning algorithms alone will not yield truly robust collaborative systems. The critical need remains for methods that facilitate emergent behavior, allowing robots to gracefully handle unforeseen circumstances and dynamically re-allocate tasks – a form of decentralized intelligence.

Further investigation should focus on the generative model’s capacity to learn from sparse, imperfect data. Current approaches often demand extensive training, a limitation in real-world applications where data acquisition is costly. A more elegant solution would involve a system capable of rapidly adapting to new environments and objects with minimal supervision, much like a skilled artisan learning a new craft. This requires moving beyond simply predicting trajectories and toward understanding the principles governing object interaction.

Finally, scaling further will likely require a shift in focus from centralized control to fully distributed architectures. The current framework, while demonstrating progress, still relies on a degree of global awareness. A truly scalable system must operate on local information, with robots coordinating through implicit communication and shared understanding of the environment. The goal is not to program collaboration, but to create the conditions in which it can emerge.

Original article: https://arxiv.org/pdf/2511.10874.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2025-11-17 12:53