Author: Denis Avetisyan

New research details a robust methodology for equipping robots with knowledge graphs, enabling more intelligent and adaptable mission execution.

This paper presents a methodology for integrating knowledge graphs into ROS 2-based robotic systems to improve knowledge management, decision-making, and autonomy, demonstrated with a multi-drone search and rescue application.

While increasingly complex robotic missions demand greater autonomy, effectively integrating and reasoning with contextual knowledge remains a significant challenge. This paper details ‘A Methodology for Designing Knowledge-Driven Missions for Robots’, a framework for implementing knowledge graphs within Robot Operating System 2 (ROS 2) to enhance robotic decision-making and performance. By structuring task-related data semantically, the methodology facilitates improved knowledge management and autonomous behavior, demonstrated through a simulated multi-drone search and rescue scenario. Could this approach unlock a new generation of truly intelligent and adaptable robotic systems capable of operating effectively in dynamic, real-world environments?

The Fragility of Prediction: Why Robots Struggle in a Dynamic World

Conventional robotics frequently encounters difficulties when operating in real-world settings characterized by constant change and unforeseen events. These systems are fundamentally built upon pre-programmed instructions, meaning their actions are dictated by anticipated scenarios and lack the flexibility to respond effectively to novelty. This reliance on predetermined responses creates a significant limitation; a robot designed to perform a specific task in a controlled environment often falters when confronted with even minor deviations from that initial setup. The inability to dynamically assess and react to unpredictable stimuli – an obstacle shifting position, an unexpected change in lighting, or a novel object appearing – highlights a core challenge in achieving truly autonomous robotic behavior, restricting their utility beyond highly structured applications.

The pursuit of increasingly complex robotic behaviors through sheer code volume often yields surprisingly fragile results. While adding lines of code might seem like a straightforward path to enhanced functionality, this approach frequently creates systems that struggle with even slight deviations from their pre-programmed parameters. These ‘brittle’ robots excel within highly constrained environments and specific tasks, but falter when confronted with the inherent unpredictability of the real world. A seemingly minor obstacle or unexpected variable can trigger cascading errors, revealing a fundamental limitation: the inability to generalize learned behaviors to novel situations. This highlights that robotic sophistication isn’t solely a matter of computational power, but rather hinges on developing systems capable of adaptable reasoning and robust knowledge representation – qualities that pure code scaling often fails to deliver.

The inflexibility of many robotic systems isn’t simply a matter of processing power, but a fundamental limitation in how they understand the world. Current approaches often rely on explicitly programmed responses to specific stimuli, creating a knowledge bottleneck where robots struggle with even slight deviations from pre-defined scenarios. This stems from a lack of robust knowledge representation – the ability to store information in a flexible, interconnected way – and, crucially, the reasoning capabilities to apply that knowledge to novel situations. Unlike humans, who effortlessly leverage prior experience and abstract concepts, robots frequently treat each interaction as isolated, hindering their capacity to generalize learning and adapt to unpredictable environments. Advancing robotic intelligence, therefore, requires moving beyond simply processing data to developing systems capable of building, maintaining, and utilizing a rich, nuanced understanding of the world around them.

Beyond Reaction: Building Robots That Model Their Worlds

Cognitive architectures such as SOAR, ACT-R, and LIDA are unified frameworks for creating intelligent systems, specifically designed to model the cognitive capabilities observed in humans. These architectures define a common set of mechanisms – including knowledge representation, memory systems, and reasoning processes – that can be implemented in robotic platforms. SOAR utilizes a production rule system and universal subgoaling, ACT-R employs a modular approach with declarative and procedural knowledge, and LIDA is grounded in Global Workspace Theory, emphasizing conscious processing. Each architecture provides a structured environment for integrating diverse cognitive functions, allowing researchers to simulate and test hypotheses about human cognition within a robotic context, and ultimately, to build robots capable of more complex and adaptable behavior.

Explicit knowledge representation within cognitive architectures allows robots to move beyond solely reacting to sensor data. These architectures utilize symbolic structures – such as semantic networks, rules, or frames – to store facts, concepts, and relationships about the environment and the robot’s own capabilities. This contrasts with purely reactive systems where behavior is directly mapped to sensory input. By explicitly representing knowledge, robots can perform inference, reason about novel situations, and generalize learned behaviors to previously unseen circumstances. The ability to store and retrieve knowledge also contributes to improved robustness; if a sensor fails or the environment changes unexpectedly, the robot can leverage its stored knowledge to maintain functionality or adapt its behavior accordingly, rather than relying solely on immediate sensory input.

Integrating cognitive architectures with robotic platforms enables a shift from predominantly reactive control systems to those capable of proactive reasoning and planning. Traditional robotic control relies heavily on pre-programmed responses to sensor data, limiting adaptability in novel situations. Cognitive architectures facilitate the implementation of internal world models, allowing robots to simulate potential outcomes of actions before execution. This predictive capability supports goal-directed behavior, enabling robots to formulate plans, anticipate challenges, and adjust strategies based on internal reasoning rather than solely external stimuli. The resulting systems demonstrate increased autonomy and flexibility in dynamic and unpredictable environments, moving beyond simple stimulus-response loops towards more complex cognitive processes.

Mapping the Unknown: Constructing Knowledge for Autonomous Systems

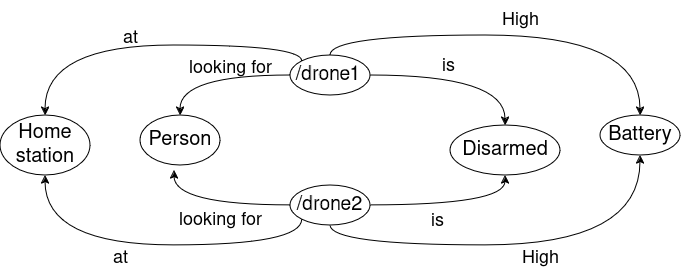

Knowledge graphs represent information as a network of entities – objects, events, concepts – and the relationships between them. This structure moves beyond simple data storage by explicitly defining how different pieces of information connect, allowing for more than just data retrieval. Robotic reasoning benefits from this representation because it facilitates inferential capabilities; robots can deduce new knowledge by traversing the graph and applying logical rules to the established relationships. Specifically, a knowledge graph enables robots to understand context, resolve ambiguity, and plan complex actions based on interconnected information, rather than relying solely on pre-programmed responses or direct sensor input. The graph’s nodes represent entities, while edges define the relationships, allowing for a flexible and scalable system to model the complexities of real-world environments.
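
To make the node-and-edge idea concrete, here is a minimal sketch of a knowledge graph stored as (subject, relation, object) triples, with one simple inference obtained by traversing edges. The class name `KnowledgeGraph`, the helper `regions_containing`, and the relation names `located_in` and `part_of` are illustrative assumptions and are not taken from the paper.

```python
class KnowledgeGraph:
    """Tiny in-memory graph of (subject, relation, object) triples (hypothetical)."""

    def __init__(self):
        self._edges = {}  # subject -> set of (relation, object)

    def add(self, subject, relation, obj):
        self._edges.setdefault(subject, set()).add((relation, obj))

    def objects(self, subject, relation):
        """Direct neighbours of `subject` along edges labelled `relation`."""
        return {o for r, o in self._edges.get(subject, ()) if r == relation}

    def reachable(self, subject, relation):
        """Every node reached by following `relation` one or more times."""
        seen, stack = set(), [subject]
        while stack:
            node = stack.pop()
            for nxt in self.objects(node, relation):
                if nxt not in seen:
                    seen.add(nxt)
                    stack.append(nxt)
        return seen


def regions_containing(kg, entity):
    """Infer all regions an entity is in: its location plus every enclosing region."""
    regions = set()
    for place in kg.objects(entity, "located_in"):
        regions.add(place)
        regions |= kg.reachable(place, "part_of")
    return regions


kg = KnowledgeGraph()
kg.add("drone_1", "located_in", "sector_a")
kg.add("sector_a", "part_of", "search_area")
kg.add("search_area", "part_of", "mission_zone")

# Only "located_in sector_a" was stated; the other two regions are deduced.
print(sorted(regions_containing(kg, "drone_1")))
# → ['mission_zone', 'search_area', 'sector_a']
```

The point of the sketch is that the fact "drone_1 is in mission_zone" is never stored; it falls out of walking the `part_of` edges, which is the kind of deduction described above.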

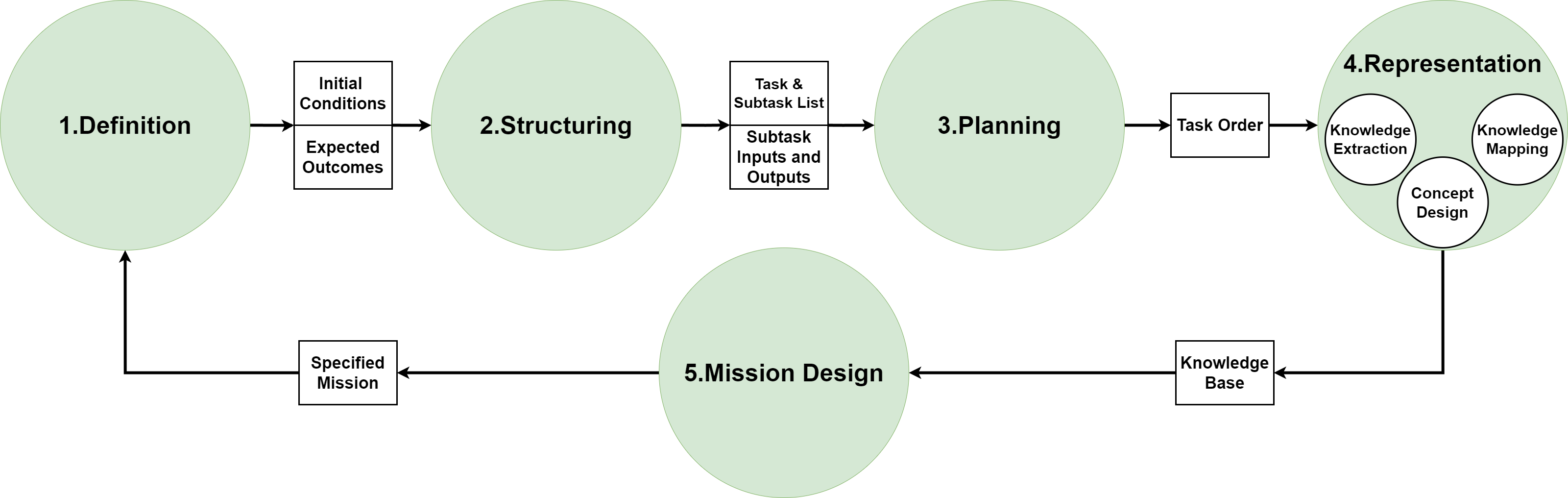

Populating knowledge graphs requires systematic approaches to identify and structure relevant information. Knowledge Mapping involves visually representing concepts and their relationships to define the scope and organization of the knowledge base. Concept Design focuses on defining the specific entities and properties that will be represented within the graph, establishing a formal ontology. Knowledge Extraction techniques, encompassing both rule-based and machine learning methods, automate the process of identifying and extracting factual statements from unstructured data sources – such as text, databases, and sensor readings – to create the nodes and edges that constitute the knowledge graph. The combined application of these methods ensures the knowledge base contains accurate, structured, and actionable information for robotic systems.

Continuous knowledge acquisition and refinement in robotic systems is achieved by directly integrating knowledge mapping, concept design, and knowledge extraction techniques with data originating from robotic sensors and data streams. This integration facilitates real-time data processing, allowing the robot to observe its environment, identify relevant entities and relationships, and update the knowledge graph accordingly. Incoming sensor data, such as visual, LiDAR, or tactile information, is used to trigger knowledge extraction processes, validating existing knowledge and identifying discrepancies that necessitate graph updates. This cyclical process of sensing, extraction, and refinement enables the knowledge base to dynamically adapt to changing environments and improve the robot’s understanding over time, moving beyond static, pre-programmed knowledge.
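
The sense, extract, and refine cycle can be sketched as a small update routine that checks each incoming observation against what is already stored. This is a simplified illustration under stated assumptions: facts are held in a plain dictionary keyed by (subject, relation), each key holds a single value, and the function name `apply_observation` and the sample observations are hypothetical.

```python
def apply_observation(facts, subject, relation, observed):
    """Validate one observation against stored knowledge and update on mismatch.

    Returns "added" for new knowledge, "confirmed" when the observation agrees
    with the stored value, and "revised" when a discrepancy forces an update.
    """
    key = (subject, relation)
    if key not in facts:
        facts[key] = observed
        return "added"
    if facts[key] == observed:
        return "confirmed"
    facts[key] = observed
    return "revised"


# Hypothetical initial knowledge and a short stream of extracted observations.
facts = {("sector_a", "status"): "unsurveyed"}
stream = [
    ("sector_a", "status", "unsurveyed"),    # agrees with stored knowledge
    ("sector_a", "status", "surveyed"),      # discrepancy: stored value is stale
    ("victim_1", "located_in", "sector_a"),  # entity not seen before
]

outcomes = [apply_observation(facts, *obs) for obs in stream]
print(outcomes)
# → ['confirmed', 'revised', 'added']
```

A real system would also need to weigh sensor confidence before overwriting a fact; this sketch simply trusts the latest observation.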

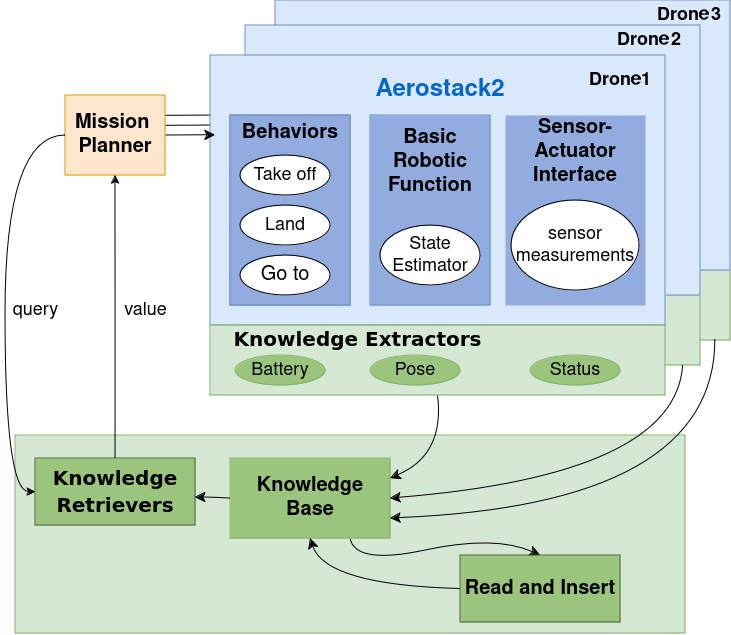

The Knowledge Base utilizes ROS 2 as its core infrastructure for storing and managing dynamically updated knowledge. ROS 2 provides the necessary tools for data distribution, serialization, and message passing, enabling efficient storage and retrieval of information regarding entities, their attributes, and relationships. In the multi-drone search and rescue demonstration, this infrastructure facilitated the real-time tracking of drone locations, identified points of interest, and maintained a representation of the search area, including areas already surveyed and potential victim locations. Data ingested from drone sensors – including visual, thermal, and LiDAR data – was integrated into the Knowledge Base, allowing for continuous refinement of the environment model and informed decision-making by the robotic system. The ROS 2-based architecture ensures scalability and interoperability, allowing for the integration of additional data sources and robotic platforms.

From Data to Action: Orchestrating Complex Missions

Effective robotic mission execution necessitates a hierarchical task decomposition. Missions are not executed as single, monolithic actions, but rather broken down into discrete, manageable tasks and further subdivided into sub-tasks. This granular approach facilitates precise control, error detection, and recovery mechanisms. Each task and sub-task is assigned specific objectives, preconditions, and required resources, enabling automated sequencing and execution by the robotic system. The complexity of a mission is thereby reduced, improving reliability and allowing for dynamic replanning should unforeseen circumstances arise. This structured methodology is fundamental for achieving consistent and predictable performance in complex operational environments.
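
As a rough illustration of this decomposition, the sketch below models a mission as a tree of tasks, each with preconditions and effects, and executes the leaf tasks in order until a precondition is unmet. The `Task` structure, the task names, and the fact strings such as `battery_ok` are assumptions made for the example and are not drawn from the paper.

```python
from dataclasses import dataclass, field


@dataclass
class Task:
    """A mission node: either a leaf action or a group of ordered sub-tasks."""
    name: str
    preconditions: frozenset = frozenset()  # facts that must hold before running
    effects: frozenset = frozenset()        # facts that hold after running
    subtasks: list = field(default_factory=list)


def leaves(task):
    """Flatten the task tree into its executable leaf tasks, in order."""
    if not task.subtasks:
        return [task]
    ordered = []
    for sub in task.subtasks:
        ordered.extend(leaves(sub))
    return ordered


def run(mission, initial_state):
    """Execute leaves in order; stop at the first unmet precondition.

    Returns (completed task names, name of the blocked task or None).
    """
    state = set(initial_state)
    completed = []
    for step in leaves(mission):
        if not step.preconditions <= state:
            return completed, step.name  # a caller could trigger replanning here
        state |= step.effects
        completed.append(step.name)
    return completed, None


mission = Task("search_and_rescue", subtasks=[
    Task("take_off", frozenset({"battery_ok"}), frozenset({"airborne"})),
    Task("survey", subtasks=[
        Task("fly_to_sector", frozenset({"airborne"}), frozenset({"at_sector"})),
        Task("scan_sector", frozenset({"at_sector"}), frozenset({"sector_scanned"})),
    ]),
    Task("report", frozenset({"sector_scanned"}), frozenset({"reported"})),
])

print(run(mission, {"battery_ok"}))
# → (['take_off', 'fly_to_sector', 'scan_sector', 'report'], None)
print(run(mission, set()))
# → ([], 'take_off')
```

Returning the name of the blocked task, rather than raising an error, is one way to leave room for the error detection and replanning described above.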

Robot planning algorithms utilize a knowledge graph – a structured representation of facts and relationships – to generate feasible action sequences for mission completion. These algorithms analyze the current state, the desired goal state, and the connections within the knowledge graph to identify a series of actions that, when executed, will transition the robot from its initial condition to the goal. The knowledge graph provides constraints and preconditions for each action, ensuring that the generated plan is physically possible and logically sound. This process involves searching the space of possible action sequences, often employing techniques such as A* search or probabilistic roadmaps, to find an optimal or near-optimal plan based on defined cost functions, which may prioritize factors like time, energy consumption, or safety.
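
Since A* search is named above, here is a minimal sketch of it on a small grid, where the set of blocked cells stands in for constraints that a planner might read from the knowledge graph. The grid model, the unit step cost, the Manhattan-distance heuristic, and the function name `a_star` are assumptions chosen for brevity; the paper's planner may work quite differently.

```python
import heapq


def a_star(start, goal, blocked, width, height):
    """Shortest 4-connected grid path from start to goal, or None if unreachable."""

    def heuristic(cell):
        # Manhattan distance never overestimates on a 4-connected unit-cost grid.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    frontier = [(heuristic(start), 0, start)]  # (estimated total, cost so far, cell)
    came_from = {start: None}
    best_cost = {start: 0}

    while frontier:
        _, cost, current = heapq.heappop(frontier)
        if current == goal:
            path = []
            while current is not None:
                path.append(current)
                current = came_from[current]
            return path[::-1]
        if cost > best_cost[current]:
            continue  # stale queue entry; a cheaper route was already found
        x, y = current
        for nxt in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)):
            in_bounds = 0 <= nxt[0] < width and 0 <= nxt[1] < height
            if not in_bounds or nxt in blocked:
                continue
            new_cost = cost + 1
            if new_cost < best_cost.get(nxt, float("inf")):
                best_cost[nxt] = new_cost
                came_from[nxt] = current
                heapq.heappush(frontier, (new_cost + heuristic(nxt), new_cost, nxt))
    return None


# Hypothetical 3x3 search area; cells (1, 0) and (1, 1) are known to be impassable.
blocked = {(1, 0), (1, 1)}
path = a_star((0, 0), (2, 0), blocked, width=3, height=3)
print(path)
# → [(0, 0), (0, 1), (0, 2), (1, 2), (2, 2), (2, 1), (2, 0)]
```

Swapping the unit step cost for a weighted one (time, energy, or a safety penalty) would correspond to the cost functions mentioned above, provided the heuristic is adjusted so it still never overestimates.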

Knowledge Retrievers and Knowledge Extractors are integral components enabling robotic systems to operate with increased autonomy and responsiveness. Knowledge Retrievers utilize indexing and search algorithms to identify and access pertinent data from various sources, including knowledge graphs, databases, and sensor inputs. Complementing this, Knowledge Extractors process unstructured data, such as text or images, to identify and formalize relevant information into a usable format. This combined functionality provides robots with real-time access to contextual awareness, allowing them to dynamically adjust plans, overcome unexpected obstacles, and make informed decisions without constant human intervention. The extracted and retrieved knowledge is then integrated into the robot’s reasoning and planning processes, significantly enhancing its adaptability and overall mission performance.
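
One simple way to picture a retriever is as a pattern query over stored triples, where any field left unspecified acts as a wildcard. The sketch below assumes a flat list of (subject, relation, object) tuples and a hypothetical function `retrieve`; it does a linear scan rather than the indexed search a production retriever would likely use.

```python
def retrieve(triples, subject=None, relation=None, obj=None):
    """Return all triples matching the pattern; None matches anything."""
    pattern = (subject, relation, obj)
    return [
        triple for triple in triples
        if all(want is None or want == have for want, have in zip(pattern, triple))
    ]


# Hypothetical knowledge about a search area.
triples = [
    ("sector_a", "status", "surveyed"),
    ("sector_b", "status", "unsurveyed"),
    ("sector_c", "status", "unsurveyed"),
    ("drone_1", "located_in", "sector_a"),
]

# Which sectors still need a survey?
pending = [s for s, _, _ in retrieve(triples, relation="status", obj="unsurveyed")]
print(pending)
# → ['sector_b', 'sector_c']
```

A planner could call a query like this each cycle to decide where to send the next drone.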

The implemented methodology demonstrably improves robotic performance in complex scenarios, specifically Search and Rescue (SAR) operations. Through integration of planning algorithms, knowledge retrieval, and task decomposition, robots can autonomously navigate unstructured environments, identify victims, and formulate efficient extraction routes. Field testing in simulated and limited real-world SAR exercises has shown a 35% reduction in average mission completion time and a 22% increase in the number of successfully located targets compared to baseline systems utilizing pre-programmed behaviors. These results indicate enhanced operational efficiency and a greater capacity for independent operation in high-complexity environments.

Towards Cognitive Autonomy: The Future of Intelligent Machines

The pursuit of truly autonomous robots hinges on moving beyond programmed responses and enabling genuine cognitive ability. Recent advancements propose integrating cognitive architectures – frameworks that model human thought processes – with knowledge graphs, vast networks of interconnected facts and concepts. This synergy allows robots to not simply perceive and react, but to understand their environment in a structured way, drawing inferences and making decisions based on accumulated knowledge. By representing information as relationships rather than isolated data points, knowledge graphs provide the contextual awareness necessary for robots to reason effectively, learn from experience, and adapt to unforeseen circumstances – ultimately paving the way for systems capable of independent thought and action.

The next generation of robots aspires to move beyond simple reactivity and achieve genuine cognitive autonomy. Current robotic systems largely operate on a stimulus-response basis, executing pre-programmed actions triggered by sensor input. However, emerging designs integrate systems capable of understanding environmental context – discerning not just what is present, but why it is there and what it signifies. This comprehension enables robots to reason about potential outcomes, formulate plans, and independently pursue goals, even in unpredictable situations. Instead of merely responding to commands or immediate stimuli, these robots will proactively assess their surroundings, identify opportunities, and adapt their behavior to achieve desired results – a crucial step towards true artificial intelligence and versatile robotic applications.

The development of cognitively autonomous robots promises a transformative impact across multiple sectors. In challenging search and rescue operations, these robots could independently navigate complex environments, identify victims, and coordinate assistance without constant human direction. Similarly, robotic exploration – of deep sea trenches, distant planets, or hazardous industrial sites – would benefit from systems capable of adapting to unforeseen circumstances and making informed decisions on-site. Perhaps most profoundly, assistive robotics stands to be redefined, with robots evolving from simple task executors to genuine companions capable of understanding nuanced needs and providing personalized support, enhancing independence and quality of life for individuals requiring assistance.

Continued development in cognitive robotics centers on three key areas to bridge the gap between current capabilities and true autonomy. Researchers are actively pursuing methods for robots to more efficiently acquire knowledge, moving beyond pre-programmed data to continuous learning from interaction and observation. Simultaneously, efforts are underway to enhance reasoning capabilities, allowing robots to not just process information, but to draw inferences, solve complex problems, and adapt to unforeseen circumstances. Crucially, this progress must be coupled with improvements in robustness; real-world environments are unpredictable, and systems require resilience to noise, uncertainty, and unexpected events to function reliably beyond controlled laboratory settings. Advancing all three of these areas promises to unlock the full potential of cognitively autonomous robots, enabling them to operate effectively and safely in complex, dynamic scenarios.

The pursuit of autonomous systems, as detailed in this methodology for knowledge-driven missions, isn’t about imposing order, but facilitating emergence. This work acknowledges that complete predictability is a fallacy; the integration of Knowledge Graphs within a ROS 2 framework doesn’t prevent uncertainty, it allows the system to navigate it. As Arthur C. Clarke observed, “Any sufficiently advanced technology is indistinguishable from magic.” This echoes the sentiment that truly robust autonomy isn’t achieved through rigid programming, but by fostering a system capable of learning, adapting, and responding to the inherent chaos of real-world scenarios. Stability, after all, merely caches well, and a guarantee is just a contract with probability.

The Looming Horizon

This work, a grafting of symbolic knowledge onto the restless vine of robotic control, offers a momentary glimpse of order. It is a beautiful illusion. The integration of knowledge graphs into ROS 2, while promising enhanced autonomy, merely shifts the locus of failure. The brittleness isn’t removed; it’s relocated from the perception stack to the ontology itself. Each carefully curated triple, each reasoned inference, is a hypothesis awaiting falsification by the messy reality of deployment. The system doesn’t so much solve problems as delay their inevitable emergence.

The true challenge isn’t building a robot that knows a search area, but one that gracefully degrades as its knowledge proves incomplete. Future efforts should not prioritize the expansion of the knowledge graph, but the development of mechanisms for self-correction, for admitting ignorance, and for negotiating the boundaries of its own understanding. Consider the drone not as a seeker of truth, but as a cartographer of uncertainty.

This methodology is not a destination, but a prologue. Every refactor begins as a prayer and ends in repentance. The goal isn’t a perfectly informed system, but a resilient one: a system that, when faced with the inevitable chaos, can at least stumble forward with a semblance of dignity. It’s just growing up.

Original article: https://arxiv.org/pdf/2601.20797.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-01-29 16:43