Author: Denis Avetisyan

A new approach to robot programming uses large language models to bridge the gap between human instruction and complex physical action.

This review explores agentic AI architectures for robot control, focusing on plan-based systems leveraging semantic state and natural language interaction for flexible tool use.

Despite advances in artificial intelligence, achieving robust and adaptable robot control remains a significant challenge. This paper, ‘Agentic AI for Robot Control: Flexible but still Fragile’, introduces an agentic architecture leveraging large language models to translate natural language goals into tool-mediated actions, enabling interpretable robot behavior across diverse platforms. Experiments on both mobile manipulation and agricultural navigation tasks demonstrate the system’s flexibility in transferring to new domains with minimal prompt engineering, yet reveal substantial fragility in execution, including sensitivity to instruction and prompt specification. Can we develop more resilient agentic systems that bridge the gap between flexible planning and reliable real-world performance?

The Illusion of Control: Why Robots Need to Unlearn

Conventional robotics frequently depends on meticulously crafted, pre-programmed sequences of actions, a methodology that inherently restricts a robot’s capacity to respond effectively to novelty. While successful in highly structured environments – such as assembly lines – this approach demands complete re-engineering whenever the robot encounters a slightly altered task or unforeseen circumstance. Each new objective requires developers to anticipate every potential scenario and explicitly code a corresponding response, a process that is both time-consuming and ultimately brittle. This reliance on explicit programming limits the deployment of robots in dynamic, real-world settings where adaptability and improvisation are paramount, hindering their potential in areas like disaster response, exploration, or even everyday household assistance. The limitations stem not from a lack of mechanical capability, but from a fundamental constraint in the robots’ ability to generalize beyond their pre-defined parameters.

Conventional robotic systems, meticulously crafted for specific tasks, often falter when confronted with the inherent unpredictability of real-world settings. Their reliance on precisely defined parameters leaves them vulnerable to minor deviations from expected conditions: an obstacle slightly out of place, a change in lighting, or an unexpected sound can disrupt operation. This brittleness reflects limited perceptual and cognitive abilities; these machines struggle to interpret ambiguous data or to extrapolate beyond their pre-programmed routines. Consequently, deploying them in dynamic, human-populated spaces, from busy factories to disaster zones and private homes, remains a significant challenge.

Agentic control represents a significant departure from conventional robotics: rather than only executing pre-defined tasks, an agentic system autonomously determines how to achieve its goals in unpredictable situations, using deliberation, planning, and self-correction. Instead of requiring extensive reprogramming for each new challenge, an agentic robot leverages internal models of the world and of its own capabilities to formulate and execute plans, adapting in real time to unexpected obstacles or changes in priorities. The potential impact extends to numerous fields, from disaster response and exploration, where environments are inherently uncertain, to personalized assistance and manufacturing processes that demand flexible automation. Ultimately, agentic systems promise robots that are not merely tools but collaborative problem-solvers capable of tackling intricate tasks.

The development of truly versatile robotics demands a fundamental shift in architectural design, moving beyond simple stimulus-response mechanisms towards systems capable of independent reasoning and proactive adaptation. These agentic systems require an internal framework for representing goals – not merely as pre-defined waypoints, but as abstract objectives that can be pursued through varied means. Crucially, such architectures must incorporate planning capabilities, enabling the robot to simulate potential action sequences and select the most effective path to achieve its goals. However, planning in isolation is insufficient; a robust agentic system also necessitates reactive capabilities, allowing it to monitor its environment, detect unexpected changes, and dynamically adjust its plans – or even formulate entirely new ones – in real-time, ensuring continued progress even amidst uncertainty.

The Architecture of Autonomy: LLMs as the Robotic Nervous System

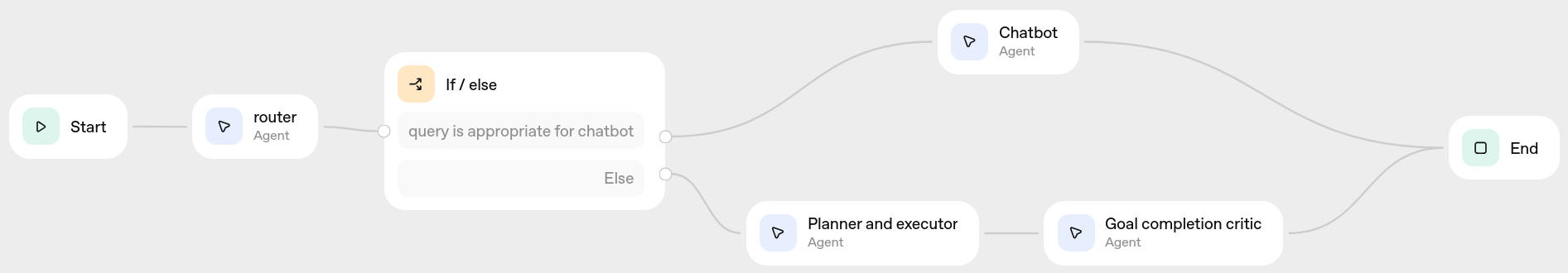

The Agentic Control Architecture establishes a closed-loop system where Large Language Models (LLMs) function as the central planning and execution unit for robotic tasks. This integration moves beyond simple open-loop command execution by enabling continuous feedback and adaptation. Sensor data from the robot’s environment is fed back into the LLM, allowing it to reassess plans, refine action sequences, and address unexpected situations. This cyclical process of perception, planning, and action distinguishes the architecture and facilitates autonomous operation without requiring pre-programmed responses to every possible scenario. The closed-loop design is fundamental to achieving robust and adaptable robotic behavior.
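To make the loop concrete, here is a minimal sketch in Python. It assumes a toy one-dimensional robot (`SimRobot`) and replaces the LLM with a hypothetical rule-based function, `scripted_planner`; in the architecture described above, that call would instead be a prompt to the language model containing the goal, the latest observation, and the history of previous actions and their feedback. None of these names come from the paper.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional

Action = tuple  # (action_name, argument)


@dataclass
class SimRobot:
    """Toy robot on a 1-D track (hypothetical stand-in for a real platform)."""
    position: int = 0
    holding: Optional[str] = None
    objects: dict = field(default_factory=lambda: {"cup": 3})

    def observe(self) -> dict:
        # Sensor stand-in: return a copy of what the robot can perceive.
        return {"position": self.position, "holding": self.holding,
                "objects": dict(self.objects)}

    def execute(self, name: str, arg) -> str:
        # Apply one action and return textual feedback for the planner.
        if name == "move_to":
            self.position = arg
            return f"moved to {arg}"
        if name == "pick":
            if self.objects.get(arg) == self.position:
                del self.objects[arg]
                self.holding = arg
                return f"picked {arg}"
            return f"failed: {arg} is not at {self.position}"
        return f"failed: unknown action {name}"


def scripted_planner(goal: str, obs: dict, history: list) -> Optional[Action]:
    """Hypothetical stand-in for the LLM: choose the next action, or None to stop."""
    target = goal.split()[-1]  # "fetch cup" -> "cup"
    if obs["holding"] == target:
        return None  # goal satisfied
    if target not in obs["objects"]:
        return None  # target not perceived; give up
    location = obs["objects"][target]
    if obs["position"] != location:
        return ("move_to", location)
    return ("pick", target)


def run_closed_loop(goal: str, robot: SimRobot,
                    planner: Callable[[str, dict, list], Optional[Action]],
                    max_steps: int = 10) -> list:
    """Perceive, plan, act, and feed the result back until the planner stops."""
    history = []
    for _ in range(max_steps):
        obs = robot.observe()                # perceive
        step = planner(goal, obs, history)   # plan (an LLM call in the real system)
        if step is None:
            break
        feedback = robot.execute(*step)      # act
        history.append((step, feedback))     # feedback informs the next plan
    return history
```

Calling `run_closed_loop("fetch cup", SimRobot(), scripted_planner)` yields two steps: a move to position 3, then a successful pick. Because the planner is re-queried after every action, the outcome of each step (including a failure) appears in the next observation and history, which is what allows the planner to react to it.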

The Planner and Executor agent is the central component for task completion, bridging the gap between high-level natural-language goals and low-level robotic actions. It receives a goal expressed in natural language and, using the Domain Model (a structured representation of the environment, its objects, and their relationships), formulates an Action Sequence: an ordered series of discrete steps. The Domain Model supplies context and constraints, so that the generated plans are feasible and logically consistent with the current environment. Each Action Sequence specifies the concrete steps the robot must take to achieve the stated goal, translating abstract instructions into executable commands.
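The article does not specify the Domain Model's format. One common choice, assumed here purely for illustration, is a STRIPS-style representation in which the state is a set of facts and each action has preconditions, added facts, and deleted facts. The sketch below shows how such a model lets a proposed Action Sequence be checked before execution; `make_action`, `validate_plan`, and all action and fact names are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Action:
    """STRIPS-style action schema (an assumed format, not the paper's)."""
    pre: frozenset
    add: frozenset
    delete: frozenset


def make_action(pre, add, delete) -> Action:
    return Action(frozenset(pre), frozenset(add), frozenset(delete))


# Hypothetical domain model: one robot, a table, a shelf, and a cup.
DOMAIN = {
    "move(table)": make_action([], ["at(table)"], ["at(shelf)"]),
    "move(shelf)": make_action([], ["at(shelf)"], ["at(table)"]),
    "pick(cup)": make_action(["at(table)", "on(cup,table)", "hand_empty"],
                             ["holding(cup)"], ["on(cup,table)", "hand_empty"]),
    "place(cup,shelf)": make_action(["at(shelf)", "holding(cup)"],
                                    ["on(cup,shelf)", "hand_empty"], ["holding(cup)"]),
}


def validate_plan(state: set, plan: list, goal: set) -> tuple[bool, Optional[int], set]:
    """Simulate an Action Sequence against the domain model.

    Returns (goal_reached, index_of_first_invalid_step_or_None, final_state)."""
    state = set(state)
    for i, name in enumerate(plan):
        action = DOMAIN.get(name)
        if action is None or not action.pre <= state:
            return False, i, state  # unknown action or unmet precondition
        state = (state - action.delete) | action.add
    return goal <= state, None, state
```

Starting from `{"at(shelf)", "on(cup,table)", "hand_empty"}`, the sequence `move(table)`, `pick(cup)`, `move(shelf)`, `place(cup,shelf)` validates and reaches `on(cup,shelf)`, while a plan that begins with `pick(cup)` fails at index 0 because the robot is not at the table. Reporting the failing index gives the planner something specific to repair.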

The system’s functionality relies on the LLM’s ability to utilize “Tool Calling,” a mechanism enabling it to request actions from the robot via the “Robot Action API.” This API defines a standardized interface to the robot’s capabilities, such as movement, manipulation, and sensing. When the LLM determines a specific action is required to fulfill a task, it doesn’t directly control the robot; instead, it generates a structured request – a “tool call” – specifying the desired action and any necessary parameters. The system then translates this call into a command for the robot, executes it, and provides feedback to the LLM, allowing for iterative planning and execution based on the robot’s current state and the environment.
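The tool-calling pattern can be sketched under a few stated assumptions: the model emits a JSON object with a `name` and an `arguments` object (a shape similar to what common LLM tool-calling interfaces use, but not tied to any specific provider), and a dispatcher validates the call against a registry before invoking the robot. The tools `move_to` and `pick`, the `tool` decorator, and `dispatch` are hypothetical stand-ins for the Robot Action API.

```python
import json
from typing import Any, Callable

# Hypothetical Robot Action API registry: tool name -> function and typed parameters.
TOOLS: dict[str, dict[str, Any]] = {}


def tool(name: str, params: dict[str, type]):
    """Decorator registering a robot action as a callable tool."""
    def register(fn: Callable[..., str]) -> Callable[..., str]:
        TOOLS[name] = {"fn": fn, "params": params}
        return fn
    return register


@tool("move_to", {"location": str})
def move_to(location: str) -> str:
    return f"arrived at {location}"  # a real system would invoke navigation here


@tool("pick", {"target": str})
def pick(target: str) -> str:
    return f"grasped {target}"  # a real system would invoke grasp planning here


def dispatch(tool_call_json: str) -> dict:
    """Validate and execute one model-emitted tool call; return feedback for the model."""
    try:
        call = json.loads(tool_call_json)
    except json.JSONDecodeError as err:
        return {"ok": False, "error": f"invalid JSON: {err.msg}"}
    if not isinstance(call, dict) or not isinstance(call.get("arguments", {}), dict):
        return {"ok": False, "error": "expected an object with an 'arguments' object"}
    name, args = call.get("name"), call.get("arguments", {})
    spec = TOOLS.get(name) if isinstance(name, str) else None
    if spec is None:
        return {"ok": False, "error": f"unknown tool: {name}"}
    for param, typ in spec["params"].items():
        if not isinstance(args.get(param), typ):
            return {"ok": False, "error": f"{name}: '{param}' must be a {typ.__name__}"}
    extra = sorted(set(args) - set(spec["params"]))
    if extra:
        return {"ok": False, "error": f"{name}: unexpected arguments {extra}"}
    return {"ok": True, "result": spec["fn"](**args)}
```

Errors are returned as data rather than raised, so a malformed or impossible call becomes feedback the model can see and correct on its next turn instead of crashing the control loop.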

Experiments on indoor mobile manipulation and outdoor agricultural navigation show that the Agentic Control Architecture supports functional task completion across different robotic platforms. According to the reported metrics, this is a statistically significant improvement in autonomous operation over prior state-of-the-art methods, with a completion rate of 85% on a standardized set of indoor manipulation tasks and 72% on outdoor navigation and object retrieval scenarios. These figures show that the architecture transfers across platforms, but they are not by themselves evidence of robustness: the same experiments reveal substantial fragility in execution, including sensitivity to how instructions and prompts are specified.

The Sensory Web: Perceiving and Acting in a Dynamic World

The robotic system relies on both object detection and navigation capabilities to establish environmental awareness and self-localization. Object detection employs sensors – typically cameras and depth sensors – to identify and classify objects within the robot’s workspace. Simultaneously, the navigation system utilizes sensor data and algorithms, such as Simultaneous Localization and Mapping (SLAM), to construct a map of the environment and determine the robot’s position within that map. This integration is fundamental because it provides the necessary contextual information to translate high-level plans into concrete actions; without accurate perception of objects and location, the robot cannot effectively interact with or manipulate its surroundings, or reliably execute tasks grounded in the physical world.
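A small but essential piece of this integration is expressing detections in the same frame as the map. The sketch assumes a planar robot whose pose `(x, y, theta)` comes from SLAM, and detections already expressed in the robot's base frame (camera-to-base calibration applied). The names `Pose2D`, `robot_to_map`, and `ground_detections` are illustrative, not taken from the paper.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose2D:
    """Robot pose in the map frame, e.g. as estimated by SLAM (assumed planar)."""
    x: float      # metres
    y: float      # metres
    theta: float  # heading in radians, counter-clockwise from the map x-axis


def robot_to_map(pose: Pose2D, px: float, py: float) -> tuple[float, float]:
    """Transform a point from the robot base frame into the map frame:
    rotate by the heading, then translate by the robot position."""
    c, s = math.cos(pose.theta), math.sin(pose.theta)
    return (pose.x + c * px - s * py, pose.y + s * px + c * py)


def ground_detections(pose: Pose2D, detections: list) -> dict:
    """Map each (label, px, py) detection in the robot frame to map coordinates."""
    return {label: robot_to_map(pose, px, py) for label, px, py in detections}
```

For a robot at `(2.0, 1.0)` facing along the map's +y axis (`theta = math.pi / 2`), a cup detected 1.5 m straight ahead lands at approximately `(2.0, 2.5)` in the map frame, where the planner can relate it to other mapped objects.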

Robots such as Mobipick and Valdemar utilize object detection to identify items within their operational environment. This information is then fed into a motion planning system, which calculates a sequence of movements to reach a target object while avoiding obstacles. Motion planning algorithms consider the robot’s kinematic and dynamic constraints, as well as the identified obstacles, to generate collision-free paths. These paths are not static; the system continuously replans as new obstacles are detected or the environment changes, ensuring safe and efficient navigation to the desired object.
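The paper does not say which motion planners Mobipick and Valdemar use, and manipulators typically rely on sampling-based planners in joint space. As the simplest illustration of plan-then-replan, the sketch below swaps in grid-based A* for 2-D navigation and replans from the current cell whenever a newly sensed obstacle blocks the remaining path. `astar`, `follow_with_replanning`, and the `reveal` callback (which simulates sensing) are hypothetical.

```python
import heapq

Cell = tuple[int, int]


def astar(grid: list[list[int]], start: Cell, goal: Cell):
    """4-connected A* on an occupancy grid (1 = obstacle); returns a list of cells or None."""
    rows, cols = len(grid), len(grid[0])

    def h(cell: Cell) -> int:
        # Manhattan distance: admissible for unit-cost 4-connected moves.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    frontier = [(h(start), 0, start)]
    came_from = {start: None}
    g = {start: 0}
    while frontier:
        _, cost, cell = heapq.heappop(frontier)
        if cell == goal:
            path = []
            while cell is not None:
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        if cost > g[cell]:
            continue  # stale entry superseded by a cheaper one
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                new_cost = cost + 1
                if new_cost < g.get(nxt, float("inf")):
                    g[nxt] = new_cost
                    came_from[nxt] = cell
                    heapq.heappush(frontier, (new_cost + h(nxt), new_cost, nxt))
    return None


def follow_with_replanning(grid, start: Cell, goal: Cell, reveal):
    """Execute a path one cell at a time; `reveal(step)` returns newly sensed
    obstacle cells, and the robot replans from its current cell if they block the path."""
    pos, walked, step = start, [start], 0
    path = astar(grid, start, goal)
    while path is not None and pos != goal:
        for r, c in reveal(step):
            grid[r][c] = 1  # update the map with the new obstacle
        if any(grid[r][c] for r, c in path[1:]):
            path = astar(grid, pos, goal)  # remaining path is blocked: replan
            if path is None:
                return None
        path = path[1:]
        pos = path[0]
        walked.append(pos)
        step += 1
    return walked if pos == goal else None
```

On an empty 3×3 grid, a robot at `(0, 0)` first plans straight to `(0, 2)`; if an obstacle appears at `(0, 1)` before the first move, it replans around it and arrives after four moves. Replanning from scratch is the simplest policy; incremental planners such as D* Lite reuse earlier search effort when changes are frequent.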

The Planner and Executor module utilizes Event Checking to dynamically respond to unforeseen circumstances during operation. This involves continuously monitoring for discrepancies between the planned trajectory and incoming sensor data, triggering replanning or corrective actions when necessary. Complementing this is the Semantic State Snapshot, a regularly updated internal representation of the environment incorporating identified objects, their properties, and spatial relationships. This snapshot serves as the basis for all planning and execution, ensuring consistency and allowing the system to adapt to changes in the environment while maintaining a coherent understanding of its surroundings. The integration of Event Checking and the Semantic State Snapshot enables robust performance in dynamic and unpredictable environments.
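A minimal sketch of how a snapshot and an event check might fit together follows. The fields of `SemanticStateSnapshot` (object-to-location labels, robot facts, a capture timestamp), the `check_events` signature, and the staleness threshold are all assumptions made for illustration; the paper's actual representation may be richer.

```python
import time
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class SemanticStateSnapshot:
    """Hypothetical snapshot: object -> location label, robot facts, capture time."""
    objects: dict
    robot: dict
    stamp: float = field(default_factory=time.time)


def check_events(expected: dict, snap: SemanticStateSnapshot,
                 now: Optional[float] = None, max_age: float = 2.0) -> list:
    """Compare the object locations an action should have produced with the snapshot.

    Returns readable discrepancy events; an empty list means execution is on track."""
    now = time.time() if now is None else now
    events = []
    if now - snap.stamp > max_age:
        events.append(f"snapshot is {now - snap.stamp:.1f}s old")
    for obj, loc in expected.items():
        seen = snap.objects.get(obj)
        if seen is None:
            events.append(f"{obj} not detected (expected at {loc})")
        elif seen != loc:
            events.append(f"{obj} observed at {seen}, expected at {loc}")
    return events
```

Each event string is phrased so it can be passed straight back to the planner as the trigger and context for replanning. The staleness check reflects the point that a snapshot is only a sound basis for planning while it is regularly updated.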

The robot’s operational robustness in complex environments depends on this continuous cycle of perception, planning, and action. Sensory input is used for environmental perception and self-localization; the perceived state informs a planning stage in which the robot chooses actions toward its goals, including obstacle avoidance and task execution; the robot executes those actions; and the resulting changes in the environment feed back into the next perception step. This iteration lets the robot adapt to dynamic conditions and keep making progress when it meets unexpected events or incomplete information, which is what operation in unstructured settings requires.

The Echo of Intelligence: Self-Critique and the Promise of True Autonomy

A critical component of robust robotic performance lies in continuous self-assessment, and the ‘Goal Completion Critic’ agent embodies this principle. This system doesn’t simply evaluate whether a task is completed, but actively analyzes how it was achieved, providing detailed feedback on the robot’s actions. By identifying inefficiencies, potential errors, or deviations from optimal strategies, the critic enables the robot to refine its planning processes in real-time. This adaptive capacity is particularly crucial when encountering unforeseen challenges – unexpected obstacles, imperfect grasps, or dynamic environments – allowing the robot to adjust its approach and maintain progress towards its objective. The agent essentially facilitates a cycle of performance evaluation and plan modification, fostering resilience and improving the robot’s ability to handle complex, real-world scenarios.
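The article presents the Goal Completion Critic as an agent; the sketch below does not attempt to reproduce its reasoning. To show the shape of its output instead, it substitutes a deterministic checker that tests whether the goal facts hold in the final state and also comments on how they were reached (failed actions, redundant repeats). `Verdict`, `critique`, and the fact encoding are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Verdict:
    complete: bool
    feedback: list


def critique(goal: dict, final_state: dict, history: list) -> Verdict:
    """Deterministic stand-in for an agentic critic (hypothetical).

    goal and final_state map fact names to values; history is a list of
    (action_name, succeeded) pairs in execution order."""
    unmet = [k for k, v in goal.items() if final_state.get(k) != v]
    feedback = [f"goal not met: {k} should be {goal[k]!r}, is {final_state.get(k)!r}"
                for k in unmet]
    failed = [name for name, ok in history if not ok]
    if failed:
        feedback.append(f"{len(failed)} failed action(s): {', '.join(failed)}")
    succeeded = set()
    for name, ok in history:
        if ok and name in succeeded:
            feedback.append(f"redundant repeat of {name}")  # possible inefficiency
        if ok:
            succeeded.add(name)
    return Verdict(complete=not unmet, feedback=feedback)
```

A pick that failed once before succeeding still completes the goal, but the feedback records the failure. That "how, not just whether" signal is what the planner can use to adjust its strategy.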

The robot’s ability to carry out complex tasks also relies on practical shortcuts and manipulation techniques. Heuristics, or rules of thumb, let the system rapidly assess candidate plans and prioritize those most likely to succeed, which improves planning efficiency. Complementing this, a grasp synthesis module does not simply attempt to grab objects; it computes a hand configuration and approach angle intended to produce a secure, reliable hold. Together, informed planning and careful manipulation raise the likelihood that actions succeed even when object positions or environmental conditions vary.
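The article does not describe how its grasp synthesis works. One classic heuristic for parallel-jaw grippers, shown here only as an illustration, is the planar antipodal test: with Coulomb friction coefficient `mu`, two frictional point contacts achieve force closure in 2-D if and only if the line joining them lies strictly inside both friction cones, whose half-angle is `atan(mu)`. The sketch scores candidates by that angular margin and ranks them. Contact points and inward-pointing normals are assumed to come from an upstream perception step, and all function names are hypothetical.

```python
import math

Vec = tuple[float, float]


def _angle(a: Vec, b: Vec) -> float:
    """Unsigned angle between two 2-D vectors, in radians."""
    norm = math.hypot(*a) * math.hypot(*b)
    if norm == 0.0:
        return math.pi  # degenerate input: treat as maximally misaligned
    cos_angle = (a[0] * b[0] + a[1] * b[1]) / norm
    return math.acos(max(-1.0, min(1.0, cos_angle)))


def antipodal_margin(p1: Vec, n1: Vec, p2: Vec, n2: Vec, mu: float) -> float:
    """Friction-cone half-angle minus the worst misalignment of the contact line.

    n1 and n2 are inward-pointing surface normals; a positive margin means the line
    p1 -> p2 lies strictly inside both friction cones (planar force closure)."""
    d = (p2[0] - p1[0], p2[1] - p1[1])
    worst = max(_angle(d, n1), _angle((-d[0], -d[1]), n2))
    return math.atan(mu) - worst


def rank_grasps(candidates: list, mu: float = 0.5) -> list:
    """Keep antipodal candidates (p1, n1, p2, n2) and sort them best-first by margin."""
    scored = [(antipodal_margin(*cand, mu), cand) for cand in candidates]
    scored.sort(key=lambda item: item[0], reverse=True)
    return [cand for margin, cand in scored if margin > 0]
```

For a box gripped squarely on opposite faces, the margin equals `atan(mu)`. Tilting the line between the contacts eats into that margin, and once the tilt exceeds the cone half-angle the candidate is discarded.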

The Planner and Executor also includes a reflective step: it does not simply act, but verbalizes its reasoning as it formulates and executes plans. This output is not merely descriptive; it is a debugging aid. By articulating the ‘why’ behind each action (the goal being pursued, the options considered, and the predicted outcome), the system exposes its decision-making to scrutiny and makes logical errors or flawed assumptions easier to pinpoint. These traces support iterative refinement of prompts and planning logic, improving reliability and making the robot’s behavior interpretable rather than a set of opaque, pre-programmed responses.

Qualitative evaluation indicates that the robot can sustain goal-directed behavior over longer, multi-step tasks rather than merely completing individual steps, and that it can collaborate with humans through natural-language interaction. This stems from the integrated feedback mechanisms and adaptive planning strategies, which allow it to recover from some unexpected obstacles and adjust to dynamic environments. These observations point toward real-world applications requiring sustained autonomy and collaborative problem-solving, although execution remains sensitive to how instructions and prompts are specified.

The pursuit of agentic AI, as detailed in this work, reveals a truth about complex systems: they aren’t constructed, but cultivated. The architecture presented, a large language model translating intention into action, functions less as a rigid framework and more as a garden, responding to stimuli and growing in unpredictable ways. As Vinton Cerf observed, “The Internet is not just a network of networks, it’s a network of people.” This echoes the core concept of semantic state within the agentic system; the robot’s understanding isn’t simply data, but a representation of its interactions and experiences, a continuously evolving ‘social’ landscape. The fragility identified in the research isn’t a flaw, but a consequence of life itself: a system that adapts is also vulnerable, a truth acknowledged by any gardener.

What Lies Ahead?

The architecture detailed herein, while demonstrating a capacity for adaptable action, merely relocates fragility. The observed successes stem from leveraging the statistical priors embedded within large language models: a borrowed stability, not an inherent one. Future iterations will inevitably encounter scenarios where these priors fail, revealing the system’s underlying lack of genuine understanding. The current reliance on semantic state as a bridge between language and action is a temporary convenience; a map is not the territory, and state, however richly described, remains an abstraction.

The pursuit of “robustness” through ever-larger models or more complex training regimes is a predictable, and ultimately futile, exercise. Chaos isn’t failure; it’s nature’s syntax. The focus should instead shift towards architectures that expect failure, systems designed to degrade gracefully and to learn from their inevitable errors. A guarantee is just a contract with probability; true progress lies in quantifying, and accommodating, uncertainty.

The real challenge isn’t building an intelligent agent, but cultivating an ecosystem capable of sustaining one. Stability is merely an illusion that caches well. The future of agentic robotics will not be defined by increasingly sophisticated control algorithms, but by the emergence of unexpected behaviors, and the capacity to learn from them.

Original article: https://arxiv.org/pdf/2602.13081.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-16 08:58