Author: Denis Avetisyan

Researchers have developed a novel framework that empowers robots to tackle complex manipulation tasks without prior training by combining high-level reasoning with real-time feedback.

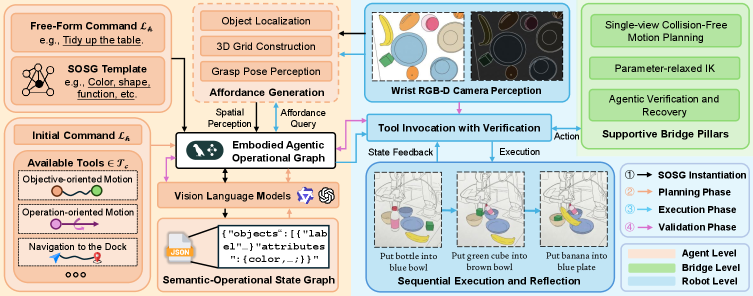

UniManip utilizes a bi-level agentic operational graph to achieve robust zero-shot performance and recover from failures in complex environments through hierarchical planning and closed-loop reflection.

Achieving truly general-purpose robotic manipulation remains a challenge, as existing approaches struggle to bridge semantic understanding with robust physical execution in dynamic environments. To address this, the authors introduce ‘UniManip: General-Purpose Zero-Shot Robotic Manipulation with Agentic Operational Graph’, a framework that unifies high-level reasoning and low-level control through a bi-level agentic operational graph, enabling robust zero-shot performance and autonomous recovery from failures. The approach improves on state-of-the-art methods, with success rates 22.5 and 25.0 percentage points higher than Vision-Language-Action models and hierarchical planners, respectively, and it transfers to mobile manipulation without retraining. Could this agentic approach pave the way for robots capable of adapting to unforeseen circumstances and seamlessly interacting with the world around them?

The Inherent Limitations of Empiricism in Robotics

Conventional robotic systems often exhibit a frustrating rigidity when confronted with even slight deviations from their programmed parameters. Each new object, altered lighting condition, or unfamiliar environment typically demands a complete retraining process, a significant limitation in practical applications. This dependence on meticulously curated datasets and task-specific programming stems from a lack of generalized understanding; robots excel at repeating learned actions but struggle to extrapolate knowledge to novel situations. The consequence is a system ill-equipped for the unpredictable nature of real-world environments, necessitating constant human intervention and hindering the widespread deployment of robotics beyond highly structured settings like factory assembly lines.

The practical application of robotics faces a significant hurdle due to the extensive, task-specific programming currently required for operation. Robots excel within tightly controlled environments, but their performance degrades rapidly when confronted with even slight deviations from pre-programmed parameters. This dependence on specialized code dramatically restricts their usefulness in the unpredictable conditions of everyday life – from navigating cluttered homes to assisting in rapidly changing industrial settings. Consequently, the promise of widespread robotic assistance remains largely unrealized, as the cost and complexity of adapting robots to new situations often outweigh the benefits. This limitation isn’t merely a technical challenge; it’s a fundamental barrier to broader adoption, preventing robots from becoming truly versatile and integrated components of human activity.

The difficulty in creating truly versatile robots stems from replicating human cognitive flexibility – the capacity to apply learned skills to novel situations without extensive relearning. Unlike current robotic systems, which excel at pre-programmed tasks within rigidly defined parameters, humans effortlessly transfer knowledge across domains, recognizing patterns and adapting strategies on the fly. This inherent adaptability relies on a complex interplay of perception, reasoning, and motor control, allowing for generalization beyond specific training data. Replicating this capability in robotics demands moving beyond algorithms focused on precise execution of known commands, and instead prioritizing systems capable of abstracting underlying principles and applying them to unfamiliar objects and environments – a crucial step towards robots that can truly operate autonomously in the real world.

The limitations of current robotics are increasingly apparent as systems struggle beyond highly controlled, pre-programmed scenarios; a fundamental shift is therefore needed towards machines capable of zero-shot manipulation. This refers to a robot’s ability to perform novel tasks – manipulating previously unseen objects or navigating unfamiliar environments – without any task-specific training data. Rather than relying on extensive re-programming, these systems aim to leverage existing knowledge and generalize learned skills to new situations, mirroring human adaptability. UniManip represents a significant step towards this goal: its architecture is designed to let a robot carry out new tasks without task-specific training, pointing to a future where robots are versatile enough to operate in dynamic, real-world settings.

A Cognitive Architecture Rooted in Biological Principles

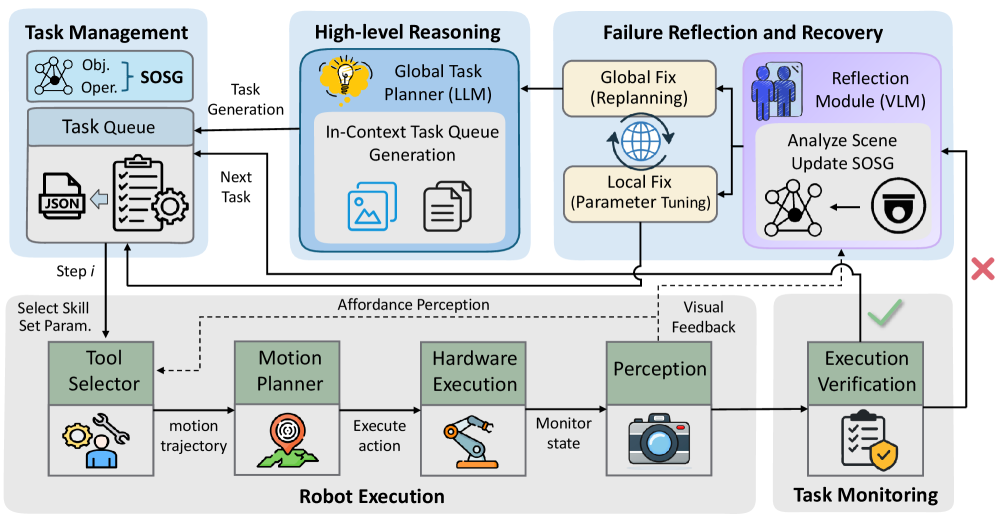

The Bi-level Agentic Operational Graph (AOG) functions as the core cognitive architecture of UniManip, deliberately modeled after the human brain’s distinction between procedural and episodic memory. Procedural memory, responsible for learned skills and automated tasks, is represented through the AOG’s operational component, enabling efficient execution of known procedures. Simultaneously, the episodic memory component captures and integrates real-time sensory data and experiences, creating a contextual understanding of the current environment. This bi-level structure allows UniManip to not only perform pre-programmed actions but also to adapt its behavior based on unique, previously unencountered situations, mirroring the human capacity for both routine execution and novel problem-solving.

The Episodic Memory layer of UniManip’s Bi-level Agentic Operational Graph functions as the system’s primary interface with its immediate environment. This layer continuously processes real-time sensory data – including visual, auditory, and proprioceptive inputs – to construct a dynamic, state-based representation of the current situation. Crucially, this representation isn’t static; the Episodic Memory layer is designed for continuous adaptation, updating its internal model as new sensory information is received and the environment changes. This adaptive capacity allows UniManip to respond effectively to unforeseen circumstances and maintain operational coherence in non-static conditions, forming the foundation for all higher-level reasoning and action planning.

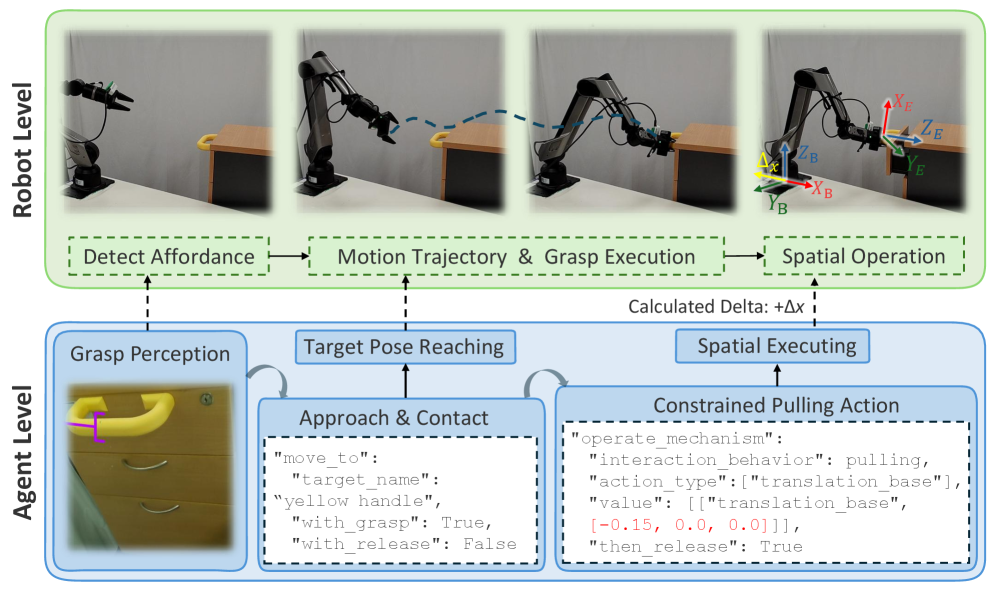

The Agentic Logic Layer within UniManip functions as the system’s central reasoning and control module. It is responsible for decomposing complex tasks into sequential, executable operations, and for managing the overall execution flow. This layer employs a hierarchical planning approach, enabling it to anticipate potential issues and dynamically adjust strategies based on feedback from the Episodic Memory layer. Crucially, the Agentic Logic Layer also incorporates error recovery mechanisms, allowing UniManip to detect failures during task execution, diagnose the root cause, and implement corrective actions – including re-planning or requesting further sensory input – to maintain operational stability and achieve desired outcomes.
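The detect-and-recover behavior described above can be illustrated with a small sketch. It is not UniManip's implementation: the `Step` type, the `execute_with_recovery` function, and the retry-based recovery policy are hypothetical names and choices made for illustration, and a real system would re-plan or request new observations rather than simply retrying.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Step:
    """One executable operation; `run` returns True on success (hypothetical wrapper)."""
    name: str
    run: Callable[[], bool]


def execute_with_recovery(steps: List[Step], max_retries: int = 2) -> List[str]:
    """Run steps in order; retry a failed step up to `max_retries` times, then abort."""
    log: List[str] = []
    for step in steps:
        for _attempt in range(max_retries + 1):
            ok = step.run()
            log.append(f"{step.name}:{'ok' if ok else 'fail'}")
            if ok:
                break  # step succeeded, move on to the next one
        else:
            # every attempt failed: stop instead of continuing blindly
            log.append(f"{step.name}:abort")
            return log
    return log


def make_flaky(failures: int) -> Callable[[], bool]:
    """Build a stand-in skill that fails `failures` times before succeeding."""
    state = {"left": failures}

    def run() -> bool:
        if state["left"] > 0:
            state["left"] -= 1
            return False
        return True

    return run


plan = [
    Step("locate", lambda: True),
    Step("grasp", make_flaky(1)),   # first grasp attempt fails
    Step("place", lambda: True),
]
log = execute_with_recovery(plan)
print(log)
```

The point of the sketch is the control flow: execution is checked after every step, and a failure changes what happens next instead of silently propagating.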

The Semantic-Operational State Graph (SOSG) functions as UniManip’s internal world representation, encoding both static knowledge and dynamic states. This graph-based structure allows the system to represent objects, their properties, and relationships, alongside actionable operational states. Nodes within the SOSG define entities and conditions, while edges represent possible actions and their preconditions, enabling UniManip to evaluate potential outcomes before execution. This facilitates informed decision-making by allowing the system to simulate actions within the SOSG and select the optimal path towards task completion. The SOSG’s ability to track state changes and predict consequences is also critical for robust action planning and error recovery, allowing UniManip to adapt to unexpected events and maintain task progress.
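One way to picture a graph whose edges are actions guarded by preconditions is a STRIPS-style state search. The sketch below is a simplification under that assumption, not the paper's data structure: the `Action` type, the fact names, and the breadth-first `plan` function are all hypothetical. States are sets of facts, and an action is applicable only when its preconditions hold, so candidate sequences can be simulated before anything is executed.

```python
from collections import deque
from dataclasses import dataclass
from typing import FrozenSet, List, Optional


@dataclass(frozen=True)
class Action:
    """An edge in the state graph: applicable when `pre` holds, then edits the state."""
    name: str
    pre: FrozenSet[str]
    add: FrozenSet[str]
    delete: FrozenSet[str]


def plan(start: FrozenSet[str], goal: FrozenSet[str],
         actions: List[Action]) -> Optional[List[str]]:
    """Breadth-first search over simulated states; returns a shortest action sequence."""
    queue = deque([(start, [])])
    seen = {start}
    while queue:
        state, path = queue.popleft()
        if goal <= state:              # all goal facts are satisfied
            return path
        for action in actions:
            if action.pre <= state:    # preconditions hold in this state
                nxt = (state - action.delete) | action.add
                if nxt not in seen:
                    seen.add(nxt)
                    queue.append((nxt, path + [action.name]))
    return None                        # goal unreachable with these actions


fs = frozenset  # shorthand
actions = [
    Action("open_drawer", fs({"drawer_closed", "hand_empty"}),
           fs({"drawer_open"}), fs({"drawer_closed"})),
    Action("pick_cup", fs({"drawer_open", "cup_in_drawer", "hand_empty"}),
           fs({"holding_cup"}), fs({"cup_in_drawer", "hand_empty"})),
    Action("place_cup", fs({"holding_cup"}),
           fs({"cup_on_table", "hand_empty"}), fs({"holding_cup"})),
]
start = fs({"drawer_closed", "cup_in_drawer", "hand_empty"})
result = plan(start, fs({"cup_on_table"}), actions)
print(result)
```

Because the search runs on the symbolic state only, an infeasible request (for example a goal no action can produce) is detected as `None` before the robot moves.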

![This bi-level agentic operational graph utilizes an AI agent (ALG) with conditional directed edges operating above a structured semantic understanding of the environment (SOSG), enabling a nuanced interaction with its surroundings.](https://arxiv.org/html/2602.13086v1/x2.png)

Robustness Through Integrated Planning and Execution

UniManip’s operational safety and reliability are achieved through the integrated implementation of a Safety-Aware Planner and Collision-Free Planning (CFP) algorithms. The Safety-Aware Planner proactively incorporates safety constraints into the trajectory generation process, minimizing potential risks during movement. Complementing this, CFP focuses on constructing paths that strictly avoid collisions with known obstacles in the robot’s workspace. These methods operate in conjunction to ensure that planned trajectories are both feasible and safe, preventing unintended contact and safeguarding both the robot and its environment. This dual approach is foundational to UniManip’s ability to operate autonomously in dynamic and potentially unpredictable settings.
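As a rough illustration of combining collision avoidance with a safety constraint, the sketch below rejects any trajectory whose waypoints come closer to an obstacle than a chosen margin. The spherical obstacle model, the 5 cm margin, and the function names are assumptions for the example; it checks waypoints only, whereas a real planner would also check the segments between them and the full robot geometry.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float, float]
Sphere = Tuple[Point, float]  # (center, radius): a simplified obstacle model


def min_clearance(path: List[Point], obstacles: List[Sphere]) -> float:
    """Smallest distance from any waypoint to any obstacle surface (negative = inside)."""
    return min(math.dist(p, center) - radius
               for p in path
               for center, radius in obstacles)


def is_safe(path: List[Point], obstacles: List[Sphere], margin: float = 0.05) -> bool:
    """Accept a path only if every waypoint keeps at least `margin` metres of clearance."""
    return min_clearance(path, obstacles) >= margin


obstacles = [((0.5, 0.0, 0.2), 0.1)]                       # one sphere in the workspace
direct = [(0.0, 0.0, 0.2), (0.5, 0.0, 0.2), (1.0, 0.0, 0.2)]  # passes through the sphere
detour = [(0.0, 0.0, 0.2), (0.5, 0.0, 0.5), (1.0, 0.0, 0.2)]  # lifts over it

print(is_safe(direct, obstacles), is_safe(detour, obstacles))
```

Separating `min_clearance` from `is_safe` keeps the geometric test and the safety policy apart, so the margin can be tightened or relaxed without touching the collision check.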

Single-View Conservative Reconstruction allows UniManip to establish a functional environmental understanding despite reliance on limited visual data. This is achieved by generating multiple plausible 3D reconstructions from a single RGB image and conservatively selecting the intersection of these reconstructions as the operative model. This approach prioritizes accuracy by minimizing false positives, even at the cost of potential false negatives, resulting in a more reliable representation of the workspace for planning and execution. The system avoids overestimation of free space, which is crucial for safe manipulation in cluttered environments and minimizes collisions.
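The conservative selection can be sketched on a voxel grid. The reading assumed here is that the "intersection" is taken over free space: a voxel counts as free only if every candidate reconstruction agrees it is empty, which is the same as treating the union of occupied voxels as obstacles. The voxel representation and the function name are hypothetical, and the source does not spell out this detail.

```python
from typing import List, Set, Tuple

Voxel = Tuple[int, int, int]


def conservative_free_space(workspace: Set[Voxel],
                            hypotheses: List[Set[Voxel]]) -> Set[Voxel]:
    """Return voxels that no reconstruction hypothesis marks as occupied."""
    occupied: Set[Voxel] = set().union(*hypotheses)  # occupied in at least one hypothesis
    return workspace - occupied


workspace = {(0, 0, 0), (1, 0, 0), (2, 0, 0)}
hypothesis_a = {(1, 0, 0)}                 # reconstruction A: one occupied voxel
hypothesis_b = {(1, 0, 0), (2, 0, 0)}      # reconstruction B: a larger object
free = conservative_free_space(workspace, [hypothesis_a, hypothesis_b])
print(free)
```

Under this reading the planner may give up some usable space, here voxel `(2, 0, 0)`, but it never plans through a region that any plausible reconstruction considers occupied.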

Relaxed Inverse Kinematics (RIK) addresses the challenges of generating feasible robot trajectories in complex poses and environments. Traditional inverse kinematics methods often fail when encountering kinematic singularities or obstacles, leading to trajectory discontinuities or impossible configurations. RIK mitigates these issues by formulating the inverse kinematics problem as an optimization process that prioritizes finding a solution that approximates the desired pose while adhering to kinematic limits and avoiding collisions. This relaxation allows for the generation of valid, albeit potentially slightly adjusted, trajectories even in scenarios where a perfect solution is unattainable, improving the robot’s ability to navigate constrained workspaces and maintain continuous motion.
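The idea of treating inverse kinematics as an optimization can be shown on a two-link planar arm. This is a generic least-squares sketch, not the RIK formulation from the paper: the link lengths, joint limits, step size, and plain gradient descent are assumptions, and collision terms are omitted. The solver minimizes the squared position error while clamping joints to their limits, so it returns the closest achievable pose when the target is out of reach instead of failing.

```python
import math
from typing import Tuple

L1, L2 = 1.0, 1.0                 # link lengths (hypothetical)
LIMITS = (-math.pi, math.pi)      # joint limits applied to both joints


def fk(q1: float, q2: float) -> Tuple[float, float]:
    """Forward kinematics: end-effector position of the planar two-link arm."""
    return (L1 * math.cos(q1) + L2 * math.cos(q1 + q2),
            L1 * math.sin(q1) + L2 * math.sin(q1 + q2))


def relaxed_ik(target: Tuple[float, float],
               q: Tuple[float, float] = (0.3, 0.3),
               step: float = 0.1,
               iters: int = 2000) -> Tuple[Tuple[float, float], float]:
    """Gradient descent on 0.5 * ||fk(q) - target||^2 with joint-limit clamping.

    Returns the joint angles and the remaining position error.
    """
    q1, q2 = q
    for _ in range(iters):
        x, y = fk(q1, q2)
        ex, ey = x - target[0], y - target[1]
        # Jacobian entries of fk with respect to (q1, q2)
        j11 = -L1 * math.sin(q1) - L2 * math.sin(q1 + q2)
        j21 = L1 * math.cos(q1) + L2 * math.cos(q1 + q2)
        j12 = -L2 * math.sin(q1 + q2)
        j22 = L2 * math.cos(q1 + q2)
        # gradient of the cost is J^T * error
        g1 = j11 * ex + j21 * ey
        g2 = j12 * ex + j22 * ey
        q1 = min(max(q1 - step * g1, LIMITS[0]), LIMITS[1])
        q2 = min(max(q2 - step * g2, LIMITS[0]), LIMITS[1])
    x, y = fk(q1, q2)
    return (q1, q2), math.hypot(x - target[0], y - target[1])


_, err_reachable = relaxed_ik((1.0, 1.0))     # inside the workspace
_, err_unreachable = relaxed_ik((3.0, 0.0))   # one unit beyond full extension
print(err_reachable, err_unreachable)
```

For the reachable target the residual error shrinks toward zero; for the target one unit past the arm's two-unit reach, the arm stretches toward it and the solver reports the remaining gap, which a caller can compare against a tolerance to decide whether the adjusted pose is acceptable.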

UniManip’s adaptability is achieved through Closed-Loop Reflection, a process of continuous monitoring during task execution. This system incorporates Failure Detection and Recovery (FDR) mechanisms to identify and address anomalies that arise during manipulation. When necessary, the system dynamically adjusts planned trajectories and, utilizing a Tool Selector, chooses the appropriate end-effector for the task. Evaluations demonstrate a 93.75% success rate on zero-shot manipulation tasks, representing a substantial performance increase compared to state-of-the-art Vision-Language-Action (VLA) methods, which achieve a 71.25% success rate under identical conditions.
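A short calculation makes the size of that gap explicit. It uses only the two success rates quoted above; the variable names are illustrative. The difference is an absolute gap in percentage points, which matches the 22.5 margin over VLA models quoted earlier in the article, while the relative improvement is larger.

```python
unimanip_rate = 93.75   # zero-shot success rate reported for UniManip (%)
vla_rate = 71.25        # success rate reported for VLA baselines (%)

# absolute gap, in percentage points
gap_points = unimanip_rate - vla_rate

# relative improvement over the baseline, in percent
relative_gain = gap_points / vla_rate * 100

print(f"{gap_points:.1f} percentage points, {relative_gain:.2f}% relative")
```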

Expanding the Horizon: Mobile Manipulation and Beyond

UniManip has transcended static robotic arm manipulation by integrating mobile navigation capabilities, allowing it to operate effectively within complex, dynamic environments. This advancement utilizes visual servoing, enabling the robot to visually track and approach target objects, coupled with an open-vocabulary detector that identifies objects even if they haven’t been explicitly programmed. The system doesn’t rely on pre-defined object models; instead, it leverages learned visual representations to understand and interact with a wide range of items in real-world scenarios. This combination allows UniManip to not only locate objects but also to plan and execute manipulation tasks while autonomously navigating cluttered spaces, representing a significant step toward more versatile and adaptable robotic systems.
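Visual servoing can be illustrated with a proportional control loop on the image-space error. This is a toy model, not the controller used in UniManip: it assumes each command shifts the detected target in the image by exactly `gain` times the current pixel error, and the gain, tolerance, and function name are hypothetical.

```python
from typing import List, Tuple


def servo_to_target(error_px: Tuple[float, float],
                    gain: float = 0.5,
                    tol: float = 1.0,
                    max_steps: int = 50) -> List[Tuple[float, float]]:
    """Drive the pixel offset between target and image center toward zero.

    Returns the history of errors, starting with the initial one.
    """
    ex, ey = error_px
    history = [(ex, ey)]
    for _ in range(max_steps):
        if (ex * ex + ey * ey) ** 0.5 <= tol:
            break                    # target is centered within tolerance
        # proportional command: move so the error shrinks by `gain` each step
        ex -= gain * ex
        ey -= gain * ey
        history.append((ex, ey))
    return history


history = servo_to_target((64.0, -32.0))
print(len(history) - 1, "steps, final error:", history[-1])
```

With a gain of 0.5 the error halves on every step, so an initial offset of about 72 pixels falls under the one-pixel tolerance after seven commands. In a real system the offset would come from the detector on each new frame rather than from a simulated update.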

Recent advancements in robotic manipulation have yielded a system capable of performing tasks without prior training – achieving an 80% success rate across 90 trials in rigorous ablation studies. This zero-shot capability extends to real-world complexity, with the system maintaining an impressive 82.5% success rate when operating in cluttered environments. These results demonstrate a significant leap in robotic adaptability, suggesting the system can effectively generalize learned behaviors to novel situations and objects without the need for task-specific programming or extensive datasets. The consistent performance across varied conditions highlights the robustness of the approach and its potential for deployment in dynamic, unpredictable settings where pre-programmed responses are insufficient.

The system’s remarkable adaptability stems from a synergistic integration of Vision-Language-Action (VLA) Models and Hierarchical Planning. This combination allows for the decomposition of complex manipulation tasks into a sequence of simpler, more manageable actions. VLA Models provide the crucial link between visual perception and linguistic instruction, interpreting human commands and identifying relevant objects within the environment. Hierarchical Planning then leverages this understanding to create a multi-level action plan, strategically organizing sub-tasks to achieve the overarching goal. By breaking down intricate procedures, the system can navigate uncertainty and respond effectively to dynamic changes, ultimately enabling robust performance in unstructured environments and facilitating interaction with a wide range of objects and scenarios.

A key strength of the UniManip framework lies in its precision; the system demonstrates a significantly reduced rate of mistakenly grasping unintended objects, achieving a distractor grasping rate of only 7.78% – a substantial improvement over existing methods. This enhanced ability to accurately identify and target the correct object stems from improved grounding within the system’s perception and action planning. Such reliability isn’t merely an academic achievement, but a critical requirement for real-world deployment; it unlocks potential applications in demanding fields like manufacturing, where precision is paramount, logistics, where efficient object handling is essential, and even healthcare, where delicate manipulation requires unwavering accuracy. This inherent flexibility and robustness positions UniManip as a versatile solution ready to address a wide spectrum of automation challenges.

![The UniManip framework successfully enables a mobile robot with a 65-degree-of-freedom arm to navigate an office environment and perform manipulation tasks at multiple docking points.](https://arxiv.org/html/2602.13086v1/x9.png)

The pursuit of a universally capable robotic manipulation framework, as demonstrated by UniManip, echoes a fundamental principle of logical construction. The system’s bi-level agentic operational graph strives for a provably correct sequence of actions, moving beyond mere empirical success. This resonates with Bertrand Russell’s assertion: “The point of the experiment is to distinguish between what is known and what is unknown.” UniManip, through its closed-loop reflection and hierarchical planning, doesn’t simply react to stimuli; it actively refines its understanding of the environment, solidifying its operational logic and minimizing the realm of the ‘unknown’ in complex manipulation tasks. The framework’s capacity for zero-shot generalization is, in essence, a testament to the power of a logically sound and adaptable operational structure.

What’s Next?

The presentation of UniManip, while a logical progression in agentic robotics, serves as a stark reminder: demonstrable function does not equate to fundamental understanding. The framework’s zero-shot capabilities, achieved through semantic operational graphs and closed-loop reflection, are undeniably impressive. However, the underlying assumptions regarding environmental predictability remain largely unaddressed. A robust system must not merely react to failure, but anticipate it – and that requires a formalization of uncertainty currently absent.

Future work would be well-served by shifting focus from purely empirical validation to provable guarantees. Optimization without analysis is self-deception, a trap for the unwary engineer. Demonstrating successful manipulation in a curated set of environments is insufficient. The true challenge lies in establishing the boundaries of this framework’s applicability: identifying the classes of problems where its agentic approach is demonstrably superior to more traditional, explicitly modeled solutions.

Ultimately, the field requires a more rigorous mathematical foundation for representing both the robot’s internal state and its perception of the external world. The current reliance on semantic grounding, while pragmatic, obscures the inherent ambiguities of physical interaction. A truly general-purpose robotics discipline will not simply build systems that appear intelligent; it will construct systems whose behavior is mathematically inevitable.

Original article: https://arxiv.org/pdf/2602.13086.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-16 20:51