Author: Denis Avetisyan

Researchers have developed a human-in-the-loop system that allows robots to intelligently request assistance when they encounter difficulties, improving task reliability and reducing human workload.

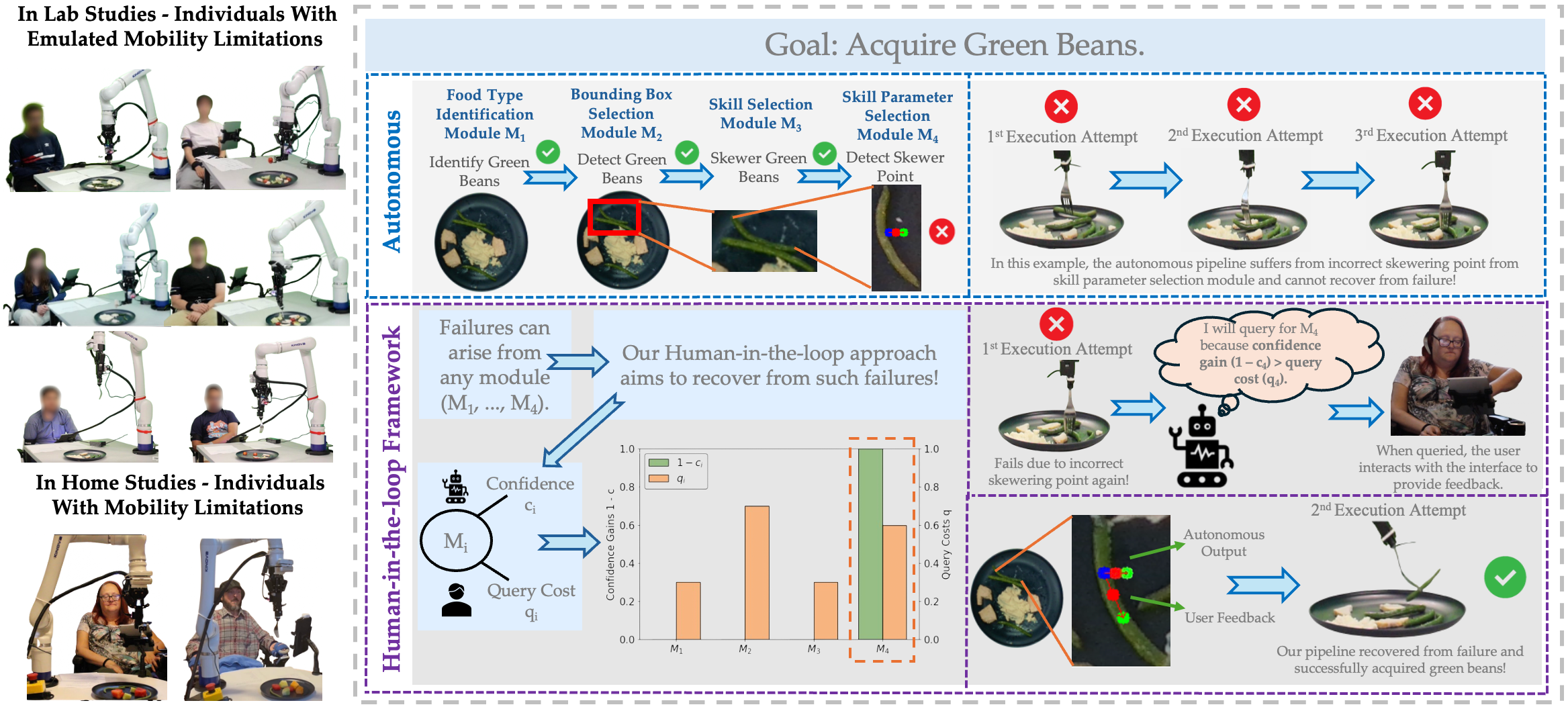

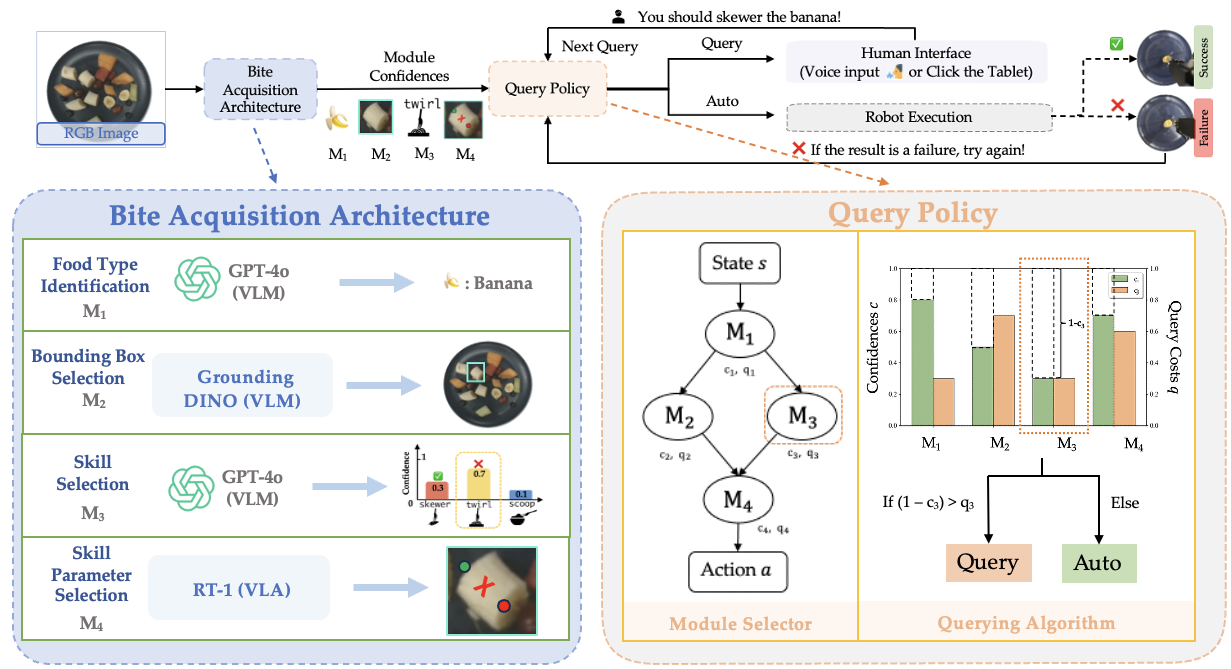

This work presents a confidence-aware failure recovery framework for modular robot policies, demonstrated through a bite acquisition task.

Despite advances in robotic autonomy, failures remain inevitable in unstructured environments, particularly during tasks that involve human collaboration. The paper ‘A Human-in-the-Loop Confidence-Aware Failure Recovery Framework for Modular Robot Policies’ introduces a system that optimizes when and how a robot asks humans for help. It does this by combining module-level uncertainty estimates with models of human workload. The framework dynamically selects which robot module needs human intervention and when to request it. In studies with individuals with mobility limitations on a bite acquisition task, it improved recovery success and reduced user effort. How can explicitly reasoning about both robot confidence and human capabilities lead to more efficient, user-centered collaborative robotic systems?

The Inevitable Failures of Predictable Systems

Conventional robotic systems, despite advances in engineering, often stall when faced with unforeseen circumstances and need human intervention to resume operation. The root cause is that most robots are programmed for predictable environments and tasks. They lack the adaptability to handle anomalies such as sensor malfunctions, unexpected obstacles, or deviations from pre-programmed routines. Even minor disruptions can therefore cascade into complete system failures that halt progress until someone corrects them manually. This persistent need for human oversight limits how far robotic deployments can scale. It also undermines the promise of true autonomy: robots that address challenges on their own and perform consistently without external support. Addressing this fragility is essential for expanding the role of robots in complex, real-world scenarios.

Truly autonomous robotic operation hinges not on flawless execution but on the capacity to recover gracefully from inevitable failures. Many current systems assume a predictable environment, yet real-world scenarios are full of uncertainty: unexpected obstacles, sensor noise, and component malfunctions. Robust failure recovery requires more than detecting an error. It calls for a layered approach that combines redundancy, self-diagnosis, and adaptable planning. Researchers are exploring meta-controllers that monitor performance and reconfigure robot behavior at runtime. They are also developing algorithms that let robots estimate the reliability of their own actions and mitigate potential problems before they occur. This proactive stance, which accounts for uncertainty up front, is essential for systems that can operate for long periods without constant human oversight.

Existing robotic systems are often brittle when a scenario diverges from their training data or pre-programmed parameters. Because they rely on precisely modeled environments and predictable events, small deviations can quickly degrade performance or cause outright failure. A misplaced object, unexpected lighting, or an unfamiliar surface texture is enough. Sophisticated algorithms can handle known uncertainties, but generalizing to genuinely new situations remains a significant hurdle. As a result, robots struggle to perform consistently across diverse real-world environments without recalibration or human oversight, which limits their potential for autonomous operation. This lack of adaptability motivates more robust, learning-based approaches. In these approaches, robots do not merely react to predefined contingencies but assess and respond to the unpredictability of complex environments.

A truly collaborative robot does not hand a task to its human partner at the first sign of difficulty. Instead, it assesses its own confidence in completing the task and requests help strategically. This requires a nuanced understanding of the robot’s limitations: not only what it cannot do, but how likely it is to succeed given the uncertainties involved. Researchers are therefore developing algorithms that let robots quantify this uncertainty. These algorithms weigh the cost of a potential failure against the disruption caused by requesting human intervention. The goal is a partnership in which the robot works autonomously as much as possible and seeks assistance only when its confidence falls below a critical threshold. This keeps the collaboration efficient and intuitive for the human.
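One way to make this trade-off concrete is an expected-cost rule: ask for help when the probability of failure times its cost exceeds the cost of interrupting the human. The sketch below is illustrative only. The function names and the cost parameters `failure_cost` and `query_cost` are assumptions, not the paper's formulation. It also shows how a "critical threshold" on confidence can follow from the two costs rather than being chosen by hand.

```python
def should_request_help(confidence: float, failure_cost: float, query_cost: float) -> bool:
    """Return True when asking a human is expected to be cheaper than acting alone.

    confidence   -- estimated probability (0..1) that the robot succeeds unaided
    failure_cost -- hypothetical cost of an unrecovered failure
    query_cost   -- hypothetical cost of interrupting the human
    """
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must lie in [0, 1]")
    # Expected cost of proceeding autonomously is P(failure) * cost of failure.
    expected_failure_cost = (1.0 - confidence) * failure_cost
    return expected_failure_cost > query_cost


def confidence_threshold(failure_cost: float, query_cost: float) -> float:
    """Confidence at which both options cost the same: solve (1 - c) * F = Q for c."""
    if failure_cost <= 0:
        raise ValueError("failure_cost must be positive")
    return max(0.0, 1.0 - query_cost / failure_cost)


# If a failure costs five times as much as a question, the robot asks below ~80% confidence.
print(confidence_threshold(failure_cost=10.0, query_cost=2.0))
print(should_request_help(0.7, failure_cost=10.0, query_cost=2.0))  # True
print(should_request_help(0.9, failure_cost=10.0, query_cost=2.0))  # False
```

The threshold rises as failures become more expensive relative to interruptions. That matches the intuition that a robot should ask more readily when mistakes are costly.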

A System Built on Self-Awareness

ConfidenceAwareReasoning, the core of this robotic framework, operates by assigning confidence scores to each module based on its operational history and internal state estimation. These scores, ranging from 0.0 to 1.0, quantitatively represent the module’s reliability in performing its designated task. During operation, the system continuously monitors these scores; modules with consistently low confidence are flagged, triggering either a request for human assistance or a shift in task allocation to more reliable modules. This dynamic assessment allows the robot to proactively mitigate potential failures, improving overall system robustness and ensuring safe operation even when individual components encounter uncertainty or limitations. Furthermore, confidence scores are used to weight the contributions of different modules during multi-module reasoning, prioritizing information from sources deemed more trustworthy.
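A minimal sketch of the bookkeeping described above, assuming each module reports a scalar confidence in [0.0, 1.0]. The class name `ConfidenceTracker`, the window length, and the 0.5 threshold are hypothetical. "Consistently low" is interpreted here as a low mean over the last few readings, and fusion is a plain confidence-weighted average.

```python
from collections import deque
from statistics import fmean


class ConfidenceTracker:
    """Hypothetical per-module confidence bookkeeping; names and defaults are illustrative."""

    def __init__(self, window: int = 5, threshold: float = 0.5):
        self.window = window          # how many recent scores define "consistently"
        self.threshold = threshold    # a mean confidence below this flags the module
        self.history = {}             # module name -> deque of recent scores

    def record(self, module: str, score: float) -> None:
        if not 0.0 <= score <= 1.0:
            raise ValueError("confidence scores must lie in [0.0, 1.0]")
        self.history.setdefault(module, deque(maxlen=self.window)).append(score)

    def latest(self, module: str) -> float:
        return self.history[module][-1]

    def flagged(self) -> list:
        """Modules whose mean confidence over a full window is below the threshold."""
        return sorted(
            name for name, scores in self.history.items()
            if len(scores) == self.window and fmean(scores) < self.threshold
        )

    def fuse(self, estimates: dict) -> float:
        """Confidence-weighted average of numeric outputs, using each module's latest score."""
        weights = {name: self.latest(name) for name in estimates}
        total = sum(weights.values())
        if total == 0:
            raise ValueError("no module reports any confidence")
        return sum(estimates[name] * w for name, w in weights.items()) / total


tracker = ConfidenceTracker(window=3)
for s in (0.9, 0.8, 0.85):
    tracker.record("camera_depth", s)
for s in (0.4, 0.3, 0.2):
    tracker.record("wrist_depth", s)
print(tracker.flagged())  # ['wrist_depth']
print(tracker.fuse({"camera_depth": 0.30, "wrist_depth": 0.50}))
```

Here the two hypothetical depth estimators disagree, and the fused value leans toward the more confident one.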

A ModularRobotSystem architecture is predicated on the decomposition of robotic functionality into independent, identifiable modules. This allows for targeted fault diagnosis and recovery; when a module reports low confidence or fails, the system can isolate the issue without requiring a complete shutdown or hindering operation of unaffected components. Error handling is thus localized, enabling continued performance with potentially degraded, but functional, capabilities. Furthermore, modularity facilitates independent testing, debugging, and future upgrades of individual components without impacting the entire system, reducing development time and increasing overall system robustness. The interfaces between modules are clearly defined, enabling dynamic reconfiguration and adaptation to changing task requirements or environmental conditions.
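The fault-isolation property can be sketched as a small dependency graph of modules. In this graph, a crash or a low-confidence output disables only the modules downstream of it. Everything here is hypothetical: the `ModularRobotSystem` class, the `(output, confidence)` module interface, and the example module names are illustrative, not the paper's code.

```python
from typing import Callable, Dict, List, Set, Tuple

# A module maps its dependencies' outputs to (output, confidence in [0, 1]).
Module = Callable[[Dict[str, object]], Tuple[object, float]]


class ModularRobotSystem:
    """Illustrative sketch: modules form a DAG and a fault only disables its own branch."""

    def __init__(self, min_confidence: float = 0.5):
        self.modules: Dict[str, Module] = {}
        self.deps: Dict[str, List[str]] = {}
        self.min_confidence = min_confidence

    def add(self, name: str, fn: Module, deps: Tuple[str, ...] = ()) -> None:
        # Requiring dependencies to be registered first keeps insertion order topological.
        missing = [d for d in deps if d not in self.modules]
        if missing:
            raise ValueError(f"unknown dependencies for {name}: {missing}")
        self.modules[name] = fn
        self.deps[name] = list(deps)

    def run(self) -> Tuple[Dict[str, object], Set[str]]:
        outputs: Dict[str, object] = {}
        failed: Set[str] = set()
        for name, fn in self.modules.items():  # dicts preserve insertion order
            if any(d in failed for d in self.deps[name]):
                failed.add(name)               # upstream fault: skip only this branch
                continue
            try:
                value, confidence = fn({d: outputs[d] for d in self.deps[name]})
            except Exception:
                failed.add(name)               # a crash is contained to this module
                continue
            if confidence < self.min_confidence:
                failed.add(name)               # low confidence treated as a local fault
                continue
            outputs[name] = value
        return outputs, failed


system = ModularRobotSystem()
system.add("perception", lambda _: ("carrot", 0.9))
system.add("skill", lambda inputs: ("skewer", 0.3), deps=("perception",))
system.add("motion", lambda inputs: ("trajectory", 0.95), deps=("skill",))
system.add("speech", lambda _: ("ready", 1.0))
outputs, failed = system.run()
print(outputs)         # {'perception': 'carrot', 'speech': 'ready'}
print(sorted(failed))  # ['motion', 'skill']
```

The low-confidence `skill` module takes `motion` down with it. The independent `speech` module keeps working, which is the localized degradation the paragraph describes.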

The ModuleSelector component evaluates the available robot modules by their relevance to the current task and their reported confidence levels. It uses a weighted scoring system that favors modules with both high confidence in their capabilities and a demonstrated ability to address the identified uncertainty. The selection also considers the type of assistance required (clarification, confirmation, or direct intervention). It also weighs the cognitive load each request would place on the human, as estimated by the WorkloadModel. By choosing the most appropriate module for each assistance request, the ModuleSelector minimizes unnecessary interruptions and makes human-robot collaboration more efficient.
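The weighted scoring can be sketched as a linear score. The weights, the per-assistance-type costs in `ASSISTANCE_COST`, and the idea of scaling that cost by the current workload are assumptions made for illustration. The article does not give the actual formula.

```python
from dataclasses import dataclass
from typing import List

# Hypothetical relative effort of each kind of assistance request.
ASSISTANCE_COST = {"confirmation": 0.1, "clarification": 0.2, "intervention": 0.6}


@dataclass
class Candidate:
    name: str
    relevance: float    # relevance to the current task and uncertainty, in [0, 1]
    confidence: float   # module's reported confidence, in [0, 1]
    assistance: str     # kind of help a request about this module would need


def select_module(candidates: List[Candidate], workload: float,
                  w_rel: float = 0.5, w_conf: float = 0.3, w_load: float = 0.2) -> Candidate:
    """Pick the candidate with the highest weighted score (all weights are illustrative)."""
    if not 0.0 <= workload <= 1.0:
        raise ValueError("workload must lie in [0, 1]")

    def score(c: Candidate) -> float:
        # Heavier requests are penalized more when the human is already busy.
        load_penalty = ASSISTANCE_COST[c.assistance] * workload
        return w_rel * c.relevance + w_conf * c.confidence - w_load * load_penalty

    return max(candidates, key=score)


options = [
    Candidate("food_detector", relevance=0.9, confidence=0.6, assistance="intervention"),
    Candidate("skill_selector", relevance=0.8, confidence=0.7, assistance="confirmation"),
]
print(select_module(options, workload=0.0).name)  # food_detector
print(select_module(options, workload=1.0).name)  # skill_selector
```

With these hypothetical numbers, a higher workload switches the choice from the intervention-heavy candidate to the one that needs only a confirmation.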

The system’s ability to request assistance from a human operator is governed by a WorkloadModel which dynamically estimates the cognitive load currently imposed on the human. This model considers factors such as ongoing tasks, recent requests, and time since the last interaction to predict the human’s availability and capacity for additional cognitive effort. The resulting workload score is then used to modulate the frequency and timing of assistance requests; higher scores result in fewer or delayed requests, while lower scores allow for more frequent interaction. This adaptive approach aims to minimize disruption to the human operator’s primary activities and maintain a sustainable level of human-robot collaboration.
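The sketch below is a toy `WorkloadModel`. Ongoing tasks add load directly. Each past request also adds load that decays exponentially with its age, which stands in for "time since the last interaction". The weights, the 60-second decay constant, the squashing into [0, 1), and the linear mapping to a request delay are all assumptions.

```python
import math
from typing import List


class WorkloadModel:
    """Toy workload estimator; every weight and constant here is an assumption."""

    def __init__(self, task_weight: float = 0.3, request_weight: float = 0.2, decay_s: float = 60.0):
        self.task_weight = task_weight        # load added per ongoing task
        self.request_weight = request_weight  # load added per fresh past request
        self.decay_s = decay_s                # seconds for a request's effect to fall by 1/e
        self.request_times: List[float] = []

    def note_request(self, t: float) -> None:
        self.request_times.append(t)

    def score(self, now: float, ongoing_tasks: int) -> float:
        # Older requests matter less: this encodes "time since the last interaction".
        recent = sum(math.exp(-(now - t) / self.decay_s) for t in self.request_times if t <= now)
        raw = self.task_weight * ongoing_tasks + self.request_weight * recent
        return 1.0 - math.exp(-raw)  # squash into [0, 1)

    def request_delay(self, now: float, ongoing_tasks: int, max_delay_s: float = 30.0) -> float:
        # Higher workload -> wait longer before the next assistance request.
        return max_delay_s * self.score(now, ongoing_tasks)


model = WorkloadModel()
model.note_request(0.0)
print(round(model.request_delay(now=1.0, ongoing_tasks=2), 1))    # busy: long delay
print(round(model.request_delay(now=600.0, ongoing_tasks=0), 1))  # idle: almost none
```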

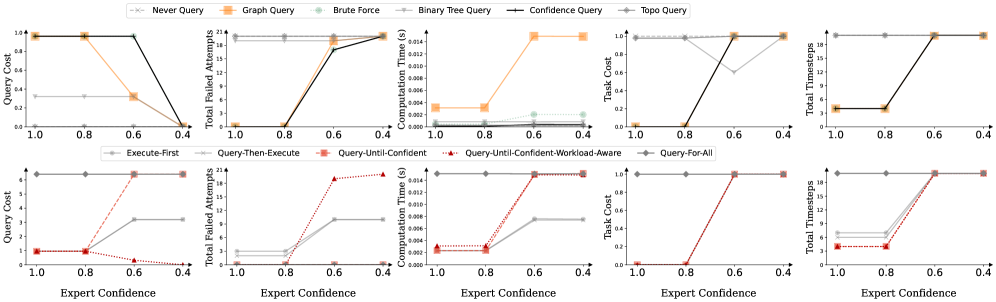

![Ablation studies demonstrate that BruteForce and GraphQuery module selectors exhibit the most robustness and scalability across varying graph redundancies, confidences, and query costs, while GraphQuery outperforms ConfidenceQuery when module confidences overlap and workload variance is high.](https://arxiv.org/html/2602.10289v1/x1.png)

Probing for Certainty: Algorithms for Adaptive Assistance

The ‘QueryUntilConfident’ algorithm is an iterative query mechanism that improves reliability by seeking human assistance until a specified confidence threshold is met. Whenever the system’s internal confidence in a task or decision falls below that threshold, it requests input. It then integrates the human-provided information and recalculates its confidence. The cycle repeats until confidence reaches or exceeds the threshold, at which point the system proceeds with the task autonomously. The confidence score is a weighted combination of factors that include internal state estimation and the reliability of previous human inputs.
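A sketch of the query loop follows. It assumes each human answer is a yes/no confirmation that is folded into confidence with a log-odds (Bayesian) update, weighted by the human's reliability. The update rule, the `max_queries` safety cap, and all parameter names are illustrative. The paper's exact confidence computation is not reproduced here.

```python
import math
from typing import Callable, Tuple


def _sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def query_until_confident(
    prior: float,
    ask_human: Callable[[], bool],
    human_reliability: float = 0.9,
    threshold: float = 0.95,
    max_queries: int = 5,
) -> Tuple[float, int]:
    """Ask repeatedly until confidence reaches `threshold` or the query cap is hit.

    Each answer (True = the human confirms the robot's hypothesis) is treated as
    evidence whose weight depends on `human_reliability`, combined in log-odds space.
    """
    if not 0.0 < prior < 1.0 or not 0.5 < human_reliability < 1.0:
        raise ValueError("prior must be in (0, 1) and human_reliability in (0.5, 1)")
    log_odds = math.log(prior / (1.0 - prior))
    step = math.log(human_reliability / (1.0 - human_reliability))
    queries = 0
    while _sigmoid(log_odds) < threshold and queries < max_queries:
        # A confirmation raises confidence; a rejection lowers it by the same amount.
        log_odds += step if ask_human() else -step
        queries += 1
    return _sigmoid(log_odds), queries


confidence, n = query_until_confident(0.5, ask_human=lambda: True)
print(n)  # 2
```

Starting from 0.5, one confirmation from a 90%-reliable partner gives 0.9, which is still below 0.95. A second confirmation gives about 0.988, so the loop stops after two queries.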

The ‘QueryUntilConfidentWorkloadAware’ algorithm extends ‘QueryUntilConfident’ with an assessment of the human operator’s current workload. This prevents excessive or poorly timed assistance requests and the cognitive overload they can cause. The algorithm adjusts the frequency and timing of queries based on factors such as recent task completion, concurrent activities, and estimated cognitive demand. The aim is a sustainable level of human-machine collaboration: the operator is not overburdened, while the system still receives the support it needs to reach the desired confidence in task completion.
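The workload-aware variant can be sketched by checking, before each query, whether one more request would push the estimated workload past a limit. If so, the loop stops and reports the request as deferred. The `workload_limit` and `query_cost` parameters and the additive workload model are assumptions. The confidence update is the same hypothetical log-odds rule as in a plain query-until-confident loop.

```python
import math
from typing import Callable, Tuple


def _sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def query_until_confident_workload_aware(
    prior: float,
    ask_human: Callable[[], bool],
    workload: float,
    human_reliability: float = 0.9,
    threshold: float = 0.95,
    workload_limit: float = 0.7,
    query_cost: float = 0.15,
) -> Tuple[float, int, bool]:
    """Query until confident, but defer once another request would overload the human.

    Returns (confidence, queries_made, deferred); deferred=True means the loop stopped
    because of workload rather than because the threshold was reached.
    """
    # A positive query_cost guarantees termination: workload grows with every query.
    if not 0.0 < prior < 1.0 or not 0.5 < human_reliability < 1.0 or query_cost <= 0.0:
        raise ValueError("invalid prior, human_reliability, or query_cost")
    log_odds = math.log(prior / (1.0 - prior))
    step = math.log(human_reliability / (1.0 - human_reliability))
    queries = 0
    while _sigmoid(log_odds) < threshold:
        if workload + query_cost > workload_limit:
            return _sigmoid(log_odds), queries, True  # defer instead of overloading
        log_odds += step if ask_human() else -step
        workload += query_cost                        # each request adds to the load
        queries += 1
    return _sigmoid(log_odds), queries, False


print(query_until_confident_workload_aware(0.5, lambda: True, workload=0.1))
print(query_until_confident_workload_aware(0.5, lambda: True, workload=0.6))
```

The lightly loaded operator is asked twice and the robot becomes confident. The heavily loaded operator is not asked at all, and the request is deferred.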

The ‘ModuleSelector’ employs ‘GraphQuery’ as a mechanism for identifying relevant software modules by analyzing dependency relationships and associated confidence scores. ‘GraphQuery’ operates on a knowledge graph representing module interactions, allowing the system to traverse connections and assess the reliability of each module based on previously recorded performance data. Modules are ranked according to a combined score derived from dependency weight and confidence level; this prioritized list is then used to select the most appropriate module for a given task, optimizing both efficiency and the probability of successful completion. The system dynamically updates confidence scores based on runtime performance, refining module selection over time.
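A sketch of the ranking described above follows, assuming the module graph is stored as weighted dependency edges with weights in (0, 1]. A module's dependency weight is taken as the strongest product of edge weights on any path from the starting module. The combined score is that weight times the module's confidence. Runtime outcomes update confidence with an exponential moving average. These choices, the `GraphQuery` class shape, and the module names are hypothetical.

```python
from collections import deque
from typing import Dict, List


class GraphQuery:
    """Hypothetical ranking over a module graph; edges point from a module to its dependencies."""

    def __init__(self, edges: Dict[str, Dict[str, float]], confidence: Dict[str, float], alpha: float = 0.2):
        for deps in edges.values():
            if any(not 0.0 < w <= 1.0 for w in deps.values()):
                raise ValueError("edge weights must lie in (0, 1]")
        self.edges = edges
        self.confidence = dict(confidence)
        self.alpha = alpha  # learning rate for runtime confidence updates

    def dependency_weights(self, start: str) -> Dict[str, float]:
        """Strongest path weight (product of edge weights) from `start` to each reachable module."""
        best = {start: 1.0}
        queue = deque([start])
        while queue:
            node = queue.popleft()
            for dep, w in self.edges.get(node, {}).items():
                candidate = best[node] * w
                if candidate > best.get(dep, 0.0):  # re-expand only on strict improvement
                    best[dep] = candidate
                    queue.append(dep)
        return best

    def rank(self, start: str) -> List[str]:
        """Modules ordered by dependency weight times confidence, highest first."""
        weights = self.dependency_weights(start)
        return sorted(weights, key=lambda m: weights[m] * self.confidence.get(m, 0.0), reverse=True)

    def update(self, module: str, succeeded: bool) -> None:
        """Exponential moving average of observed runtime success."""
        old = self.confidence.get(module, 0.5)
        self.confidence[module] = (1 - self.alpha) * old + self.alpha * (1.0 if succeeded else 0.0)


gq = GraphQuery(
    edges={"task": {"skill": 0.9, "perception": 0.5}, "skill": {"perception": 0.8}},
    confidence={"task": 0.3, "skill": 0.6, "perception": 0.9},
)
print(gq.rank("task"))  # ['perception', 'skill', 'task']
gq.update("perception", succeeded=False)
print(gq.rank("task"))  # ['skill', 'perception', 'task']
```

Because edge weights never exceed 1, going around a cycle cannot improve a path, so the traversal terminates. A single runtime failure is enough to reorder the ranking, illustrating how selection is refined over time.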

The efficacy of the querying and assistance algorithms, including ‘QueryUntilConfident’ and its workload-aware variant, was assessed on the ‘BiteAcquisitionTask’. This is a robot-assisted feeding scenario in which the robot must pick up bite-sized pieces of food for the user while remaining robust to potential failures. Validation involved both controlled in-lab studies and in-home user studies, including studies with individuals with mobility limitations. Together these covered varying environmental conditions and levels of user expertise. Data from these studies showed that the algorithms made effective use of human assistance and recovered from errors during the task, supporting their practical applicability.

![Performance comparisons reveal that both the Query-Until-Confident-Workload-Aware algorithm and the GraphQuery module selector exhibit consistent results across varying numbers of modules N within the module graph G_M, as demonstrated by median, upper, and lower quartile values from 100 trials (mean for Task Cost).](https://arxiv.org/html/2602.10289v1/x3.png)

Knowledge as a Shield: Bolstering Reliability Through Integration

To enhance the precision and adaptability of core functionalities, the system integrates Retrieval-augmented Generation, or RAG, directly into both the FoodTypeDetectionModule and the SkillSelectionModule. This approach moves beyond static programming by allowing these modules to dynamically access and incorporate relevant information from a knowledge base during operation. Rather than relying solely on pre-existing training data, RAG enables the system to retrieve pertinent examples or contextual details – effectively “looking up” information as needed – and use this retrieved knowledge to inform its decision-making process. This results in significantly improved accuracy, particularly when encountering unfamiliar food types or skill requests, and builds a more robust system capable of gracefully handling unexpected inputs or failures.
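To illustrate the retrieval half of RAG, here is a toy version. It ranks hypothetical knowledge-base entries by word-overlap (Jaccard) similarity and packs the best matches into a prompt. A real system would likely use learned embeddings and a language or vision-language model for the generation step. The entries, skill labels, and prompt format below are all assumptions.

```python
import re

# Hypothetical knowledge base of previously seen food items and the skill that worked.
KNOWLEDGE_BASE = [
    {"description": "small round soft fruit", "food_type": "grape", "skill": "skewer"},
    {"description": "thin flat noodle strands in sauce", "food_type": "noodles", "skill": "twirl"},
    {"description": "loose grains of cooked rice", "food_type": "rice", "skill": "scoop"},
]


def _tokens(text: str) -> set:
    return set(re.findall(r"[a-z]+", text.lower()))


def retrieve(query: str, kb: list, k: int = 2) -> list:
    """Return the k entries whose descriptions overlap most with the query (Jaccard)."""
    q = _tokens(query)

    def similarity(entry: dict) -> float:
        d = _tokens(entry["description"])
        union = q | d
        return len(q & d) / len(union) if union else 0.0

    return sorted(kb, key=similarity, reverse=True)[:k]


def build_prompt(query: str, kb: list, k: int = 2) -> str:
    """Augment the query with retrieved examples; a generator model would consume this."""
    examples = retrieve(query, kb, k)
    context = "\n".join(
        f"- {e['description']}: type={e['food_type']}, skill={e['skill']}" for e in examples
    )
    return f"Similar past items:\n{context}\nNew item: {query}\nPredict type and skill:"


print(build_prompt("a spoonful of cooked rice grains", KNOWLEDGE_BASE, k=1))
```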

The system’s modules demonstrate enhanced resilience through the integration of previously encountered data and learned patterns. This allows them to extrapolate beyond the confines of their initial training, effectively navigating unfamiliar scenarios and mitigating the impact of unforeseen errors. By referencing a repository of past experiences, the modules don’t simply rely on rote memorization; instead, they construct generalized understandings that facilitate adaptive responses to novel inputs. This knowledge integration proves particularly valuable when faced with ambiguous or incomplete information, enabling the system to leverage contextual clues and probabilistic reasoning to arrive at robust and reliable conclusions, even in the face of uncertainty.

Rather than simply assigning a probability to a decision, the system employs a ‘ConfidenceInterval’ to articulate the reliability of its outputs. This approach moves beyond a single point estimate, instead providing a range within which the true value is likely to fall. This nuanced assessment acknowledges inherent uncertainty and allows for a more informed evaluation of the system’s performance; a wider interval indicates greater ambiguity, while a narrower one suggests stronger conviction. Consequently, downstream processes can leverage this interval to prioritize interventions, request human oversight when confidence is low, and ultimately operate with a more realistic understanding of the system’s capabilities – fostering trust through transparency regarding its own limitations.
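The article does not say how the interval is computed. One standard option is the Wilson score interval for a binomial success rate, which shows how the same point estimate can carry very different certainty. For example, 9 successes out of 10 and 90 out of 100 both give 0.9, but the first interval is much wider. The oversight rule (request help when the lower bound falls below a cutoff) and its 0.8 default are assumptions.

```python
import math
from typing import Tuple


def wilson_interval(successes: int, trials: int, z: float = 1.96) -> Tuple[float, float]:
    """Wilson score interval for a success rate (z = 1.96 gives roughly 95% coverage)."""
    if trials <= 0 or not 0 <= successes <= trials:
        raise ValueError("need 0 <= successes <= trials and trials > 0")
    p = successes / trials
    z2 = z * z
    denom = 1.0 + z2 / trials
    center = (p + z2 / (2 * trials)) / denom
    half = z * math.sqrt(p * (1 - p) / trials + z2 / (4 * trials * trials)) / denom
    return max(0.0, center - half), min(1.0, center + half)


def needs_oversight(successes: int, trials: int, min_lower_bound: float = 0.8) -> bool:
    """Ask for help when even the pessimistic end of the interval is too low."""
    low, _ = wilson_interval(successes, trials)
    return low < min_lower_bound


for s, n in [(9, 10), (90, 100)]:
    low, high = wilson_interval(s, n)
    print(f"{s}/{n}: [{low:.3f}, {high:.3f}] oversight={needs_oversight(s, n)}")
```

The 9/10 case has a lower bound near 0.60 and triggers oversight. The 90/100 case has a lower bound of about 0.83 and does not, even though both point estimates are 0.9.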

The system’s efficacy is fundamentally linked to what is termed ‘ExpertConfidence’, a metric that quantifies the reliability placed on feedback provided by a human partner. This isn’t merely about acknowledging human input; it’s an active assessment of the quality of that input, factoring in the partner’s demonstrated accuracy and consistency in previous interactions. A higher ExpertConfidence weighting allows the system to more readily incorporate human corrections, accelerating learning and adaptation, while lower confidence triggers increased internal validation and cautious integration of suggestions. Essentially, the system learns to ‘trust’ its human partner – and that trust, calibrated through performance, becomes a crucial determinant of overall success, especially when navigating ambiguous or unforeseen circumstances.
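One simple way to calibrate trust in a human partner is a Beta-Bernoulli estimate of how often their feedback turned out to be correct. That estimate then serves as the weight on their corrections. This is a swapped-in illustration: the class, the uniform Beta(1, 1) prior, the validation threshold, and the linear blend are assumptions, not the paper's definition of ExpertConfidence.

```python
from dataclasses import dataclass


@dataclass
class ExpertConfidence:
    """Beta-Bernoulli estimate of how reliable a human partner's feedback is."""
    correct: float = 1.0     # prior pseudo-count of correct feedback (uniform prior)
    incorrect: float = 1.0   # prior pseudo-count of incorrect feedback

    def observe(self, feedback_was_correct: bool) -> None:
        if feedback_was_correct:
            self.correct += 1
        else:
            self.incorrect += 1

    @property
    def value(self) -> float:
        # Posterior mean of the Beta distribution.
        return self.correct / (self.correct + self.incorrect)

    def needs_validation(self, threshold: float = 0.7) -> bool:
        # Low trust: double-check a correction before acting on it.
        return self.value < threshold

    def blend(self, robot_estimate: float, human_estimate: float) -> float:
        # Higher trust shifts the result toward the human's correction.
        w = self.value
        return w * human_estimate + (1.0 - w) * robot_estimate


partner = ExpertConfidence()
print(partner.needs_validation())  # True: no track record yet
for _ in range(8):
    partner.observe(True)
print(partner.value)
print(partner.blend(robot_estimate=0.0, human_estimate=1.0))
```

After eight correct corrections, trust rises from 0.5 to 0.9. Validation is no longer required, and the partner's input dominates the blend.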

The pursuit of robust robotic systems, as this failure-recovery framework shows, inevitably exposes the limits of predefined architectures. When the system meets an unexpected scenario during bite acquisition, it does not simply fail. It enters a state that calls for human intervention, a predictable consequence of complexity. Ada Lovelace observed, “The Analytical Engine has no pretensions whatever to originate anything. It can do whatever we know how to order it to perform.” The remark highlights how much such systems depend on human foresight, and how inevitable the unforeseen is. Confidence estimation and workload modeling are not about preventing failure. They are about accepting that failure will emerge and distributing the burden of response intelligently, a testament to the ecosystemic nature of these systems.

What’s Next?

This work, predictably, does not solve failure. It merely postpones the inevitable, shifting the burden from wholly autonomous systems to a shared human-machine responsibility. The framework presented is, at its heart, a negotiation with uncertainty, a carefully constructed illusion of control. The true challenge lies not in refining confidence estimation but in acknowledging its inherent limitations. Every sensor reading is a lie told with varying degrees of precision; every policy, a temporary truce with the chaos of the physical world.

Future iterations will undoubtedly focus on scaling these approaches to more complex tasks and environments. Yet a more profound question remains: how does one design for graceful degradation? The goal is not simply to minimize downtime but to treat the inevitability of failure as an opportunity for learning and adaptation. There are no best practices, only survivors. The system will not be judged by its successes, but by the elegance of its failures.

The pursuit of ‘human-in-the-loop’ should not be mistaken for a quest for perfect symbiosis. It is, instead, a recognition that architecture is how one postpones chaos. Order is just cache between two outages. The next generation of robotic systems will not be defined by their intelligence but by their humility: their ability to yield to the unpredictable currents of reality and to learn from the wreckage.

Original article: https://arxiv.org/pdf/2602.10289.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/


2026-02-12 22:40