Author: Denis Avetisyan

Researchers have developed a new framework that allows humanoid robots to seamlessly combine movement and manipulation, even on challenging and unpredictable terrain.

PILOT, a perceptive integrated low-level controller, uses reinforcement learning and attention mechanisms to achieve robust whole-body control for loco-manipulation tasks.

Achieving stable and adaptable whole-body control remains a key challenge for humanoid robots operating in real-world environments. This paper introduces ‘PILOT: A Perceptive Integrated Low-level Controller for Loco-manipulation over Unstructured Scenes’, a unified reinforcement learning framework designed to synergize perceptive locomotion with expansive whole-body control. By fusing proprioceptive and attention-based perceptive representations within a Mixture-of-Experts policy, PILOT demonstrates superior stability, precision, and terrain traversability in both simulation and on a physical Unitree G1 robot. Could this framework serve as a foundational low-level controller, enabling truly robust and versatile loco-manipulation capabilities for humanoid robots in complex, unstructured scenes?

The Challenge of Embodied Intelligence

Conventional robotic systems frequently encounter difficulties when operating beyond the confines of carefully controlled laboratories, largely due to the unpredictable nature of real-world environments. These systems, often reliant on precise pre-programmed instructions and static maps, struggle with unexpected obstacles, shifting terrains, and the constant flux of dynamic scenes. Consequently, a significant challenge lies in developing robots capable of robust adaptation – the ability to perceive changes, replan actions, and maintain stability in the face of uncertainty. This necessitates advancements in sensing technologies, algorithms for real-time environmental analysis, and control systems that can effectively manage the inherent complexities of unstructured and ever-changing surroundings, pushing the boundaries of robotic autonomy beyond pre-defined parameters.

The pursuit of genuine loco-manipulation – the seamless coordination of movement and physical interaction with the environment – faces significant hurdles in both state estimation and control systems. Robots operating in the real world must accurately perceive their own state – position, velocity, joint angles – and the state of surrounding objects, a process complicated by sensor noise and unpredictable dynamics. Simultaneously, control algorithms must translate these estimations into precise, stable actions, accounting for the robot’s morphology and the often-unstructured nature of its surroundings. Current limitations in these areas frequently manifest as jerky movements, instability when encountering unexpected obstacles, and an inability to perform delicate or complex manipulations. Overcoming these challenges necessitates advancements in sensor fusion, robust filtering techniques, and the development of control strategies capable of adapting to uncertainty and maintaining stability in dynamic, real-world scenarios.

The persistent difficulty in deploying robots effectively stems from their struggle with real-world complexity. Current methodologies, reliant on precisely mapped environments and predictable interactions, falter when confronted with the inherent disorder of unstructured spaces. This leads to systemic instability – a robot attempting a task in a dynamic environment may exhibit jerky movements, frequent corrections, or outright failure. Moreover, inefficiency arises as robots expend unnecessary energy and time compensating for unforeseen obstacles or variations. The core issue isn’t a lack of processing power, but rather the limitations of algorithms designed for idealized scenarios, unable to robustly interpret sensory data and adapt control strategies to the constant flux of the physical world. Addressing this requires a paradigm shift towards systems capable of learning and generalizing from experience, effectively bridging the gap between simulated perfection and the messy reality of operation.

PILOT: A Framework for Perceptive Control

PILOT unifies reinforcement learning (RL) with perceptive locomotion and whole-body control by establishing a single framework for these traditionally disparate robotic capabilities. This integration allows the robot to learn complex behaviors-such as navigating challenging terrain or manipulating objects-through RL while simultaneously leveraging perceptual inputs to enhance robustness and adaptability. Specifically, PILOT moves beyond traditional control methods by enabling the robot to actively perceive its environment and react accordingly, rather than relying solely on pre-programmed actions or pre-defined state estimations. The system facilitates the training of policies that directly map perceptual observations to control commands for both locomotion and full-body manipulation, creating a cohesive and adaptable robotic system.

Hierarchical reinforcement learning within PILOT facilitates the breakdown of complex locomotion tasks into a series of sub-goals and primitive actions. This decomposition is achieved through a multi-level structure where a high-level policy selects abstract goals, and low-level policies execute the necessary motor commands to achieve them. This approach significantly reduces the dimensionality of the action space, allowing for more efficient learning and improved generalization to novel situations. By learning reusable skills at each level of the hierarchy, the robot can adapt to variations in environment and task requirements without retraining the entire system, ultimately increasing robustness and efficiency in perceptive control.

The Cross-Modal Context Encoder (CMCE) within PILOT facilitates improved state estimation by fusing proprioceptive data – information regarding the robot’s internal state like joint angles and velocities – with perceptive data derived from external sensors such as cameras and LiDAR. This fusion is achieved through a learned, multimodal representation, allowing the system to infer a more accurate and complete understanding of the robot’s state and its surrounding environment. By combining these data sources, the CMCE mitigates the limitations of relying solely on either proprioceptive or perceptive information, particularly in scenarios with sensor noise, occlusions, or dynamic environments. The resulting state estimate then informs the robot’s control and locomotion policies, enhancing robustness and performance.

Bridging Perception and Action

The Attention-based Multi-Scale Perception Encoder improves robotic terrain traversal stability by selectively focusing on pertinent environmental features. This encoder processes sensory input to prioritize information crucial for stable locomotion, resulting in a measured stumble rate of 0.006. Comparative testing demonstrates a significant improvement over systems lacking this attention mechanism, which exhibited a stumble rate of 0.066 under identical conditions. The reduction in stumbles indicates enhanced robustness and reliability in challenging terrains.

The system utilizes LiDAR-derived elevation maps as a primary data source for environmental perception. These maps provide a detailed, height-field representation of the surrounding terrain, enabling the robot to assess traversability and identify potential obstacles. Specifically, the LiDAR data is processed to generate a grid of elevation values, allowing the system to calculate slope, roughness, and height discontinuities. This information is then used to plan locomotion strategies and avoid unstable or impassable areas, directly informing the action selection process and improving navigational performance. The resolution of the elevation map is dynamically adjusted based on robot speed and sensor range to balance computational efficiency with accuracy.

The system utilizes a Prediction-based Proprioceptive Encoder to estimate future robot states, specifically body configuration and velocity, based on current and past actions. This encoder processes proprioceptive data – information about joint angles, velocities, and forces – and generates predictions used to inform subsequent control decisions. By anticipating future states, the system proactively adjusts its actions to minimize deviations from the desired trajectory, resulting in demonstrably smoother and more efficient locomotion. The predicted states are integrated into the control loop, allowing the robot to preemptively correct for expected disturbances and maintain stability during complex terrain traversal.

Residual Action Parameterization (RAP) optimizes the learning process by decoupling the desired motion from corrective adjustments. Instead of directly learning an absolute action, the system learns a residual – the difference between the desired action and the current state. This approach reduces the complexity of the learning task, as the system focuses solely on refining movements rather than generating them from scratch. Consequently, RAP accelerates adaptation to new terrains and dynamic conditions by allowing the system to quickly learn and apply corrective actions, leading to more efficient and robust locomotion.

Robustness and Adaptability in Action

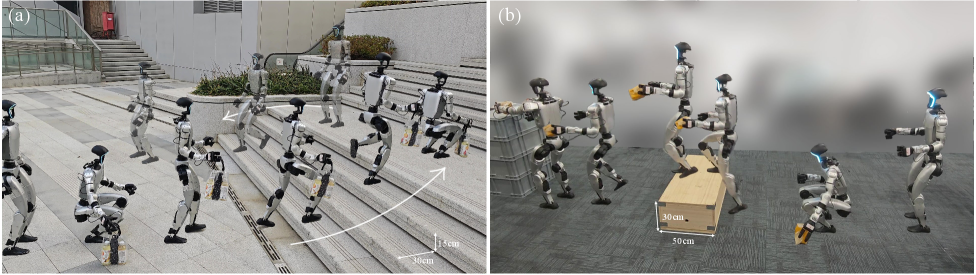

Recent advancements in robotics have yielded a system capable of navigating complex, real-world terrains while reliably manipulating objects. This capability stems from a refined approach to whole-body coordination, allowing the robot to maintain stability and efficiency even in unstructured environments. Testing demonstrated a perfect success rate – 100% – in transporting objects through challenging scenarios, specifically stair traversal and navigating high platforms, as evidenced by successful completion of all five trials for each task. This achievement signifies a substantial leap toward robots operating autonomously and dependably in dynamic, everyday settings, moving beyond controlled laboratory conditions and into the complexities of the outside world.

A critical component of the system’s stability lies in its real-time assessment of terrain interaction, achieved through the implementation of a Foot Stumble Metric. This metric doesn’t simply detect falls, but proactively evaluates the potential for instability based on foot placement and ground contact. By continuously monitoring factors like ground slope, surface texture, and the robot’s center of mass, the system anticipates precarious situations before they escalate. This predictive capability allows for immediate adjustments to gait and balance, maximizing safety and enabling robust locomotion even across challenging and unpredictable surfaces. The result is a platform capable of navigating uneven terrain with a degree of confidence previously unattainable, significantly reducing the risk of stumbles and maintaining efficient movement.

The system’s adaptability stems from a sophisticated Mixture-of-Experts (MoE) architecture, a neural network configuration allowing it to dynamically select and combine specialized sub-networks – or “experts” – for optimal performance across diverse terrains and tasks. This approach moves beyond a single, monolithic policy, enabling the robot to learn and execute complex maneuvers with greater precision. Evaluations demonstrate a command tracking error of just 0.206 when utilizing the unified MoE policy, signifying a substantial improvement in the robot’s ability to accurately follow intended movements and maintain stability even in challenging, unstructured environments. The MoE framework essentially provides the robot with a toolkit of behavioral strategies, intelligently applied to navigate obstacles and achieve its objectives with remarkable efficiency.

Virtual Reality (VR) teleoperation serves as a critical bridge between autonomous robotic function and human intuition, allowing for nuanced control and safe exploration of complex environments. This system enables a human operator to directly inhabit the robot’s perspective, providing real-time oversight and the ability to intervene when necessary – particularly valuable during unforeseen circumstances or when navigating highly unstructured terrain. Beyond immediate control, the VR interface facilitates a process of ‘learning from demonstration’, where human actions guide the refinement of the robot’s autonomous policies. This iterative process significantly accelerates the robot’s adaptation to new challenges and ensures consistently stable and efficient performance, going beyond pre-programmed responses to encompass dynamic, real-world scenarios.

The presented framework, PILOT, seeks to establish a cohesive system for loco-manipulation, recognizing that a robot’s ability to navigate unstructured environments hinges on the interplay between perception and control. This echoes Andrey Kolmogorov’s sentiment: “The most important thing in science is not to be afraid of new ideas.” PILOT doesn’t shy away from integrating attention mechanisms and a Mixture of Experts (MoE) to address the complexities of real-world terrains. The system’s strength lies in its holistic approach; it understands that weaknesses arise where these integrated components fail to communicate effectively. Consequently, PILOT’s design prioritizes seamless interaction, anticipating potential boundaries and striving for a robust, adaptable whole.

Future Directions

The pursuit of robust loco-manipulation, as exemplified by PILOT, inevitably exposes the brittle core of current control architectures. While attention mechanisms and Mixture of Experts offer a path toward managing complexity, the framework still relies on learned policies operating within a defined, though adaptable, state space. The true challenge isn’t scaling perception, but accepting its inherent incompleteness. A system attempting to model ‘unstructured scenes’ will always be chasing an asymptote; the cost of fidelity increases exponentially as the environment’s degrees of freedom expand.

Consequently, future work must confront the limitations of centralized control. Shifting toward decentralized, reactive systems – architectures where behavior emerges from local interactions rather than global planning – presents a compelling, if daunting, alternative. This isn’t simply a matter of algorithmic elegance; it’s an acknowledgement that prediction fails, and resilience demands embracing uncertainty. The emphasis should be on minimizing the consequences of misperception, not eliminating it.

Ultimately, the field will be judged not by the sophistication of its models, but by the simplicity of its failures. A truly scalable system won’t be defined by what it can do, but by what it gracefully doesn’t attempt. The dependencies inherent in any complex robotic system – on perception, on learning, on the very definition of ‘success’ – represent the true cost of ambition.

Original article: https://arxiv.org/pdf/2601.17440.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

See also:

- MLBB x KOF Encore 2026: List of bingo patterns

- Overwatch Domina counters

- Honkai: Star Rail Version 4.0 Phase One Character Banners: Who should you pull

- eFootball 2026 Starter Set Gabriel Batistuta pack review

- Brawl Stars Brawlentines Community Event: Brawler Dates, Community goals, Voting, Rewards, and more

- Lana Del Rey and swamp-guide husband Jeremy Dufrene are mobbed by fans as they leave their New York hotel after Fashion Week appearance

- Gold Rate Forecast

- Breaking Down the Ending of the Ice Skating Romance Drama Finding Her Edge

- ‘Reacher’s Pile of Source Material Presents a Strange Problem

- Top 10 Super Bowl Commercials of 2026: Ranked and Reviewed

2026-01-27 14:13