Author: Denis Avetisyan

A new approach enables robots to infer complex tasks by observing just a few demonstrations, focusing on how objects relate and change during execution.

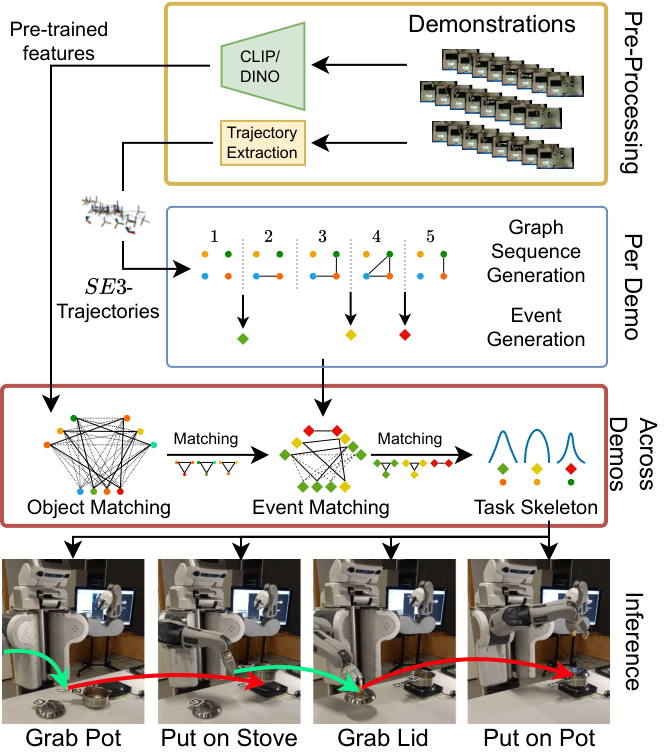

This work introduces SparTa, a method for learning sparse graphical task models from a handful of demonstrations by representing tasks as changing object relationships and matching events across examples.

Efficiently learning long-horizon manipulation tasks remains a key challenge in robot learning, yet current approaches often focus on how a task is performed rather than what the robot should achieve. This work, ‘SparTa: Sparse Graphical Task Models from a Handful of Demonstrations’, introduces a method for inferring task goals by representing evolving scenes as manipulation graphs that capture object relationships and their changes over time. By pooling demonstrations and matching object states, SparTa constructs probabilistic task models from limited data, enabling robust performance across environments. Could this sparse graphical representation unlock more adaptable and generalizable robotic manipulation skills?

Deconstructing Manipulation: Towards Relational Understanding

Traditional approaches to robotic manipulation frequently decompose complex actions into a linear series of motor commands, effectively treating the task as a trajectory through joint space. This methodology overlooks a fundamental aspect of manipulation: the intricate relationships between objects and how those relationships evolve throughout the process. A robot executing a task like assembling a chair isn’t simply moving its arm; it’s managing a dynamic network of connections – aligning a leg with a seat, securing it with a screw, and maintaining stability throughout. By focusing solely on the ‘how’ of movement, rather than the ‘what’ and ‘why’ of object interactions, current systems struggle with adaptability; a slight change in object pose or environment can disrupt the pre-programmed sequence, highlighting the need for a more relational understanding of manipulation tasks.

Effective robotic manipulation hinges not simply on executing a series of movements, but on comprehending the dynamic relationships between objects throughout a task. A robot that understands how objects connect – whether through physical contact, containment, or functional dependency – and anticipates how those connections will evolve is far better equipped to generalize its skills to novel situations. This relational understanding allows a system to abstract away from specific object instances or precise trajectories, focusing instead on the underlying principles governing the manipulation process. Consequently, learning becomes more efficient, requiring fewer examples for a robot to master a task and adapt it to variations in environment or object properties; a grasp applied to one mug can be readily transferred to another, or a stacking action applied to cubes can be extended to blocks, demonstrating a flexibility absent in systems solely focused on rote motor control.

Current approaches to robotic manipulation often falter when faced with even slight variations in task parameters or object configurations because they struggle to capture the dynamic relationships between objects. These methods typically focus on individual object movements rather than the overarching relational changes occurring during a manipulation sequence – how objects connect, separate, or transform relative to one another. Consequently, robots find it difficult to abstract the core principles of a task, hindering their ability to generalize learned skills to novel scenarios. The inability to represent these relational dynamics limits the development of truly adaptable robots, as they remain reliant on precisely programmed sequences instead of understanding the underlying structure of the manipulation itself and applying that understanding to new, unforeseen circumstances.

Building the Relational Backbone: From Observation to Graph

The system constructs a ‘Manipulation Graph’ by analyzing demonstrations of robotic manipulation tasks. This graph represents the connectivity between objects within the demonstrated scenarios, effectively mapping relationships observed during task execution. Each node in the graph corresponds to an object, and edges denote observed interactions or physical connections between those objects as performed in the demonstrations. The graph’s structure is derived from analyzing sequences of object manipulations, capturing how objects are grasped, moved relative to each other, and released during the tasks. This allows the system to build a knowledge base of object relationships based on observed physical interactions, facilitating more robust planning and generalization to novel scenarios.
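The node-and-edge structure described above can be sketched in a few lines. This is only an illustration under simple assumptions: objects are identified by string names, edges are undirected and unweighted, and the class name `ManipulationGraph` and its methods are hypothetical rather than taken from the paper.

```python
from collections import defaultdict


class ManipulationGraph:
    """Undirected graph: nodes are objects, edges are observed interactions."""

    def __init__(self):
        self.adjacency = defaultdict(set)

    def add_object(self, name):
        # Accessing the key registers the object as an isolated node.
        self.adjacency[name]

    def connect(self, a, b):
        self.adjacency[a].add(b)
        self.adjacency[b].add(a)

    def disconnect(self, a, b):
        self.adjacency[a].discard(b)
        self.adjacency[b].discard(a)

    def components(self):
        """Return the groups of objects that are currently linked together."""
        seen, groups = set(), set()
        for start in list(self.adjacency):
            if start in seen:
                continue
            stack, group = [start], set()
            while stack:
                node = stack.pop()
                if node in group:
                    continue
                group.add(node)
                stack.extend(self.adjacency[node] - group)
            seen |= group
            groups.add(frozenset(group))
        return groups


# Toy pick-up: the mug rests on the table, is grasped, then lifted off.
g = ManipulationGraph()
for name in ("gripper", "mug", "table"):
    g.add_object(name)
g.connect("mug", "table")
g.connect("gripper", "mug")
g.disconnect("mug", "table")
print(g.components())
```

After the lift, the gripper and mug form one connected group and the table stands alone, which is the kind of relational state the rest of the pipeline reasons about.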

Object Matching establishes correspondences between objects observed in different demonstrations, creating a consolidated representation of the environment. This process compares features extracted from each object and scores each candidate pair with an Object Feature Similarity Score of [latex]\log(f_n^{\top} f_m)[/latex]. Here [latex]f_n[/latex] and [latex]f_m[/latex] are most naturally read as the feature vectors of the two objects being compared, so that [latex]f_n^{\top} f_m[/latex] is their inner product and the logarithm places the score on a log scale. Higher scores indicate a stronger likelihood that two observed objects represent the same physical entity across demonstrations, allowing for the construction of a unified object representation.

Feature Extraction provides the data necessary for object association by generating both visual and named features for each object observed in demonstration data. Visual features are derived from image analysis, encompassing characteristics like color histograms, edge orientations, and shape descriptors. Named features, where available, utilize object labels or textual descriptions. These features are then utilized by the Cross-Demo Association process, which systematically compares feature vectors across multiple demonstrations to identify corresponding objects, even when variations in viewpoint, lighting, or partial occlusion exist. The resulting feature vectors enable quantitative comparisons and contribute to the Object Feature Similarity Score, [latex]\log(f_n^{\top} f_m)[/latex], used to determine the strength of the association between objects across demonstrations.
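To make the scoring concrete, here is a rough sketch of cross-demonstration matching. It assumes the score is the log of an inner product between unit-normalized, non-negative feature vectors, and it uses a simple greedy pairing; the paper's actual association procedure may differ. The function names and the toy feature values are invented for illustration.

```python
import math


def normalize(v):
    """Scale a vector to unit length."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def log_similarity(f_n, f_m, eps=1e-9):
    """log(f_n^T f_m); eps guards against log of zero or negative values."""
    dot = sum(a * b for a, b in zip(f_n, f_m))
    return math.log(max(dot, eps))


def match_objects(demo_a, demo_b):
    """Greedily pair objects across two demos, best score first (assumption)."""
    scored = sorted(
        ((log_similarity(fa, fb), a, b)
         for a, fa in demo_a.items()
         for b, fb in demo_b.items()),
        reverse=True,
    )
    used_a, used_b, pairs = set(), set(), {}
    for _, a, b in scored:
        if a not in used_a and b not in used_b:
            pairs[a] = b
            used_a.add(a)
            used_b.add(b)
    return pairs


# Toy features for two demonstrations with differently named detections.
demo_a = {"mug_1": normalize([0.9, 0.1, 0.2]),
          "plate_1": normalize([0.1, 0.8, 0.3])}
demo_b = {"obj_x": normalize([0.2, 0.9, 0.2]),
          "obj_y": normalize([0.8, 0.2, 0.1])}
pairs = match_objects(demo_a, demo_b)
print(pairs)
```

With unit-norm features the score is at most zero, reached when the two vectors coincide, so "higher" here means "closer to zero".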

Mutual Information (MI) serves as the primary mechanism for establishing connections within the Manipulation Graph. Specifically, MI quantifies the statistical dependence between the motions of different objects observed across demonstrations; higher MI values indicate a stronger relationship, suggesting that the motion of one object provides information about the motion of another. This calculation is performed by estimating the probability distributions of object motions and then applying the formula [latex]MI(X;Y) = \iint p(x,y) \log\frac{p(x,y)}{p(x)\,p(y)} \, dx \, dy[/latex]. The resulting MI scores are then used to define edges in the initial graph structure, with edges weighted according to the strength of the observed statistical dependence. This allows the system to infer relationships between objects even when direct physical interaction is not explicitly demonstrated, forming a foundational representation of object connectivity.
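As an illustration of the idea, the sketch below computes a plug-in MI estimate from discrete samples. It replaces the integral with a sum over observed value pairs, which is a simplification: the motions are assumed to have been discretized into per-frame labels, and the edge threshold is a made-up value rather than anything reported in the paper.

```python
import math
from collections import Counter


def mutual_information(xs, ys):
    """Plug-in MI estimate (in nats) from paired discrete samples."""
    n = len(xs)
    joint = Counter(zip(xs, ys))
    px, py = Counter(xs), Counter(ys)
    mi = 0.0
    for (x, y), count in joint.items():
        p_xy = count / n
        mi += p_xy * math.log(p_xy / ((px[x] / n) * (py[y] / n)))
    return mi


# Per-frame motion labels: 1 = moving, 0 = still (toy data).
gripper = [0, 0, 1, 1, 0, 0, 1, 1]
mug = [0, 0, 1, 1, 0, 0, 1, 1]    # moves exactly when the gripper moves
plate = [0, 1, 0, 1, 0, 1, 0, 1]  # moves independently of the gripper

EDGE_THRESHOLD = 0.1  # hypothetical cutoff for adding an edge

mi_mug = mutual_information(gripper, mug)
mi_plate = mutual_information(gripper, plate)
edges = [name for name, mi in (("mug", mi_mug), ("plate", mi_plate))
         if mi > EDGE_THRESHOLD]
print(mi_mug, mi_plate, edges)
```

In this toy data the mug's motion is fully determined by the gripper's, so the estimate equals the entropy of a fair binary variable, while the plate's motion carries no information about the gripper and scores zero.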

Segmenting Action: Decoding Events Within the Manipulation Graph

Event Generation within the manipulation graph framework focuses on detecting alterations to the graph’s structure. These alterations specifically include the addition of new objects, representing the introduction of novel elements into the manipulated scene; the merging of objects, signifying their combination into a single, unified entity; and the splitting of objects, indicating their decomposition into multiple distinct components. The system continuously monitors these changes to establish a sequence of events that reflect the progression of a manipulation task, providing a granular representation of the actions performed.

Event Types, as defined within the manipulation graph framework, represent the discrete actions that constitute a task. These types are identified by changes observed in the graph’s structure – specifically, the addition of new objects, the merging of existing ones, or the splitting of a single object into multiple components. Each identified change is categorized as a unique Event Type, effectively decomposing the overall task into a sequence of fundamental actions. This categorization allows for the representation of complex manipulations as a series of distinct, interpretable steps, enabling analysis of task progression and facilitating the development of automated planning and learning systems.
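One way to picture event detection is as a comparison between two consecutive snapshots of the graph, each summarized as a set of connected groups of objects. The sketch below follows that reading; the function name `detect_events` and the event labels are hypothetical, and the rules are a simplification of whatever the paper implements.

```python
def detect_events(prev, curr):
    """Compare two partitions of objects into connected groups.

    prev, curr: sets of frozensets, one frozenset per connected group.
    Returns a list of (event_type, payload) tuples.
    """
    prev_objects = set().union(*prev)
    events = []

    # Addition: an object appears that was not present before.
    for group in curr:
        for obj in sorted(group - prev_objects):
            events.append(("add", obj))

    # Merge: a current group overlaps more than one previous group.
    for group in curr:
        if sum(1 for p in prev if p & group) > 1:
            events.append(("merge", group))

    # Split: a previous group is spread over more than one current group.
    for group in prev:
        if sum(1 for c in curr if c & group) > 1:
            events.append(("split", group))

    return events


before = {frozenset({"gripper"}), frozenset({"mug"}), frozenset({"table"})}
after = {frozenset({"gripper", "mug"}), frozenset({"table"}),
         frozenset({"lid"})}
events = detect_events(before, after)
print(events)
```

Here the grasp shows up as a merge of the gripper and mug groups, and the lid entering the scene shows up as an addition. Releasing the mug would appear as a split when the snapshots are compared in the other direction.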

Segmentation of robot demonstration data into discrete steps is achieved through analysis of the manipulation graph. This graph represents objects and their relationships, and changes within it – object additions, mergers, or splits – demarcate distinct phases of the task. By identifying these graph alterations, the demonstration is partitioned into interpretable segments, each corresponding to a specific action or sub-task. This process provides a structured representation of task progression, facilitating analysis, learning, and reproduction of the demonstrated behavior. The resulting segmented representation allows for a clear understanding of the temporal order and dependencies between individual steps within the overall task.
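The partitioning step can be sketched as a scan for change points. The example assumes each time step has already been reduced to a comparable snapshot of the graph (here, a frozenset of connected groups) and starts a new segment whenever the snapshot changes. The name `segment_demo` is hypothetical.

```python
def segment_demo(frames):
    """Split a demo into (start, end) index ranges with constant structure.

    frames: list of graph snapshots, one per time step.
    A new segment begins whenever a snapshot differs from the previous one.
    """
    if not frames:
        return []
    segments, start = [], 0
    for t in range(1, len(frames)):
        if frames[t] != frames[t - 1]:
            segments.append((start, t - 1))
            start = t
    segments.append((start, len(frames) - 1))
    return segments


free = frozenset({frozenset({"gripper"}), frozenset({"mug"})})
held = frozenset({frozenset({"gripper", "mug"})})

# Approach (2 frames), carry (3 frames), release (1 frame).
frames = [free, free, held, held, held, free]
segments = segment_demo(frames)
print(segments)  # [(0, 1), (2, 4), (5, 5)]
```

Real tracking data is noisy, so a practical version would likely need some smoothing or a minimum segment length before trusting every change point; that is left out here.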

ORION and Parameterized Symbolic Abstraction Graphs (PSAGs) build upon basic event segmentation by representing more complex temporal dependencies between actions. ORION utilizes a probabilistic framework to infer relationships between events, accommodating variations in timing and execution order within demonstrations. PSAGs, conversely, employ a symbolic representation where actions are nodes and temporal relationships – such as sequential ordering, parallelism, or conditional execution – are represented as labeled edges. This allows for the abstraction of task structure beyond simple linear sequences, capturing scenarios where the order or presence of certain actions is contingent on others, and facilitating the identification of hierarchical task structures.

Real-World Validation and the Path Forward

The developed methodology underwent rigorous testing utilizing the established ‘HandSOME Dataset’ and ‘Robocasa Dataset’, specifically designed to evaluate robotic manipulation understanding. Results demonstrate a substantial capacity to accurately segment and interpret complex manipulation tasks, culminating in an 85% success rate in generating correctly labeled events. This achievement signifies a significant advancement in the field, indicating the system’s ability to not only identify actions but also to categorize and understand their purpose within a broader context of manipulation. The high level of accuracy suggests the potential for real-world application in scenarios demanding precise robotic action interpretation and execution.

To validate the system’s applicability beyond simulation, researchers integrated it with a physical ‘PR2 Robot’ and employed ‘Aruco Markers’ as a robust tracking mechanism. This setup allowed for real-time assessment of the method’s ability to interpret manipulation events in a dynamic environment. The ‘Aruco Markers’ provided accurate pose estimation of objects, crucial for translating the system’s graph-based understanding into actionable robotic control. Successful execution within this robotic framework demonstrates the practicality of the approach, signifying a crucial step towards deploying the technology in real-world applications and confirming its potential for use in complex manipulation tasks.

The integration of Differentiable Kinematics represents a significant advancement in robotic manipulation, allowing for the direct optimization of robot actions based on the interpreted task graph. Rather than relying on pre-programmed movements or discrete planning steps, this approach treats the robot’s kinematic model as a differentiable function, enabling gradient-based optimization. This means the system can subtly adjust joint angles and trajectories to maximize the probability of successful task completion, as determined by the extracted graph representation of the manipulation. Consequently, the robot isn’t simply executing a plan, but actively learning to perform the task more effectively, leading to improved robustness and adaptability in dynamic or uncertain environments. This capability extends beyond simple trajectory correction, facilitating the refinement of entire manipulation strategies based on real-time feedback and observed outcomes.
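The core idea of optimizing through a kinematic model can be shown on a toy problem. The sketch below runs plain gradient descent on the end-effector position error of a two-link planar arm, with hand-derived gradients standing in for an automatic differentiation framework. The arm, link lengths, step size, and target are all assumptions for illustration. The objective is a simple position error, not the task-graph likelihood described above.

```python
import math

LINK_1, LINK_2 = 1.0, 1.0  # link lengths (assumed)


def forward(q1, q2):
    """End-effector position of a two-link planar arm."""
    x = LINK_1 * math.cos(q1) + LINK_2 * math.cos(q1 + q2)
    y = LINK_1 * math.sin(q1) + LINK_2 * math.sin(q1 + q2)
    return x, y


def loss_and_grad(q1, q2, target):
    """Half squared distance to the target and its gradient in joint space."""
    x, y = forward(q1, q2)
    ex, ey = x - target[0], y - target[1]
    # Jacobian entries of (x, y) with respect to (q1, q2).
    dx_dq1 = -LINK_1 * math.sin(q1) - LINK_2 * math.sin(q1 + q2)
    dx_dq2 = -LINK_2 * math.sin(q1 + q2)
    dy_dq1 = LINK_1 * math.cos(q1) + LINK_2 * math.cos(q1 + q2)
    dy_dq2 = LINK_2 * math.cos(q1 + q2)
    loss = 0.5 * (ex * ex + ey * ey)
    grad = (ex * dx_dq1 + ey * dy_dq1, ex * dx_dq2 + ey * dy_dq2)
    return loss, grad


def solve(target, q=(0.3, 0.3), lr=0.1, steps=2000):
    """Gradient descent on joint angles toward a target position."""
    q1, q2 = q
    for _ in range(steps):
        _, (g1, g2) = loss_and_grad(q1, q2, target)
        q1 -= lr * g1
        q2 -= lr * g2
    return q1, q2


target = (1.0, 1.0)
q1, q2 = solve(target)
final_x, final_y = forward(q1, q2)
print(final_x, final_y)
```

In a full system the loss would be derived from the task model, and the gradients would typically come from an autodiff library rather than being written by hand.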

The culmination of this research signifies a considerable advancement towards robotic systems exhibiting greater resilience and adaptability in performing complex manipulation tasks. By achieving an 85% success rate in event detection – a 20% improvement over existing baseline methods – the developed approach demonstrates a tangible leap in a robot’s ability to not only recognize actions but to understand their context and generalize learned skills to novel situations. This enhanced comprehension fosters more robust performance in dynamic and unpredictable environments, moving beyond pre-programmed sequences towards truly intelligent robotic manipulation and paving the way for robots capable of assisting in increasingly complex real-world scenarios.

The pursuit of robust robotic task learning, as detailed in this work, hinges on discerning essential relationships within complex demonstrations. SparTa’s approach to modeling tasks as manipulation graphs, and subsequently generating events based on object interactions, echoes a fundamental principle of system design. Donald Davies aptly stated, “You ask probing questions: ‘what are we actually optimizing for?’” This resonates with SparTa’s methodology; by focusing on how objects change relationships during a task, rather than simply recording motions, the system optimizes for a deeper understanding of the task’s underlying structure. This emphasis on discerning the essential – object relationships – from the accidental allows for a more generalizable and adaptable robotic control system, mirroring Davies’ belief in disciplined design.

Beyond the Graph

The construction of task models from limited demonstrations, as demonstrated by SparTa, highlights a crucial point: representation is not merely a coding scheme, but a constraint on possible futures. The method elegantly maps task structure onto manipulation graphs, allowing for event generation and probabilistic modeling. However, the very act of discretizing continuous action into ‘events’ introduces a fragility. A truly robust system will not simply react to change, but anticipate it, and that requires a deeper understanding of the underlying dynamics: a move beyond relational structures towards a predictive framework.

Current approaches still largely rely on demonstrations as the primary source of knowledge. While effective for bootstrapping, this creates a local maximum – the system is limited by the expressiveness, and biases, of the demonstrator. The next evolution must address the problem of grounding – connecting symbolic representations to the continuous world. This necessitates integration with learning methods that can exploit intrinsic motivation and explore beyond the confines of provided data, building models that are not just descriptive, but generative and adaptable.

The simplicity of the manipulation graph is its strength, but also hints at its limits. Complex tasks are rarely decomposable into neat, isolated relational changes. The challenge lies in creating representations that can capture hierarchical structure and temporal dependencies without succumbing to combinatorial explosion. A system that can learn the appropriate level of abstraction, dynamically adjusting its representation based on the task at hand, will be far more resilient and, ultimately, more intelligent.

Original article: https://arxiv.org/pdf/2602.16911.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-21 08:44