Author: Denis Avetisyan

A new framework empowers robots to collaboratively assemble furniture by learning how to adapt to real-world uncertainties and dynamically adjust their support strategies.

Researchers present A3D, a dual-arm manipulation system utilizing adaptive affordance learning and point cloud processing for robust robotic assembly.

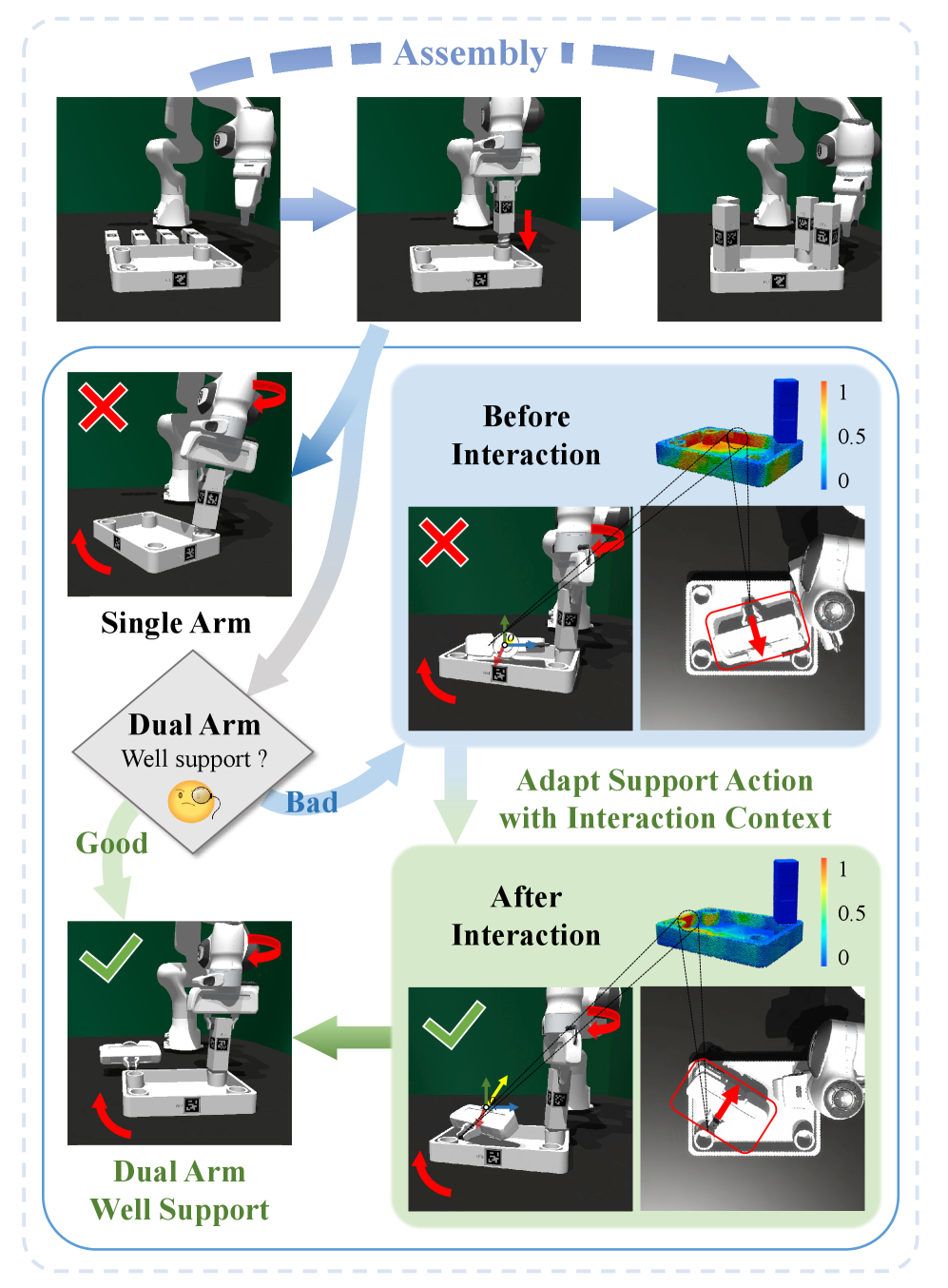

While robotic assembly promises increased efficiency, reliably coordinating dual-arm manipulation for complex tasks remains a significant challenge due to the need for adaptable support strategies. This paper introduces ‘A3D: Adaptive Affordance Assembly with Dual-Arm Manipulation’, a framework that learns to identify optimal support locations on furniture parts through adaptive affordance learning and real-time interaction feedback. By modeling part interaction with dense point-level geometric representations, A3D enables generalization across diverse geometries and demonstrates robust performance in both simulation and real-world scenarios. Could this approach unlock truly versatile robotic assistants capable of tackling a wider range of assembly and manipulation tasks?

The Challenge of Robotic Furniture Assembly: A Matter of Predictability

Conventional robotic assembly techniques often falter when confronted with the inherent challenges of furniture construction. Unlike the highly controlled environments of automotive or electronics manufacturing, furniture presents a significant degree of variability in part shapes, sizes, and orientations. This necessitates extensive manual programming – a process where human experts meticulously define the robot’s movements for each specific assembly step and furniture piece. Furthermore, these systems demand precise conditions, including well-lit spaces and perfectly positioned components, to execute tasks successfully. Even slight deviations from these ideal parameters – a misaligned dowel, a warped panel, or unexpected clutter – can disrupt the assembly process, highlighting the limitations of current robotic approaches in handling the unpredictable nature of real-world furniture.

The promise of automated furniture assembly faces a significant hurdle in the real world: a lack of robust adaptability. Current robotic systems, while capable in highly controlled factory settings, often falter when confronted with the inevitable imperfections of unstructured environments. Slight variations in the position or orientation of a component – an object “pose” that differs from the programmed expectation – can disrupt the assembly process, requiring human intervention. Similarly, unexpected changes like shadows, clutter, or even minor shifts in lighting conditions can confuse the robot’s vision systems. This sensitivity limits deployment to controlled settings and prevents widespread adoption in homes, warehouses, or dynamic workspaces where flexibility and resilience are paramount. Overcoming this challenge necessitates the development of more sophisticated perception algorithms and control strategies that enable robots to not only see the environment, but also to understand and adapt to its inherent uncertainties.

A3D: An Algorithm for Adaptable Assembly

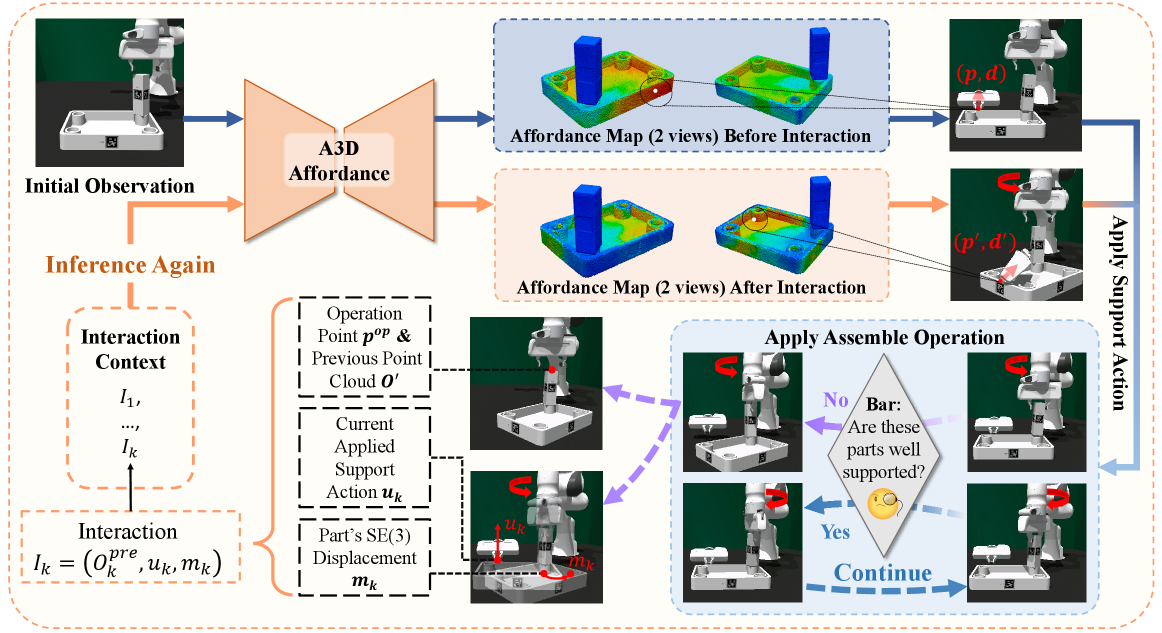

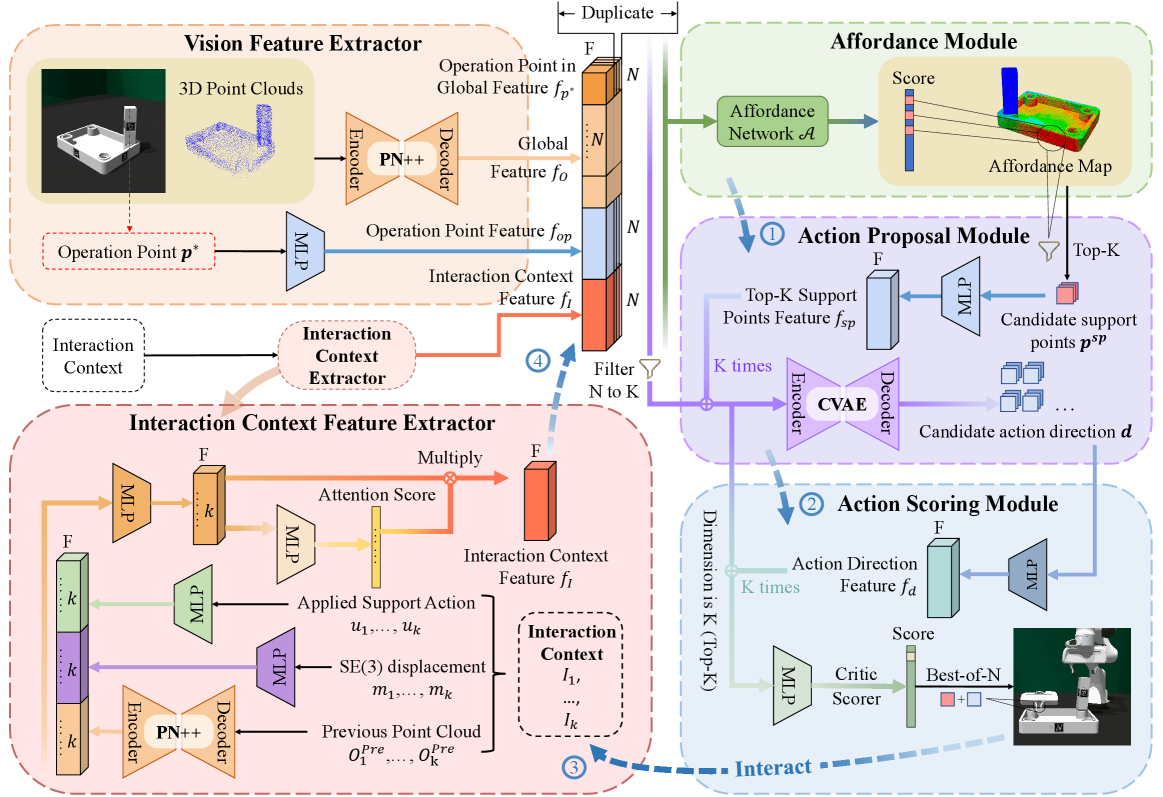

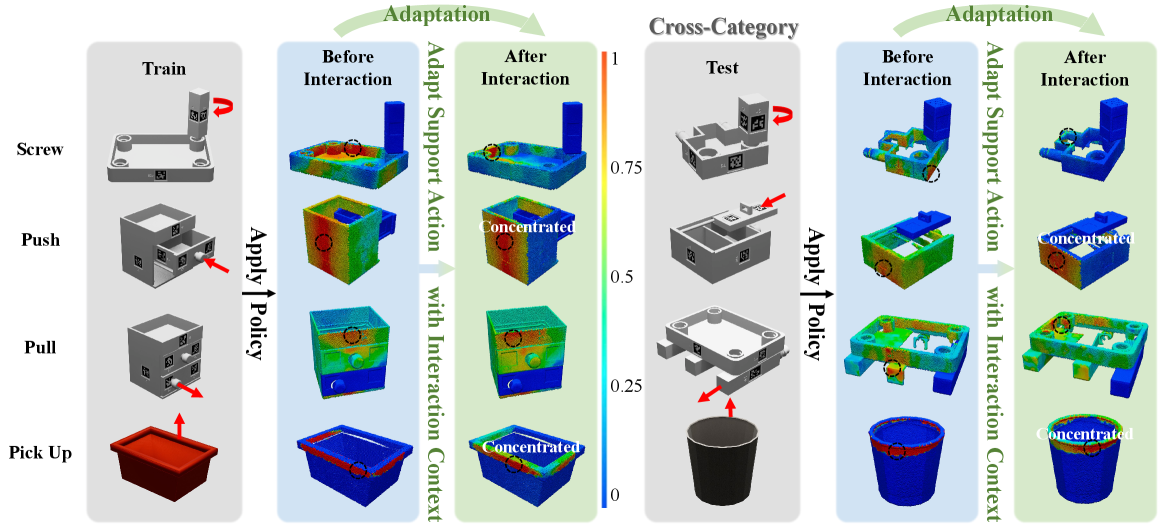

The Adaptive Affordance Assembly (A3D) framework utilizes 3D point cloud data as the primary input for robotic furniture assembly. This approach moves beyond reliance on pre-programmed models or precise localization by enabling the robot to directly perceive and interpret the geometry of parts and the surrounding environment. Affordances – the potential actions possible with an object – are learned directly from this point cloud data, allowing the system to identify grasp points, mating surfaces, and potential assembly sequences. The framework employs machine learning techniques to build a predictive model of these affordances, adapting to variations in object pose, lighting conditions, and partial occlusions inherent in real-world assembly scenarios. This data-driven approach enables the robot to autonomously discover how to interact with furniture components without explicit, hand-coded instructions regarding object manipulation.
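The sketch below makes dense, point-level affordance prediction concrete. A toy logistic scorer stands in for the learned network. It uses two hypothetical hand-picked features: height above the lowest point, and horizontal distance from the centroid. The feature choice and the names `per_point_affordance` and `best_point` are illustrative assumptions, not taken from the paper. What the sketch shows is the shape of the output: one score per point, from which a candidate interaction location can be read off.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def per_point_affordance(points: Sequence[Point],
                         weights: Tuple[float, float] = (1.0, 1.0)) -> List[float]:
    """Assign every point a score between 0 and 1.

    Hypothetical stand-in for a learned per-point network: a logistic
    function of two hand-picked geometric features.
    """
    n = len(points)
    cx = sum(p[0] for p in points) / n
    cy = sum(p[1] for p in points) / n
    z_min = min(p[2] for p in points)
    scores = []
    for x, y, z in points:
        height = z - z_min                   # feature 1: height above the lowest point
        radial = math.hypot(x - cx, y - cy)  # feature 2: horizontal distance to centroid
        logit = weights[0] * height + weights[1] * radial
        scores.append(1.0 / (1.0 + math.exp(-logit)))  # sigmoid squashes the logit
    return scores


def best_point(points: Sequence[Point], scores: Sequence[float]) -> Tuple[Point, float]:
    """Return the highest-scoring point and its score."""
    i = max(range(len(points)), key=lambda k: scores[k])
    return points[i], scores[i]


if __name__ == "__main__":
    cloud = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 0.0, 2.0)]
    s = per_point_affordance(cloud)
    print(best_point(cloud, s))
```

In a real system the two hand-written features would be replaced by learned per-point embeddings. The "one score per point, then pick" structure stays the same.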

The A3D framework leverages dual-arm robotic manipulation to enhance assembly robustness and flexibility. This is achieved through an intelligent action proposal system that generates a set of feasible assembly actions based on the current state of the environment and the assembly plan. Each proposed action is then scored based on multiple criteria, including kinematic feasibility, collision avoidance, and estimated success rate, determined via learned affordance models. This scoring process allows the system to select the optimal action in dynamic environments, accommodating unexpected disturbances or variations in part placement and ensuring successful assembly even with incomplete or noisy sensory data. The dual-arm setup enables efficient task allocation and parallel execution of assembly steps, further improving overall assembly time and adaptability.
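The article lists the scoring criteria but not how they are combined. One plausible reading, sketched below under that assumption, treats kinematic feasibility and collision avoidance as hard filters. It then ranks the surviving candidates by their learned success estimate. `Candidate` and `select_action` are hypothetical names. In practice the success probabilities would come from the learned affordance model rather than being hard-coded.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Candidate:
    name: str
    reachable: bool       # kinematic feasibility, e.g. an IK solution exists
    collision_free: bool  # outcome of a collision check along the motion
    success_prob: float   # learned success estimate in [0, 1]


def select_action(cands: List[Candidate]) -> Optional[Candidate]:
    """Drop infeasible proposals, then return the most promising survivor."""
    feasible = [c for c in cands if c.reachable and c.collision_free]
    if not feasible:
        return None  # nothing executable: caller should re-propose or re-perceive
    return max(feasible, key=lambda c: c.success_prob)
```

Filtering before ranking means a high success estimate can never rescue a proposal the arm cannot reach or that would collide. A weighted sum of criteria would allow that trade-off, which is usually undesirable for safety-critical constraints.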

Interaction context adaptation within the A3D framework dynamically refines affordance predictions during furniture assembly by incorporating real-time feedback from the robotic system. This process involves continuously evaluating the success or failure of proposed actions – such as grasping or insertion – and updating the underlying affordance model accordingly. Specifically, the framework leverages sensory data, including force and visual feedback, to assess the validity of current predictions. Positive feedback strengthens the association between observed states and successful actions, while negative feedback triggers adjustments to the affordance model, potentially altering grasp points, approach angles, or even the sequence of assembly steps. This iterative refinement allows A3D to handle variations in object pose, environmental conditions, and potential assembly errors, enhancing the robustness and adaptability of the robotic assembly process.
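A simple heuristic can illustrate how interaction feedback might reshape a per-point affordance map between attempts. The sketch below is a hand-written stand-in, not the paper's learned update. After a failed attempt it scales down the scores of points near the tried location. After a success it scales them up, capped at 1.0. The `radius` and `factor` values and the function name are assumptions chosen for illustration.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def update_scores(points: Sequence[Point], scores: Sequence[float], tried: Point,
                  succeeded: bool, radius: float = 0.05, factor: float = 0.5) -> List[float]:
    """Return a new score list after one attempt at `tried`.

    Points within `radius` of the attempt are multiplied by `factor` on
    failure, or divided by it on success (capped at 1.0). All other
    points are left unchanged. Assumes 0 < factor < 1.
    """
    new_scores = []
    for p, s in zip(points, scores):
        if math.dist(p, tried) <= radius:
            s = min(1.0, s / factor) if succeeded else s * factor
        new_scores.append(s)
    return new_scores
```

Calling this after every attempt yields the iterative refinement described above. Regions that keep failing fade out of the candidate set, so the next proposal tends to move elsewhere on the part.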

Data-Driven Learning: Establishing a Foundation of Reliability

The A3D framework uses a hybrid data collection strategy to improve model performance and robustness. Offline data, acquired through both random and heuristic sampling, provides the initial training set. During deployment, an online adaptive component then gathers data from the agent's interactions with the environment. It uses this data to refine the model's parameters in real time, so the system can adapt to unforeseen circumstances and keep improving its decision-making.
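In training terms, the hybrid strategy amounts to drawing batches from two data pools. The sketch below assumes a fixed mixing ratio between two kinds of samples: offline samples from random and heuristic sampling, and online samples gathered during deployment. The class name, the ratio, and the sample format are hypothetical. The article does not say how the paper actually balances the two sources.

```python
import random
from typing import Any, List, Sequence


class HybridBuffer:
    """Mixes a fixed offline pool with a growing online pool (hypothetical sketch)."""

    def __init__(self, offline: Sequence[Any], online_ratio: float = 0.5, seed: int = 0):
        if not offline:
            raise ValueError("offline pool must be non-empty")
        self.offline = list(offline)  # random + heuristic samples collected up front
        self.online: List[Any] = []   # filled while the robot is operating
        self.online_ratio = online_ratio
        self.rng = random.Random(seed)

    def add_online(self, sample: Any) -> None:
        """Store one interaction gathered during deployment."""
        self.online.append(sample)

    def sample_batch(self, batch_size: int) -> List[Any]:
        """Draw a training batch, with replacement, from both pools."""
        n_online = round(batch_size * self.online_ratio) if self.online else 0
        n_offline = batch_size - n_online
        batch = self.rng.choices(self.online, k=n_online) if n_online else []
        batch += self.rng.choices(self.offline, k=n_offline)
        return batch


if __name__ == "__main__":
    offline = [("random", i) for i in range(5)] + [("heuristic", i) for i in range(5)]
    buf = HybridBuffer(offline)
    buf.add_online(("online", 0))
    print(buf.sample_batch(8))
```

Until any online data exists, batches come entirely from the offline pool. Once deployment starts, a fixed share of every batch reflects what the robot has actually experienced.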

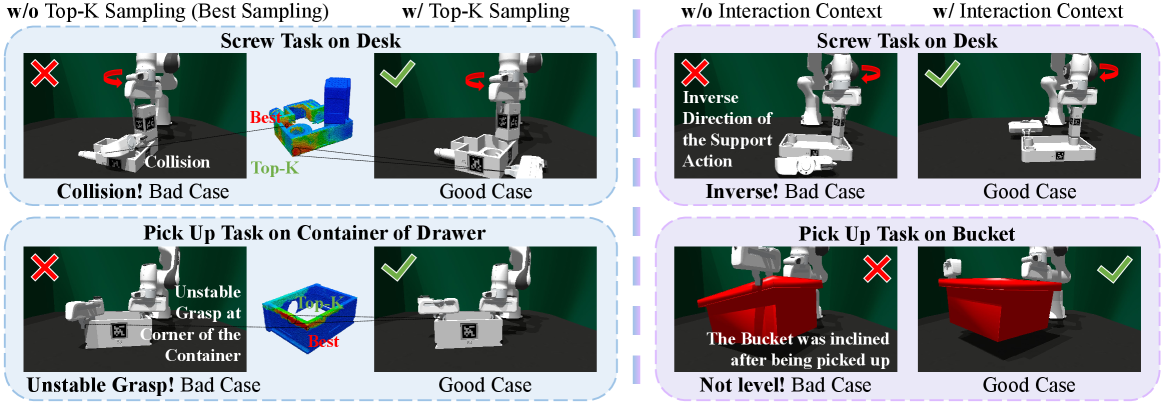

Within the action proposal module, Top-K sampling is implemented to generate a set of K potential actions for evaluation. This method selects the K most probable actions based on the current state, rather than relying on a single, highest-probability action. By considering multiple candidates, the system mitigates the risk of selecting a suboptimal action in novel or unforeseen circumstances. The value of K is a tunable hyperparameter that balances exploration of diverse actions with exploitation of known effective actions. This approach improves robustness by allowing the system to adapt to unexpected situations and prevents reliance on a narrow set of learned behaviors.
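The Top-K step can be sketched in a few lines. Keep the K proposals with the highest proposal scores, then re-rank only that shortlist with a separate evaluator. The function name is an assumption, as is the `evaluate` callback, which stands in for whatever scoring model the system applies to each candidate. `heapq.nlargest` is from the Python standard library.

```python
import heapq
from typing import Callable, List, Sequence, Tuple, TypeVar

A = TypeVar("A")


def top_k_then_evaluate(actions: Sequence[A], proposal_scores: Sequence[float], k: int,
                        evaluate: Callable[[A], float]) -> Tuple[A, List[A]]:
    """Shortlist the k highest-scoring proposals, then pick the one `evaluate` rates best."""
    if k < 1:
        raise ValueError("k must be at least 1")
    # Pair scores with indices so ties are broken deterministically and the
    # actions themselves never need to be comparable.
    top = heapq.nlargest(k, zip(proposal_scores, range(len(actions))))
    shortlist = [actions[i] for _, i in top]
    best = max(shortlist, key=evaluate)
    return best, shortlist


if __name__ == "__main__":
    acts = ["a", "b", "c", "d"]
    scores = [0.9, 0.1, 0.8, 0.7]
    critic = {"a": 0.2, "b": 0.9, "c": 0.6, "d": 0.1}.get
    print(top_k_then_evaluate(acts, scores, k=2, evaluate=critic))
```

With K = 2, the evaluator overturns the proposal's favourite `"a"` in favour of `"c"`. Meanwhile `"b"`, which the evaluator would rate highest, never reaches the shortlist. That is the trade-off K controls: a larger K lets more candidates through, at the cost of evaluating more of them.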

The A3D framework achieves higher success rates than the methods it is compared against, in both simulated and real-world environments. This gain is attributed largely to its hybrid data collection strategy. Offline data, generated through random and heuristic sampling, provides breadth of coverage. Online data gathered during operation lets the model adapt to the situations it actually encounters. Together, the two sources allow the model to generalize across diverse scenarios and refine its behavior through real-time interaction, improving robustness and reliability.

Beyond Furniture: Towards a Universal Language of Manipulation

The architecture underpinning the Adaptive Affordance Assembly (A3D) system isn't limited to furniture assembly; it represents a step towards robots with broadly applicable manipulation skills. Rather than following precise instructions for each object, A3D focuses on how an object can be interacted with, its affordances, and then plans actions based on these perceived possibilities. This decoupling of action planning from detailed object models is crucial. It lets a robot approach novel situations and unfamiliar objects with more adaptability than model-based pipelines typically allow. The skills exercised in furniture assembly (grasping, supporting, and aligning) could therefore transfer to a wide range of tasks, from organizing cluttered workspaces to assisting in complex manufacturing processes. Ultimately, this paves the way for robots that can learn and operate effectively in unstructured, real-world environments.

A significant limitation of traditional robotic manipulation is its reliance on detailed, pre-existing object models, a requirement that proves brittle in real-world, unstructured environments. The A3D framework addresses this by decoupling action planning from precise object knowledge. Instead of needing a complete digital model, the system learns to perceive affordances, the potential actions an object enables, directly from point cloud observations. Robots can therefore operate with minimal prior knowledge and adapt to novel objects and configurations without laborious re-programming or hand-built CAD models. A robot equipped with A3D is designed to handle parts it did not see during training, as its reported generalization across diverse geometries suggests. This is a crucial step towards more flexible robotic systems that can thrive in dynamic, unpredictable settings.

Robotic systems are increasingly designed to interact with the world in ways that mirror human dexterity, and a crucial component of this progress lies in how these systems perceive and react to object affordances – the possibilities for action an object presents. Recent advancements demonstrate that by coupling visual perception with a support strategy, robots can move beyond pre-programmed manipulations and engage with complex objects more intuitively. This integration allows the robot to ‘see’ how an object can be supported – identifying stable grasping points and anticipating how forces will be distributed – and then dynamically adjust its actions to maintain stability throughout a manipulation task. The result is a more fluid, adaptable interaction, enabling robots to handle objects with varying shapes, weights, and fragility without requiring detailed prior knowledge or precise modeling – effectively bridging the gap between rigid automation and flexible, human-like manipulation.

The presented A3D framework favors robust behavior over solutions that merely work in narrow test conditions. This echoes the spirit of Paul Erdős, who famously stated, “A mathematician knows a great deal of things – and knows that there are many things he doesn’t.” Through adaptive affordance learning and dynamic adjustment of its support strategy, the A3D system doesn't assume a static understanding of the assembly process. Instead, it continuously refines its knowledge based on real-time interaction feedback, acknowledging the task's inherent uncertainty and complexity. Each adjustment is meant to bring the robot's actions closer to a stable, successful outcome while avoiding redundant attempts. The aim is a solution that holds up beyond the test cases rather than one that simply ‘works on tests’, even though, as a learned system, it stops short of being provably robust.

Future Directions

The presented A3D framework, while demonstrating a functional approach to dual-arm assembly, merely scratches the surface of a fundamentally difficult problem. The reliance on learned affordances, though pragmatic, skirts the issue of true geometric understanding. A robust solution demands more than probabilistic associations; it requires a formal representation of stability, force closure, and the inherent symmetries within the assembly process. The current method, inevitably, will falter when confronted with novel configurations or imperfect environmental conditions – situations where learned heuristics offer little advantage over genuine deduction.

Future work should prioritize the development of provably correct assembly strategies. The pursuit of ‘interactive adaptation’ is, frankly, a concession to the inherent imprecision of sensing and actuation. While real-time feedback is valuable, it should serve to refine a mathematically sound plan, not to compensate for its absence. The field would benefit from a shift away from data-hungry learning paradigms and towards the formal verification of robotic behaviors – a move that demands, admittedly, a far greater degree of mathematical rigor.

Ultimately, the true measure of success will not be the ability to assemble a specific piece of furniture, but the capacity to generalize assembly principles to arbitrary objects and environments. This requires an architecture that moves beyond the limitations of point cloud processing and embraces a more symbolic, knowledge-based representation of the world – a representation where the robot does not ‘see’ a collection of points, but ‘understands’ the geometric relationships that define its manipulability.

Original article: https://arxiv.org/pdf/2601.11076.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-01-20 15:49