Author: Denis Avetisyan

Researchers are blending the power of symbolic reasoning with neural networks to create multi-agent systems that can better understand, diagnose, and coordinate with each other.

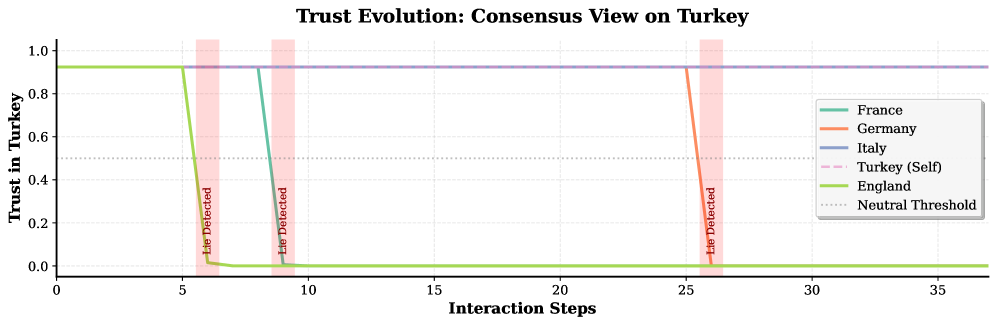

![A system employing differentiable trust dynamically adjusts agent weighting during communication: reliable agents keep a consistent trust value of approximately [latex]0.94[/latex], while malfunctioning agents are progressively down-weighted to around [latex]0.08[/latex]. This lets the consensus mechanism, represented by a multilayer neural network, closely track ground-truth signal quality, notably outperforming simple averaging, which is biased by the faulty sensors.](https://arxiv.org/html/2602.12083v1/x8.png)

This review explores the use of differentiable modal logic to enable semantic debugging and improved control within complex multi-agent environments.

As multi-agent systems grow in complexity, diagnosing semantic failures demands reasoning about knowledge and causality. That is precisely the domain of modal logic, which has traditionally been hampered by the need for manually defined relationships. The paper, ‘Differentiable Modal Logic for Multi-Agent Diagnosis, Orchestration and Communication’, introduces a neurosymbolic framework that uses differentiable modal logic (DML) and Modal Logical Neural Networks (MLNNs) to learn these structures directly from behavioral data. By unifying epistemic, temporal, and deontic reasoning, the authors demonstrate interpretable learned models for trust, causality, and agent confidence, with applications ranging from detecting deception to mitigating LLM hallucinations. Could this approach unlock a new era of robust, explainable, and autonomously debuggable multi-agent AI?

The Illusion of Swarm Intelligence: Why Even Good Scouts Fail

The efficacy of multi-agent systems, often modeled on swarms in nature, hinges on the consistent and accurate exchange of information. However, research demonstrates a surprising fragility within these systems; a single agent providing flawed data – termed a ‘Broken Scout’ – can rapidly destabilize the collective’s understanding of its environment. This isn’t simply a matter of statistical noise; the propagation of inaccurate information can overwhelm correct signals, leading the swarm to make demonstrably poor decisions, even when most agents are functioning optimally. Simulations reveal that the impact of a Broken Scout isn’t linear; the error amplifies as other agents incorporate and re-transmit the flawed data, creating a cascading effect that severely compromises the swarm’s ability to navigate, forage, or respond to threats. Understanding this vulnerability is crucial for designing robust multi-agent systems capable of filtering misinformation and maintaining collective intelligence in dynamic and uncertain environments.

Collective intelligence rests on a shared foundation of trust, and that foundation is fragile in the face of misinformation. Even a small proportion of inaccurate information, introduced intentionally or not, can propagate rapidly through a multi-agent system and undermine the validity of the collective’s knowledge. This erosion of trust doesn’t simply introduce errors; it diminishes the swarm’s capacity for effective action, as agents begin to discount reliable signals and prioritize self-preservation over collaborative problem-solving. Consequently, a once-coherent collective can devolve into a fragmented group, unable to leverage its combined intelligence and increasingly susceptible to manipulation or failure. The speed and scale of this breakdown highlight the need for mechanisms that verify information and maintain confidence within these systems.

Within dynamic multi-agent systems, discerning trustworthy information proves remarkably challenging for conventional methodologies. Existing algorithms often rely on static reputation scores or pre-defined hierarchies, which falter when faced with the fluidity of a swarm and the potential for rapid shifts in agent reliability. A key difficulty lies in the inability of these systems to effectively assess the context of information; a scout previously considered reliable may transmit inaccurate data due to unforeseen circumstances, yet traditional approaches struggle to adapt to such nuanced changes. This limitation leaves the collective vulnerable to the propagation of false or misleading signals, hindering effective decision-making and ultimately compromising the swarm’s overall intelligence. The challenge isn’t simply identifying bad actors, but rather, continuously evaluating the validity of information within a constantly evolving network where trust is not inherent, but earned – and easily lost.

![Trust updates are learned by penalizing agents whose claims deviate from reality beyond a tolerance [latex]\tau[/latex], with the strength of corrective feedback proportional to both deviation magnitude and current trust, thereby preserving trust for agents within the noise tolerance.](https://arxiv.org/html/2602.12083v1/x9.png)
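The update rule described in the caption can be sketched in a few lines. This is a minimal illustration, not the paper's implementation: the function name `update_trust`, the step size `lr`, the exact form of the penalty, and the scenario values are all assumptions chosen for readability.

```python
def update_trust(trust, claim, truth, tau=0.1, lr=0.5):
    """Apply one corrective step to a single agent's trust value in [0, 1].

    Assumed rule (hypothetical, not taken from the paper): if the claim
    deviates from the truth by more than the tolerance tau, trust is reduced
    by lr * trust * deviation. Claims inside the tolerance leave trust as-is.
    """
    deviation = abs(claim - truth)
    if deviation <= tau:
        return trust  # within the noise tolerance: no penalty
    new_trust = trust - lr * trust * deviation
    return min(1.0, max(0.0, new_trust))  # keep trust in [0, 1]


# Hypothetical scenario: one accurate agent and one faulty agent,
# both starting from the same trust value.
trust = {"reliable": 0.9, "faulty": 0.9}
for _ in range(20):
    trust["reliable"] = update_trust(trust["reliable"], claim=0.52, truth=0.50)
    trust["faulty"] = update_trust(trust["faulty"], claim=0.95, truth=0.50)

print(trust)
```

Because the penalty scales with current trust, the faulty agent's trust decays geometrically toward zero rather than going negative, while the reliable agent, whose error stays inside the tolerance, is never penalized.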

Formalizing Belief: A Neurosymbolic Patch for Fragile Minds

The presented framework utilizes Differentiable Modal Logic (DML) as a neurosymbolic approach to formalize agent knowledge and intentions. This integration combines the expressive power of modal logic – traditionally used to represent modalities like belief and obligation – with the learning capabilities of neural networks. DML allows for the representation of propositions and relationships between ‘possible worlds’, enabling a computational model of agent reasoning. Specifically, agent states and their associated beliefs are encoded as differentiable functions, facilitating gradient-based learning and adaptation within a larger system. This allows the framework to not only represent knowledge and intentions, but also to learn and refine them through interaction with an environment.

The MLNN (Modal Logical Neural Network) parameterizes Kripke structures, the standard semantic foundation for modal logic. It does so by mapping the accessibility relations and state properties of a Kripke structure to the weights and biases of a neural network. Consequently, the MLNN learns to represent possible worlds and the relationships between them, in particular which worlds are accessible from a given world according to an agent’s beliefs. This allows the system to move beyond predefined relationships and instead infer complex, data-driven relationships between possible worlds, effectively modeling agent beliefs as learned functions of the environment.
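To make the idea of a parameterized Kripke structure concrete, here is a minimal sketch of soft modal operators over a real-valued accessibility matrix. It uses a common fuzzy-logic relaxation (min and max over worlds) that may differ from the operators the paper actually uses; the names `soft_box` and `soft_diamond` and the example logits are illustrative assumptions. In a real MLNN the logits would be learned by gradient descent rather than written by hand.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def soft_box(access_logits, truth, world):
    """Soft 'necessarily p' at `world`: p holds in every accessible world.

    Assumed relaxation: min over worlds v of max(1 - A[world][v], p[v]),
    where A = sigmoid(access_logits) is a soft accessibility relation.
    """
    row = access_logits[world]
    return min(max(1.0 - sigmoid(a), p) for a, p in zip(row, truth))


def soft_diamond(access_logits, truth, world):
    """Soft 'possibly p' at `world`: p holds in some accessible world."""
    row = access_logits[world]
    return max(min(sigmoid(a), p) for a, p in zip(row, truth))


# Hypothetical 3-world structure: positive logits mean "accessible".
access_logits = [
    [4.0, 4.0, -4.0],   # world 0 sees worlds 0 and 1
    [-4.0, 4.0, 4.0],   # world 1 sees worlds 1 and 2
    [-4.0, -4.0, 4.0],  # world 2 sees only itself
]
truth = [0.9, 0.8, 0.1]  # degree to which p holds in each world

box_w0 = soft_box(access_logits, truth, 0)
box_w1 = soft_box(access_logits, truth, 1)
diamond_w0 = soft_diamond(access_logits, truth, 0)
print(box_w0, box_w1, diamond_w0)
```

World 1 can reach world 2, where p is mostly false, so "necessarily p" scores low there, while world 0 only reaches worlds where p is mostly true.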

The framework facilitates reasoning regarding agent mental states – knowledge and beliefs – and normative obligations within environments characterized by uncertainty. By representing possible worlds and relationships between them via parameterized Kripke structures, the system can evaluate the truth of propositions across these worlds, accounting for varying degrees of agent awareness and commitment. This allows for the computation of agent beliefs given available information, the deduction of obligatory actions based on defined rules or goals, and the assessment of potential outcomes contingent on agent knowledge and intended behavior, even when complete information is not available. The differentiable nature of the logic enables gradient-based learning and adaptation to noisy or incomplete data, improving the robustness of reasoning in uncertain scenarios.

Measuring the Unreliable: Calibration and Consistency as Lifelines

The framework employs doxastic logic to represent agent beliefs as probabilistic statements, enabling quantifiable assessment of agent calibration. Calibration, in this context, measures the correspondence between an agent’s stated confidence in a proposition and the actual probability of that proposition being true. Specifically, the framework analyzes the distribution of agent confidence scores against observed accuracy rates; a well-calibrated agent will exhibit a strong correlation between high confidence and high accuracy, and vice versa. Deviations from this ideal, such as overconfidence or underconfidence, are identified and used as indicators of potential unreliability. This probabilistic modeling allows for a nuanced evaluation beyond simple accuracy metrics, focusing on the trustworthiness of the agent’s internal belief state.
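One standard way to quantify this kind of calibration is the expected calibration error (ECE), which bins predictions by confidence and compares average confidence with observed accuracy in each bin. The sketch below is illustrative only: ECE is a widely used metric, but the source does not say which calibration measure the paper uses, and the two agents' data are made up.

```python
def expected_calibration_error(confidences, outcomes, n_bins=5):
    """Weighted average gap between stated confidence and observed accuracy.

    confidences: floats in [0, 1]; outcomes: 1 if the claim was true, else 0.
    """
    if not confidences or len(confidences) != len(outcomes):
        raise ValueError("need equally sized, non-empty inputs")
    bins = [[] for _ in range(n_bins)]
    for conf, outcome in zip(confidences, outcomes):
        index = min(int(conf * n_bins), n_bins - 1)  # conf == 1.0 goes in last bin
        bins[index].append((conf, outcome))
    total = len(confidences)
    ece = 0.0
    for members in bins:
        if not members:
            continue
        avg_conf = sum(c for c, _ in members) / len(members)
        accuracy = sum(o for _, o in members) / len(members)
        ece += (len(members) / total) * abs(avg_conf - accuracy)
    return ece


# Hypothetical agents: both claim 90% confidence on ten statements.
calibrated = expected_calibration_error([0.9] * 10, [1] * 9 + [0])
overconfident = expected_calibration_error([0.9] * 10, [1] * 3 + [0] * 7)
print(calibrated, overconfident)
```

The first agent is right nine times out of ten, matching its stated confidence, so its error is close to zero; the second is right only three times, giving a gap of about 0.6.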

SayDoConsistency is calculated as the degree to which an agent’s actions correspond to its previously stated intentions or commitments. This metric assesses whether an agent demonstrably does what it says it will do, providing a quantifiable measure of its reliability. Implementation involves tracking both declared intentions – represented as formal statements of planned behavior – and observed actions. A high SayDoConsistency score indicates strong alignment between stated goals and actual execution, while discrepancies suggest potential unreliability or deceptive behavior. This is critical in multi-agent systems where trust and coordination depend on predictable behavior from all participating agents.
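As a rough illustration, the metric can be approximated as the fraction of declared intentions that are matched by the action actually observed at the same step. The function name, the string encoding of intentions and actions, and the exact-match criterion are assumptions; the paper's formal definition may differ.

```python
def say_do_consistency(declared, observed):
    """Fraction of steps where the observed action equals the declared intention."""
    if not declared or len(declared) != len(observed):
        raise ValueError("need equally sized, non-empty sequences")
    matches = sum(1 for said, did in zip(declared, observed) if said == did)
    return matches / len(declared)


# Hypothetical agent log: the agent announced "report" but stayed idle.
declared = ["scan", "move", "report", "move"]
observed = ["scan", "move", "idle", "move"]
score = say_do_consistency(declared, observed)
print(score)  # 0.75
```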

Detection of agent hallucination – the confident assertion of false statements – is a key component in improving swarm reliability. The methodology identifies these instances and allows unreliable agents to be isolated within the communication network. In testing scenarios involving multi-agent communication, this hallucination detection and mitigation strategy resulted in an 81% reduction in Mean Absolute Error (MAE). This improvement indicates a significant gain in the accuracy and trustworthiness of information propagated within the swarm, directly addressing the impact of confidently incorrect assertions on collective decision-making.

![Reliability diagrams reveal that while population-level calibration can appear reasonable, individual agents exhibit varying degrees of miscalibration, ranging from slight underconfidence to catastrophic overestimation of their predictions, as evidenced by significant deviations from perfect calibration [latex] (y=x) [/latex].](https://arxiv.org/html/2602.12083v1/x5.png)

Beyond Band-Aids: Towards Truly Dependable Multi-Agent Systems

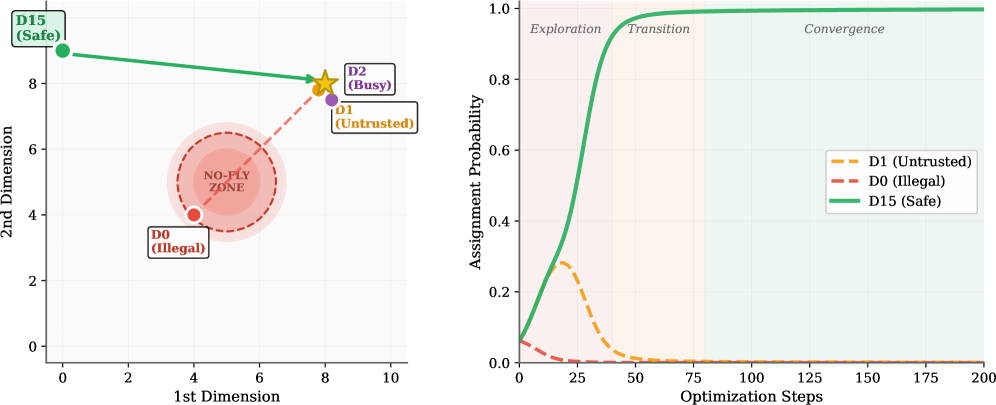

The architecture leverages deontic logic to establish a formal system of obligations and constraints governing agent behavior. This allows for the precise definition of permissible and prohibited actions within the multi-agent system; for example, a ‘No-Fly Zone’ can be mathematically encoded as an obligation not to enter a specified airspace. Rather than relying on probabilistic or reactive safety measures, this approach guarantees constraint satisfaction by design, ensuring agents adhere to pre-defined rules even in complex and dynamic environments. The system then actively monitors agent plans and actions, verifying compliance with these deontic constraints and intervening to prevent violations, thereby bolstering safety and predictability.
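The ‘No-Fly Zone’ example can be sketched as a hard check applied to a plan before execution. This is a simplified stand-in for the paper's deontic machinery: the `NoFlyZone` class, the rectangular zone, and the waypoint-only check are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NoFlyZone:
    """Axis-aligned rectangle that agents are obliged not to enter (hypothetical)."""
    x_min: float
    x_max: float
    y_min: float
    y_max: float

    def contains(self, point):
        x, y = point
        return self.x_min <= x <= self.x_max and self.y_min <= y <= self.y_max


def first_violation(plan, zone):
    """Return the index of the first waypoint inside the zone, or None.

    Only waypoints are checked; segments between waypoints are not.
    """
    for index, point in enumerate(plan):
        if zone.contains(point):
            return index
    return None


zone = NoFlyZone(x_min=4.0, x_max=6.0, y_min=4.0, y_max=6.0)
unsafe_plan = [(0.0, 0.0), (2.0, 2.0), (5.0, 5.0), (9.0, 9.0)]
safe_plan = [(0.0, 0.0), (2.0, 7.0), (9.0, 9.0)]

print(first_violation(unsafe_plan, zone))  # 2
print(first_violation(safe_plan, zone))    # None
```

A monitor built this way can reject or repair a plan before it runs, which is the "intervening to prevent violations" step described above.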

The developed framework establishes a dependable system for guaranteeing agent behavior adheres to established safety guidelines and operational objectives. Through rigorous testing in multi-modal orchestration experiments, the system demonstrated a high degree of accuracy, achieving a Precision-Recall Area Under the Curve (PR-AUC) of 0.964. This performance indicates a substantial ability to correctly identify and maintain safe operational parameters, even within complex scenarios involving multiple interacting agents. The robustness of this approach stems from its capacity to proactively constrain agent actions, ensuring alignment with pre-defined protocols and minimizing the potential for unintended or hazardous outcomes in dynamic environments.

A key advancement in multi-agent system dependability lies in prioritizing agents demonstrating high calibration and consistency. Rather than relying on simple averaging of individual agent outputs, this approach selectively emphasizes contributions from those agents proven most reliable in their predictions. Experimental results demonstrate a substantial improvement in performance, achieving a Mean Absolute Error (MAE) of 0.025 – a significant reduction compared to the 0.132 MAE observed when utilizing raw averaging techniques. This focused prioritization not only minimizes prediction errors but also fosters increased trustworthiness in the system’s collective decision-making process, offering a pathway towards safer and more predictable multi-agent orchestration in complex environments.
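The difference between raw averaging and trust-weighted aggregation is easy to see on a toy example. The sketch below uses a plain weighted mean, which is simpler than the neural consensus mechanism the paper describes; the sensor readings are invented, and the trust values merely echo the approximate figures quoted in the caption near the top of this article. It does not reproduce the reported MAE numbers.

```python
def raw_average(readings):
    return sum(readings) / len(readings)


def trust_weighted_average(readings, trust):
    """Weighted mean of readings, with each agent weighted by its trust value."""
    total_trust = sum(trust)
    if total_trust == 0:
        raise ValueError("at least one agent must have non-zero trust")
    return sum(t * r for t, r in zip(trust, readings)) / total_trust


# Hypothetical data: three healthy sensors and one faulty one.
ground_truth = 0.50
readings = [0.50, 0.52, 0.48, 0.95]
trust = [0.94, 0.94, 0.94, 0.08]

raw_error = abs(raw_average(readings) - ground_truth)
weighted_error = abs(trust_weighted_average(readings, trust) - ground_truth)
print(raw_error, weighted_error)
```

With these numbers the faulty sensor pulls the raw average well away from the true value, while the weighted estimate stays close to it because the faulty reading carries little weight.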

The pursuit of semantic understanding in multi-agent systems, as detailed in this work, feels predictably optimistic. It’s a lovely notion – building systems that understand their own reasoning – but one repeatedly battered by production realities. Donald Davies famously observed, “It is not the complexity of the system that is the problem, but the complexity of managing it.” This rings true. Combining modal logic with differentiable neural networks offers a fascinating approach to semantic debugging, yet the inherent messiness of real-world deployments will inevitably introduce unforeseen complexities. The elegance of the framework will, in time, be shadowed by the sheer effort of keeping it operational, a familiar pattern in this field.

What Breaks Down From Here?

The promise of embedding formal logic within differentiable systems is, predictably, proving more brittle than advertised. This work attempts to constrain the inevitable drift of neural networks with the rigid structure of modal logic, hoping to achieve something resembling semantic transparency in multi-agent systems. The immediate challenge isn’t the technique itself, but the scaling. Current demonstrations operate on toy problems, and the moment these models encounter the delightful messiness of production data, the carefully crafted logical constraints will begin to…bend. The bug tracker will, inevitably, fill with reports of subtly violated axioms.

The real question isn’t whether this approach can work, but whether it offers a practical advantage over simply throwing more parameters at the problem. The benefits of “semantic debugging” are largely theoretical until they translate into measurable reductions in deployment failures, and history suggests that elegantly designed systems are often just more interesting ways to fail. The focus will likely shift towards techniques for automatically repairing these logical violations, essentially building a self-healing symbolic layer on top of a fundamentally opaque neural substrate.

The long game isn’t about achieving true AI, of course. It’s about building more sophisticated tools for managing complexity and, ultimately, for documenting the inevitable postmortems. The system doesn’t learn; it accumulates evidence of its own limitations. It doesn’t deploy; it lets go.

Original article: https://arxiv.org/pdf/2602.12083.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-14 14:55