Author: Denis Avetisyan

A new framework combines the strengths of multi-agent systems and iterative refinement to dramatically improve the ability of AI to solve complex problems.

![The PRIME framework establishes a system where reasoning steps are continuously vetted for consistency, with a coordinating mechanism managing iterative refinement through a state-based backtracking process informed by Group Relative Policy Optimization (GRPO), acknowledging that even robust systems require ongoing recalibration to maintain integrity over time.](https://arxiv.org/html/2602.11170v1/figures/fig9_algorithm1.png)

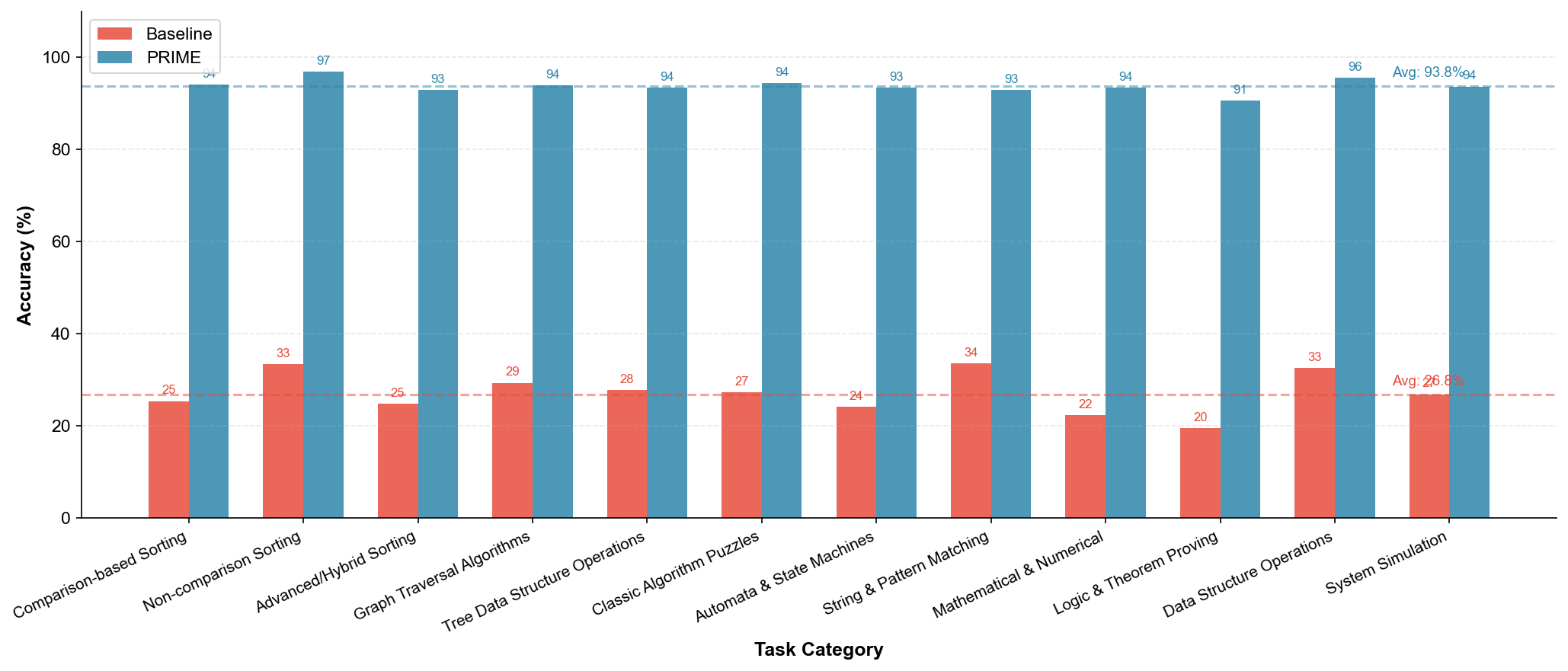

PRIME, a policy-reinforced iterative multi-agent execution framework, achieves state-of-the-art performance on algorithmic reasoning benchmarks.

Despite remarkable progress in diverse reasoning tasks, large language models continue to struggle with systematic algorithmic reasoning. To address this limitation, we introduce PRIME (Policy-Reinforced Iterative Multi-agent Execution), a framework leveraging structured prompting, a multi-agent architecture, and iterative refinement guided by reinforcement learning. PRIME achieves state-of-the-art performance on a comprehensive new benchmark, PRIME-Bench, demonstrating a significant accuracy boost, from 26.8% to 93.8%, across tasks such as Turing machine simulation and long division. Could this approach unlock more robust and reliable algorithmic capabilities in large language models, paving the way for their application in domains requiring rigorous logical deduction?

The Inevitable Stumble: Algorithmic Reasoning and Large Language Models

Despite demonstrated proficiency in areas like natural language processing and text generation, Large Language Models consistently encounter difficulties when tasked with robust algorithmic reasoning. These models, trained on vast datasets of text, often struggle with problems demanding systematic, step-by-step deduction, a process fundamentally different from pattern recognition within language. The limitation isn’t a lack of knowledge, but rather an inability to consistently apply knowledge in a logically sequenced manner, hindering performance on tasks such as mathematical proofs, code debugging, or even following complex instructions with multiple conditional steps. This suggests that while LLMs can effectively mimic and extrapolate from existing data, they lack the inherent capacity for the precise, controlled execution required for reliable algorithmic problem-solving.

Current language models often falter when confronted with algorithms, not due to a lack of knowledge, but a deficiency in methodical execution. These models typically demonstrate proficiency in recognizing patterns and recalling information, yet struggle to consistently apply a sequence of precise steps necessary for solving complex problems. The inherent probabilistic nature of their design means they can generate plausible, but ultimately incorrect, solutions, especially when the task demands unwavering adherence to logical deduction. Unlike a computer program which executes instructions deterministically, these models may introduce errors during multi-step reasoning, leading to unreliable outcomes even for relatively simple algorithmic challenges. This limitation highlights a fundamental difference between statistical language processing and the precise, symbolic manipulation required for robust algorithmic reasoning.
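A back-of-the-envelope calculation shows why small per-step error rates matter. Assume, purely for illustration, that each step of an $n$-step derivation is correct independently with probability $p$ (real errors are rarely independent). The chance that the whole chain is correct is then:

$$
P(\text{all } n \text{ steps correct}) = p^{n},
\qquad
p = 0.99,\; n = 100 \;\Rightarrow\; 0.99^{100} \approx 0.366
$$

Even 99% per-step reliability leaves only about a 37% chance of an error-free 100-step trace, which is why checking each intermediate step, rather than only the final answer, is attractive.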

Addressing the current limitations in algorithmic reasoning demands a shift towards innovative neural network designs. Researchers are actively exploring architectures that move beyond simple pattern recognition, focusing instead on systems capable of explicitly representing and manipulating symbolic information. These novel approaches include integrating LLMs with external tools – such as symbolic solvers or program interpreters – to offload complex computations and verify results. Furthermore, investigations into architectures that mimic human cognitive processes, like iterative refinement and hypothesis testing, promise to enhance LLMs’ ability to systematically approach and solve multi-step algorithmic problems. Ultimately, unlocking the full potential of these models for tasks demanding precise logical deduction hinges on developing architectures that prioritize not just fluency, but also verifiable correctness and robust reasoning capabilities.

PRIME: A Framework for Deliberate Computation

PRIME employs a Multi-Agent Architecture consisting of two primary components: an agent and a verifier. The agent autonomously generates a sequence of algorithmic steps intended to solve a given problem. These steps are then submitted to the verifier, which assesses their correctness based on predefined criteria. If the verifier identifies errors, it provides feedback to the agent. This feedback loop enables iterative refinement of the generated steps; the agent uses the verifier’s assessment to adjust its approach and produce more accurate solutions in subsequent iterations. This architecture distinguishes itself from single-agent systems by explicitly incorporating a validation stage, enhancing the reliability and correctness of the problem-solving process.
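The generate, verify, and refine cycle can be sketched as a small loop. This is a minimal illustration under stated assumptions, not PRIME’s implementation: `refine`, `Verdict`, `toy_agent`, and `toy_verifier` are hypothetical names, the "agent" is a deterministic stub rather than an LLM, and the toy task (sorting a list, with the verifier pointing at the first out-of-order pair) stands in for a real algorithmic problem.

```python
from dataclasses import dataclass
from typing import Any, Callable, List, Optional, Tuple

@dataclass
class Verdict:
    ok: bool
    feedback: Any = None  # what the verifier found wrong (None if accepted or no hint)

def refine(problem: Any,
           agent: Callable[[Any, Any], Any],
           verifier: Callable[[Any, Any], Verdict],
           max_rounds: int = 10) -> Tuple[Optional[Any], List[Tuple[Any, bool]]]:
    """Generate, verify, and feed back until the verifier accepts or rounds run out."""
    feedback = None
    history: List[Tuple[Any, bool]] = []
    for _ in range(max_rounds):
        candidate = agent(problem, feedback)
        verdict = verifier(problem, candidate)
        history.append((candidate, verdict.ok))
        if verdict.ok:
            return candidate, history
        feedback = verdict.feedback
    return None, history  # budget exhausted without an accepted answer

# Hypothetical toy stand-ins: the "problem" is a list to sort.
def toy_agent(problem, feedback):
    if feedback is None:
        return list(problem)  # first attempt (or restart): echo the input
    previous, i = feedback
    fixed = list(previous)
    fixed[i], fixed[i + 1] = fixed[i + 1], fixed[i]  # repair the flagged step
    return fixed

def toy_verifier(problem, candidate):
    if sorted(candidate) != sorted(problem):
        return Verdict(False)  # wrong elements: no hint, so the agent restarts
    for i in range(len(candidate) - 1):
        if candidate[i] > candidate[i + 1]:
            return Verdict(False, (candidate, i))  # point at the first inversion
    return Verdict(True)

result, history = refine([3, 1, 2], toy_agent, toy_verifier)
print(result)  # [1, 2, 3]
```

On `[3, 1, 2]` the loop takes three rounds: `[3, 1, 2]` and `[1, 3, 2]` are rejected with feedback, and `[1, 2, 3]` is accepted. Because each repair removes exactly one inversion, this toy loop always makes progress; an LLM-driven agent offers no such guarantee, which is why `max_rounds` caps the budget.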

The PRIME framework utilizes Policy-Reinforcement Learning (PRL) to facilitate adaptive performance within its multi-agent system. Both the agent, responsible for generating algorithmic steps, and the verifier, tasked with correctness validation, are trained using PRL techniques. This allows each component to learn an optimal policy for its respective function through iterative interaction and feedback. The agent receives rewards based on the verifier’s assessment of its generated steps, while the verifier is rewarded for accurate validation. Through this continuous learning loop, both agent and verifier refine their policies, improving the overall accuracy and efficiency of the PRIME framework in solving complex tasks. The policies are represented as probability distributions over actions, adjusted based on received rewards to maximize cumulative performance.
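The opening figure caption names Group Relative Policy Optimization (GRPO) as the reinforcement-learning signal. GRPO’s core idea is to sample a group of outputs for the same prompt and score each one relative to its siblings, so no learned value function is needed. The sketch below computes those group-relative advantages and the PPO-style clipped term they feed into. It is a simplification under stated assumptions: rewards are taken to be binary verifier verdicts (1.0 = accepted), the KL penalty of the full objective is omitted, and the function names are hypothetical.

```python
from statistics import fmean, pstdev
from typing import List

def group_relative_advantages(rewards: List[float], eps: float = 1e-8) -> List[float]:
    """Normalise each sample's reward against the mean and std of its own group."""
    mu = fmean(rewards)
    sigma = pstdev(rewards)  # population std over the group
    return [(r - mu) / (sigma + eps) for r in rewards]

def clipped_term(ratio: float, advantage: float, clip_eps: float = 0.2) -> float:
    """PPO-style clipped surrogate for one sample; ratio = pi_new(a) / pi_old(a)."""
    clipped = min(max(ratio, 1.0 - clip_eps), 1.0 + clip_eps)
    return min(ratio * advantage, clipped * advantage)

# Assumed binary rewards: the verifier accepted samples 0 and 2 out of a group of four.
advantages = group_relative_advantages([1.0, 0.0, 1.0, 0.0])  # roughly [1, -1, 1, -1]
```

If every sample in a group receives the same reward, all advantages are zero and the group contributes no gradient, so this scheme learns only from groups that mix accepted and rejected attempts.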

Structured prompting within the PRIME framework utilizes a defined methodology to direct the Large Language Model (LLM) through complex tasks. This approach decomposes problems into discrete steps, each accompanied by specific instructions and relevant contextual information provided within the prompt. By clearly outlining expected outputs and providing necessary data for each stage, structured prompting minimizes ambiguity and reduces the likelihood of errors. This method improves both the accuracy of the LLM’s responses and the efficiency with which it processes information, as the LLM is guided toward correct solutions rather than relying on open-ended generation.
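A structured prompt of this kind can be assembled from a fixed template. In this sketch the field names, section labels, and the Turing-machine example state are all hypothetical; PRIME’s actual prompt wording is not given in this article.

```python
from dataclasses import dataclass
from typing import Dict

@dataclass
class StepSpec:
    instruction: str    # what the model must do at this step
    output_format: str  # exact shape the answer must take

def build_step_prompt(task: str, state: Dict[str, str], step: StepSpec,
                      index: int, total: int) -> str:
    # Render the current state as a sorted bullet list so prompts are reproducible.
    context = "\n".join(f"- {key}: {value}" for key, value in sorted(state.items()))
    return (
        f"TASK: {task}\n"
        f"STEP {index} OF {total}: {step.instruction}\n"
        f"CURRENT STATE:\n{context}\n"
        f"RESPOND ONLY IN THIS FORMAT: {step.output_format}"
    )

prompt = build_step_prompt(
    task="Simulate the Turing machine for one transition.",
    state={"tape": "1011", "head": "2", "state": "q0"},
    step=StepSpec(
        instruction="Read the symbol under the head and apply the matching rule.",
        output_format="state=<q>; write=<0|1>; move=<L|R>",
    ),
    index=1,
    total=1,
)
print(prompt)
```

Pinning the output format is the important design choice: a reply that must match a fixed shape can be parsed and checked mechanically by a verifier.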

![PRIME efficiently explores a decision tree by leveraging a state stack to prune invalid reasoning paths (red dashed paths) identified by a verifier, allowing it to backtrack to the last valid node and continue the search, a process distinct from standard Chain-of-Thought methods.](https://arxiv.org/html/2602.11170v1/figures/fig12_prune_branch.png)
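The state-stack search in this caption can be sketched as a depth-first search in which a verifier prunes candidate steps. The names (`verified_search`, `propose`, `verify`, `is_goal`) are hypothetical, and the toy problem (reach 7 from 0 using steps of +3 or +2, with the verifier rejecting any state above 7) stands in for real reasoning states; the paper’s actual procedure may differ in detail.

```python
from typing import Callable, Iterable, List, Optional, TypeVar

S = TypeVar("S")
_EXHAUSTED = object()  # sentinel: a stack frame has no untried candidates left

def verified_search(
    initial: S,
    propose: Callable[[S], Iterable[S]],
    verify: Callable[[S], bool],
    is_goal: Callable[[S], bool],
    max_expansions: int = 1000,
) -> Optional[List[S]]:
    if is_goal(initial):
        return [initial]
    # Each stack frame is (valid path so far, iterator over untried next steps).
    stack = [([initial], iter(propose(initial)))]
    expansions = 0
    while stack and expansions < max_expansions:
        path, candidates = stack[-1]
        nxt = next(candidates, _EXHAUSTED)
        if nxt is _EXHAUSTED:
            stack.pop()  # dead end: backtrack to the last valid node
            continue
        expansions += 1
        if not verify(nxt):
            continue  # verifier pruned this branch; try the next candidate
        new_path = path + [nxt]
        if is_goal(nxt):
            return new_path
        stack.append((new_path, iter(propose(nxt))))
    return None  # search space or budget exhausted

path = verified_search(
    0,
    propose=lambda s: [s + 3, s + 2],
    verify=lambda s: s <= 7,
    is_goal=lambda s: s == 7,
)
print(path)  # [0, 3, 5, 7]
```

The search first follows 0 → 3 → 6, where the verifier rejects both successors (9 and 8); the frame for 6 is popped, and the search resumes from 3 with its remaining candidate, 5, which leads to the goal.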

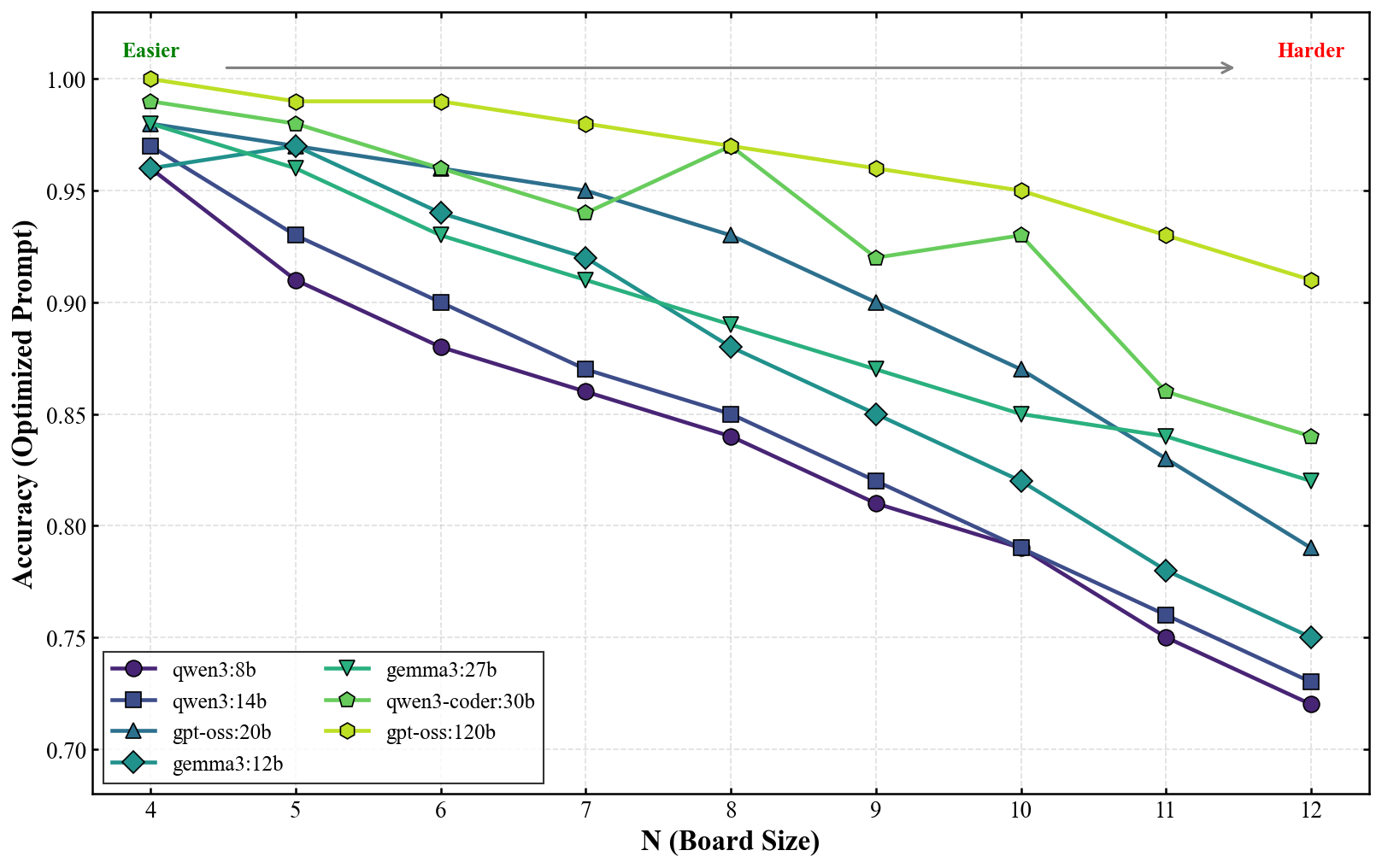

PRIME Benchmarking and Validation: Measuring Robustness

PRIME-Bench serves as the primary evaluation benchmark for the PRIME framework, consisting of 86 distinct algorithmic tasks designed to assess performance across a broad spectrum of computational challenges. The tasks include foundational categories such as sorting algorithms (variations like quicksort and merge sort), searching algorithms (including binary search and depth-first search), and graph algorithms (such as Dijkstra’s algorithm and minimum spanning tree computations). This diversity tests general problem-solving proficiency rather than performance on a single algorithm type, and the benchmark’s composition allows granular analysis of PRIME’s strengths and weaknesses across different algorithmic paradigms.
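Granular analysis of this kind usually means reporting accuracy per category alongside an overall figure. The sketch assumes hypothetical result records of the form `(category, solved)`; it does not reproduce PRIME-Bench data, and the paper may aggregate its scores differently.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

def accuracy_report(results: Iterable[Tuple[str, bool]]) -> Tuple[Dict[str, float], float, float]:
    """Return (per-category accuracy, micro average, macro average). Needs at least one result."""
    totals: Dict[str, int] = defaultdict(int)
    correct: Dict[str, int] = defaultdict(int)
    for category, solved in results:
        totals[category] += 1
        correct[category] += int(solved)
    per_category = {c: correct[c] / totals[c] for c in totals}
    micro = sum(correct.values()) / sum(totals.values())  # every task weighs the same
    macro = sum(per_category.values()) / len(per_category)  # every category weighs the same
    return per_category, micro, macro

per_category, micro, macro = accuracy_report([
    ("sorting", True), ("sorting", False), ("graph", True),
])
print(per_category)  # {'sorting': 0.5, 'graph': 1.0}
```

When categories contain different numbers of tasks, the micro and macro averages diverge (here 2/3 versus 0.75), so it matters which one a headline accuracy figure refers to.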

Evaluations on PRIME-Bench indicate substantial gains over baseline models, with accuracy rising from 26.8% to 93.8%. The improvements hold across diverse problem types and scales, with metrics such as solution accuracy, computational efficiency, and scalability consistently exceeding those of the compared models, a clear advance in algorithmic problem-solving capability.

Iterative refinement, as implemented within the PRIME framework, repeatedly evaluates and revises candidate solutions to algorithmic tasks. The system generates an initial solution, checks its correctness, and then modifies it based on the errors identified. This improves accuracy, because repeated adjustments converge on correct solutions, and robustness, because early inaccuracies or incomplete information need not doom the final answer. The same mechanism provides error recovery: the system can correct mistakes partway through a solution, which lets it solve problems that a single pass of generation gets wrong.
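Error recovery is easiest to see on a task with checkable intermediate steps, such as long division, one of the PRIME-Bench tasks. In this hypothetical sketch each step records a `(quotient_digit, remainder)` pair; the verifier recomputes every step and reports the first wrong one, and recovery keeps the verified prefix and redoes only the remainder of the trace. The function names and trace format are assumptions made for illustration.

```python
from typing import List, Optional, Tuple

Step = Tuple[int, int]  # (quotient digit, remainder after this step)

def division_step(remainder: int, digit: int, divisor: int) -> Step:
    """One schoolbook step: bring down `digit`, divide, keep the remainder."""
    current = remainder * 10 + digit
    return current // divisor, current % divisor

def solve(dividend: int, divisor: int, prefix: Optional[List[Step]] = None) -> List[Step]:
    """Complete the step trace, resuming after an already-verified `prefix`."""
    digits = [int(d) for d in str(dividend)]
    steps = list(prefix or [])
    remainder = steps[-1][1] if steps else 0
    for digit in digits[len(steps):]:
        steps.append(division_step(remainder, digit, divisor))
        remainder = steps[-1][1]
    return steps

def first_bad_step(dividend: int, divisor: int, steps: List[Step]) -> Optional[int]:
    """Verifier: index of the first incorrect step, or None if the trace is valid."""
    digits = [int(d) for d in str(dividend)]
    remainder = 0
    for i, (digit, claimed) in enumerate(zip(digits, steps)):
        if tuple(claimed) != division_step(remainder, digit, divisor):
            return i
        remainder = claimed[1]
    if len(steps) != len(digits):
        return min(len(steps), len(digits))  # trace too short or too long
    return None

def recover(dividend: int, divisor: int, steps: List[Step]) -> List[Step]:
    """Keep the verified prefix and recompute everything after the first error."""
    k = first_bad_step(dividend, divisor, steps)
    return list(steps) if k is None else solve(dividend, divisor, steps[:k])

bad_trace = [(1, 1), (2, 6), (0, 2), (4, 1)]  # step 1 is wrong: 18 // 6 is 3, not 2
print(recover(7825, 6, bad_trace))  # [(1, 1), (3, 0), (0, 2), (4, 1)]
```

The repaired trace reads off quotient digits 1, 3, 0, 4 and a final remainder of 1, consistent with 7825 = 6 × 1304 + 1. Only steps 1 to 3 are recomputed; step 0, already verified, is reused.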

![PRIME consistently outperforms existing methods across twelve task categories, demonstrating substantial gains, particularly in logic/theorem proving (364.6%) and mathematical/numerical reasoning (317.4%).](https://arxiv.org/html/2602.11170v1/exp/fig1_category_comparison.png)
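The percentages in this caption are easiest to read as relative gains. Assuming the figure uses the usual definition of relative improvement (the exact formula is not restated in this article), the headline PRIME-Bench numbers work out as follows:

$$
\Delta_{\text{abs}} = 93.8\% - 26.8\% = 67.0 \text{ percentage points},
\qquad
\Delta_{\text{rel}} = \frac{93.8 - 26.8}{26.8} \times 100\% = 250\%
$$

Under the same definition, a 364.6% gain means the category score is $1 + 3.646 = 4.646$ times the baseline score. Since accuracy cannot exceed 100%, such a gain implies the baseline in that category was at most about $100\% / 4.646 \approx 21.5\%$.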

The Trajectory of Intelligence: Implications and Future Directions

The emergence of PRIME marks a substantial step forward in the capabilities of large language models, particularly in domains demanding complex algorithmic reasoning. The system does not merely process information; it actively generates and refines solutions to intricate problems, something previously out of reach for LLMs. This makes applications such as automated code generation, where PRIME-like systems could construct functional software, and program synthesis, the creation of novel algorithms, more plausible. More speculatively, the same approach could extend to robotic control, pointing toward robots that learn and adapt to complex tasks through language-based instruction and algorithmic refinement, bridging the gap between human intention and machine execution.

PRIME distinguishes itself through a synergistic blend of supervised and reinforcement learning techniques, fostering a system uniquely capable of sustained progress. Initially, the model benefits from supervised learning, absorbing foundational knowledge and establishing a baseline level of performance. This is then dynamically enhanced by reinforcement learning, allowing PRIME to refine its approach through trial and error, optimizing solutions based on received feedback. This combined strategy doesn’t just achieve high initial accuracy; it enables continuous improvement as the model encounters new data and challenges, adapting its internal parameters to consistently elevate its problem-solving capabilities. The result is a flexible architecture that transcends the limitations of either learning method in isolation, offering a robust path towards increasingly sophisticated algorithmic mastery.

The trajectory of PRIME’s development centers on scaling its algorithmic capacity to address increasingly intricate computational challenges. Researchers intend to move beyond current benchmarks by incorporating more sophisticated problem-solving techniques and expanding the range of algorithms the system can effectively master. This progression isn’t purely academic; a significant focus lies in translating PRIME’s capabilities into tangible real-world applications, spanning fields like logistical optimization, resource management, and even scientific discovery. The ultimate aim is to create a broadly adaptable system capable of tackling previously intractable problems, thereby pushing the boundaries of what’s achievable with artificial intelligence and automated reasoning.

The pursuit of algorithmic reasoning, as demonstrated by PRIME, echoes a fundamental truth about complex systems. The framework’s iterative refinement, its capacity to build upon successive approximations, aligns with the inevitable decay all architectures experience. As a line often attributed to the historian Daniel J. Boorstin puts it, “The greatest enemy of knowledge is not ignorance, it is the illusion of knowledge.” PRIME doesn’t presume complete solutions; instead, it embraces a process of continual adjustment, acknowledging that even the most sophisticated large language models operate within a landscape of inherent uncertainty. The multi-agent system, by design, accepts that improvements age faster than one can fully understand them, creating a dynamic and resilient approach to problem-solving.

What Lies Ahead?

The pursuit of algorithmic reasoning in large language models, as exemplified by frameworks like PRIME, feels less like invention and more like a prolonged negotiation with entropy. Each refinement, each iterative loop, merely delays the inevitable decay of coherence. The current emphasis on multi-agent systems, while demonstrably effective, introduces new vectors for systemic failure – a proliferation of potential fault lines within the architecture. Uptime, in this context, is not a destination, but a rare phase of temporal harmony before the inevitable cascade of errors.

The benchmark results, however impressive, are artifacts of a specific moment. The true test will lie in the system’s resilience to novel problems, to inputs that fall outside the curated datasets. A crucial, largely unaddressed challenge is the question of ‘forgetting’ – the gradual erosion of previously learned skills as models are refined and retrained. Technical debt, in this domain, is not measured in lines of code, but in the increasing computational cost of maintaining consistency.

Future work will likely focus on strategies for mitigating this decay, perhaps through continual learning architectures or more robust methods for knowledge consolidation. But the fundamental truth remains: systems are not built to last. The objective, then, is not to achieve perpetual intelligence, but to engineer graceful degradation: to extend the period of meaningful function before the inevitable return to baseline noise.

Original article: https://arxiv.org/pdf/2602.11170.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-15 01:10