Author: Denis Avetisyan

A new review synthesizes psychological insights into human intention, offering a framework to advance collaborative robotics and improve how machines understand our goals.

This paper presents a taxonomy of human intention and explores its applications in human-robot interaction, focusing on shared intention, communication, and task planning.

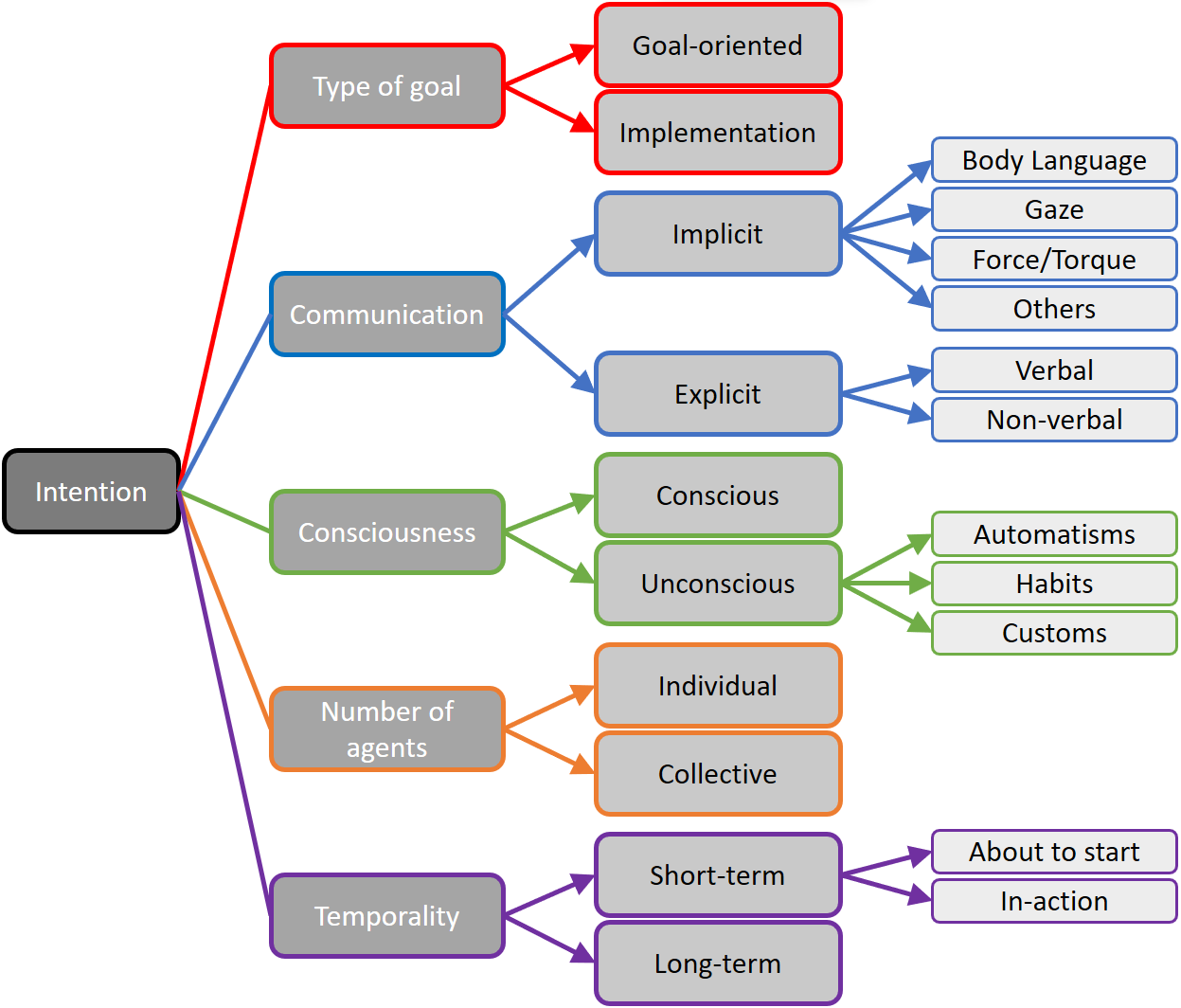

Despite advancements in robotics, a comprehensive understanding of human intention remains a significant challenge, often conflated with task goals alone. This paper, ‘The human intention. A taxonomy attempt and its applications to robotics’, addresses this gap by proposing a nuanced psychological framework for classifying intention types, drawing on insights from communication and cognitive science. The resulting taxonomy provides a valuable lens for interpreting human behavior in collaborative scenarios, and is demonstrated through analyses of use cases like search and object transport. Ultimately, how can a more complete consideration of human intention unlock truly intuitive and effective human-robot partnerships?

The Evolving Tapestry of Intention

The development of robots capable of genuinely interacting with humans hinges on accurately predicting what people will do – effectively, modeling human intention. However, this proves remarkably difficult, as human actions are rarely straightforward and are often driven by complex, hidden motivations. Current robotic systems typically rely on pre-programmed responses or limited environmental awareness, leaving them ill-equipped to handle the inherent ambiguity of human behavior. A robot that misinterprets a person’s intentions – for instance, mistaking a reaching motion for an aggressive act – can create frustrating, or even dangerous, situations. Consequently, researchers are increasingly focused on creating more sophisticated models that account for the multifaceted nature of intention, moving beyond simple stimulus-response mechanisms to incorporate contextual understanding and predictive capabilities – a crucial step toward seamless and safe human-robot collaboration.

Human intention isn’t a single, easily defined impulse, but rather a layered process unfolding across varying durations. A person’s immediate actions – reaching for a tool, uttering a phrase – are often driven by short-term intentions, quickly formulated and executed. However, these actions are frequently embedded within, and shaped by, more enduring, long-term goals, such as completing a project, maintaining a relationship, or achieving personal growth. This interplay between immediate and extended timescales creates a complex tapestry of motivation; a seemingly simple act can be understood only when considered in the context of these broader, temporally distributed intentions. Recognizing this multi-timescale nature is crucial, as predicting behavior requires discerning not just what someone is doing, but why, and at what level of abstraction that ‘why’ operates.

A comprehensive synthesis of psychological research underscores the critical importance of discerning both conscious and unconscious human intentions for predicting behavior. This review reveals that individuals often act on motivations outside of their immediate awareness – implicit goals and ingrained habits significantly shape actions alongside explicitly stated desires. Accurately anticipating human needs, therefore, necessitates moving beyond readily observable cues and delving into the complex interplay of these cognitive layers. Recognizing unconscious intentions allows for more robust models of human behavior, ultimately enabling more effective and intuitive interactions, particularly within the field of Human-Robot Interaction, where preemptive responsiveness is paramount.

Decoding Action: Methods for Interpreting Intent

Robot intention detection necessitates the translation of observed human actions into estimations of underlying goals. This process relies on identifying behavioral signals – including kinematics, physiology, and verbal cues – and associating them with specific intentions. Current methods employ a range of techniques, from Hidden Markov Models and Bayesian Networks to more recent approaches leveraging machine learning and deep neural networks. Effective interpretation requires addressing the inherent ambiguity in human behavior; a single action can serve multiple purposes, necessitating probabilistic models and contextual analysis to refine intention estimates. Furthermore, the reliability of these systems is contingent upon the quality and quantity of training data used to establish the correlation between observed behaviors and inferred goals.
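The probabilistic refinement described above can be illustrated with a minimal Bayesian update. This is a sketch under stated assumptions, not the method of the reviewed paper: the intention labels, the cue likelihoods, and the function name `update_intention_belief` are all hypothetical, and the cues are treated as conditionally independent given the intention.

```python
from typing import Dict


def update_intention_belief(
    prior: Dict[str, float],
    likelihood: Dict[str, float],
) -> Dict[str, float]:
    """Apply Bayes' rule: posterior(i) is proportional to prior(i) * P(cue | i)."""
    unnormalized = {i: prior[i] * likelihood.get(i, 0.0) for i in prior}
    total = sum(unnormalized.values())
    if total == 0.0:
        # The cue is impossible under every candidate intention;
        # keep the prior rather than divide by zero.
        return dict(prior)
    return {i: p / total for i, p in unnormalized.items()}


# Hypothetical example: is the person handing an object over or putting it down?
belief = {"hand_over": 0.5, "put_down": 0.5}

# Assumed likelihoods P(cue | intention) for two observed cues (illustrative values).
reach_toward_robot = {"hand_over": 0.8, "put_down": 0.2}
gaze_at_robot = {"hand_over": 0.7, "put_down": 0.3}

for cue in (reach_toward_robot, gaze_at_robot):
    belief = update_intention_belief(belief, cue)
```

With these made-up numbers, two ambiguous cues that each only weakly favor a handover combine to a belief of roughly 0.90 in `hand_over`. This is the sense in which contextual evidence refines an intention estimate.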

Robot intention detection algorithms are fundamentally informed by psychological research into the cognitive processes underlying human action. Specifically, studies of goal-directed behavior, action planning, and theory of mind provide models for interpreting observable cues – including kinematics, gaze, and physiological signals – as indicators of an individual’s intentions. These psychological frameworks enable the development of computational models that predict future actions based on observed behavior, and allow for probabilistic inference regarding an actor’s goals. The review describes how concepts such as appraisal theory and Bayesian belief networks are applied to build intention detection systems, and suggests that their performance depends on the fidelity of the underlying psychological models.

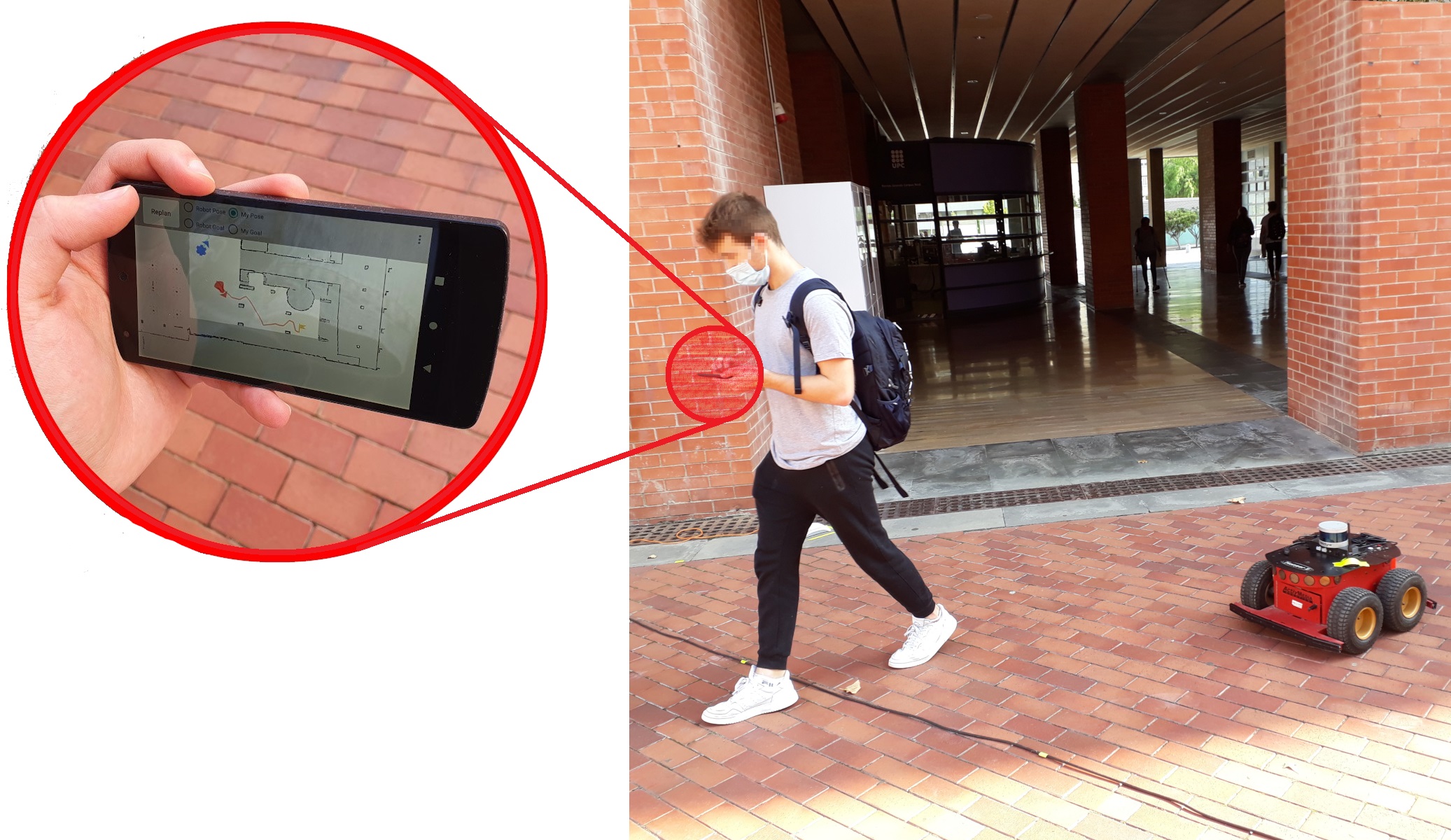

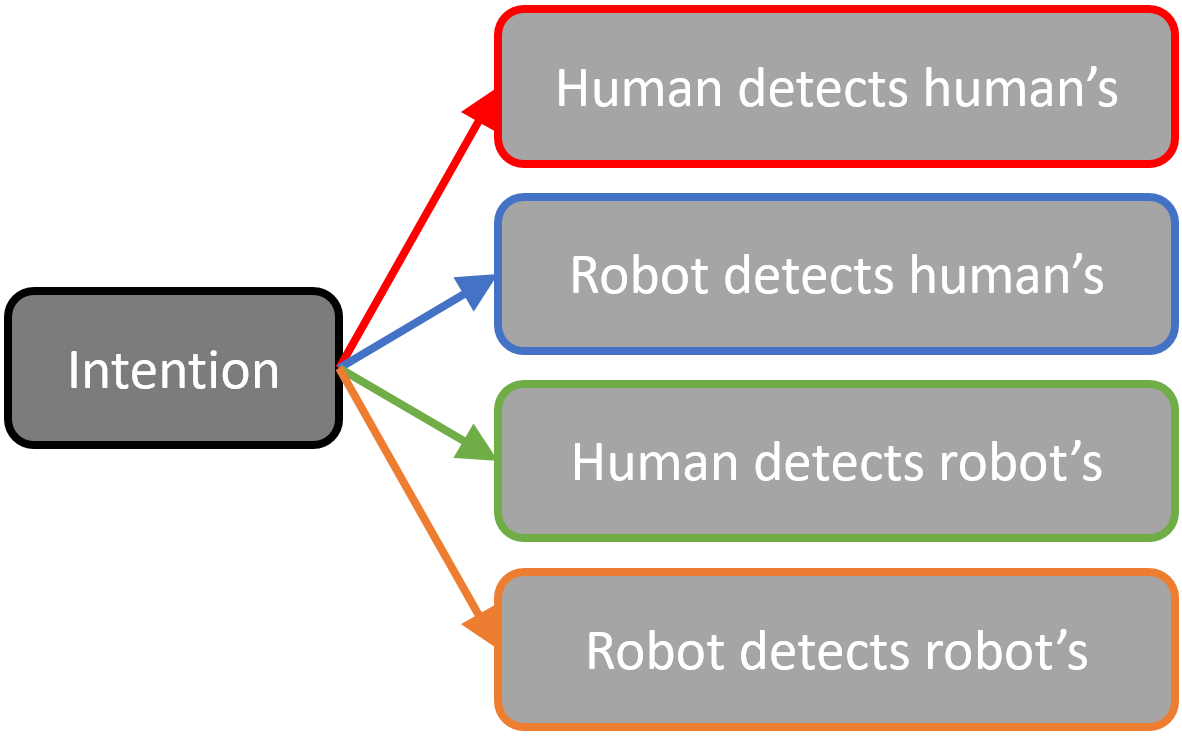

Successful execution of collaborative tasks, such as Collaborative Search and Collaborative Transport, is directly dependent on the ability of agents – robotic or otherwise – to accurately detect human intentions. In Collaborative Search, anticipating a human partner’s focus allows for efficient division of labor and reduces redundant effort in locating target objects. Similarly, in Collaborative Transport, predicting a human’s desired trajectory or intended handover point is critical for seamless object transfer and avoidance of collisions. These scenarios necessitate a shared understanding of goals and predicted actions; failures in intention detection result in decreased efficiency, increased cognitive load for the human participant, and potentially unsafe operational conditions.
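As a toy illustration of the division of labor in Collaborative Search, the sketch below sends the robot to the region the human is least likely to search next. The region names, the probabilities, and the function `pick_robot_region` are hypothetical; a real system would obtain the focus estimate from an intention detector and would also weigh travel cost and safety.

```python
from typing import Dict


def pick_robot_region(human_focus: Dict[str, float]) -> str:
    """Return the region the human is least likely to search next."""
    if not human_focus:
        raise ValueError("need at least one candidate region")
    # min() over the keys, ranked by the predicted probability of human attention.
    return min(human_focus, key=human_focus.get)


# Assumed output of an intention detector: probability that the human searches each region.
predicted_focus = {"shelf": 0.6, "table": 0.3, "floor": 0.1}
robot_region = pick_robot_region(predicted_focus)
```

Here the robot is assigned the floor, leaving the shelf and table to the human and avoiding redundant effort.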

Closing the Loop: Expressing Robotic Intent

Robot Intention Expression involves the conveyance of a robot’s objectives and planned actions to human collaborators. This communication is typically achieved through various modalities, including verbal statements, graphical displays of planned paths, or predictive visualizations of future actions. The level of detail communicated can vary based on the task complexity and the human partner’s expertise, ranging from high-level goals – such as “retrieve the object” – to precise kinematic trajectories. Effective intention expression requires the robot to model the human’s knowledge and adapt its communication strategy accordingly, ensuring the information provided is relevant, understandable, and timely for seamless collaboration.

Effective Human-Robot Interaction (HRI) fundamentally relies on a reciprocal exchange of intentions, where both the human and the robot clearly articulate their goals and anticipated actions. This bidirectional communication mitigates the potential for misunderstandings that can arise from differing interpretations of observed behaviors. Specifically, when a robot communicates its intentions – such as planned movements or task objectives – humans can better anticipate its actions, enabling more coordinated and efficient collaboration. Conversely, the robot’s ability to interpret human intentions allows it to adapt its behavior and avoid actions that conflict with human goals. This consistent and transparent exchange fosters trust between the human and the robot, a crucial factor for sustained and effective teamwork, particularly in complex or safety-critical environments.

Cybernetic Avatars, where a human operator directly controls a robotic representation, provide a demonstrable model for enhanced collaborative performance through explicit intention communication. Studies utilizing these avatars have shown that when the avatar’s intended actions are clearly signaled – for example, through visual cues indicating planned trajectory or grasping points – human partners exhibit significantly reduced reaction times and improved task completion rates. This is attributed to the preemptive understanding of the robot’s goals, allowing for smoother coordination and minimizing the need for reactive adjustments. Furthermore, the clarity of communicated intention fosters increased trust in the robotic partner, as human collaborators can more accurately predict its behavior and anticipate its needs, leading to more efficient and reliable teamwork.

The Spectrum of Intent: From Implicit to Explicit

Human intention isn’t simply declared; it unfolds along a continuum, beginning with deliberate, verbalized goals and extending to subtle, nonverbal signals embedded in behavior. Individuals frequently articulate intentions directly, providing clear directives for others to follow. However, a substantial portion of communicative intent remains unstated, conveyed instead through actions like gaze direction, body posture, or even the selection of specific tools. These implicit cues, while often less consciously processed, are critical for effective social interaction, allowing individuals to anticipate needs and coordinate activities without exhaustive explanation. Recognizing this spectrum – from the explicit statement of a desired outcome to the implicit reveal of an unfolding plan – is fundamental to understanding how humans communicate and collaborate, providing a richer and more nuanced perspective than focusing solely on declared objectives.

The success of future Human-Robot Interaction hinges on a system’s capacity to decipher not only what a person says they intend, but also what they imply through actions and behaviors. Robust interaction requires moving beyond simple command-response protocols; a truly adaptable robot must interpret explicit intention – directly communicated goals – alongside implicit intention, gleaned from subtle cues like gaze direction, body posture, or even preparatory movements. Failing to recognize these unspoken signals can lead to misinterpretations, frustrating interactions, and potentially unsafe scenarios. Consequently, researchers are increasingly focused on developing algorithms and sensor systems capable of bridging this gap, allowing robots to anticipate needs, offer proactive assistance, and ultimately, collaborate with humans in a more intuitive and effective manner.

A nuanced understanding of human action necessitates recognizing not just what someone intends to achieve – their goal – but also how they plan to achieve it, a concept known as implementation intention. This detailed specification of the steps required to reach a goal offers critical predictive power; by outlining the anticipated sequence of actions, it allows systems to proactively support, rather than reactively respond to, human behavior. This review synthesizes existing research demonstrating the benefits of incorporating implementation intention into models of human action, revealing its potential to dramatically improve the responsiveness and adaptability of Human-Robot Interaction. While this work doesn’t introduce new quantitative data, it highlights the importance of this often-overlooked facet of intention for building truly collaborative and intuitive systems.
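One way to picture how an implementation intention supports prediction is as a goal paired with an ordered list of steps, from which the next expected step can be read off. The class `ImplementationIntention`, its fields, and the example task are hypothetical illustrations, not a representation proposed in the reviewed paper.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class ImplementationIntention:
    goal: str          # what the person wants to achieve
    steps: List[str]   # how they plan to achieve it, in order

    def next_step(self, observed: List[str]) -> Optional[str]:
        """Return the anticipated next step.

        Returns None when the plan is complete or when the observed
        actions no longer match the start of the plan.
        """
        if observed != self.steps[:len(observed)]:
            return None  # behavior diverged from the assumed plan
        if len(observed) == len(self.steps):
            return None  # nothing left to anticipate
        return self.steps[len(observed)]


# Hypothetical plan for a shared assembly task.
plan = ImplementationIntention(
    goal="assemble shelf",
    steps=["pick up board", "align board", "insert screw"],
)
anticipated = plan.next_step(["pick up board"])
```

After observing only the first step, the sketch anticipates `"align board"`, so a robot could prepare to steady the board before being asked. Returning `None` on divergence is a deliberate choice: the system stops predicting rather than guessing once the assumed plan no longer fits.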

The study meticulously dissects the layers of human intention, acknowledging that effective collaboration – be it between humans or with robots – hinges on recognizing not just what is desired, but how that desire is communicated. This resonates with a fundamental principle of system design: as Edsger W. Dijkstra observed, “It’s not enough to have good intentions, you also need to have good tools.” The taxonomy presented isn’t merely an academic exercise; it’s a toolkit for bridging the communication gap, allowing robotic systems to move beyond simple task execution and toward a more nuanced understanding of shared goals. Like erosion slowly reshaping landscapes, misinterpretations of intention accumulate as ‘technical debt’ in collaborative systems, hindering long-term harmony.

The Horizon Recedes

The attempt to categorize human intention, as detailed within, yields not resolution, but a clearer mapping of the problem’s inherent instability. Any taxonomy, however meticulously constructed, becomes a snapshot of a perpetually flowing system. The edges blur – implicit communication rarely remains so, and shared intention is, at best, a temporary alignment of vectors. Uptime is merely temporary; the illusion of seamless collaboration will always be shadowed by the latency inherent in bridging biological and artificial minds.

Future work will inevitably confront the limitations of applying static models to dynamic phenomena. The focus should shift from ‘understanding’ intention – a fundamentally impossible task – to predicting its likely manifestations, accepting that prediction is not comprehension. Robustness in human-robot systems will not arise from perfect interpretation, but from graceful degradation when those interpretations inevitably fail. Systems age; the question is whether they age gracefully.
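Graceful degradation can be sketched as a confidence gate: the robot acts on its best guess only when the estimate is strong enough, and otherwise falls back to a conservative behavior. The threshold value, the fallback action name `wait_and_ask`, and the function `choose_action` are assumptions made for illustration.

```python
from typing import Dict, Tuple


def choose_action(
    belief: Dict[str, float],
    threshold: float = 0.8,
) -> Tuple[str, str]:
    """Act on the most likely intention only if its probability meets the threshold."""
    if not belief:
        # No estimate at all: degrade to the conservative behavior.
        return ("fallback", "wait_and_ask")
    intention, confidence = max(belief.items(), key=lambda kv: kv[1])
    if confidence >= threshold:
        return ("act", intention)
    # Ambiguous estimate: do not commit to a possibly wrong interpretation.
    return ("fallback", "wait_and_ask")


# Hypothetical intention estimates.
confident = choose_action({"hand_over": 0.9, "put_down": 0.1})
ambiguous = choose_action({"hand_over": 0.55, "put_down": 0.45})
```

In the confident case the robot proceeds with the handover. In the ambiguous case it pauses and asks, trading some speed for a failure mode that stays safe and recoverable.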

The true challenge lies not in mirroring human cognition, but in building systems resilient to its unpredictability. Stability is an illusion cached by time. Investigating methods for continual recalibration, incorporating feedback loops that account for evolving human behavior, and embracing a degree of intentional ambiguity may prove more fruitful than pursuing the chimera of complete understanding.

Original article: https://arxiv.org/pdf/2602.15963.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-19 09:33