Author: Denis Avetisyan

New research reveals that user politeness significantly alters the dynamics of information-seeking dialogues with generative AI, impacting both the quality and efficiency of the exchange.

User politeness demonstrably affects response length, informational content, and energy consumption in task-based dialogues with generative AI systems.

Generative AI systems are increasingly sophisticated, yet their responses often overlook the subtler nuances of human communication. This gap is explored in ‘Cooking Up Politeness in Human-AI Information Seeking Dialogue’, a study of how user politeness shapes conversational outcomes in task-oriented dialogues, specifically requests for cooking assistance. The results show that politeness is not merely cosmetic: it systematically influences response length, information gain, and, crucially, energy efficiency. Could prioritizing politeness in AI design lead to more inclusive, sustainable, and ultimately more effective interactions?

The Illusion of Politeness: A Costly Facade

Successful communication in task-based dialogues, including those between people and computers, depends not only on the accuracy of the information exchanged; the manner in which requests are made and information is delivered also affects efficiency. Research indicates that elements of politeness, such as phrasing requests as questions rather than commands, offering justifications, and acknowledging the system’s effort, can elicit more cooperative responses and fewer errors. This suggests politeness is not merely a social nicety but a functional component of communication, smoothing interactions by reducing cognitive load and fostering collaboration. Overlooking these socio-pragmatic factors in human-computer interaction therefore risks misjudging the true cost of a dialogue: a curt or demanding system, even if technically accurate, may require more repair turns and ultimately hinder task completion.

Current evaluations of Natural Language Processing systems frequently prioritize task completion – did the system correctly answer a question or fulfill a request? – while largely ignoring how that completion was achieved. This narrow focus obscures a critical element of effective communication: socio-pragmatic considerations like politeness, clarity, and conversational flow. A system might technically provide the correct information, but do so in a brusque or confusing manner, ultimately increasing the user’s cognitive load and diminishing the overall interaction experience. Consequently, traditional metrics can misrepresent the true ‘cost’ of interaction, failing to capture the negative impact of impolite or inefficient dialogue, and potentially leading to the development of systems that are technically functional but frustrating to use. A more holistic evaluation, therefore, must incorporate these nuanced dimensions to accurately reflect the quality and usability of human-computer dialogue.

Simulating Conversation: A Controlled Descent into Style

A large language model (LLM) simulation was conducted to generate conversational data representing three distinct politeness profiles: polite, hyper-efficient, and impolite. This approach involved prompting the LLM to role-play both the agent and the user, with specific instructions defining the desired level of politeness for each participant in the simulated conversation. By systematically varying these politeness parameters, we created a dataset of interactions designed to isolate the effects of conversational style on downstream metrics. The resulting dialogues maintained consistent task objectives and complexity, allowing for a controlled evaluation of how politeness influences information exchange and user perception.
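The role-play setup above can be pictured as a small prompt builder. This is a minimal sketch under stated assumptions: it uses the chat-style list of `{"role", "content"}` messages common to chat-completion interfaces, and the instruction wording, `PROFILE_INSTRUCTIONS`, `build_user_prompt` and `build_agent_prompt` are hypothetical illustrations, not the prompts used in the study. It also assumes, for illustration only, that the politeness instruction goes to the simulated user while the agent's instructions stay fixed.

```python
# Hypothetical instruction text per user politeness profile (not the study's prompts).
PROFILE_INSTRUCTIONS: dict[str, str] = {
    "polite": "Phrase requests courteously, say please and thank you, and acknowledge the assistant's help.",
    "hyper-efficient": "Use as few words as possible: no greetings, no thanks, only the request.",
    "impolite": "Be curt and demanding, and never thank the assistant.",
}


def build_user_prompt(profile: str, task: str) -> list[dict[str, str]]:
    """Messages asking an LLM to role-play a cooking-assistance user with the given profile."""
    if profile not in PROFILE_INSTRUCTIONS:
        raise ValueError(f"unknown politeness profile: {profile!r}")
    system = (
        "You are simulating a user who needs help with a cooking task. "
        f"Task: {task} Style: {PROFILE_INSTRUCTIONS[profile]}"
    )
    return [{"role": "system", "content": system}]


def build_agent_prompt(task: str) -> list[dict[str, str]]:
    """Messages for the assistant role; identical across profiles, so only user style varies."""
    system = f"You are a helpful cooking assistant. The user's task: {task}"
    return [{"role": "system", "content": system}]


if __name__ == "__main__":
    task = "Find out how long to rest a roast chicken before carving."
    for profile in PROFILE_INSTRUCTIONS:
        print(profile, "|", build_user_prompt(profile, task)[0]["content"])
```

Keeping the task string as a shared argument is what lets the same goal be reused across all three profiles.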

To isolate the influence of conversational style, our simulation controlled for confounds related to informational content and task difficulty. Each simulated conversation used identical task parameters and required the delivery of the same core information, irrespective of the politeness profile (polite, hyper-efficient, or impolite). Any observed differences in the agent’s responses could therefore be attributed to stylistic variation rather than to differences in the information itself or to the cognitive load of a complex task. The resulting data thus provides a controlled assessment of how politeness, or its absence, affects information delivery in isolation.

To check that the simulation results are reliable and generalize beyond a single model, three large language models were employed: Llama-3.1-8B-Instruct, DeepSeek-8B, and Qwen-2.5-7B-Instruct. These comparatively small (7-8B parameter), instruction-tuned models were selected to provide a diverse baseline for assessing the impact of politeness profiles. Using multiple models reduced the risk that results reflect one model’s architecture or training data, increasing confidence in the observed effects of conversational style on information delivery. Each model underwent identical prompting and evaluation procedures to allow comparative analysis.
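One way to organize such a multi-model run, while checking that the task really is held constant across profiles, is sketched below. The model names come from the text; treating the design as fully crossed (every model with every profile and every task) is an assumption, and `Task`, `Run`, `build_grid` and `task_held_constant` are hypothetical helpers.

```python
from dataclasses import dataclass
from itertools import product

# Model names as given in the text; resolving them to concrete checkpoints is left open.
MODELS = ["Llama-3.1-8B-Instruct", "DeepSeek-8B", "Qwen-2.5-7B-Instruct"]
PROFILES = ["polite", "hyper-efficient", "impolite"]


@dataclass(frozen=True)
class Task:
    task_id: str
    goal: str  # the same goal is reused for every profile


@dataclass(frozen=True)
class Run:
    model: str
    profile: str
    task: Task


def build_grid(tasks: list[Task]) -> list[Run]:
    """Full factorial design: every model x every profile x every task."""
    return [Run(m, p, t) for m, p, t in product(MODELS, PROFILES, tasks)]


def task_held_constant(runs: list[Run]) -> bool:
    """True if, for each (model, task_id), every profile was given the identical task."""
    seen: dict[tuple[str, str], Task] = {}
    for run in runs:
        key = (run.model, run.task.task_id)
        # setdefault stores the first Task seen for this key and returns it afterwards.
        if seen.setdefault(key, run.task) != run.task:
            return False
    return True


if __name__ == "__main__":
    tasks = [Task("t1", "Rest a roast chicken"), Task("t2", "Replace buttermilk in pancakes")]
    grid = build_grid(tasks)
    print(len(grid), task_held_constant(grid))  # 18 True
```

Frozen dataclasses compare by field values, so any run whose task text drifts for the same `task_id` is flagged.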

Quantifying the Signal: Information Nuggets and Statistical Noise

To enable quantifiable analysis of information delivery, we operationalized the concept of an ‘information nugget’ as the minimal, discrete unit of factual content within an agent’s response. This definition moves beyond simply measuring response length, instead focusing on the actual conveyance of distinct pieces of information. Each statement delivering a verifiable fact, regardless of surrounding conversational context, was counted as a single nugget. This approach allows for a direct comparison of information transfer rates across different agent profiles and conversational strategies, providing a metric independent of verbose or redundant phrasing. The count of these information nuggets serves as the primary dependent variable in our analysis of agent performance and efficiency.
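Counting nuggets presupposes that each response has already been segmented into candidate factual statements, whether by human annotators or a model; the text does not say how. Under that assumption, the sketch below counts distinct facts so that a repeated statement is not credited twice. The helpers `normalize` and `count_nuggets` are hypothetical, and exact matching after normalization is a simplification that will not merge genuine paraphrases.

```python
import re


def normalize(statement: str) -> str:
    """Lowercase, drop punctuation, and collapse whitespace so trivially reworded duplicates match."""
    statement = re.sub(r"[^\w\s]", "", statement.lower())
    return " ".join(statement.split())


def count_nuggets(annotated_facts: list[str]) -> int:
    """Count distinct factual units in one response.

    Assumes `annotated_facts` holds one entry per verifiable fact, with
    greetings and thanks already excluded upstream.
    """
    distinct = {normalize(f) for f in annotated_facts if normalize(f)}
    return len(distinct)


if __name__ == "__main__":
    facts = [
        "Rest the chicken for 15 minutes.",
        "rest the chicken for 15 minutes",
        "Tent it loosely with foil.",
    ]
    print(count_nuggets(facts))  # 2
```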

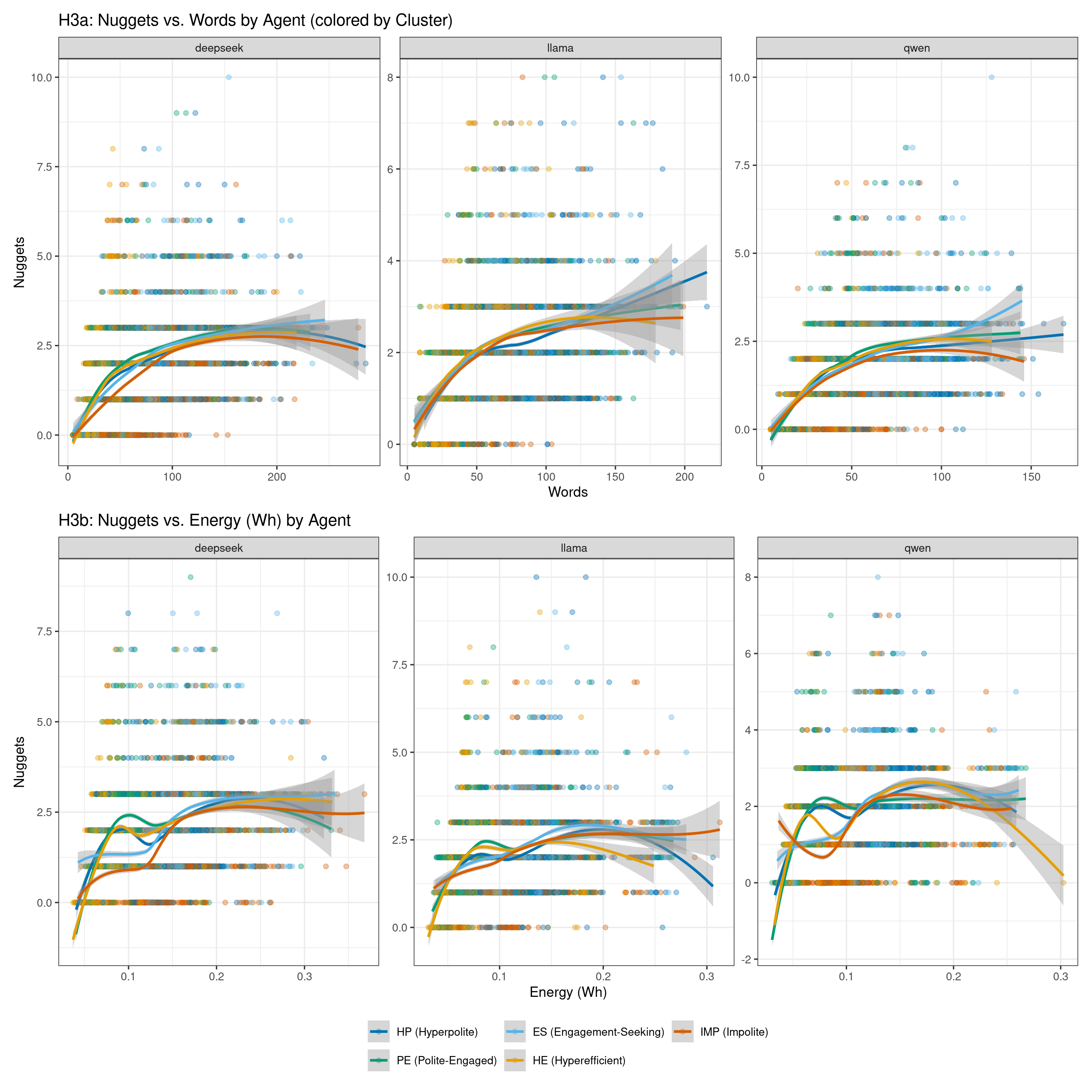

Information delivery was analyzed with a negative binomial regression model of the number of information nuggets (minimal factual units) in agent responses across politeness profiles. This model suits count data exhibiting overdispersion, that is, variance exceeding the mean, which the distribution of nugget counts showed. Nugget counts differed significantly between profiles (p < 0.001), indicating that the level of politeness affects the quantity of factual information conveyed in agent responses.
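The overdispersion that motivates a negative binomial model over a Poisson one can be checked directly from the counts. The sketch below uses only the standard library and assumes the common NB2 parameterization, in which the variance equals μ + αμ²; the study's exact model specification is not given here. The helper names and example counts are hypothetical, not data from the study. Fitting the full regression with profile as a categorical predictor would normally be done in a statistics package such as statsmodels.

```python
from statistics import mean, variance


def dispersion_index(counts: list[int]) -> float:
    """Variance-to-mean ratio; values well above 1 indicate overdispersion relative to Poisson.

    Requires at least two counts and a nonzero mean.
    """
    return variance(counts) / mean(counts)


def nb2_alpha_moments(counts: list[int]) -> float:
    """Method-of-moments estimate of the NB2 dispersion parameter alpha.

    Under NB2, Var(Y) = mu + alpha * mu**2, so alpha = (var - mu) / mu**2.
    Negative estimates are clipped to 0, where a Poisson model suffices.
    """
    m = mean(counts)
    return max(0.0, (variance(counts) - m) / m ** 2)


if __name__ == "__main__":
    nuggets_per_response = [2, 3, 9, 1, 12, 4, 2, 15]  # illustrative counts only
    print(dispersion_index(nuggets_per_response))   # about 4.67 (28 / 6)
    print(nb2_alpha_moments(nuggets_per_response))  # about 0.61 (22 / 36)
```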

Analysis of simulation data indicates that increased politeness does not consistently correlate with a higher volume of information transfer. Specifically, the count of ‘information nuggets’ – defined as minimal factual units – varied by 20-40% between different politeness profiles (p < 0.001). Certain ‘hyper-efficient’ profiles, prioritizing direct information delivery, achieved comparable or superior information nugget counts to more elaborately polite profiles. This suggests that while politeness may influence user experience, it is not a necessary condition for effective information conveyance, and strategies focused on conciseness can be equally, or more, effective in delivering factual content.

Agent responses also varied substantially in length depending on the politeness profile, differing by up to 90% between the shortest and the longest. This gap in verbosity appeared even when similar information was conveyed and was consistent across the simulation data, suggesting that politeness profiles influence the amount of text generated independently of the information content delivered.
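The text reports a length difference of up to 90% between profiles without stating the baseline. The sketch below assumes the gap is measured relative to the shorter profile’s mean, so a 90% gap means the longest profile’s mean is 1.9 times the shortest. The unit (tokens or words), the helper names and the toy numbers are all assumptions chosen for illustration.

```python
from statistics import mean


def mean_by_profile(lengths: dict[str, list[int]]) -> dict[str, float]:
    """Average response length (e.g. in tokens) for each politeness profile."""
    return {profile: mean(values) for profile, values in lengths.items()}


def max_relative_gap(lengths: dict[str, list[int]]) -> float:
    """Percent by which the longest profile's mean exceeds the shortest profile's mean."""
    means = mean_by_profile(lengths)
    shortest, longest = min(means.values()), max(means.values())
    return 100.0 * (longest - shortest) / shortest


if __name__ == "__main__":
    # Toy numbers chosen so the gap comes out at exactly 90%.
    toy = {"polite": [190, 190], "hyper-efficient": [100, 100], "impolite": [150, 150]}
    print(max_relative_gap(toy))  # 90.0
```

The same function applies unchanged to nugget counts per profile, which is how a figure such as the 20-40% variation in nuggets could be expressed.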

The Price of Pleasantries: Towards Sustainable Dialogue

Dialogue systems, increasingly prevalent in daily life, demand careful consideration of energy efficiency alongside performance metrics. Recent research demonstrates that the energy expenditure of these systems is dictated not only by computational load but also by the way they communicate. Here, energy efficiency is quantified not by total energy consumed alone but as the ratio of ‘information nuggets’, discrete and meaningful units of information delivered to the user, to the total energy used. This approach reveals that conversational style significantly affects energy usage: dialogues shaped by different politeness strategies can vary in efficiency by over 23%. Prioritizing this metric is not merely about reducing environmental impact but also about creating sustainable and accessible technology, particularly as these systems become more deeply integrated into resource-constrained environments and everyday applications.

Research indicates a significant disparity in energy efficiency among differing politeness profiles within dialogue systems. Analysis of conversational data revealed that the least efficient profile consumed up to 23.2% more energy per unit of information delivered – measured as ‘nuggets’ – compared to the most efficient. This suggests that stylistic choices in conversational AI aren’t merely about user experience; they have demonstrable energetic consequences. The findings highlight a previously unconsidered dimension of sustainable AI design, where optimizing for politeness needn’t come at the cost of increased energy consumption, and careful consideration of conversational style can contribute to greener, more efficient technologies.
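The efficiency metric (nuggets delivered per unit of energy) and the comparison “X% more energy per nugget” can be sketched as follows. How energy was measured is not specified here; the joule unit, `ProfileTotals`, `excess_energy_pct` and the example figures are hypothetical and do not reproduce the study’s data.

```python
from dataclasses import dataclass


@dataclass
class ProfileTotals:
    nuggets: int     # total information nuggets delivered under one profile
    energy_j: float  # total measured energy for those dialogues, in joules

    def nuggets_per_joule(self) -> float:
        """Efficiency as defined in the text: information delivered per unit of energy."""
        return self.nuggets / self.energy_j

    def joules_per_nugget(self) -> float:
        """Inverse view: energy spent per unit of information."""
        return self.energy_j / self.nuggets


def excess_energy_pct(totals: dict[str, ProfileTotals]) -> float:
    """Percent more energy per nugget used by the least efficient profile than by the most efficient."""
    per_nugget = [t.joules_per_nugget() for t in totals.values()]
    best, worst = min(per_nugget), max(per_nugget)
    return 100.0 * (worst - best) / best


if __name__ == "__main__":
    totals = {  # illustrative figures only
        "polite": ProfileTotals(500, 1000.0),
        "hyper-efficient": ProfileTotals(450, 850.0),
        "impolite": ProfileTotals(400, 900.0),
    }
    print(round(excess_energy_pct(totals), 1))  # 19.1
```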

For dialogue systems to truly serve diverse users, information must be delivered equitably across conversational styles. This principle, termed style-response parity, holds that a system should provide the same level of informative content regardless of whether a user’s style is formal, casual, or otherwise nuanced. Failing to achieve this parity risks systems that inadvertently privilege certain communication styles, excluding or frustrating users who communicate differently. Research indicates that stylistic variation can significantly affect perceived helpfulness and user satisfaction; a system perceived as overly polite or unnecessarily verbose, for example, may be seen as less efficient than one that delivers information directly. Prioritizing style-response parity therefore fosters inclusivity and helps keep dialogue systems accessible and effective for all.
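Style-response parity is stated as a principle rather than a formula. One way to operationalize it, purely as an illustrative assumption, is to compare the mean number of information nuggets each style receives with the best-served style and require the shortfall to stay within a tolerance. The 5% default, `parity_gap`, `satisfies_parity` and the example counts are hypothetical.

```python
from statistics import mean


def parity_gap(nuggets_by_style: dict[str, list[int]]) -> float:
    """Largest fractional shortfall of any style's mean nugget count versus the best-served style."""
    means = [mean(v) for v in nuggets_by_style.values()]
    best = max(means)
    if best == 0:
        return 0.0  # no style receives any content, so there is no disparity to measure
    return max((best - m) / best for m in means)


def satisfies_parity(nuggets_by_style: dict[str, list[int]], tolerance: float = 0.05) -> bool:
    """True if every style receives at least (1 - tolerance) of the best style's mean content."""
    return parity_gap(nuggets_by_style) <= tolerance


if __name__ == "__main__":
    counts = {"polite": [10, 12], "hyper-efficient": [11, 11], "impolite": [8, 8]}
    print(round(parity_gap(counts), 3), satisfies_parity(counts))  # 0.273 False
```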

The foundation for discerning authentic politeness in dialogue systems rested upon a comprehensive dataset derived from Wizard-of-Oz experiments, meticulously captured by Frummet and colleagues. This approach involved human ‘wizards’ simulating conversational agents, allowing researchers to observe and record naturally occurring politeness strategies in response to varied user prompts. The resulting dataset doesn’t rely on pre-defined rules or artificial constraints; instead, it reflects the nuanced and often subtle ways humans express politeness in real-time interactions. By analyzing these interactions, researchers were able to identify key linguistic features and behavioral patterns indicative of different politeness profiles, providing a crucial benchmark for evaluating the energy efficiency and inclusivity of automated dialogue systems and ensuring they move beyond simplistic, rule-based approaches to conversational style.

The pursuit of seamless human-AI interaction, as detailed in this research, inevitably reveals the messy reality of deployment. The study observes that user politeness affects energy efficiency, a practical concern easily lost in theoretical elegance. Andrey Kolmogorov once said, “The mathematics is there for everyone to see, but the vision is private.” This resonates deeply: the algorithms function as designed, yet the way they are used, shaped by something as subtle as politeness, fundamentally alters the outcome. The study highlights how pragmatic considerations, minimizing dialogue length and energy consumption, are intrinsically linked to social dynamics. Everything optimized for efficiency will, in time, be optimized back toward human factors, as production relentlessly exposes unforeseen constraints. This isn’t a failure of design, simply a testament to the enduring power of real-world use.

The Road Ahead

The observation that user politeness influences AI response length, content, and energy consumption feels less like a breakthrough and more like a restatement of basic social dynamics. Production systems, predictably, mirror the chaos they receive. The elegance of a perfectly rational agent is, once again, revealed as a laboratory fiction. The real question isn’t if politeness matters, but how much extra compute is dedicated to smoothing over the edges of blunt requests, and what that cost is to those with less bandwidth.

Future work will inevitably focus on quantifying this ‘politeness tax’: the energy expenditure required for AI to navigate impolite prompts. One suspects the results will be depressing. A more interesting, though likely ignored, avenue of inquiry involves deliberately introducing friction. Perhaps a system that subtly penalizes brusque queries could nudge users toward more considerate interactions, or at least accurately model the true cost of real-world communication.

Ultimately, this research serves as a reminder that inclusivity isn’t merely about avoiding bias in datasets. It’s about acknowledging that even the most advanced systems are still constrained by the messy realities of human interaction. Legacy will be the energy bill, and bugs will be the moments the system refuses to suffer fools any longer.

Original article: https://arxiv.org/pdf/2601.09898.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/


2026-01-17 06:41