Author: Denis Avetisyan

New research explores how assistive robots can enhance independence and social inclusion for blind individuals by leading and supporting them through shared experiences like museum visits.

This review examines the design and implementation of robot-assisted group tours, focusing on navigation, environmental awareness, and effective human-robot interaction for blind users.

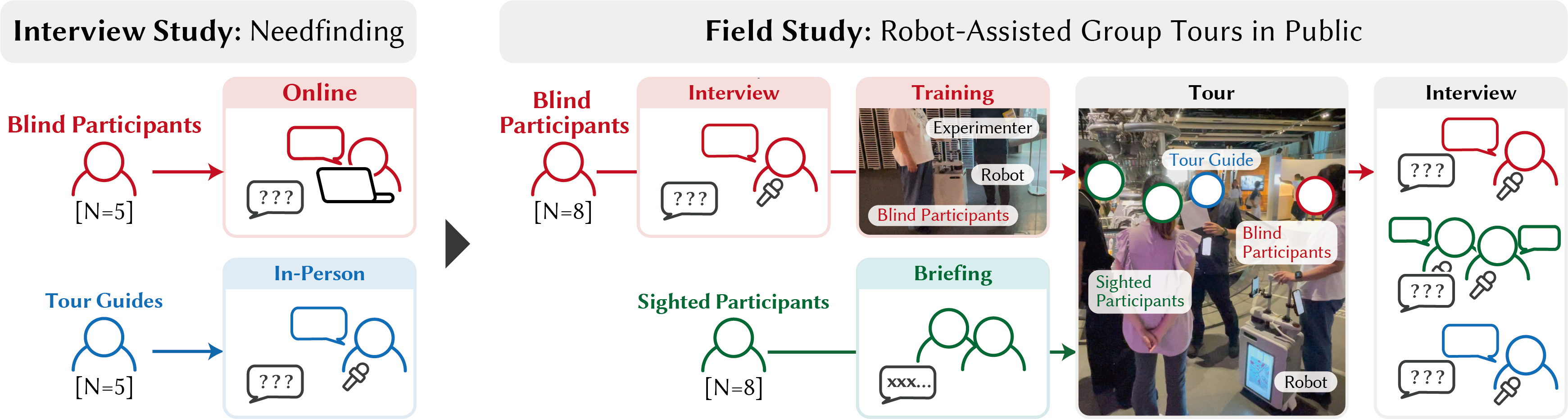

Meaningful social participation often hinges on interpreting subtle visual cues, which presents a significant barrier for blind individuals in group settings. This research, detailed in ‘Robot-Assisted Group Tours for Blind People’, investigates how a mobile robot can facilitate more inclusive group experiences, specifically guided tours with groups of mixed visual ability. Findings from a field study in a science museum suggest that robotic assistance can enhance a blind participant’s sense of safety and awareness, though concerns about full integration into the group remain. How can future robotic systems be designed not only to address navigational challenges but also to foster more natural and equitable social interactions for blind individuals in shared spaces?

Navigating Exclusion: The Barriers to Museum Access for Visually Impaired Visitors

Museums, intended as bastions of knowledge and cultural heritage, frequently present significant accessibility challenges for visually impaired individuals. Architectural layouts often prioritize aesthetic design over tactile navigability. Tactile maps, braille signage, and audio guides that describe exhibits comprehensively are often limited or absent. This lack of thoughtful accommodation extends beyond physical access. Descriptive language in existing materials frequently focuses on visual attributes (color, shape, and artistic technique) rather than conveying an exhibit’s conceptual meaning or historical context. Consequently, blind and low-vision visitors may experience museums as spaces of exclusion. They must rely on the assistance of others or passively receive secondhand interpretations, which diminishes their potential for independent exploration and full cultural participation.

Conventional museum tours frequently prioritize visual information – detailed analyses of paintings, the aesthetic qualities of sculpture, or the layout of historical artifacts – inadvertently creating significant obstacles for blind visitors. These tours often present descriptions of objects rather than experiences with them, positioning visually impaired individuals as passive recipients of second-hand accounts. Consequently, blind patrons commonly rely on sighted companions to interpret exhibits, or are limited to basic audio descriptions that lack the nuance and detail available to sighted visitors. This dependence fundamentally alters the museum experience, hindering independent exploration and diminishing the opportunity for personal connection and immersive engagement with the artwork and historical context.

The reliance on sighted guides or heavily verbalized descriptions for blind museum visitors fundamentally alters the experience of encountering art and artifacts. Meaningful engagement stems from independent exploration – the ability to approach, touch (when permitted), and interpret exhibits at one’s own pace and through personal interaction. When this autonomy is removed, the immersive quality of the museum visit is diminished, transforming it from a self-directed journey of discovery into a passively received account. This dependence not only restricts access to nuanced details but also limits the development of a personal connection with the exhibits, hindering the potential for profound aesthetic and intellectual enrichment. The core of a fulfilling museum experience – the freedom to explore and interpret – is compromised when a visitor is unable to navigate the space and engage with the collection independently.

Introducing an Assistive Robotic System: Towards Truly Inclusive Museum Tours

This research investigates an Assistive Robot intended to enhance the museum experience for blind individuals participating in group tours. The system aims to move beyond traditional guided tours, which often prioritize the needs of sighted visitors, by fostering increased autonomy for visually impaired users. This is achieved through robotic assistance that enables independent exploration of exhibits while maintaining situational awareness within the group context. The robot is designed not simply as a navigational aid, but as a platform to facilitate richer engagement with museum content, allowing users to access detailed descriptions and interact with exhibits at their own pace and according to their individual preferences.

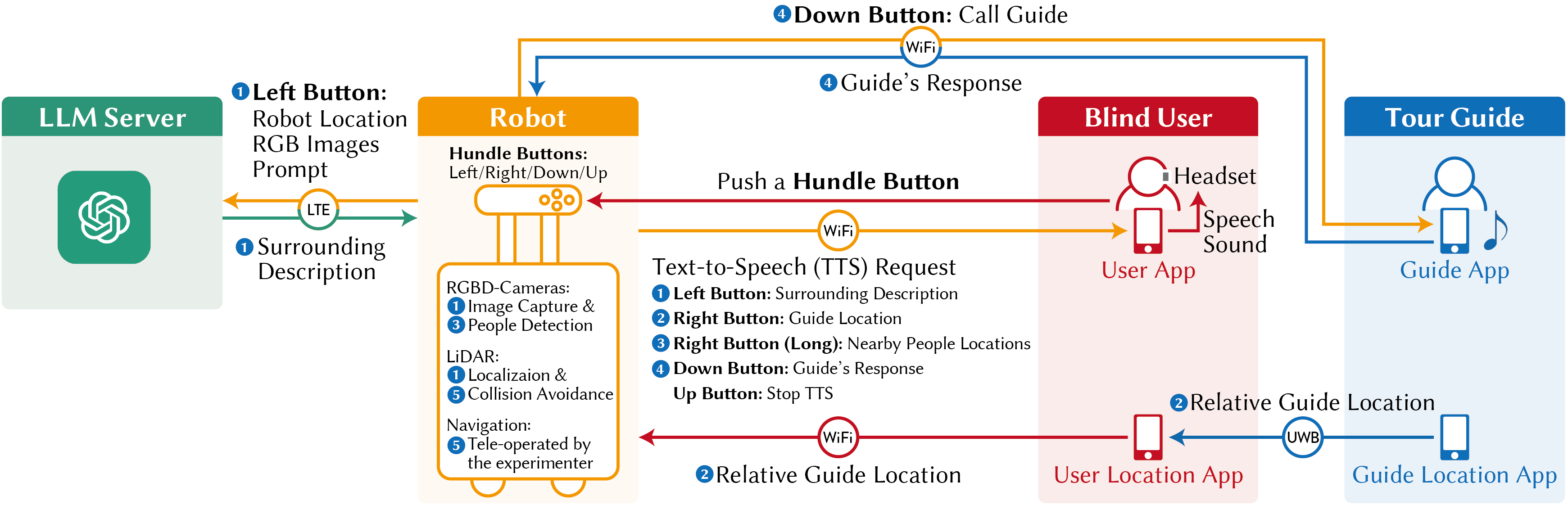

The Assistive Robot uses Ultra-Wideband (UWB) technology for precise, real-time indoor location tracking, which lets it estimate positions relative to the user and others in the tour space. This is coupled with a teleoperation system that allows a remote human operator to intervene and ensure safe navigation, particularly in crowded or dynamically changing museum spaces. Teleoperation does not amount to full remote control. Instead, it provides override capabilities for collision avoidance and complex maneuvering. These overrides supplement the robot’s autonomous navigation algorithms and enhance overall safety without compromising user independence. The combination of UWB and teleoperation is critical for reliable operation within the constraints of a public tour setting.
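The summary does not say how UWB positions are computed. One common approach is sketched here as an assumption, not as the paper's method. It measures ranges from a tag to fixed UWB anchors (for example, by two-way time-of-flight) and then solves for the tag's position by least squares. The anchor layout and coordinates are hypothetical. The sketch is 2D and applies no noise filtering.

```python
import math

def trilaterate(anchors, ranges):
    """Estimate a 2D tag position from three or more fixed anchors.

    anchors: list of (x, y) anchor coordinates in meters.
    ranges:  measured tag-to-anchor distances in meters, same order.
    Subtracting the first anchor's circle equation from the others gives a
    linear system, solved here by least squares via the 2x2 normal equations.
    """
    if len(anchors) != len(ranges) or len(anchors) < 3:
        raise ValueError("need at least 3 anchors, each with one range")
    (x0, y0), r0 = anchors[0], ranges[0]
    a11 = a12 = a22 = b1 = b2 = 0.0  # entries of A^T A and A^T b
    for (xi, yi), ri in zip(anchors[1:], ranges[1:]):
        ax, ay = 2.0 * (xi - x0), 2.0 * (yi - y0)
        b = (r0**2 - ri**2) + (xi**2 - x0**2) + (yi**2 - y0**2)
        a11 += ax * ax
        a12 += ax * ay
        a22 += ay * ay
        b1 += ax * b
        b2 += ay * b
    det = a11 * a22 - a12 * a12
    if abs(det) < 1e-12:
        raise ValueError("anchors are collinear; position is ambiguous")
    return (a22 * b1 - a12 * b2) / det, (a11 * b2 - a12 * b1) / det

if __name__ == "__main__":
    # Hypothetical anchors at the corners of a 10 m x 10 m gallery.
    anchors = [(0.0, 0.0), (10.0, 0.0), (0.0, 10.0), (10.0, 10.0)]
    tag = (3.0, 4.0)
    ranges = [math.dist(a, tag) for a in anchors]  # noise-free ranges
    print(trilaterate(anchors, ranges))  # approximately (3.0, 4.0)
```

With real, noisy ranges, a deployed system would typically add more anchors and filter successive position fixes. The closed-form solve above covers only the geometric core.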

The Assistive Robot employs a shared autonomy framework, meaning control is dynamically distributed between the robot and the user. This is achieved through continuous monitoring of the user’s navigational input – including direction, speed, and expressed preferences – which the system then integrates with its own environmental awareness and path planning algorithms. The robot doesn’t operate as a fully autonomous guide or a remotely controlled device; instead, it anticipates user needs based on observed behavior and offers suggestions or interventions only when necessary, such as obstacle avoidance or route correction. This adaptive approach ensures the user retains a sense of control and agency throughout the tour, while the robot provides support to overcome navigational challenges and enhance the overall experience.
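The summary does not specify how control is split between user and robot. A common shared-control pattern is linear blending, shown here as an illustrative assumption. The executed command is u = α·u_robot + (1 - α)·u_user, where the robot's share α grows as the nearest obstacle gets closer. The `Command` type, the distance thresholds, and the linear ramp are all hypothetical choices.

```python
from dataclasses import dataclass

@dataclass
class Command:
    linear: float   # forward speed, m/s
    angular: float  # turn rate, rad/s

def arbitration_weight(obstacle_distance, safe_distance=1.5, stop_distance=0.4):
    """Robot's share of control: 0 means all user, 1 means all robot.

    Far from obstacles the user's input passes through unchanged; the
    robot's share grows linearly as the nearest obstacle gets closer.
    """
    if obstacle_distance >= safe_distance:
        return 0.0
    if obstacle_distance <= stop_distance:
        return 1.0
    return (safe_distance - obstacle_distance) / (safe_distance - stop_distance)

def blend(user, robot, obstacle_distance):
    """Mix the user's command with the robot's avoidance command."""
    alpha = arbitration_weight(obstacle_distance)
    return Command(
        linear=alpha * robot.linear + (1 - alpha) * user.linear,
        angular=alpha * robot.angular + (1 - alpha) * user.angular,
    )
```

The linear ramp makes the hand-off gradual, so the user feels the correction build up instead of experiencing an abrupt takeover. Published shared-autonomy systems often also weigh how confident the robot is about the user's intent.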

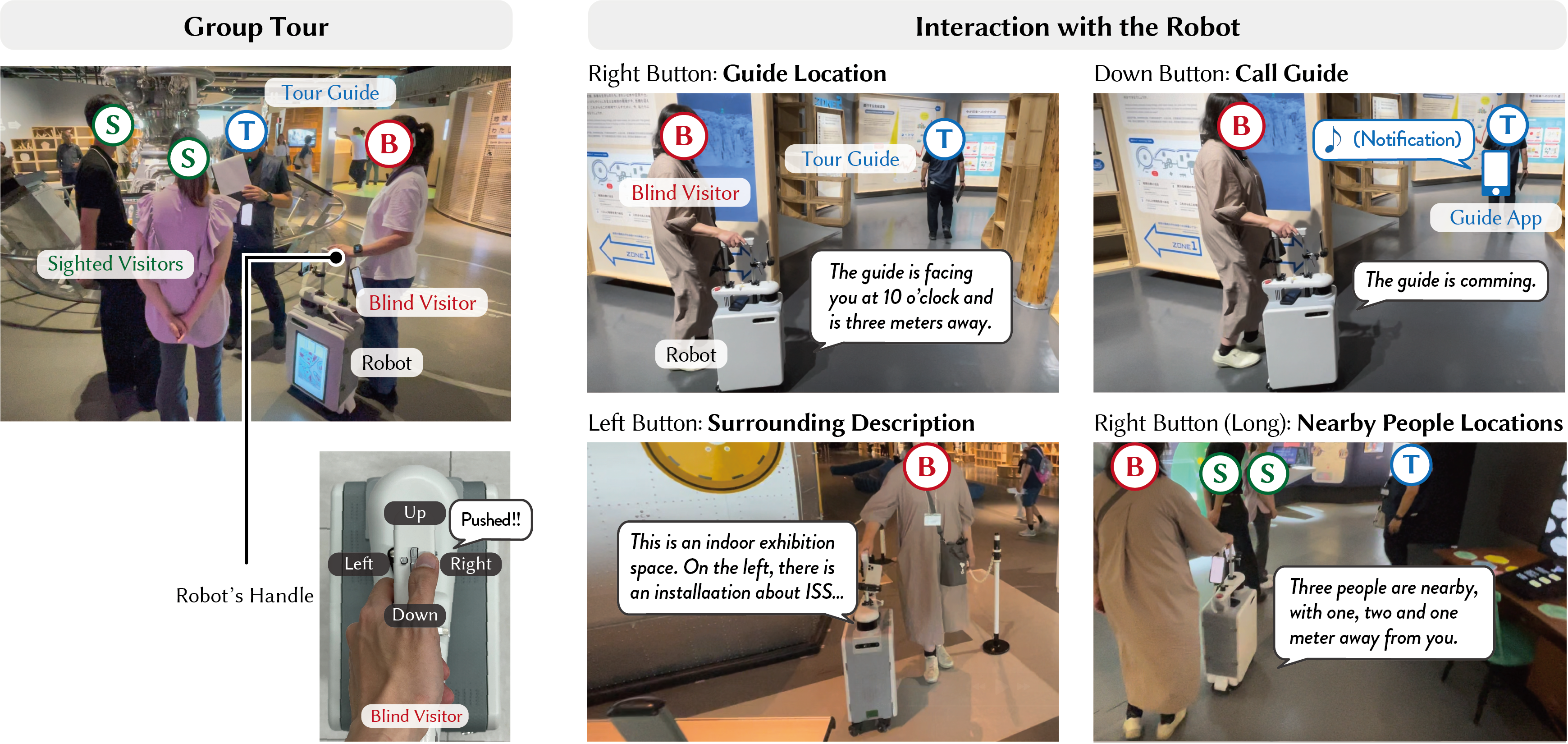

Robot Capabilities: Describing, Locating, and Connecting the Museum Experience

The robot employs a Surrounding Description capability, utilizing onboard sensors to analyze the immediate environment and generate spoken descriptions of exhibits and the museum space. This functionality extends beyond simple object recognition to include spatial relationships – detailing the position of exhibits relative to the user and to each other – and contextual information, such as exhibit titles, artist names, and relevant historical data. The system dynamically adjusts descriptions based on the user’s position and orientation, providing a continuously updated and relevant awareness of the surroundings. Data sources include visual sensors, depth cameras for spatial mapping, and pre-loaded museum exhibit data, all processed to construct coherent and informative verbal reports.
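The sketch below illustrates how spatial relationships might be verbalized relative to the user's position and orientation. It converts exhibit coordinates into clock-face directions (12 o'clock straight ahead, 3 o'clock to the right), a convention widely used in orientation and mobility training. The exhibit names, coordinates, heading convention, and sentence template are hypothetical; the summary does not describe the robot's actual phrasing.

```python
import math

def clock_direction(user_xy, heading_rad, target_xy):
    """Return the clock-face hour (1-12) of a target relative to the user's heading.

    heading_rad is measured counterclockwise from the +x axis; 12 o'clock is
    straight ahead and 3 o'clock is to the user's right.
    """
    dx = target_xy[0] - user_xy[0]
    dy = target_xy[1] - user_xy[1]
    bearing = math.atan2(dy, dx)
    # Angle measured clockwise from straight ahead, in [0, 2*pi).
    relative = (heading_rad - bearing) % (2 * math.pi)
    hour = round(relative / (math.pi / 6)) % 12
    return 12 if hour == 0 else hour

def describe(user_xy, heading_rad, exhibits):
    """Build spoken-style sentences for exhibits, nearest first."""
    lines = []
    for name, xy in sorted(exhibits.items(), key=lambda kv: math.dist(user_xy, kv[1])):
        d = math.dist(user_xy, xy)
        h = clock_direction(user_xy, heading_rad, xy)
        lines.append(f"{name}, about {d:.0f} meters at {h} o'clock.")
    return lines
```

For a user at the origin facing +y, an exhibit at (4, 0) is announced at 3 o'clock, that is, to the right.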

The robot incorporates a Nearby People Location system utilizing sensor data to identify individuals within a defined proximity. This functionality enables the robot to verbally announce the presence of registered group members and the designated Tour Guide. Identification is achieved through pre-programmed profiles or, potentially, real-time facial recognition. The system’s purpose is to facilitate group cohesion and improve communication during the museum visit by proactively informing users of the whereabouts of key individuals, thereby fostering social interaction and preventing separation within the group.
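Here is a minimal sketch of the proximity filtering behind the Nearby People Location feature. It assumes a localization layer already supplies a position per tag ID; how people are actually identified is an assumption here. It also assumes a hypothetical 5-meter announcement radius. The guide is announced first, then registered members by distance, and unregistered tags are ignored.

```python
import math

def nearby_announcements(robot_xy, positions, profiles, radius_m=5.0):
    """Announce registered people within radius_m: guide first, then by distance.

    positions: tag id -> (x, y) from the localization layer.
    profiles:  tag id -> (display name, role); unregistered tags are skipped.
    """
    found = []
    for tag, xy in positions.items():
        if tag not in profiles:
            continue  # only registered participants are announced
        d = math.dist(robot_xy, xy)
        if d <= radius_m:
            name, role = profiles[tag]
            # False sorts before True, so the guide comes first.
            found.append((role != "guide", d, name, role))
    found.sort()
    messages = []
    for _, d, name, role in found:
        who = f"Your guide {name}" if role == "guide" else name
        messages.append(f"{who} is {d:.1f} meters away.")
    return messages
```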

The Call Guide Feature establishes a direct audio communication channel between the user and the designated tour guide via the robotic platform. When the user activates it, the robot transmits an audio request to a pre-configured communication endpoint, typically a radio or networked device carried by the tour guide. The user can then state their need for assistance (such as clarification on an exhibit, directions within the museum, or reporting an issue) without having to locate the guide independently. The system is designed for one-to-one communication and prioritizes the user’s request so that the guide can respond promptly, supporting efficient and personalized assistance throughout the museum visit.
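The request lifecycle described above can be sketched as a small state machine. The transport is abstracted as a `send` callable that returns whether the guide's device acknowledged the request, so no real radio or network API is implied. The class name, message fields, and retry limit are hypothetical.

```python
from enum import Enum, auto

class CallState(Enum):
    IDLE = auto()
    CONNECTED = auto()
    FAILED = auto()

class GuideCall:
    """Tracks one user-initiated call to the guide's device (hypothetical design).

    send is any callable that transmits a request dict and returns True if
    the guide's device acknowledged it; the real transport is unspecified.
    """

    def __init__(self, send, max_attempts=3):
        self.send = send
        self.max_attempts = max_attempts
        self.state = CallState.IDLE
        self.attempts = 0

    def press_call_button(self):
        """Request the guide, retrying up to max_attempts times."""
        if self.state is CallState.CONNECTED:
            return self.state  # ignore repeated presses during an active call
        for attempt in range(1, self.max_attempts + 1):
            self.attempts = attempt
            if self.send({"type": "call_guide", "attempt": attempt}):
                self.state = CallState.CONNECTED
                return self.state
        self.state = CallState.FAILED  # caller can tell the user to try again
        return self.state

    def hang_up(self):
        """End the call and reset for the next request."""
        self.state = CallState.IDLE
        self.attempts = 0
```

Ignoring repeated presses while connected prevents the guide from receiving duplicate requests if the user presses the button again during an active call.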

The robot facilitates interaction with designated “Touch Experience” exhibits, allowing users to physically explore objects while receiving contextual information. This functionality is achieved through a combination of proximity sensing and verbal feedback; the robot detects when a user is engaging with a tactile exhibit and provides descriptive audio commentary relevant to the object being touched. This integration of tactile and auditory stimuli is intended to enhance learning through multi-sensory engagement, accommodating diverse learning styles and providing a more immersive museum experience. The system is designed to support exhibits featuring varying textures, shapes, and materials, offering descriptive details appropriate to the specific object being explored.
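Detecting engagement from proximity readings raises a practical issue. A hand hovering near a single distance cutoff would retrigger the commentary repeatedly. One standard remedy is hysteresis: engage below one threshold and re-arm only above a larger one. It is sketched here as an assumption about how such a trigger could work, and the centimeter thresholds are hypothetical.

```python
class TouchTrigger:
    """Fire commentary once when a hand comes close; re-arm after it moves away.

    A smaller enter threshold than exit threshold (hysteresis) keeps noisy
    readings near a single cutoff from retriggering the audio.
    """

    def __init__(self, enter_cm=10.0, exit_cm=20.0):
        if enter_cm >= exit_cm:
            raise ValueError("enter_cm must be below exit_cm")
        self.enter_cm = enter_cm
        self.exit_cm = exit_cm
        self.engaged = False

    def update(self, distance_cm):
        """Return True exactly when a new engagement starts."""
        if not self.engaged and distance_cm <= self.enter_cm:
            self.engaged = True
            return True
        if self.engaged and distance_cm >= self.exit_cm:
            self.engaged = False  # hand has clearly left; allow a new trigger
        return False
```

For a reading sequence of 30, 12, 9, 11, 9, 15, 25, 8 cm, the trigger fires only twice. It fires on the first 9, and again on the final 8 after the hand moved past 20 cm.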

Towards Personalized Exploration and a Future of Inclusive Museum Design

The effectiveness of the assistive robotic system hinges on its capacity for personalization, with features adjusted dynamically to suit each user’s requirements and preferences. This goes beyond adjusting volume or display settings. The system is meant to adapt to an individual’s typical movement patterns, preferred level of descriptive detail, and even communication style. By tailoring what information is presented and how it is delivered, the system avoids overwhelming the user with unnecessary data. It also avoids formats that are difficult to process. This individualized approach fosters a sense of agency and control, enabling blind participants to navigate complex environments confidently and engage fully with museum exhibits. The result maximizes the benefits of the assistive technology and supports a truly inclusive experience.
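The sketch below is a simplified illustration of adapting the level of detail. It keeps a per-user preference that explicit feedback nudges up or down, then picks the matching exhibit text and falls back to shorter versions when needed. It is a hypothetical design: the level names, feedback words, and data layout are assumptions. The summary does not describe how the robot actually learns preferences.

```python
from dataclasses import dataclass

LEVELS = ("brief", "standard", "detailed")  # hypothetical detail levels

@dataclass
class UserProfile:
    level: int = 1  # index into LEVELS; starts at "standard"

    def record_feedback(self, feedback):
        """Nudge the preferred detail level from explicit user feedback."""
        if feedback == "more" and self.level < len(LEVELS) - 1:
            self.level += 1
        elif feedback == "skip" and self.level > 0:
            self.level -= 1

def pick_description(exhibit_texts, profile):
    """Return the text at the user's level, falling back to shorter versions."""
    for i in range(profile.level, -1, -1):
        text = exhibit_texts.get(LEVELS[i])
        if text:
            return text
    raise KeyError("exhibit has no description at or below the user's level")
```

Falling back to a shorter text means a user who prefers detail still hears something at exhibits that only have a brief description.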

The assistive robot fundamentally shifts the museum experience for blind individuals by prioritizing independent exploration and detailed environmental awareness. Rather than offering passive accompaniment, the system delivers nuanced contextual information, such as descriptions of exhibits, spatial layouts, and even the positions of other tour participants. This enables users to navigate and interact with exhibits on their own terms. Such proactive support fosters a sense of agency. Participants can engage fully in group tours without relying heavily on sighted guides or feeling disconnected from the experience. In effect, the robot functions as a personalized extension of the user’s senses, bridging information gaps and promoting confident, self-directed learning within a shared cultural space.

The field study supports the practical application of mobile robotic assistance in broadening museum access for blind individuals, specifically during guided group tours. Participants found the assistive robot feasible to use and reported a heightened sense of both safety and engagement throughout the tour. A System Usability Scale (SUS) score of 90.6 reflects this positive reception. The score signifies excellent usability and suggests strong potential for wider adoption of this technology in cultural heritage settings. The findings suggest that thoughtfully designed robotic aids can help bridge accessibility gaps and foster more inclusive experiences for all museum visitors.
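The SUS figure can be unpacked with the standard scoring rule for the ten-item questionnaire. Each odd-numbered (positively worded) item contributes its rating minus 1. Each even-numbered (negatively worded) item contributes 5 minus its rating. The sum is then multiplied by 2.5 to give a score from 0 to 100. A study-level figure like 90.6 is conventionally the mean of the individual scores; the summary does not give the per-participant responses, so none are reproduced here.

```python
def sus_score(responses):
    """Compute one respondent's System Usability Scale score (0-100).

    responses: ten Likert ratings from 1 (strongly disagree) to 5 (strongly
    agree), in questionnaire order. Odd-numbered items are positively worded,
    even-numbered items negatively worded.
    """
    if len(responses) != 10 or any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("SUS needs ten ratings between 1 and 5")
    total = 0
    for item, r in enumerate(responses, start=1):
        total += (r - 1) if item % 2 == 1 else (5 - r)
    return total * 2.5

def mean_sus(all_responses):
    """Study-level SUS: the mean of the individual respondents' scores."""
    scores = [sus_score(r) for r in all_responses]
    return sum(scores) / len(scores)
```

For instance, a respondent who answers 5 to every odd item and 1 to every even item scores the maximum of 100. Answering 3 throughout yields 50.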

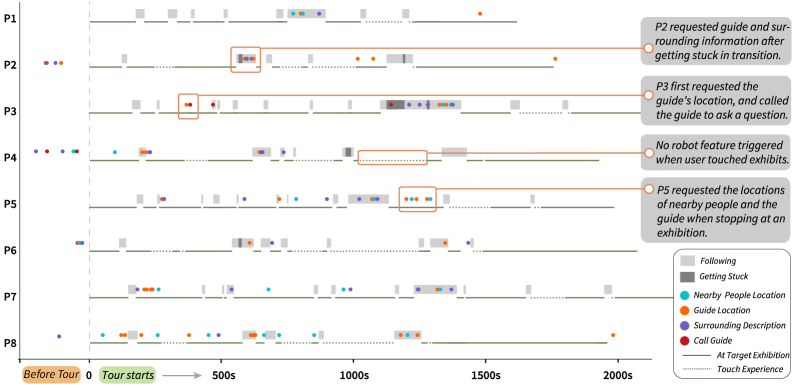

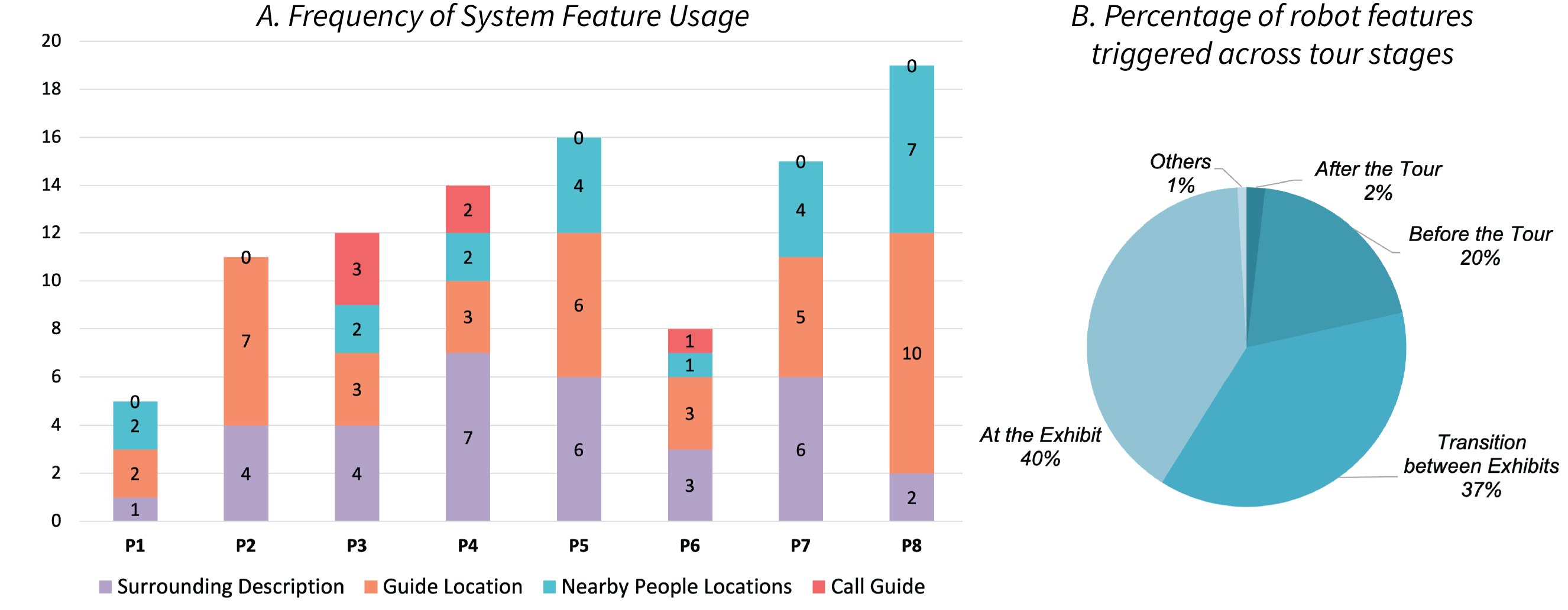

Participant interaction data revealed a clear preference for the robot’s navigational assistance and environmental awareness capabilities. The ‘Guide Location’ feature, used an average of 4.88 times per tour, appears to have helped users maintain spatial orientation and explore the museum with confidence. Close behind was the ‘Surrounding Description’ feature, accessed an average of 4.13 times per tour, which points to a strong demand for detailed contextual information about the exhibits and surrounding areas. This frequent use of both features underscores their role in facilitating independent exploration and enhancing the museum experience for blind individuals. It also suggests that future iterations should prioritize refining and expanding these core functions.

Ongoing development centers on refining the robot’s ability to perceive and respond to the dynamics of group interaction, moving beyond individual assistance toward a genuinely collaborative museum experience. The researchers aim to give the robot greater social awareness. This would enable it to identify conversational cues, recognize participants’ engagement levels, and proactively mediate discussions. For example, it could relay questions from a visually impaired user to the tour guide or summarize key points for the whole group. This enhanced ‘Group Interaction Awareness’ is intended to improve communication flow and cultivate a stronger sense of inclusion. Blind individuals could then participate more fully in shared learning and social bonding during guided tours, ultimately turning the assistive robot from a personal guide into a seamless member of the group.

The research highlights a crucial interplay between system structure and user experience, mirroring the principles of elegant design. This study demonstrates that assistive robotics for blind individuals isn’t merely about implementing navigation tools. It’s about crafting a cohesive system that facilitates genuine social engagement within a group. As Linus Torvalds aptly stated, “Talk is cheap. Show me the code.” This project embodies that sentiment, moving beyond theoretical accessibility to a practical demonstration of how carefully considered robotic assistance can unlock richer experiences. Success hinges on how well the technology integrates with, and supports, the natural flow of human interaction. It also requires acknowledging that every new dependency, such as reliance on UWB signals for positioning, introduces hidden costs to overall freedom and usability.

Beyond the Tour: Charting a Course for Embodied Assistance

The pursuit of robotic assistance for blind individuals navigating group settings reveals a fundamental truth: mobility is rarely the core challenge. Rather, it is the orchestration of information – the constant negotiation between personal space, social cues, and environmental awareness – that truly defines successful interaction. This work, while demonstrating a viable technological scaffold, merely scratches the surface of this complexity. One cannot simply introduce a ‘robotic heart’ into a social ‘bloodstream’ without considering the intricate network of unspoken communication it disrupts or, potentially, enhances.

Future efforts must move beyond the isolated task of navigation. A truly elegant solution demands a system that anticipates the needs of the individual within the group dynamic. This requires a shift from reactive assistance – responding to immediate obstacles – to proactive support, predicting potential social friction and offering subtle guidance. The current reliance on UWB technology, while functional, represents a limited sensory palette; a more holistic understanding will necessitate the integration of multimodal data – auditory, tactile, and even olfactory information – to build a richer, more nuanced representation of the environment.

Ultimately, the success of such systems will not be measured by technical precision, but by their ability to fade into the background. The goal is not to replace human connection, but to augment it, fostering greater independence and participation without drawing undue attention to the assistive technology itself. The challenge, then, lies in designing a system that is both powerfully capable and gracefully unobtrusive: a delicate balance, indeed.

Original article: https://arxiv.org/pdf/2602.04458.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-05 10:14