Author: Denis Avetisyan

Researchers have developed a novel AI model inspired by the navigation strategies of insects, achieving robust point-goal navigation with minimal pre-training.

This work presents an insect-inspired AI architecture integrating the central complex and mushroom body for efficient online learning and obstacle avoidance in robotic navigation tasks.

Despite advances in artificial intelligence, achieving efficient and robust navigation remains a significant challenge, particularly with limited computational resources. This is addressed in ‘An Efficient Insect-inspired Approach for Visual Point-goal Navigation’, which presents a novel agent drawing inspiration from the neural circuitry of insect brains – specifically, the integration of path integration via a central complex and associative learning through a mushroom body. The resulting model achieves performance comparable to state-of-the-art navigation systems with dramatically reduced computational cost and demonstrates robust obstacle avoidance in simulated environments. Could this bio-inspired approach unlock new avenues for developing lightweight, adaptable AI for real-world robotics and navigation tasks?

Deconstructing the Map: The Limits of Pre-Programmed Space

Conventional robotics systems frequently depend on meticulously constructed maps to navigate and operate within their surroundings. While effective in static, well-defined environments, this reliance introduces a critical limitation when confronted with dynamic or previously unknown spaces. The pre-built map approach struggles with unexpected obstacles, changes in layout, or entirely novel locations, necessitating complete re-mapping before any task can be attempted. This inflexibility hinders a robot’s ability to respond to real-world unpredictability and restricts its deployment in environments that are not fully predictable, such as disaster zones, rapidly changing construction sites, or even everyday human-populated spaces. Consequently, there’s a growing need for robotic systems capable of operating autonomously without prior spatial knowledge, pushing the boundaries of robotic intelligence and adaptability.

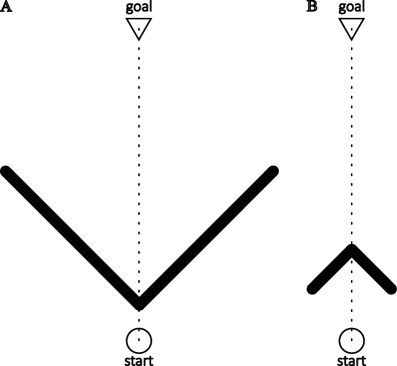

Point-goal navigation embodies a core challenge in artificial intelligence: enabling agents to achieve objectives in completely unfamiliar environments. Unlike traditional robotic systems that depend on detailed pre-existing maps, these agents must learn to navigate solely from a target location, expressed as a simple coordinate, without any prior understanding of the surrounding space. This necessitates the development of algorithms capable of simultaneous localization – determining the agent’s position – and path planning, all while operating under conditions of substantial uncertainty. The difficulty lies not merely in avoiding obstacles, but in actively exploring the environment to build a functional representation of it, a process akin to human spatial learning. Consequently, successful point-goal navigation demands not only efficient movement, but also robust methods for dealing with noisy sensor data and incomplete information, pushing the boundaries of autonomous agent capabilities.

Effective point-goal navigation demands a tightly integrated approach to localization and path planning, as an agent’s ability to reach a designated target hinges on both knowing where it is and determining how to get there. Localization, the process of accurately estimating an agent’s position within an unknown environment, presents a unique challenge without pre-existing maps or landmarks; solutions often involve sensor data fusion – combining information from cameras, lidar, and inertial measurement units – to build a real-time understanding of surroundings. Simultaneously, path planning algorithms must efficiently compute collision-free trajectories towards the goal, adapting to dynamic obstacles and uncertainties in the environment. The most successful strategies treat these two components not as separate problems, but as an iterative loop: improved localization refines the planned path, while navigating that path provides further data to enhance localization accuracy, ultimately enabling robust and adaptable movement through unfamiliar spaces.
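The localization half of this loop can, in its simplest form, be done by path integration (dead reckoning): the agent accumulates its own movements and keeps a running estimate of where the goal lies relative to itself. The following is a minimal sketch under stated assumptions, not the paper's central complex model. It assumes a planar agent that turns and then moves forward each step, and the function names `integrate_step` and `goal_in_agent_frame` are hypothetical.

```python
import math

def integrate_step(pose, forward, turn):
    """Advance a planar pose (x, y, theta) by one turn-then-move step."""
    x, y, theta = pose
    theta = (theta + turn) % (2 * math.pi)
    x += forward * math.cos(theta)
    y += forward * math.sin(theta)
    return (x, y, theta)

def goal_in_agent_frame(pose, goal):
    """Return (distance, bearing) of a world-frame goal relative to the agent."""
    x, y, theta = pose
    dx, dy = goal[0] - x, goal[1] - y
    distance = math.hypot(dx, dy)
    # Wrap the bearing into [-pi, pi] so its sign says which way to turn.
    bearing = math.atan2(dy, dx) - theta
    bearing = math.atan2(math.sin(bearing), math.cos(bearing))
    return distance, bearing
```

A controller could call `integrate_step` after every motor command and steer to reduce the bearing returned by `goal_in_agent_frame`. In practice the `forward` and `turn` estimates are noisy, which is why pure path integration drifts and benefits from the corrective feedback described above.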

![Despite a strong motor perturbation ([latex]b_{\omega} = 0.5[/latex] and [latex]n_{\omega} = 0.05[/latex]), the robot successfully navigated from a starting point of (-6, 2) to a goal of (-1, 0) within 500 frames, avoiding static obstacles as shown in the main trajectory and detailed in Supplementary Fig. 10.](https://arxiv.org/html/2601.16806v1/fig_png/gibson-example-path.png)

Simulating Reality: The Foundation for Scalable Exploration

Habitat is a high-fidelity simulation environment frequently utilized for the development and evaluation of Point-Goal Navigation (PGN) algorithms. These algorithms require robots to navigate to specified goal locations within a 3D space, and Habitat provides a platform for training and testing these capabilities at scale. The environment offers a diverse range of realistic indoor scenes, allowing researchers to assess PGN performance across varied layouts and visual conditions. Importantly, Habitat facilitates the generation of large datasets of simulated robot trajectories, which are critical for training data-hungry machine learning models used in PGN systems. This allows for rapid prototyping and iterative improvement of algorithms before deployment on physical robots, reducing both development time and cost.

Effective transfer of robotic algorithms trained in simulation to real-world deployment is predicated on the fidelity of the simulated environment. Discrepancies between simulated physics – including object interactions, friction, and gravity – and real-world phenomena introduce systematic errors that negatively impact performance. Similarly, inaccuracies in sensor modeling, such as noise profiles, field of view, and limitations in range or resolution, can lead to algorithms that fail to generalize. Addressing these issues requires robust physics engines and sensor models that accurately replicate real-world characteristics, enabling algorithms to learn policies applicable to genuine robotic tasks and environments.

iGibson distinguishes itself from typical robotic simulation environments through its emphasis on fidelity in both physical and visual rendering. The simulator utilizes a physics engine capable of modeling articulated objects, deformable materials, and complex contact dynamics with increased accuracy compared to commonly used platforms. Visually, iGibson employs photorealistic rendering techniques and leverages a large-scale scene dataset of indoor environments, allowing for more realistic sensor data generation. This enhanced realism extends to modeling lighting conditions, material properties, and sensor noise, contributing to improved transfer learning performance for robotic agents trained within the simulation.

![Insect-inspired navigation consistently outperforms ablated models across 100 independent trials in Habitat with Gibson4+ scenes, as measured by success rate [latex] SR [/latex] and success weighted by path length [latex] SPL [/latex], and remains competitive with existing state-of-the-art approaches after accounting for simulator and dataset differences.](https://arxiv.org/html/2601.16806v1/x2.png)
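
The two metrics named in the figure are standard in point-goal navigation benchmarks. SR is the fraction of episodes that end in success, and SPL (success weighted by path length) additionally discounts each success by how much longer the agent's path was than the shortest possible one. The sketch below follows the commonly used definition; the function and argument names are illustrative, and it assumes every shortest-path length is greater than zero.

```python
def success_rate(successes):
    """Fraction of episodes that ended in success (1) rather than failure (0)."""
    return sum(successes) / len(successes)

def spl(successes, shortest_lengths, path_lengths):
    """Success weighted by path length, averaged over episodes.

    Each episode contributes S * l / max(p, l), where S is 0 or 1,
    l is the shortest-path length and p is the length actually travelled.
    Assumes l > 0 for every episode.
    """
    total = 0.0
    for s, l, p in zip(successes, shortest_lengths, path_lengths):
        total += s * l / max(p, l)
    return total / len(successes)
```

For example, an agent that succeeds by travelling exactly twice the shortest distance earns 0.5 for that episode, while a failed episode earns 0 regardless of path length.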

The Echo of Experience: Building Robustness Through Continual Learning

Continual Learning (CL) is a machine learning paradigm designed to overcome the limitations of traditional models which suffer from catastrophic forgetting – the tendency to abruptly lose previously learned information when exposed to new data. Unlike models trained on static datasets, CL algorithms aim to accumulate knowledge incrementally over time, retaining performance on older tasks while adapting to new ones. This is achieved through various techniques that regulate the modification of model parameters, preventing the overwriting of crucial information from prior learning experiences. The core challenge in CL lies in balancing plasticity – the ability to learn new information – with stability – the retention of existing knowledge, and research focuses on developing strategies to achieve this balance efficiently and effectively.

Visual reafference, in the context of continual learning for autonomous navigation, functions as an error-correcting mechanism by comparing predicted sensory input with actual sensory input. This comparison generates a reafference signal, a discrepancy measure indicating the difference between the expected and observed states. A low reafference signal confirms the agent’s internal state representation and validates successful actions, while a high signal triggers adjustments to the agent’s control policy or internal map. This feedback loop is critical for maintaining consistent localization and orientation throughout extended operation, preventing accumulated errors from degrading performance and enabling robust adaptation to new environments and conditions. The efficacy of visual reafference relies on accurate sensory processing and a robust state estimation framework.
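
The predicted-versus-observed comparison can be illustrated with a small sketch. This is an assumption-laden toy, not the paper's implementation: sensory input is reduced to a flat list of numbers, the discrepancy is a mean absolute difference, and the names `reafference_signal`, `needs_correction`, and the `threshold` value are hypothetical.

```python
def reafference_signal(predicted, observed):
    """Mean absolute difference between predicted and observed sensory input."""
    if len(predicted) != len(observed):
        raise ValueError("predicted and observed must have the same length")
    return sum(abs(p - o) for p, o in zip(predicted, observed)) / len(predicted)

def needs_correction(predicted, observed, threshold=0.1):
    """True when the discrepancy is large enough to warrant an adjustment."""
    return reafference_signal(predicted, observed) > threshold
```

A low value leaves the current behaviour untouched, while a value above the (assumed) threshold would be the cue to adjust the policy or the internal state estimate.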

Markov Chain Monte Carlo (MCMC) methods are employed within the continual learning framework to optimize the agent’s learning strategy by probabilistically sampling from a range of possible actions or parameters. This allows the agent to explore different learning approaches and identify those that maximize performance over time, effectively addressing the challenge of adapting to new environments without forgetting previously learned skills. The probabilistic nature of MCMC facilitates a balance between exploration and exploitation, enabling the agent to refine its learning process and improve its overall navigational efficiency in dynamic and complex environments. By iteratively sampling and evaluating different learning configurations, MCMC helps mitigate the impact of catastrophic forgetting and promotes robust, long-term learning capabilities.
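
The text above does not say which quantities are sampled or how candidates are scored, so the following is only a generic illustration of the MCMC idea: a Metropolis sampler with a symmetric Gaussian proposal over a single real-valued parameter. The `log_score` callable, the `step_size`, and the fixed `seed` are all assumptions made for the sketch.

```python
import math
import random

def metropolis(log_score, start, steps, step_size=0.5, seed=0):
    """Draw samples whose density is proportional to exp(log_score(x))."""
    rng = random.Random(seed)
    current = start
    current_score = log_score(current)
    samples = []
    for _ in range(steps):
        # Propose a nearby value; the Gaussian proposal is symmetric.
        proposal = current + rng.gauss(0.0, step_size)
        proposal_score = log_score(proposal)
        # Accept with probability min(1, exp(proposal_score - current_score)).
        accept_prob = math.exp(min(0.0, proposal_score - current_score))
        if rng.random() < accept_prob:
            current, current_score = proposal, proposal_score
        samples.append(current)
    return samples
```

Better-scoring proposals are always accepted and worse ones are accepted only sometimes, which is what gives the sampler its balance between exploitation and exploration.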

![Insect-inspired models utilizing selective memory consolidation ([latex]\text{Full-learnt}[/latex]) outperform those employing excessive or hypothetical memory consolidation strategies across multiple trials.](https://arxiv.org/html/2601.16806v1/x5.png)

Selective Retention: Sculpting Memory for Long-Term Autonomy

The stabilization of learned skills hinges significantly on memory consolidation, a process crucial for preventing catastrophic forgetting – the abrupt and total loss of previously acquired knowledge when learning something new. This mechanism doesn’t simply store experiences; it selectively strengthens important memories related to successful navigation, effectively distilling crucial spatial information from complex environments. Without consolidation, robotic agents would constantly overwrite previously learned routes and strategies, rendering long-term autonomous operation impossible. The model demonstrates that by prioritizing and reinforcing these key navigational memories, agents can build a robust and enduring understanding of their surroundings, allowing for consistent performance even as environments change or become more complex. This process is fundamental to creating adaptive systems capable of continuous learning without sacrificing previously mastered skills.

Agents employing this novel system don’t simply accumulate navigational experience; they curate it. The model actively prioritizes and reinforces memories crucial for successful pathfinding, effectively distinguishing between essential landmarks and transient details. This selective strengthening allows the agent to maintain proficiency even as the environment undergoes alterations – new obstacles appear, familiar routes are blocked, or lighting conditions change. Rather than being overwhelmed by a constant influx of information, the system intelligently focuses on consolidating knowledge relevant to sustained performance, mirroring the way biological systems prioritize and retain vital skills while adapting to dynamic surroundings. The result is a robust and reliable navigational ability, capable of weathering environmental changes without catastrophic forgetting.
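
The idea of curating rather than accumulating experience can be made concrete with a toy example. This is not the paper's mushroom body learning rule; it is a hypothetical sketch in which each memory is a cue with a strength between 0 and 1, only experiences whose salience reaches a threshold are reinforced, and everything else slowly decays. The names `consolidate`, `threshold`, `rate`, and `decay` are assumptions.

```python
def consolidate(memory, experiences, threshold=0.5, rate=0.2, decay=0.05):
    """Return an updated copy of `memory` (cue -> strength in [0, 1]).

    `experiences` is a list of (cue, salience) pairs. Only cues whose
    salience reaches `threshold` are strengthened; all stored cues decay.
    """
    # Every stored memory fades slightly on each consolidation pass.
    updated = {cue: strength * (1.0 - decay) for cue, strength in memory.items()}
    for cue, salience in experiences:
        if salience >= threshold:
            old = updated.get(cue, 0.0)
            # Move the strength a fraction of the way towards 1.0.
            updated[cue] = old + rate * (1.0 - old)
    return updated
```

With these assumed settings, a salient cue such as a wall that caused a collision gains strength, while a low-salience cue such as a passing shadow is never stored at all.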

The developed model exhibits a marked enhancement in obstacle avoidance capabilities, achieving a substantial reduction in collisions during navigational tasks. Compared to a baseline odometry-collision model – which relies on simple reactionary responses to detected obstacles – this system proactively anticipates and navigates around impediments. This improvement isn’t simply about reacting faster; the model learns to predict potential collisions based on its accumulated spatial knowledge, enabling smoother and more efficient path planning. Consequently, the agent demonstrates a heightened ability to traverse complex environments with greater reliability and fewer disruptive encounters, suggesting a transition from reactive collision avoidance to a more sophisticated, predictive navigational strategy.

Combining continual learning with memory consolidation gives a robust architecture for navigation systems that adapt. Agents acquire new navigational skills without catastrophically forgetting earlier ones, a common limitation in traditional robotic systems. By selectively reinforcing crucial memories while accommodating new information, the system builds on existing knowledge and improves performance in both familiar and novel environments. The benefit is not only faster completion times or shorter paths: agents retain learned skills across environmental shifts and extended operational periods, which keeps their behavior consistent and reliable, a prerequisite for real-world applications that depend on autonomous operation.

The pursuit of efficient navigation, as demonstrated in this work, echoes a fundamental principle of system understanding: deconstruction. This research doesn’t simply use insect neurobiology; it dissects the central complex and mushroom body, isolating core functionalities to build a robust, lightweight AI. As Arthur C. Clarke aptly stated, “Any sufficiently advanced technology is indistinguishable from magic.” This seemingly magical ability of the model to learn and navigate without extensive pre-training stems from a deliberate reduction to essential components, mirroring the process of reverse-engineering a complex system to reveal its underlying logic. The system’s capacity for online learning highlights that true intelligence isn’t about brute force computation, but elegant simplification.

Where Do We Go From Here?

This work establishes a functional, if simplified, insect-inspired navigational system. But the true test isn’t whether it works (simulations always flatter the architect); it’s what happens when the rules are bent. What if the ‘point-goal’ isn’t a fixed location, but a probabilistic distribution? Current implementations assume a static objective; introducing dynamic goals, or even deceptive ones, would rapidly expose the limitations of a system relying on consolidated memory traces. The elegance of the central complex/mushroom body pairing lies in its parsimony, but that same frugality might prevent adaptation to truly novel, adversarial environments.

The question isn’t merely one of algorithmic complexity. Robustness isn’t achieved through more layers, but through an understanding of failure. Where does this system break down? Is it vulnerable to sensory deprivation? To subtly altered spatial relationships? To the introduction of irrelevant stimuli designed to overload the ‘mushroom body’ with false memories? Answering these questions requires a deliberate program of ‘destructive testing’, not to dismantle the model, but to reveal the boundaries of its competence.

Ultimately, this work presents a compelling argument for bio-inspiration. However, true intelligence isn’t mimicry. It’s the ability to transcend the limitations of the source material. The next step isn’t to build a better insect brain, but to understand what that brain fails to do, and to engineer a solution that does it better, even if that solution bears no resemblance to its biological progenitor.

Original article: https://arxiv.org/pdf/2601.16806.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-01-26 09:48