Author: Denis Avetisyan

A new generation of neural interfaces is enabling more natural and precise control of robotic limbs, bringing assistive technology closer to seamless integration with daily life.

NOIR 2.0 demonstrates improved speed and accuracy in decoding neural signals for intuitive robotic arm operation in everyday tasks.

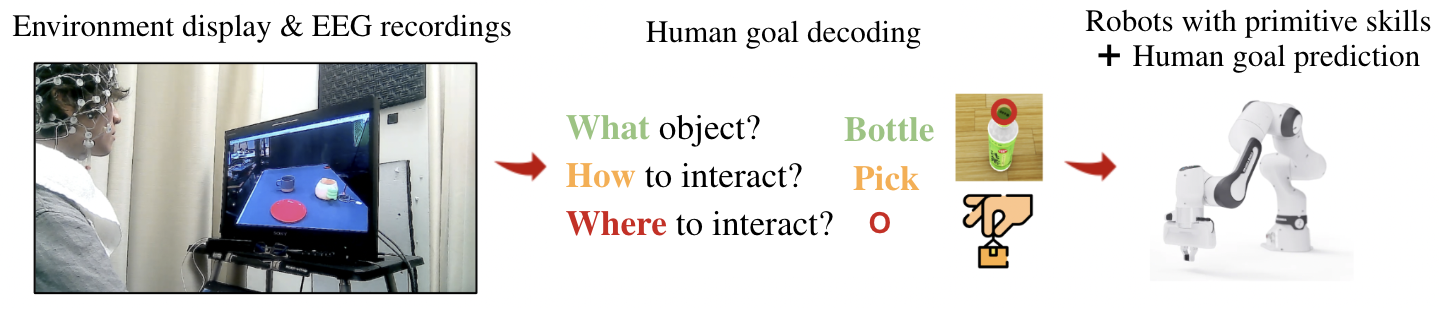

Despite advancements in assistive robotics, intuitive and efficient human control remains a significant challenge. This paper introduces NOIR 2.0: Neural Signal Operated Intelligent Robots for Everyday Activities, a brain-robot interface designed to address this limitation. By integrating enhanced neural decoding algorithms with few-shot robot learning that leverages foundation models for faster adaptation, NOIR 2.0 achieves a 65% reduction in the human time required for task completion. Could this represent a crucial step toward seamless, thought-controlled robotic assistance for daily life?

Decoding Intent: Bridging the Gap Between Thought and Action

Historically, directing a robotic system demanded meticulously detailed programming, a process akin to writing a comprehensive instruction manual for every conceivable action. This approach, while functional, created a significant disconnect between human intention and robotic response, hindering truly intuitive interaction. Each movement, each task, required explicit commands, limiting the robot’s ability to adapt to unforeseen circumstances or respond fluidly to dynamic environments. The inherent rigidity of this control method proved a considerable barrier, demanding substantial effort and expertise to translate even simple human desires into actionable robotic behaviors, ultimately restricting the potential for seamless human-robot collaboration.

The ambition to directly translate thought into action, by decoding human intent from brain activity, promises a profoundly intuitive interface between humans and machines. However, realizing this potential is fraught with technical hurdles. Acquiring meaningful signals requires sophisticated neuroimaging techniques – such as electroencephalography (EEG) or functional magnetic resonance imaging (fMRI) – each with limitations in spatial resolution, temporal accuracy, or practicality for real-world application. Furthermore, interpreting these complex neural patterns presents a significant computational challenge; the brain’s signals are inherently noisy, variable between individuals, and often subtle, demanding advanced signal processing and machine learning algorithms to discern underlying intent with sufficient reliability and speed. Successfully navigating these challenges is crucial for creating Brain-Robot Interfaces that are not only functional, but also robust, adaptable, and truly seamless for the user.

Existing Brain-Robot Interfaces (BRIs) often falter when confronted with the unpredictable nature of real-world tasks and individual human variation. Traditional systems, meticulously calibrated for specific actions, perform worse when users attempt novel movements or when their cognitive states change, for example through fatigue or shifting attention. This lack of robustness stems from the difficulty of consistently extracting reliable control signals from the complex and noisy data generated by the brain. Furthermore, current BRIs typically require extensive recalibration for each new task, hindering their adaptability and limiting their potential for truly intuitive, long-term human-robot collaboration. The inherent variability in brain signals, coupled with the challenge of accounting for user-specific differences and changing conditions, presents a significant obstacle to widespread BRI adoption.

NOIR 2.0 represents a significant advancement in Brain-Robot Interface (BRI) technology, engineered to dramatically improve the efficiency of human-robot collaboration. This next-generation system addresses the limitations of earlier designs by focusing on robust signal acquisition and adaptable interpretation of human intent. Rigorous testing demonstrates the efficacy of this approach, with users completing tasks 45.97% faster overall, and crucially, experiencing a 65.11% reduction in the time they actively spend engaged in those tasks. These results suggest that NOIR 2.0 not only accelerates task completion, but also minimizes human effort, paving the way for more intuitive and effective partnerships between humans and robotic systems.

A Multi-Modal Decoding Pipeline: Extracting Command from Neural Signals

NOIR 2.0 utilizes Electroencephalography (EEG) as its primary input modality to capture brain activity. EEG is a non-invasive neuroimaging technique that measures electrical activity in the brain using electrodes placed on the scalp. These electrodes detect voltage fluctuations resulting from ionic current flows within the neurons of the brain. The resulting EEG signals are then digitized and processed to extract relevant features for decoding user intent. Because EEG is non-invasive, it allows for real-time monitoring of brain activity without requiring surgical implantation, making it suitable for broad applicability and user comfort.

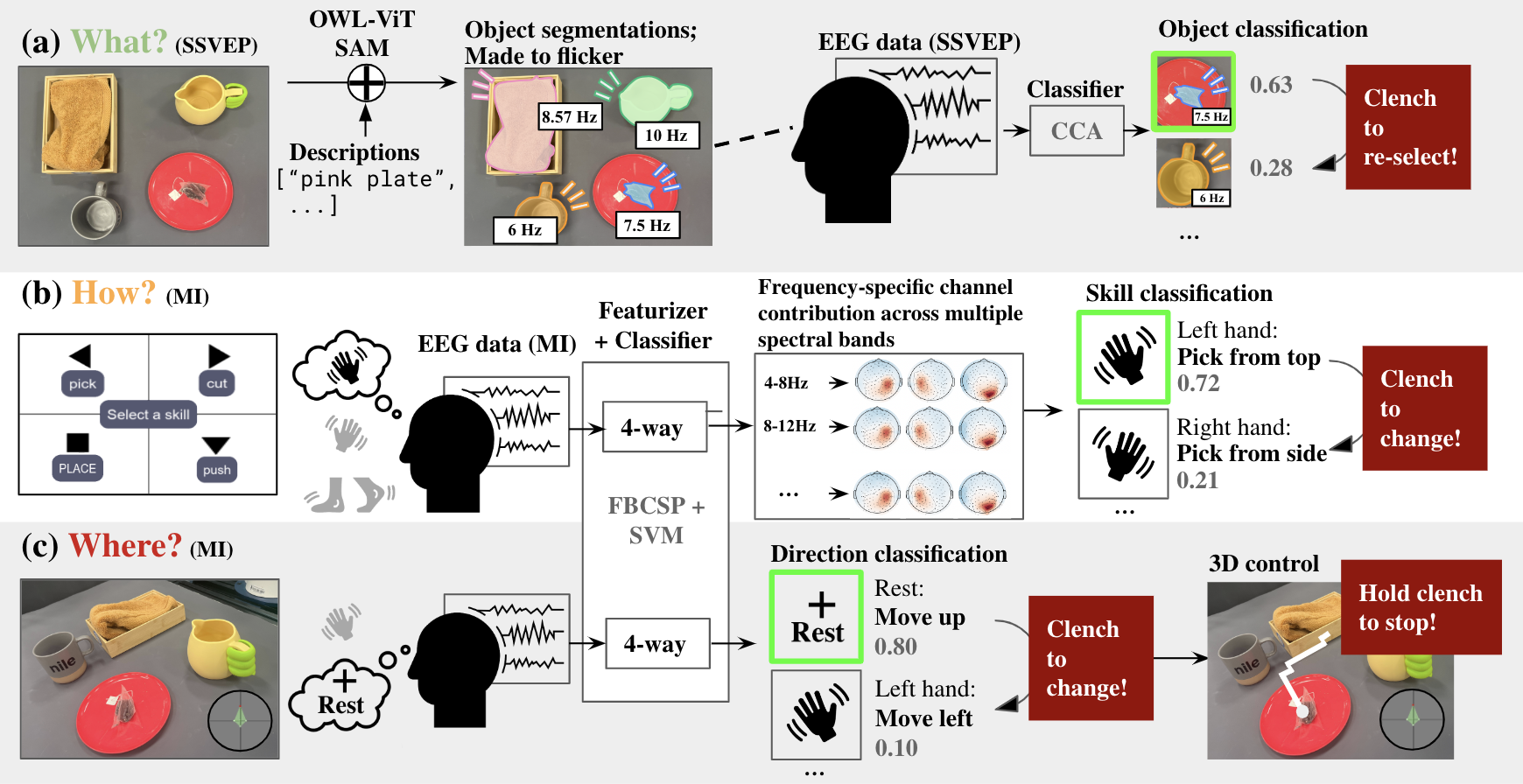

NOIR 2.0 utilizes two distinct neurophysiological signals for user input: Motor Imagery (MI) and Steady-State Visually Evoked Potential (SSVEP). MI detects intended actions through analysis of brain activity associated with imagining movements, without actual physical execution. Simultaneously, SSVEP detects user selections based on brain responses to flickering visual stimuli; different frequencies of flicker correspond to different selectable objects. By combining these two modalities, the system can interpret both internally generated commands – such as imagined hand gestures – and externally prompted selections, offering a versatile input method suitable for a range of applications.

Motor Imagery decoding within NOIR 2.0 employs a two-stage signal processing pipeline. Initially, the Filter-Bank Common Spatial Pattern (FBCSP) algorithm extracts discriminative features from the raw EEG data. FBCSP utilizes bandpass filters applied across multiple frequency bands, followed by the Common Spatial Pattern algorithm to maximize the variance of EEG signals related to imagined movements while suppressing noise. The resulting feature vectors are then inputted into a Support Vector Machine (SVM) classifier, trained to categorize specific motor imagery tasks – such as imagining left or right hand movements. The SVM identifies the intended action based on the extracted features, providing a decoded output representing user intent.
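The feature-extraction stage described above can be sketched roughly as follows. This is a simplified filter-bank CSP on synthetic data, not the NOIR 2.0 implementation: the FFT-based band-pass filter, the band edges, the channel count, and all function names are assumptions chosen for illustration, and the mutual-information feature selection used in full FBCSP is omitted. The resulting feature matrix would then be passed to an SVM classifier (for example scikit-learn's `SVC`), which is left out here to keep the sketch dependent on NumPy only.

```python
import numpy as np

def bandpass_fft(trials, fs, low, high):
    """Crude band-pass: zero every FFT bin outside [low, high] Hz.
    trials has shape (n_trials, n_channels, n_samples)."""
    n_samples = trials.shape[-1]
    freqs = np.fft.rfftfreq(n_samples, d=1.0 / fs)
    spectrum = np.fft.rfft(trials, axis=-1)
    spectrum[..., (freqs < low) | (freqs > high)] = 0.0
    return np.fft.irfft(spectrum, n=n_samples, axis=-1)

def mean_covariance(trials):
    """Average trace-normalized spatial covariance over trials."""
    covs = []
    for trial in trials:
        cov = trial @ trial.T
        covs.append(cov / np.trace(cov))
    return np.mean(covs, axis=0)

def fit_csp(trials_a, trials_b, n_pairs=1):
    """Return 2 * n_pairs spatial filters (rows) separating class A from class B."""
    cov_a, cov_b = mean_covariance(trials_a), mean_covariance(trials_b)
    # Whiten the composite covariance, then diagonalize class A in that space.
    evals, evecs = np.linalg.eigh(cov_a + cov_b)
    whiten = evecs @ np.diag(evals ** -0.5) @ evecs.T
    _, basis = np.linalg.eigh(whiten @ cov_a @ whiten.T)
    filters = basis.T @ whiten  # rows sorted by ascending class-A variance
    pick = list(range(n_pairs)) + list(range(-n_pairs, 0))
    return filters[pick]

def log_variance_features(trials, filters):
    """Project trials through the filters and take normalized log-variance."""
    projected = np.einsum("fc,tcs->tfs", filters, trials)
    var = projected.var(axis=-1)
    return np.log(var / var.sum(axis=1, keepdims=True))

def fit_filter_bank(trials_a, trials_b, fs, bands):
    """Fit one set of CSP filters per frequency band."""
    return [(low, high, fit_csp(bandpass_fft(trials_a, fs, low, high),
                                bandpass_fft(trials_b, fs, low, high)))
            for low, high in bands]

def filter_bank_features(trials, bank, fs):
    """Concatenate the CSP log-variance features from every band."""
    feats = [log_variance_features(bandpass_fft(trials, fs, low, high), filters)
             for low, high, filters in bank]
    return np.concatenate(feats, axis=1)

def make_trials(rng, n_trials, active_channel, fs=128, n_samples=256, n_channels=4):
    """Synthetic stand-in for EEG: noise plus a 10 Hz rhythm on one channel."""
    t = np.arange(n_samples) / fs
    trials = rng.normal(0.0, 1.0, size=(n_trials, n_channels, n_samples))
    trials[:, active_channel, :] += 3.0 * np.sin(2 * np.pi * 10.0 * t)
    return trials

rng = np.random.default_rng(0)
left = make_trials(rng, 20, active_channel=0)    # stand-in for "imagine left hand"
right = make_trials(rng, 20, active_channel=1)   # stand-in for "imagine right hand"
bands = [(8.0, 12.0), (12.0, 30.0)]              # assumed bands, not from the paper
bank = fit_filter_bank(left, right, fs=128, bands=bands)
feats_left = filter_bank_features(left, bank, fs=128)
feats_right = filter_bank_features(right, bank, fs=128)
```

Each trial ends up as one row with two features per band. In the 8 to 12 Hz band the two classes land on opposite sides of those features, which is the separation a downstream classifier relies on.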

Steady-State Visually Evoked Potentials (SSVEPs) are decoded using Canonical Correlation Analysis (CCA). This technique analyzes the correlation between the brain’s electroencephalographic (EEG) signal and the frequencies of flickering visual stimuli presented to the user. Each stimulus flickers at a unique, distinguishable frequency; CCA identifies which frequency, and therefore which corresponding visual object, the user is focusing on. Testing demonstrates an 88% decoding accuracy for SSVEP signals at the time the task is performed, indicating a high degree of reliability in translating visual focus into a detectable brain signal.
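As a rough illustration of the CCA step, the sketch below scores a synthetic multi-channel signal against sine and cosine references at each candidate flicker frequency and picks the best match. The sampling rate, candidate frequencies, number of harmonics, and function names are assumptions made for the example, not values reported for NOIR 2.0. The largest canonical correlation is computed with a standard QR-plus-SVD formulation.

```python
import numpy as np

def reference_signals(freq, fs, n_samples, n_harmonics=2):
    """Sine/cosine templates at the flicker frequency and its harmonics."""
    t = np.arange(n_samples) / fs
    refs = []
    for h in range(1, n_harmonics + 1):
        refs.append(np.sin(2 * np.pi * h * freq * t))
        refs.append(np.cos(2 * np.pi * h * freq * t))
    return np.stack(refs, axis=1)  # shape (n_samples, 2 * n_harmonics)

def max_canonical_correlation(x, y):
    """Largest canonical correlation between the column spaces of x and y."""
    x = x - x.mean(axis=0)
    y = y - y.mean(axis=0)
    qx, _ = np.linalg.qr(x)
    qy, _ = np.linalg.qr(y)
    return float(np.linalg.svd(qx.T @ qy, compute_uv=False)[0])

def classify_ssvep(eeg, fs, candidate_freqs):
    """eeg has shape (n_samples, n_channels). Returns (best frequency, all scores)."""
    scores = {
        f: max_canonical_correlation(eeg, reference_signals(f, fs, eeg.shape[0]))
        for f in candidate_freqs
    }
    return max(scores, key=scores.get), scores

# Synthetic example: the "user" attends a 12 Hz stimulus.
rng = np.random.default_rng(1)
fs, n_samples = 250, 500
t = np.arange(n_samples) / fs
candidate_freqs = [8.0, 10.0, 12.0, 15.0]   # one assumed frequency per object
eeg = rng.normal(0.0, 1.0, size=(n_samples, 3))
eeg += np.outer(np.sin(2 * np.pi * 12.0 * t + 0.4), [1.0, 0.8, 0.6])
detected, scores = classify_ssvep(eeg, fs, candidate_freqs)
print(detected)
```

Because each object is tied to one flicker frequency, the detected frequency maps directly to the object the user is looking at.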

Intelligent Task Execution: Learning Through Demonstration

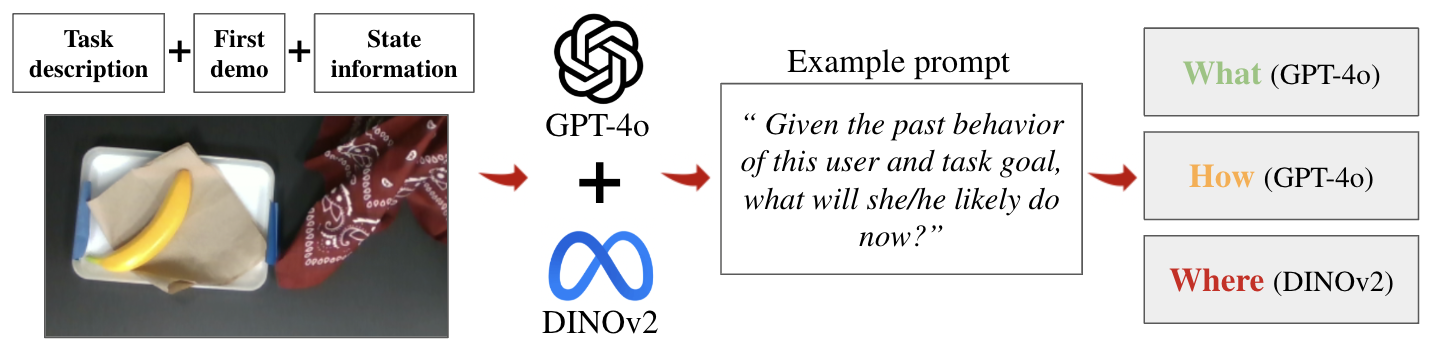

Retrieval-Based Imitation Learning forms the core of the system’s learning process, enabling the robot to acquire new skills by analyzing demonstrations of desired actions. This approach involves storing a database of previously performed tasks and their corresponding robot states and actions. When presented with a new task, the system retrieves the most similar demonstrated examples from this database. The robot then adapts these retrieved actions to its current state, allowing it to execute the task without explicit programming. This contrasts with traditional robot control methods and facilitates rapid skill acquisition through observation and replication of human or other demonstrated behaviors.
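The retrieval step can be pictured as a nearest-neighbor lookup over stored demonstrations. The sketch below is a minimal version under stated assumptions: the embeddings are made-up four-dimensional vectors, the action labels are hypothetical, and cosine similarity is assumed as the similarity measure. The article does not specify how NOIR 2.0 represents or compares demonstrations.

```python
import numpy as np

def cosine_similarity(query, bank):
    """Cosine similarity between one query vector and each row of bank."""
    query = query / np.linalg.norm(query)
    bank = bank / np.linalg.norm(bank, axis=1, keepdims=True)
    return bank @ query

def retrieve(query_embedding, demo_embeddings, demo_actions, k=1):
    """Return the k most similar stored demonstrations as (action, similarity)."""
    sims = cosine_similarity(query_embedding, demo_embeddings)
    top = np.argsort(sims)[::-1][:k]
    return [(demo_actions[i], float(sims[i])) for i in top]

# Hypothetical demonstration database: one embedding and one action per demo.
demo_embeddings = np.array([
    [1.0, 0.0, 0.0, 0.0],
    [0.0, 1.0, 0.0, 0.0],
    [0.0, 0.0, 1.0, 1.0],
])
demo_actions = ["pick_from_top", "pick_from_side", "push_forward"]

# Embedding of the current scene (illustrative values only).
query = np.array([0.1, 0.9, 0.05, 0.0])
retrieved = retrieve(query, demo_embeddings, demo_actions, k=2)
print(retrieved[0][0])
```

A real system would adapt the retrieved action to the current state rather than replay it verbatim, as the paragraph above notes.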

The R3M model serves as the foundation of the imitation learning component. R3M is a visual representation pre-trained on a large dataset of human video, which gives the system general-purpose features for robotic manipulation. This pre-training significantly accelerates learning on new tasks by providing a strong starting representation. Subsequent fine-tuning with task-specific data further refines the model’s performance, adapting the generalized knowledge to the nuances of the current objective. Starting from a pre-trained base and then specializing with targeted data reduces the amount of data required for each new task and improves sample efficiency compared to training from scratch.

NOIR 2.0 utilizes GPT-4o to translate high-level, decoded user intent into actionable robotic behaviors by performing both state understanding and task retrieval. The system leverages GPT-4o’s natural language processing capabilities to interpret the current environmental state, as perceived through sensor data, and subsequently identify the appropriate task or sequence of actions to fulfill the user’s request. This integration facilitates a crucial link between abstract goals and the specific parameters required to control the Franka Emika Panda robotic arm, allowing the system to dynamically select and execute tasks based on contextual awareness. The model effectively functions as an intermediary, ensuring the robot understands what needs to be done, given the current situation, before initiating any physical actions.

The system uses DINOv2 for semantic keypoint detection to support parameter prediction during complex manipulation tasks, reaching 79% parameter prediction accuracy at task execution time. The predicted parameters are then used to control the Franka Emika Panda robotic arm via an Operational Space Controller, which commands precise, coordinated movements in task space rather than joint space. This approach enables the robot to perform manipulations based on visual input and predicted parameters, increasing the robustness and adaptability of the system.
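To make the task-space versus joint-space distinction concrete, here is a simplified sketch on a planar two-link arm rather than the seven-joint Panda. It computes a task-space PD force on the end effector and maps it to joint torques through the Jacobian transpose. The link lengths, gains, and function names are assumptions, and the sketch omits the task-space inertia weighting and dynamics compensation that a full operational space controller includes.

```python
import numpy as np

def forward_kinematics(q, l1=1.0, l2=1.0):
    """End-effector (x, y) position of a planar two-link arm."""
    return np.array([
        l1 * np.cos(q[0]) + l2 * np.cos(q[0] + q[1]),
        l1 * np.sin(q[0]) + l2 * np.sin(q[0] + q[1]),
    ])

def jacobian(q, l1=1.0, l2=1.0):
    """Partial derivatives of the end-effector position w.r.t. joint angles."""
    s1, c1 = np.sin(q[0]), np.cos(q[0])
    s12, c12 = np.sin(q[0] + q[1]), np.cos(q[0] + q[1])
    return np.array([
        [-l1 * s1 - l2 * s12, -l2 * s12],
        [l1 * c1 + l2 * c12, l2 * c12],
    ])

def task_space_torque(q, dq, x_target, kp=50.0, kd=10.0):
    """PD force in task space, mapped to joint torques via the Jacobian transpose."""
    j = jacobian(q)
    x = forward_kinematics(q)
    dx = j @ dq                                  # end-effector velocity
    force = kp * (x_target - x) - kd * dx        # desired task-space force
    return j.T @ force

q = np.array([0.3, 0.8])        # joint angles in radians (illustrative)
dq = np.zeros(2)                # arm at rest

# At the target, no corrective torque is requested.
tau_goal = task_space_torque(q, dq, forward_kinematics(q))

# Target 10 cm above the current position: torque follows J^T * force.
tau_move = task_space_torque(q, dq, forward_kinematics(q) + np.array([0.0, 0.1]))
print(tau_goal, tau_move)
```

The controller is told where the hand should go, not which angle each joint should take, which is why predicted keypoint positions can be fed to it directly.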

Guaranteed Safety & Formal Verification: Establishing Reliable Operation

To ensure operational safety, NOIR 2.0 integrates electromyography (EMG) as a crucial interruption system. This technology monitors the user’s muscle activity, specifically looking for signals that deviate from the intended motor commands. If unintended muscle activation is detected – perhaps indicating an attempt to override the robot’s actions or a sudden loss of control – the system immediately halts robotic movement. This proactive safety measure minimizes the risk of unintended consequences and provides a critical layer of protection during brain-robot interaction, fostering a more secure and reliable user experience. The EMG system acts as a real-time safeguard, effectively creating a ‘break’ in the control loop when unexpected biological signals arise.
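One simple way such an interrupt could work is a threshold on the energy of a short EMG window relative to a resting baseline. The article does not state the detection rule NOIR 2.0 uses, so the RMS measure, the threshold ratio, and the synthetic signals below are all assumptions for illustration.

```python
import numpy as np

def rms(window):
    """Root-mean-square amplitude of one EMG window."""
    return float(np.sqrt(np.mean(np.square(window))))

def should_interrupt(emg_window, baseline_rms, ratio=3.0):
    """Flag the window when its RMS exceeds `ratio` times the resting baseline."""
    return rms(emg_window) > ratio * baseline_rms

rng = np.random.default_rng(2)
rest_calibration = rng.normal(0.0, 0.05, size=1000)  # synthetic relaxed muscle
baseline = rms(rest_calibration)

rest_window = rng.normal(0.0, 0.05, size=200)        # user stays relaxed
clench_window = rng.normal(0.0, 0.5, size=200)       # user tenses the muscle

halt_on_rest = should_interrupt(rest_window, baseline)
halt_on_clench = should_interrupt(clench_window, baseline)
print(halt_on_rest, halt_on_clench)
```

In a running system this check would be evaluated on every incoming window, and a positive result would stop the arm before the next command is sent.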

The robust operation of NOIR 2.0 hinges on a rigorous approach to task definition, achieved through the use of the BEHAVIOR Domain Definition Language (BDDL). This formal specification allows developers to define the intended behavior of robotic actions in logical terms, moving beyond traditional coding, which can be ambiguous and prone to errors. By expressing tasks in a precise, machine-readable format, BDDL facilitates formal verification – a process where the system’s behavior is mathematically proven to meet specified safety and performance criteria. This isn’t merely testing; it’s a demonstration, before deployment, that the robot will behave predictably, even when faced with unforeseen circumstances or complex environmental interactions. The benefit extends beyond simple error prevention; it allows for the optimization of task execution and the establishment of guaranteed performance bounds, critical for applications demanding reliability and safety.

NOIR 2.0’s architecture prioritizes a collaborative dynamic between human intention and robotic action through hierarchical shared autonomy. This approach allows users to specify what they want the robot to achieve – a high-level goal like “grasp the mug” – while the system independently manages how that goal is executed, navigating the complexities of motor control and environmental interaction. By offloading detailed execution to the robot, the system reduces cognitive burden on the user and allows them to remain engaged at a strategic level. This division of labor isn’t about relinquishing control, but rather about distributing it intelligently, fostering a sense of partnership and building user trust in the Brain-Robot Interface’s ability to reliably translate thought into action.

The integration of robust safety protocols and formal verification techniques positions NOIR 2.0 as a dependable Brain-Robot Interface. By combining preemptive safety mechanisms – such as halting operation upon detection of unintended muscular activity via electromyography – with the rigorous predictability ensured by the BEHAVIOR Domain Definition Language (BDDL), the system minimizes operational risks and maximizes user confidence. Performance has also improved measurably, with motor imagery skill selection accuracy increasing from 42% to 61% compared to the initial NOIR system. This substantial gain underscores the efficacy of prioritizing both safety and formal guarantees in the development of advanced brain-computer interfaces, paving the way for more intuitive and reliable human-robot collaboration.

The pursuit of seamless human-robot interaction, as demonstrated by NOIR 2.0’s advancements in neural decoding, echoes a fundamental principle of computational elegance. Brian Kernighan aptly observed, “Debugging is twice as hard as writing the code in the first place. Therefore, if you write the code as cleverly as possible, you are, by definition, not smart enough to debug it.” This resonates with the complexities inherent in translating neural signals into robotic actions; a convoluted system, however ingeniously implemented, will inevitably suffer from inaccuracies. NOIR 2.0’s focus on robust and provable neural decoding, enabling faster and more accurate control, prioritizes clarity and reliability over purely clever solutions, a testament to the enduring power of mathematical purity in engineering.

What Remains to be Proven?

The advancement of NOIR 2.0, while demonstrating improved performance in decoding neural signals for robotic control, merely shifts the locus of the fundamental problem. The core challenge is not simply one of signal fidelity, but of establishing a provably robust mapping between the inherently noisy and high-dimensional space of cortical activity and the continuous demands of physical manipulation. Current metrics, centered on task completion rates, offer insufficient guarantees. A formal analysis of the system’s error bounds, considering the inevitable ambiguity in neural decoding, remains conspicuously absent.

Future iterations must address the asymptotic behavior of the learning algorithms. While performance improvements are reported, the rate of convergence and, crucially, its dependence on the user require rigorous characterization. The implicit assumption of stationarity in neural signals is particularly suspect. A dynamically adaptive system, capable of tracking and compensating for changes in cortical state and of proving its continued validity, represents a necessary, if difficult, progression.

Ultimately, the pursuit of truly intuitive control demands a departure from purely kinematic interfaces. The focus should shift towards decoding intent, rather than movement. This necessitates a deeper engagement with the computational principles underlying motor planning and a formalization of the information-theoretic limits of neural decoding. The current framework, however elegant in its engineering, remains, at its heart, an exercise in applied empiricism.

Original article: https://arxiv.org/pdf/2511.20848.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2025-11-27 12:06