Author: Denis Avetisyan

New research reveals that large language models fundamentally differ from humans in their ability to establish common ground during conversations.

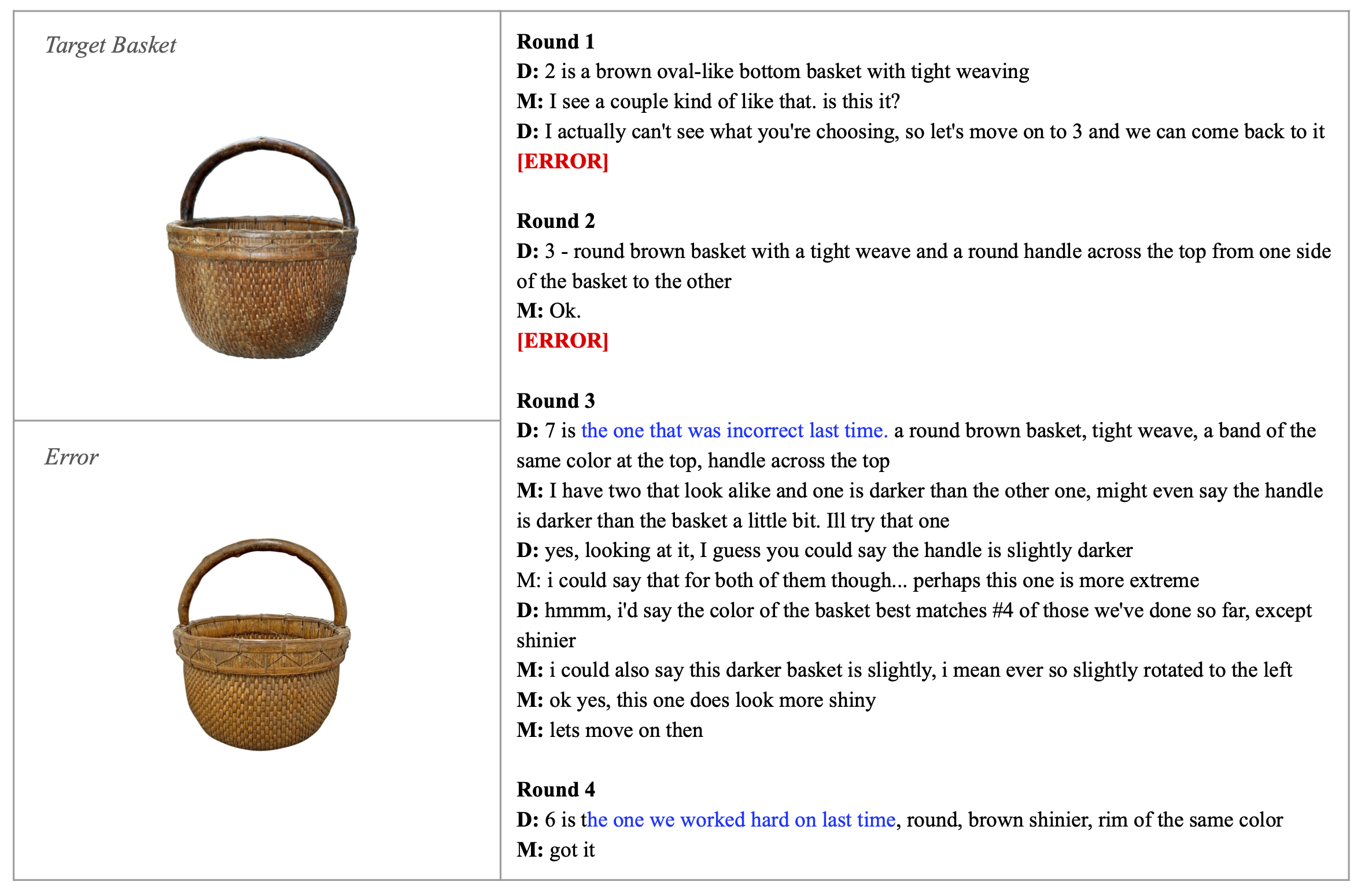

![Unlike human conversational partners who rapidly establish shared reference through lexical entrainment, this artificial intelligence pairing consistently fails to form efficient conceptual pacts, as evidenced by the verbose and repetitive descriptions-such as detailing the “[latex]picnic\,basket[/latex]” with identical exhaustiveness across multiple rounds-despite consistently identifying the correct target object, with one instance exhibiting a seemingly unrelated description yet still achieving correct selection.](https://arxiv.org/html/2601.19792v1/tables-figures/AI-AI_example.png)

This study demonstrates that large language models exhibit significant deficits in grounding and pragmatic competence within referential communication tasks, hindering effective human-AI collaboration.

Despite rapid advances in generative AI, a fundamental challenge remains in achieving truly collaborative human-AI interaction. This is explored in ‘LVLMs and Humans Ground Differently in Referential Communication’, which investigates how large vision-language models (LVLMs) and humans establish shared understanding during interactive tasks. Our referential communication experiments reveal that, unlike humans, LVLMs struggle to dynamically build ‘common ground’ and exhibit limited pragmatic competence when resolving ambiguous references. This raises a crucial question: can we equip AI agents with the interactive abilities necessary to seamlessly coordinate with humans in complex, real-world scenarios?

The Foundations of Shared Meaning: Establishing Common Ground

The very essence of successful communication resides in the establishment of common ground – the shared knowledge, beliefs, and assumptions that partners bring to an interaction. This isn’t merely about transmitting information, but about creating a mutual context for meaning. Before any message is truly received, communicators implicitly negotiate this shared understanding, ensuring that references, concepts, and even intentions are recognized similarly by all involved. Without this foundation, even seemingly straightforward exchanges can falter, as interpretations diverge and the potential for miscommunication dramatically increases. The strength of this common ground directly correlates with the efficiency and accuracy of the interaction, facilitating seamless collaboration and minimizing the cognitive load required to decipher meaning.

Effective communication isn’t simply about exchanging words; it relies heavily on unspoken agreements about how information is conveyed. These principles, formalized by philosopher Paul Grice as his Maxims of Conversation, dictate that participants should be truthful, relevant, clear, and concise. Adherence to these maxims allows listeners to infer not just the literal meaning, but also the speaker’s intended meaning, streamlining the exchange. For instance, the Maxim of Relevance ensures contributions are pertinent to the current topic, while the Maxim of Quantity discourages excessive or insufficient information. When these principles are consistently followed, communication becomes remarkably efficient, as listeners can confidently rely on the speaker to be cooperative and contribute meaningfully to the ongoing dialogue. Violations, even unintentional ones, can introduce ambiguity and necessitate clarification, hindering the flow of information and potentially leading to misinterpretations.

The success of any communicative exchange fundamentally relies on a process known as grounding references – essentially, ensuring both parties acknowledge the same entities and concepts being discussed. This isn’t merely about naming things; it involves a continuous, often subconscious, verification that the speaker and listener share a mutual understanding of what is being referenced, whether a physical object, an abstract idea, or even a previously mentioned topic. This confirmation can occur through explicit cues – like confirming “Do you see it?” – or implicitly, through monitoring the listener’s responses and adjusting communication accordingly. Without this iterative process of establishing shared reference, even seemingly simple statements can become ambiguous, leading to misinterpretations and breakdowns in effective interaction. The ability to reliably ground references is, therefore, a cornerstone of successful communication, enabling the efficient and accurate transmission of information.

The seamless flow of information relies heavily on a ‘Grounding Criterion’ – a shared assessment of what is already known and understood by all participants. When this criterion falters, communication rapidly degrades; ambiguous references, unacknowledged assumptions, and mismatched expectations quickly accumulate. This breakdown isn’t merely a matter of politeness, but a fundamental impediment to efficient information exchange; increased effort is then required for clarification, repetition, and error correction. Consequently, tasks become protracted, collaborative problem-solving is hindered, and the potential for misinterpretations escalates – highlighting that a firm foundation of mutual recognition is paramount for effective communication, regardless of the medium.

Modeling Grounding in Action: The Referential Communication Task

The Referential Communication Task is a methodology used to study collaborative dialogue and the process of grounding, where participants work together to identify target objects from a set of distractors. Typically, a Sender describes a target object to a Receiver, who must then identify it. This task structure necessitates a back-and-forth exchange, allowing researchers to observe how participants request and provide clarification, ultimately converging on a shared understanding of the referent. Variations of the task include different visual displays, object sets, and communication channels, but the core principle remains consistent: evaluating the efficiency and characteristics of interactive reference resolution.

Lexical entrainment, central to successful referential communication, describes the process by which communication partners align their linguistic choices during interaction. This convergence isn’t simply about using the same words; it involves adopting shared referring expressions – the specific labels or descriptions used to identify objects. The degree of entrainment is measurable by tracking the co-occurrence of these expressions between partners; higher co-occurrence indicates stronger entrainment. This alignment reduces ambiguity and cognitive load, facilitating efficient communication, as partners increasingly predict each other’s referring expressions based on established patterns within the ongoing interaction. Failure to achieve lexical entrainment results in increased communication effort and a higher likelihood of misunderstandings.

Task effort, as measured in the referential communication task, correlates inversely with the efficiency of shared understanding. Specifically, increased task effort – quantified by metrics such as the number of clarification requests, total dialogue length, or time taken to successfully identify objects – indicates a less efficient grounding process. Higher effort suggests participants are struggling to establish shared reference, requiring more communicative turns to resolve ambiguities or misinterpretations. Conversely, lower task effort implies quicker convergence on referring expressions and a more streamlined collaborative process, demonstrating efficient grounding and shared understanding between participants.

Human-Human Interaction serves as the foundational benchmark for evaluating performance in the Referential Communication Task. Prior to assessing any artificial intelligence system, paired human participants complete the task, establishing quantifiable metrics for success – including completion time, number of corrections required, and overall accuracy. These metrics define the expected level of performance and provide a comparative standard against which AI systems are measured. This approach allows for a direct assessment of how closely an AI system replicates human communicative efficiency and effectiveness in a collaborative referential task, and highlights areas where AI performance deviates from established human baselines.

Assessing AI Grounding Capabilities: LVLMs in the Loop

Large Visual-Language Models (LVLMs), specifically OpenAI’s GPT-5.2, were implemented as artificial agents within the Referential Communication Task. This task required paired agents – in this case, two instances of GPT-5.2 – to engage in dialogue where one agent describes a visual target and the other attempts to identify it. The utilization of GPT-5.2 as an AI partner allowed for quantitative assessment of its grounding capabilities, specifically its ability to connect language with visual information, and to evaluate performance changes over iterative communicative exchanges. This approach facilitated a comparative analysis against human performance in the same task, providing insights into the current limitations of AI in visually grounded communication.

Large Vision-Language Models (LVLMs) such as OpenAI GPT-5.2 acquire their grounding abilities – the capacity to connect language with visual information – through pre-training on extensive datasets. A prominent example is the COCO Dataset, which contains images paired with corresponding captions and object annotations. This dataset enables the models to learn statistical correlations between visual features and linguistic descriptions. However, the composition and biases present within the COCO Dataset, including the distribution of objects, scenes, and captioning styles, can directly influence the model’s subsequent performance on grounding tasks and potentially introduce limitations in generalizing to novel or under-represented scenarios. The reliance on these datasets means the model’s grounding is not necessarily based on genuine understanding, but rather on learned associations from the training data.

Evaluation of referring expression alignment utilizes several quantitative metrics. SBERT Cosine Similarity assesses the semantic similarity between the generated referring expression and the ground truth, producing a value between -1 and 1, where higher values indicate greater similarity. The Jaccard Index measures the overlap between the sets of words comprising the generated and ground truth expressions, calculated as the size of the intersection divided by the size of the union. Finally, ROUGE-L, a metric commonly used in summarization tasks, evaluates the longest common subsequence between the generated and reference expressions, providing a measure of recall-oriented overlap and capturing sentence-level similarity. These metrics collectively provide a comprehensive assessment of how well the AI’s referring expressions align with human-defined references.

In the Referential Communication Task, human participants demonstrated increasing accuracy, achieving over 90% by Round 4, indicative of improved collaborative understanding. Conversely, AI pairs utilizing the LVLM, beginning with 100% accuracy in Round 1, exhibited a consistent decline in performance across subsequent rounds. This trend suggests a fundamental limitation in the AI’s ability to leverage iterative interaction for improved grounding; rather than refining communication strategies based on feedback, the model’s performance degraded, highlighting a critical difference in learning and adaptation compared to human communication partners.

The PhotoBook dataset is a resource specifically designed for evaluating grounding capabilities within visually grounded dialogues. It comprises a collection of images paired with descriptive captions and subsequent referring expressions intended to identify specific objects or regions within those images. Unlike generic image datasets, PhotoBook is structured to simulate a collaborative scenario where participants engage in a turn-taking dialogue to locate items based on increasingly specific descriptions. This allows for the assessment of an AI model’s ability to not only understand visual content but also to maintain context and refine its referring expressions over multiple conversational turns, mirroring the complexities of human communication involving visual references.

Towards More Effective AI Communication

Recent investigations into Large Visual Language Models (LVLMs) reveal a compelling, though incomplete, progression towards truly effective communication. While these models demonstrate an ability to process and generate language grounded in visual information – exhibiting performance that initially suggests near-human capabilities – a discernible gap remains when contrasted with the nuanced interactions observed between people. Studies focusing on collaborative tasks show that humans consistently achieve higher levels of shared understanding with less effort, exhibiting smoother coordination and faster resolution of ambiguity. This suggests that current LVLMs, despite their technical sophistication, struggle with the subtle cues, common-ground assumptions, and adaptive strategies that characterize human communication, indicating a need for further research into the mechanisms that underpin robust and efficient information exchange.

Research indicates that, despite advancements in large language models, achieving comparable levels of shared understanding – known as grounding – with human communication necessitates significantly greater computational effort from artificial intelligence. Studies examining collaborative task completion reveal that AI pairs, while capable of performing tasks, demonstrate a consistently higher ‘Task Effort’ score than their human counterparts. This suggests that AI systems currently rely on more extensive processing to interpret ambiguous language, maintain context, and resolve miscommunications, effectively requiring more ‘cognitive resources’ to reach the same level of mutual understanding that humans achieve with relative ease. This disparity highlights a crucial area for development, as reducing the computational burden associated with grounding could lead to more efficient, robust, and ultimately, more natural AI communication systems.

Studies of collaborative dialogue reveal a crucial distinction in how humans and artificial intelligence achieve shared understanding. Human pairs consistently demonstrated increasing lexical overlap – the extent to which their word choices converged – throughout a task, a phenomenon known as ‘lexical entrainment’ indicative of successful grounding and mutual adaptation. Conversely, AI pairs exhibited a high degree of lexical overlap from the outset, but this remained relatively constant, failing to increase over time. This stagnation suggests that while AI can initially establish some common linguistic ground, it lacks the dynamic, adaptive capacity to refine communication and build upon that foundation in the same way humans do, potentially hindering deeper comprehension and collaborative problem-solving.

Successful communication hinges on ‘lexical entrainment’ – the subconscious alignment of language between individuals – and recent research indicates this process differs significantly between humans and artificial intelligence. Investigations into paired communication reveal that human partners progressively refine their vocabulary, converging on shared terms and phrases as understanding deepens; this dynamic adaptation isn’t yet mirrored in current AI systems, which often exhibit consistently high, but unchanging, lexical overlap. Therefore, designing AI communication systems that actively learn and adapt their language use – mirroring human patterns of convergence – promises to yield more efficient and robust interactions. By pinpointing the cognitive mechanisms underlying this human ability to establish shared meaning through language, developers can engineer AI agents capable of not just transmitting information, but of truly collaborating through increasingly streamlined and intuitive communication.

Establishing a shared understanding between agents relies heavily on consistent reference – a phenomenon researchers term the ‘Conceptual Pact’. Investigations suggest that bolstering an AI’s ability to negotiate and maintain these pacts – essentially, agreeing on how to talk about objects – significantly improves communication efficiency. Unlike humans who intuitively adapt referential terms based on context and partner understanding, current AI models often struggle with this dynamic adjustment. By equipping AI with mechanisms to proactively propose, confirm, and revise these conceptual agreements, researchers anticipate a substantial leap in the robustness and naturalness of AI interactions, moving beyond simple information exchange towards genuine collaborative understanding.

The study reveals a fundamental disconnect between how large language models and humans navigate the nuances of referential communication. While LLMs can process language, they falter when establishing shared understanding – a concept known as common ground. This deficiency underscores a critical limitation in their pragmatic competence. As Robert Tarjan aptly stated, “The key to good programming is the ability to understand the problem.” Similarly, these models demonstrate an inability to truly understand the communicative intent, relying instead on statistical patterns. The pursuit of elegant algorithms, therefore, must extend beyond mere functionality to encompass genuine comprehension and collaborative alignment, mirroring the mathematical purity inherent in successful human interaction.

Where Do We Go From Here?

The demonstrated divergence in grounding strategies between large language models and humans isn’t merely a performance gap; it exposes a fundamental difference in how these systems approach communication. The pursuit of scaled heuristics, while yielding impressive surface-level fluency, appears to sidestep the necessity of establishing genuine common ground. The models excel at mimicking pragmatic competence, but lack the underlying architecture to guarantee it. This work reminds that lexical entrainment, for a human, isn’t a statistical curiosity, but a necessary condition for coordinated action-a point often lost in the rush to benchmark perplexity.

Future research must move beyond assessing whether LLMs can produce referentially successful utterances, and focus on how their internal representations support-or fail to support-the dynamic negotiation of meaning. The focus should shift from generating plausible dialogue to verifying the mathematical properties of the underlying communicative process. Simply achieving higher scores on existing tasks provides little insight if the very foundation of the system remains ungrounded in provable principles.

The challenge, then, is not to build models that appear to understand, but to construct systems where understanding-and, crucially, the ability to demonstrate it-is a formal consequence of the architecture. The current trajectory risks enshrining a fundamentally brittle form of communication, where success is measured by transient empirical results rather than enduring logical consistency.

Original article: https://arxiv.org/pdf/2601.19792.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

See also:

- MLBB x KOF Encore 2026: List of bingo patterns

- Overwatch Domina counters

- eFootball 2026 Jürgen Klopp Manager Guide: Best formations, instructions, and tactics

- 1xBet declared bankrupt in Dutch court

- eFootball 2026 Starter Set Gabriel Batistuta pack review

- Honkai: Star Rail Version 4.0 Phase One Character Banners: Who should you pull

- Brawl Stars Brawlentines Community Event: Brawler Dates, Community goals, Voting, Rewards, and more

- Lana Del Rey and swamp-guide husband Jeremy Dufrene are mobbed by fans as they leave their New York hotel after Fashion Week appearance

- Gold Rate Forecast

- Clash of Clans March 2026 update is bringing a new Hero, Village Helper, major changes to Gold Pass, and more

2026-01-29 03:25