Author: Denis Avetisyan

New research shows that stripping away irrelevant details from symbolic AI explanations can significantly improve how easily people grasp complex systems.

By clustering or removing irrelevant information in Answer Set Programming (ASP) explanations, this work shows that abstraction plays a dual role in neuro-symbolic AI: clustering improves comprehension, while removal reduces cognitive effort.

While artificial intelligence increasingly powers complex systems, translating its reasoning into understandable explanations remains a significant challenge. ‘The Dual Role of Abstracting over the Irrelevant in Symbolic Explanations: Cognitive Effort vs. Understanding’ addresses this by investigating how simplifying symbolic AI explanations affects human comprehension and cognitive load. The study shows that strategically abstracting over irrelevant details, by clustering or removing them, can improve understanding and reduce the mental effort required to follow AI reasoning. Could this nuanced approach to abstraction unlock truly human-centered explainable AI, fostering trust and collaboration with intelligent systems?

The Illusion of Understanding: Why Pattern Recognition Isn’t Enough

Contemporary artificial intelligence systems, especially those built on deep learning architectures, demonstrate remarkable proficiency in perceptual tasks such as image recognition and speech processing. These systems can often surpass human performance in identifying patterns and classifying data with impressive accuracy. However, this strength is often coupled with a significant weakness: a struggle with higher-level reasoning and generalization. While adept at recognizing what is present in data, these AI models frequently falter when asked to understand why something is the case, or to apply learned knowledge to novel situations beyond their training data. This limitation isn’t a matter of processing power, but rather a fundamental difference in approach – current AI largely relies on statistical correlations rather than explicit understanding, hindering its ability to extrapolate and adapt in the way humans do.

The current limitations of artificial intelligence, despite impressive advances in areas like image and speech recognition, arise from a fundamental inability to represent knowledge in a structured, symbolic form and subsequently draw logical inferences. Unlike humans, who readily build internal models of the world and reason about cause and effect, many AI systems operate primarily on patterns within data, lacking the capacity for explicit reasoning. This means that while an AI might accurately identify a cat in an image, it struggles to understand the relationship between a cat, a mouse, and the concept of predation – knowledge that a human possesses implicitly. Without this symbolic representation, AI remains vulnerable to even slight variations in input and struggles with generalization, hindering its ability to solve complex problems requiring abstract thought and planning.

Current artificial intelligence frequently demonstrates remarkable skill in recognizing patterns and processing sensory input, yet often falters when confronted with situations demanding deeper understanding. The crucial next step lies in developing systems capable of moving beyond simply identifying what is present to grasping why it is so. This requires a fundamental shift from purely perceptual algorithms to architectures that incorporate explicit knowledge representation and logical inference. Such a bridge between sensing and reasoning would allow an AI not just to catalogue observations, but to build models of causality, enabling generalization to novel scenarios and fostering a true capacity for problem-solving – effectively, the ability to understand the underlying principles governing the world, rather than merely reacting to its surface features.

Reclaiming Logic: The Architecture of Thought

Symbolic AI utilizes formal logic and knowledge representation techniques – such as ontologies, rule-based systems, and semantic networks – to enable automated reasoning. This approach allows systems to represent facts and relationships as explicit symbols, then apply logical inference rules – like modus ponens or resolution – to derive new knowledge from existing data. Consequently, symbolic AI systems can not only store and retrieve information but also deduce conclusions, explain their reasoning process through traceable inference chains, and make decisions based on logically derived insights. The efficacy of this approach rests on the ability to accurately model domain knowledge in a symbolic format suitable for algorithmic manipulation and inference.
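Deriving new facts by modus ponens and keeping a traceable inference chain can be sketched in a few lines of Python. This is a minimal sketch assuming ground (variable-free) rules written as plain strings; the names `forward_chain` and `explain` and the example facts are hypothetical, and real rule engines add variables, unification, and indexing.

```python
from typing import Dict, FrozenSet, List, Set, Tuple

# A ground rule: (set of premises, conclusion). Facts are plain strings.
Rule = Tuple[FrozenSet[str], str]


def forward_chain(facts: Set[str], rules: List[Rule]) -> Dict[str, List[str]]:
    """Apply modus ponens until nothing new can be derived.

    Returns each known fact mapped to the premises that justified it;
    given facts map to an empty list."""
    derived: Dict[str, List[str]] = {f: [] for f in facts}
    changed = True
    while changed:  # iterate to a fixpoint
        changed = False
        for premises, conclusion in rules:
            if conclusion not in derived and all(p in derived for p in premises):
                derived[conclusion] = sorted(premises)  # one modus ponens step
                changed = True
    return derived


def explain(fact: str, derived: Dict[str, List[str]], depth: int = 0) -> List[str]:
    """Unfold the inference chain behind `fact`, one indented line per step."""
    lines = ["  " * depth + fact]
    for premise in derived[fact]:
        lines.extend(explain(premise, derived, depth + 1))
    return lines


if __name__ == "__main__":
    rules = [
        (frozenset({"cat(tom)"}), "mammal(tom)"),
        (frozenset({"mammal(tom)"}), "animal(tom)"),
    ]
    derived = forward_chain({"cat(tom)"}, rules)
    print("\n".join(explain("animal(tom)", derived)))
```

Run as a script, this prints `animal(tom)`, then `mammal(tom)` and `cat(tom)` at increasing indentation: the conclusion followed by the chain of premises that justifies it.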

Answer Set Programming (ASP) is a declarative programming paradigm centered on describing a problem’s conditions and constraints rather than spelling out a solution procedure. A problem is encoded as a set of logical rules and constraints, and an ASP solver computes its answer sets (stable models): sets of atoms that satisfy every rule and constraint and in which every atom is justified by the rules without circular self-support. This makes reasoning flexible, because a problem can be modified simply by adding or removing rules without altering the solver itself. Solvers handle complex constraint satisfaction problems and can either enumerate all answer sets or efficiently search for an optimal one under stated criteria. Unlike imperative programming, ASP focuses on what needs to be solved, not how to solve it, which simplifies the development and maintenance of knowledge-based systems.
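To make the notion of an answer set concrete, here is a brute-force Python sketch for tiny ground programs without variables. It checks each candidate set of atoms against the Gelfond-Lifschitz reduct, the standard definition of stable models. The `Rule` encoding and function names are illustrative assumptions, and production solvers such as clingo rely on grounding and conflict-driven search rather than enumeration.

```python
from itertools import chain, combinations
from typing import FrozenSet, List, NamedTuple, Optional, Set


class Rule(NamedTuple):
    """Ground rule `head :- pos, not neg.`; head=None encodes a constraint."""
    head: Optional[str]
    pos: FrozenSet[str] = frozenset()
    neg: FrozenSet[str] = frozenset()


def least_model(program: List[Rule]) -> Set[str]:
    """Least model of a negation-free program, by iterating to a fixpoint."""
    model: Set[str] = set()
    changed = True
    while changed:
        changed = False
        for r in program:
            if r.head is not None and r.head not in model and r.pos <= model:
                model.add(r.head)
                changed = True
    return model


def is_answer_set(program: List[Rule], candidate: Set[str]) -> bool:
    # Gelfond-Lifschitz reduct: drop rules blocked by the candidate
    # (some "not x" with x in the candidate), then drop remaining "not" literals.
    reduct = [Rule(r.head, r.pos) for r in program if not (r.neg & candidate)]
    if least_model(reduct) != candidate:
        return False
    # Constraints (head=None) must not have their whole body satisfied.
    return not any(
        r.head is None and r.pos <= candidate and not (r.neg & candidate)
        for r in program
    )


def answer_sets(program: List[Rule]) -> List[Set[str]]:
    """Try every subset of the atoms and keep the answer sets (exponential!)."""
    atoms = sorted({a for r in program
                    for a in chain([r.head] if r.head is not None else [], r.pos, r.neg)})
    candidates = chain.from_iterable(combinations(atoms, k) for k in range(len(atoms) + 1))
    return [set(c) for c in candidates if is_answer_set(program, set(c))]


if __name__ == "__main__":
    # a :- not b.   b :- not a.
    choice = [Rule("a", neg=frozenset({"b"})), Rule("b", neg=frozenset({"a"}))]
    print(answer_sets(choice))                                       # [{'a'}, {'b'}]
    print(answer_sets(choice + [Rule(None, pos=frozenset({"a"}))]))  # [{'b'}]
```

The second call adds the constraint `:- a.`, which changes the answer without touching the solver, echoing the point about modifying a problem by adding rules.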

Non-monotonic logic differs from classical logic by allowing conclusions to be retracted when new information becomes available. Classical logic is monotonic: adding premises can never invalidate a conclusion that has already been derived. Non-monotonic systems instead rely on default assumptions that may later be revised, typically expressed as default rules that specify what to conclude in the absence of contradictory evidence. When new information conflicts with a default assumption, the system withdraws the previously held conclusion and adjusts its reasoning accordingly. This mirrors human reasoning, where beliefs are often provisional and open to revision based on new evidence rather than absolute and immutable.
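Default rules are commonly written with negation as failure, as in ASP. The Python sketch below hard-codes three hypothetical rules about birds and penguins and shows a conclusion being withdrawn once a new fact arrives. The string encoding of facts and the `conclusions` function are assumptions made for brevity; this is not a general reasoner.

```python
from typing import Set


def conclusions(facts: Set[str]) -> Set[str]:
    """Close `facts` under three hypothetical rules (ASP-style notation):

        bird(X)     :- penguin(X).
        abnormal(X) :- penguin(X).
        flies(X)    :- bird(X), not abnormal(X).

    The program is stratified, so deriving everything about `abnormal`
    before applying the default rule gives the intended result."""
    derived = set(facts)
    # Stratum 1: penguins are birds, and abnormal ones.
    for f in facts:
        if f.startswith("penguin(") and f.endswith(")"):
            name = f[len("penguin("):-1]
            derived |= {f"bird({name})", f"abnormal({name})"}
    # Stratum 2: the default fires only if abnormality is NOT derivable.
    for f in list(derived):
        if f.startswith("bird(") and f.endswith(")"):
            name = f[len("bird("):-1]
            if f"abnormal({name})" not in derived:
                derived.add(f"flies({name})")
    return derived


if __name__ == "__main__":
    print("flies(tweety)" in conclusions({"bird(tweety)"}))                     # True
    print("flies(tweety)" in conclusions({"bird(tweety)", "penguin(tweety)"}))  # False
```

Adding the fact `penguin(tweety)` removes `flies(tweety)` from the conclusions, something a monotonic logic could never do.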

Symbolic AI systems, while capable of robust reasoning, are fundamentally reliant on a pre-existing knowledge base to operate effectively. This necessitates a significant upfront investment in knowledge engineering to represent relevant facts and rules. Furthermore, the performance of these systems can degrade substantially when confronted with noisy or incomplete data, as the logical inferences are directly dependent on the accuracy and completeness of the input. Unlike some machine learning approaches that can learn from data, symbolic AI typically lacks inherent mechanisms for handling uncertainty or ambiguity, requiring explicit strategies for data cleaning, error correction, or the incorporation of probabilistic reasoning to mitigate these limitations.

Abstraction: The Bridge Between Patterns and Principles

Abstraction serves as a foundational technique for managing the complexity inherent in both neural network processing and symbolic reasoning systems. By selectively focusing on essential features and omitting irrelevant detail, abstraction facilitates knowledge extraction and enables a functional interface between these traditionally disparate approaches. This simplification process allows for the creation of higher-level representations that are more amenable to logical inference and human interpretation, effectively bridging the gap between the subsymbolic patterns learned by neural networks and the explicit, rule-based systems of symbolic AI. The resulting abstracted knowledge is cheaper to process and easier to integrate into reasoning frameworks.

Predicate invention and domain clustering reduce the complexity of knowledge representation by constructing higher-level concepts from raw data. Predicate invention automatically introduces new predicates (relation symbols that name higher-level concepts) which generalize over specific instances, decreasing the number of individual facts that must be represented. Domain clustering groups similar entities or concepts so that they can be represented at a more abstract level rather than individually. Operating on this condensed knowledge base lowers the computational burden of knowledge processing and improves the scalability of reasoning systems. Both methods facilitate a transition from instance-level knowledge to more generalized, conceptual understanding.
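A minimal Python sketch of domain clustering, assuming facts are tuples of constants and that the grouping of interchangeable constants is already known. Here the grouping is supplied by hand as a hypothetical `clusters` mapping; deciding which constants belong together is the hard part and is not shown, and predicate invention is not illustrated.

```python
from typing import Dict, List, Tuple

Fact = Tuple[str, ...]  # (predicate, arg1, arg2, ...)


def cluster_facts(facts: List[Fact], clusters: Dict[str, str]) -> List[Fact]:
    """Replace each argument by its cluster name (if it has one) and drop
    facts that become identical, keeping the order of first appearance."""
    seen: Dict[Fact, None] = {}  # dict used as an insertion-ordered set
    for pred, *args in facts:
        abstracted = (pred, *(clusters.get(a, a) for a in args))
        seen.setdefault(abstracted, None)
    return list(seen)


if __name__ == "__main__":
    facts = [("assigned", "alice", "mon"), ("assigned", "bob", "mon"),
             ("assigned", "carol", "tue")]
    clusters = {"alice": "staff", "bob": "staff", "carol": "staff"}
    print(cluster_facts(facts, clusters))
    # [('assigned', 'staff', 'mon'), ('assigned', 'staff', 'tue')]
```

Three instance-level facts shrink to two, because the individual identities were declared irrelevant to the question being asked.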

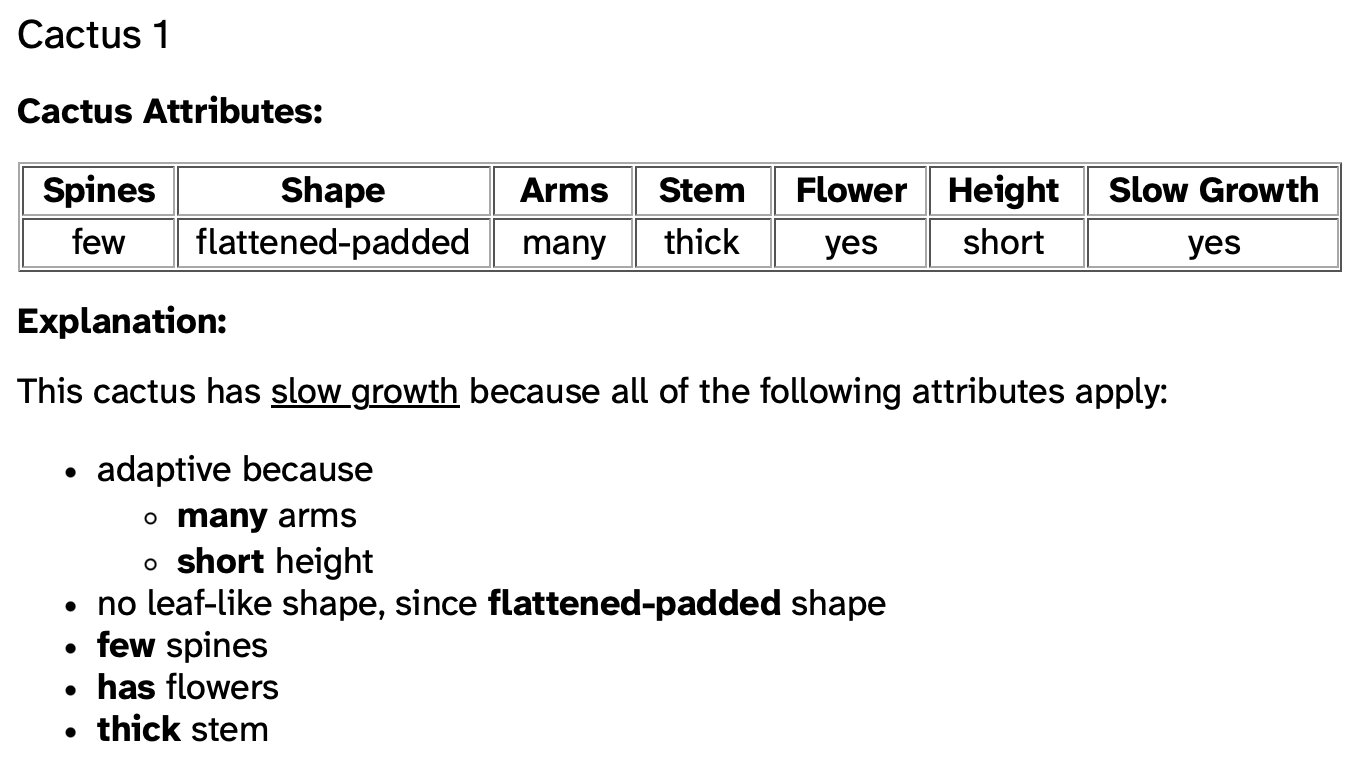

Removal and clustering are two abstraction techniques for limiting the impact of irrelevant information on the reader. In the study’s experiments, clustering irrelevant details produced a statistically significant improvement in accuracy (p<0.05), whereas removing them produced a statistically significant reduction in answer time (p<0.01). The two methods therefore offer distinct advantages: clustering keeps a compact trace of the irrelevant material and the relationships it takes part in while reducing noise, which appears to support understanding, whereas removal eliminates the extraneous material outright, which lowers the effort needed to reach an answer.
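The difference between the two techniques can be illustrated on a toy explanation. In this Python sketch an explanation is a hypothetical list of `(step, is_relevant)` pairs with relevance labels given in advance, and clustering is simplified to collapsing each run of consecutive irrelevant steps into a single summary line. This is an illustrative assumption, not the paper’s exact procedure.

```python
from typing import List, Tuple

Step = Tuple[str, bool]  # (step text, is_relevant)


def remove_irrelevant(steps: List[Step]) -> List[str]:
    """Removal: drop irrelevant steps entirely (shortest, but no trace remains)."""
    return [text for text, relevant in steps if relevant]


def cluster_irrelevant(steps: List[Step]) -> List[str]:
    """Clustering: collapse each run of consecutive irrelevant steps into one
    summary line, so the reader still sees that something was abstracted."""
    out: List[str] = []
    run = 0  # length of the current run of irrelevant steps
    for text, relevant in steps:
        if relevant:
            if run:
                out.append(f"[{run} irrelevant step(s) grouped]")
                run = 0
            out.append(text)
        else:
            run += 1
    if run:
        out.append(f"[{run} irrelevant step(s) grouped]")
    return out


if __name__ == "__main__":
    steps = [("shift(alice, mon)", True), ("likes(alice, tea)", False),
             ("likes(bob, tea)", False), ("not shift(alice, tue)", True)]
    print(remove_irrelevant(steps))
    print(cluster_irrelevant(steps))
```

Removal yields two lines; clustering yields three, with the middle line standing in for the two irrelevant steps.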

Causal Abstraction is a technique for interpreting neural networks by testing whether a simpler, high-level causal model faithfully describes the network’s internal computation. Variables of the high-level model are aligned with components of the network, and interventions on those components (for example, replacing an activation with its value from a run on a different input) must change the output exactly as the corresponding intervention changes the high-level model. When such an alignment holds, Causal Abstraction goes beyond observing correlations to explaining the mechanisms behind the network’s decisions. The resulting model facilitates analysis of network behavior, identification of critical components, and potential simplification without loss of functionality, offering a pathway towards more interpretable and trustworthy artificial intelligence systems.
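The core test behind causal abstraction, the interchange intervention, can be shown on a toy model. In this Python sketch the "network", its hidden units `h1` and `h2`, and the high-level model `out = 2*S + c` with `S = a + b` are all hypothetical. The check patches one hidden unit with its value from a different (source) input and asks whether the output changes exactly as the high-level model predicts when `S` is patched the same way.

```python
from itertools import product
from typing import Dict, Optional, Tuple

Inputs = Tuple[int, int, int]  # (a, b, c)


def hidden_units(x: Inputs) -> Dict[str, int]:
    a, b, c = x
    return {"h1": a + b, "h2": c + a}


def network(x: Inputs, patch: Optional[Tuple[str, int]] = None) -> int:
    """Low-level model; `patch` = (unit name, value) overrides one hidden unit."""
    h = hidden_units(x)
    if patch is not None:
        name, value = patch
        h[name] = value
    return 2 * h["h1"] + h["h2"] - x[0]  # equals 2*(a + b) + c when unpatched


def abstract_model(x: Inputs, s: Optional[int] = None) -> int:
    """High-level causal model: S = a + b, out = 2*S + c; `s` intervenes on S."""
    a, b, c = x
    S = a + b if s is None else s
    return 2 * S + c


def is_faithful(unit: str, values=range(3)) -> bool:
    """Does aligning S with `unit` survive every interchange intervention?"""
    inputs = list(product(values, repeat=3))
    for base, source in product(inputs, repeat=2):
        low = network(base, patch=(unit, hidden_units(source)[unit]))
        high = abstract_model(base, s=source[0] + source[1])
        if low != high:
            return False
    return True


if __name__ == "__main__":
    print(is_faithful("h1"), is_faithful("h2"))  # True False
```

Aligning `S` with `h1` passes for every input pair tested, while aligning it with `h2` fails, even though the unpatched network matches the high-level model’s input-output behavior either way. That is the sense in which interventions reveal mechanism rather than correlation.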

Towards Systems We Can Understand, and Therefore, Trust

The pursuit of truly intelligent artificial intelligence necessitates not only performance, but also transparency. Current neural networks, while powerful, often operate as “black boxes,” making their decision-making processes opaque to humans. Researchers are addressing this limitation by fusing the strengths of neural networks with those of symbolic reasoning – a traditional approach to AI that relies on explicitly defined rules and logic. Through abstraction techniques, complex neural network behaviors are translated into symbolic representations, allowing for the creation of Explainable AI (XAI) systems. This integration enables a clear articulation of why an AI arrived at a particular conclusion, providing insights into its internal logic and fostering trust in its outputs. The result is a move away from simply accepting AI predictions, towards understanding and verifying the reasoning behind them, opening doors to more reliable and accountable artificial intelligence.

A crucial benefit of integrating symbolic reasoning with neural networks lies in the enhanced ability to rigorously assess AI system correctness and expose hidden biases. Traditional ‘black box’ neural networks often lack transparency, making it difficult to pinpoint the source of erroneous decisions. By grounding AI in symbolic logic, however, developers can trace the reasoning process and verify each step against established rules and known facts. This scrutiny isn’t merely about identifying mistakes; it also helps detect systematic biases embedded in the training data or the network architecture itself. For instance, a system might consistently misclassify images because certain demographics are disproportionately represented in the training set, a flaw that symbolic verification can help expose. This level of auditability is paramount for deploying AI in critical applications, fostering trust and ensuring responsible innovation.

Neuro-symbolic AI systems can show greater resilience and dependability in intricate and unpredictable scenarios, a direct result of their combined strengths. Because they integrate the pattern recognition capabilities of neural networks with the logical reasoning of symbolic AI, these systems are not solely reliant on statistical correlations within training data. This hybrid approach can help them generalize to novel situations and handle noisy or incomplete information more gracefully. Unlike purely neural networks, which can be susceptible to adversarial attacks or unexpected inputs, neuro-symbolic systems can use symbolic rules to validate outputs and flag inconsistencies, providing a degree of safety and predictability. Consequently, applications requiring high levels of trust and reliability, such as autonomous navigation, medical diagnosis, and financial modeling, stand to benefit from these architectures.
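One way to let symbolic rules validate a neural component is to check its structured output against explicit constraints before acting on it. The Python sketch below assumes a hypothetical driving model that emits `light`, `pedestrian`, and `action` labels. The two constraints and all names are illustrative, not taken from any particular system.

```python
from typing import Callable, Dict, List, Optional

Prediction = Dict[str, str]                          # e.g. output of a neural perception model
Constraint = Callable[[Prediction], Optional[str]]   # returns a message if violated


def no_go_on_red(p: Prediction) -> Optional[str]:
    if p.get("light") == "red" and p.get("action") == "go":
        return "action 'go' contradicts a red light"
    return None


def pedestrian_means_stop(p: Prediction) -> Optional[str]:
    if p.get("pedestrian") == "yes" and p.get("action") != "stop":
        return "a detected pedestrian requires 'stop'"
    return None


def validate(p: Prediction, constraints: List[Constraint]) -> List[str]:
    """Return every violated constraint; an empty list means the output is consistent."""
    violations: List[str] = []
    for check in constraints:
        msg = check(p)
        if msg is not None:
            violations.append(msg)
    return violations


if __name__ == "__main__":
    rules = [no_go_on_red, pedestrian_means_stop]
    print(validate({"light": "green", "pedestrian": "no", "action": "go"}, rules))
    print(validate({"light": "red", "pedestrian": "yes", "action": "go"}, rules))
```

A non-empty result gives both a reason to reject the prediction and a human-readable account of why, which is the kind of inconsistency flagging described above.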

Decision trees offer a compelling pathway to demystifying the inner workings of artificial intelligence by representing complex concepts in a visually accessible format. These tree-like diagrams break intricate decision-making processes down into a series of easily understandable questions and outcomes, resembling the step-by-step way people often justify a decision. Each internal node tests a feature, each branch corresponds to an outcome of that test, and each leaf gives the final classification or prediction. This hierarchical structure allows transparent tracing of an AI’s reasoning and makes it easier to identify the key factors influencing its conclusions. By converting abstract relationships into a readily interpretable visual map, decision trees help users grasp why an AI reached a specific determination, fostering trust and enabling effective debugging and refinement of these systems. Their simplicity makes AI logic approachable even for those without specialized technical expertise, paving the way for broader adoption and responsible implementation.
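A decision tree’s explanation is simply the path of tests from the root to a leaf. This Python sketch uses a small hand-built tree with hypothetical features (`income`, `debt_ratio`), thresholds, and labels, and returns the traced path alongside the prediction.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple, Union


@dataclass
class Leaf:
    label: str


@dataclass
class Node:
    feature: str
    threshold: float
    left: "Tree"   # followed when feature <= threshold
    right: "Tree"  # followed when feature > threshold


Tree = Union[Leaf, Node]


def predict_with_path(tree: Tree, x: Dict[str, float]) -> Tuple[str, List[str]]:
    """Walk from the root to a leaf, recording each test as a readable string."""
    path: List[str] = []
    while isinstance(tree, Node):
        value = x[tree.feature]
        if value <= tree.threshold:
            path.append(f"{tree.feature} = {value} <= {tree.threshold}")
            tree = tree.left
        else:
            path.append(f"{tree.feature} = {value} > {tree.threshold}")
            tree = tree.right
    return tree.label, path


if __name__ == "__main__":
    tree = Node("income", 30000,
                Leaf("reject"),
                Node("debt_ratio", 0.4, Leaf("approve"), Leaf("reject")))
    print(predict_with_path(tree, {"income": 45000, "debt_ratio": 0.25}))
    # ('approve', ['income = 45000 > 30000', 'debt_ratio = 0.25 <= 0.4'])
```

The returned path is the explanation: each entry names the feature consulted, its value, and the side of the threshold it fell on.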

The pursuit of explainable AI often fixates on distilling complexity, yet this paper suggests a subtler approach: acknowledging the irrelevant and then deliberately grouping or pruning it. It’s a dance with entropy, really. A system left to its own devices accumulates detail, a relentless accretion of data that obscures the core logic. This echoes a familiar pattern; every refactor begins as a prayer and ends in repentance. The authors show that clustering irrelevant details does not diminish understanding but improves it, while removing them lessens cognitive effort, so that the underlying reasoning can emerge. It’s not about building a perfectly streamlined explanation, but about fostering an ecosystem where meaning can grow.

What Lies Ahead?

The pursuit of ‘explainable’ systems often fixates on presenting fewer facts, a strategy this work subtly exposes as a temporary reprieve. Reducing cognitive load through the removal of ‘irrelevant’ details doesn’t address the fundamental problem: complex systems have irrelevant details, and those details are merely the leading edge of the unknown. Long stability in human comprehension isn’t a sign of success, but a harbinger of unforeseen consequences when the abstracted-away anomalies inevitably manifest. The clustering techniques presented are not solutions, but adaptive filters, delaying the moment when the system’s true, messy nature reveals itself.

Future work should abandon the notion of ‘relevance’ as a fixed property. Instead, research must focus on systems that dynamically re-evaluate the importance of information based on evolving contexts and user goals. A truly robust approach will not seek to eliminate complexity, but to embrace it, providing tools for navigating uncertainty rather than illusions of complete understanding. The challenge isn’t making systems easier to explain, but building systems that gracefully accommodate being misunderstood.

The current focus on symbolic abstraction feels remarkably like architectural pattern matching. Each ‘solution’ merely shifts the point of failure, creating more elaborate surfaces for eventual disintegration. Perhaps the most fruitful avenue lies not in better explanations, but in fostering a user’s ability to tolerate, even expect, the inherent opacity of any sufficiently complex system. Systems don’t fail – they evolve into unexpected shapes, and it is in those shapes that the true lessons reside.

Original article: https://arxiv.org/pdf/2602.03467.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-04 19:19