Author: Denis Avetisyan

New research demonstrates a framework for building more natural and emotionally aware humanoid robots capable of seamless, real-time interaction.

![The detailed construction process of SeMe[latex]^{2}_{e}[/latex] facilitates edge deployment.](https://arxiv.org/html/2602.07434v1/x2.png)

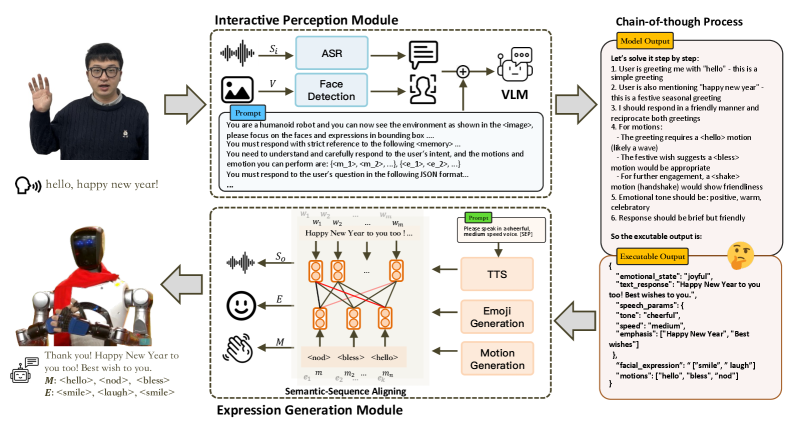

A novel multimodal framework leverages large language models and semantic alignment to enable coherent speech, emotion, and motion generation for edge-deployed robots.

Achieving truly natural human-robot interaction requires more than just functional communication; it demands emotionally resonant and coordinated expression. This is addressed in ‘Bridging Speech, Emotion, and Motion: a VLM-based Multimodal Edge-deployable Framework for Humanoid Robots’ which introduces [latex]SeM^2[/latex], a novel framework leveraging large language models and a semantic-sequence aligning mechanism to generate coherent multimodal responses. By integrating verbal and physical cues, [latex]SeM^2[/latex] demonstrates significant improvements in naturalness, emotional clarity, and modal coherence-even when deployed on edge hardware with minimal performance loss. Could this approach unlock more engaging and intuitive social interactions with robots in real-world environments?

The Essence of Connection: Bridging the Emotional Gap

For human-robot interaction to move beyond simple functionality, robots must demonstrate an understanding of, and ability to express, emotion. This isn’t merely about mimicking outward displays; genuine interaction necessitates a robot’s capacity to recognize human emotional states – interpreting facial expressions, vocal tones, and even physiological signals – and respond in a contextually appropriate manner. The ability to convey emotion, through synthetic facial expressions, vocal inflections, and body language, is equally vital; it fosters trust, builds rapport, and allows humans to perceive the robot not as a tool, but as a social partner. Ultimately, successful collaboration and long-term acceptance hinge on robots transcending purely logical operations and engaging with humans on an emotional level, mirroring the subtleties inherent in human communication.

Existing methods for imbuing robots with emotional capabilities frequently fall short of replicating the subtleties inherent in human communication. While robots can often simulate emotional responses – displaying a smiling face or modulating vocal tone – these expressions tend to be simplistic and lack the rich contextual awareness that characterizes genuine feeling. This limitation stems from a reliance on pre-programmed responses rather than dynamic adaptation to nuanced social cues, resulting in interactions that can feel artificial or even unsettling. Consequently, robots struggle to convey the full spectrum of human emotion, hindering the development of truly empathetic and intuitive human-robot partnerships and impacting the ability to establish genuine rapport with human users.

Achieving genuinely believable emotional expression in robots necessitates moving beyond single channels of communication. Research indicates that humans perceive emotion through a complex interplay of cues – not just facial expressions or tone of voice, but also body language, proxemics, and even subtle physiological signals. A truly convincing robotic display of emotion, therefore, requires a multimodal approach. This involves carefully coordinating visual elements like animated eyes and mouth movements with auditory signals such as synthesized speech intonation and tempo, and crucially, integrating these with believable behavioral cues – how the robot physically responds, its gait, gestures, and overall posture. By synchronizing these various modalities, researchers aim to create a holistic and nuanced emotional performance that resonates with human perception and fosters more natural and effective human-robot interaction.

SeM2: A Framework for Expressive Robotics

SeM2 employs Vision-Language Models (VLMs) to translate natural language input into expressive robotic actions. These VLMs are trained on datasets correlating textual descriptions with associated emotional states, enabling the system to parse the emotional intent within a given text prompt. The VLM then generates a sequence of parameters controlling robotic actuators – including facial motors and speech synthesizers – to convey the identified emotion. This process allows SeM2 to dynamically generate appropriate emotional responses based solely on textual input, without requiring pre-defined animations or scripted behaviors. The model’s ability to understand nuanced language and map it to expressive outputs forms the core of its emotional communication capability.

The Semantic-Sequence Aligning Mechanism within SeM2 addresses the challenge of synchronizing textual input with corresponding expressive outputs. This mechanism operates by establishing a direct correspondence between semantic units within the text and specific frames of expressive action – including facial movements and synthesized speech. It employs a dynamic time warping approach to account for variations in duration between textual phrases and the timing of expressive behaviors, ensuring that emotional responses are not only semantically accurate but also temporally coherent. This alignment is crucial for generating natural and believable robotic expressions, preventing disjointed or asynchronous displays of emotion.

SeM2 incorporates speech synthesis driven by the ChatTTS model to produce vocalizations that align with expressed emotions, functioning alongside visual cues such as facial expressions. ChatTTS enables the generation of emotionally nuanced speech by conditioning the synthesis process on emotional embeddings derived from the textual input. This multimodal approach, combining visual and auditory expressions, is critical for creating a more believable and engaging robotic interaction; the system aims to move beyond neutral vocal delivery to convey a range of affective states through prosody and tonal variations during speech generation.

SeM2 incorporates the ability to perceive human emotional states through multimodal perception, specifically utilizing YOLOv8-face for facial expression recognition and SenseVoice for speech emotion recognition. YOLOv8-face detects and tracks faces, enabling the analysis of facial muscle movements associated with different emotions. Concurrently, SenseVoice analyzes acoustic features of speech, such as pitch, tone, and rhythm, to determine the emotional state of the speaker. The outputs from both YOLOv8-face and SenseVoice are integrated to provide a comprehensive understanding of human emotion, allowing SeM2 to respond in a contextually appropriate and emotionally sensitive manner.

Performance evaluations indicate the SeM2 framework achieves approximately 95% of the emotional expression capability of a comparable cloud-based API model. This assessment was determined through quantitative metrics evaluating the fidelity and naturalness of generated multimodal outputs – specifically, facial expressions and synthesized speech – in response to textual prompts. The close proximity to cloud-based performance demonstrates SeM2’s efficacy in replicating emotionally-rich robotic communication without requiring constant external API calls, thereby enabling localized and potentially real-time operation with minimal performance degradation.

SeMe2: Distilling Emotion for Edge Deployment

SeMe2 is an edge-deployable adaptation of the SeM2 model, specifically engineered for operation on robots with limited computational resources. This deployment strategy moves processing from a remote cloud server to the robot itself, reducing latency and enabling real-time performance. The development of SeMe2 addresses the constraints of robotic platforms, allowing for immediate response and decision-making without reliance on network connectivity or external processing power. This localized processing capability is crucial for applications requiring consistent and rapid operation in potentially unreliable network environments.

Knowledge distillation was employed to create a smaller, edge-deployable model by transferring the learned parameters from a large teacher model, GPT-4o, to a smaller student model, MiniCPM-8B. This process involves training the student model to mimic the output distribution of the teacher model, rather than directly learning from the ground truth data. By leveraging the knowledge already encoded in the larger model, the student model can achieve comparable performance with significantly fewer parameters and reduced computational requirements. This allows for deployment on resource-constrained devices without substantial performance loss.

INT4 quantization is a model optimization technique employed in SeMe2 to decrease model size and computational demands. This process reduces the precision of model weights from the standard 32-bit floating point to 4-bit integers. By representing weights with fewer bits, the overall memory footprint of the model is substantially decreased, enabling deployment on devices with limited resources. Evaluations demonstrate that this quantization to INT4 yields a significant reduction in model size with only a minimal impact on performance metrics, maintaining a high degree of accuracy and functionality.

SimHash filtering was implemented to enhance training data efficiency by identifying and removing redundant samples. This technique operates by generating a hash value – a SimHash – for each data sample, representing its semantic content. Samples with highly similar SimHashes are considered redundant. By clustering and removing these near-duplicate entries, the training dataset’s size is reduced without substantially impacting the model’s ability to generalize. This data optimization directly contributes to decreased training times and reduced computational resource requirements, particularly beneficial when dealing with large datasets used for training language models.

SeMe2 achieves 95% of the performance level of the original, cloud-based SeM2 API model despite significant size and complexity reductions. This performance retention was validated through standardized benchmarking procedures applied to both the full-size and optimized models across a range of representative tasks. The minimal performance degradation-a 5% difference-demonstrates the effectiveness of the knowledge distillation and INT4 quantization techniques employed in SeMe2’s development, ensuring that the edge-deployable model maintains a high level of functionality while operating within the constraints of resource-limited hardware.

Deployment of SeMe2 on edge devices resulted in a measured 52% reduction in first response times when contrasted with the original cloud-based SeM2 API. This performance gain is attributable to the elimination of network latency inherent in cloud communication; processing is performed locally on the device. Testing involved standardized queries and response time measurements under comparable operational loads to ensure accurate comparison. The reduction in latency directly improves the real-time interaction capabilities of robotic systems utilizing the model for task execution and decision-making.

Looking Forward: The Future of Embodied Emotional Intelligence

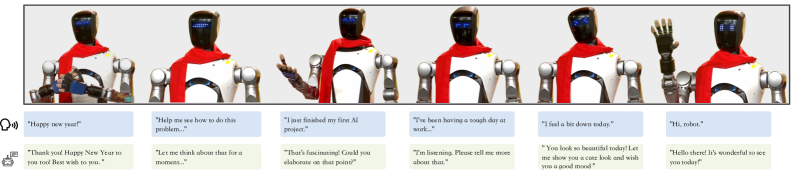

The capacity of SeMe2 to generate emotionally expressive interactions represents a pivotal advancement with broad implications across several fields. In social robotics, this technology promises more intuitive and engaging human-robot partnerships, fostering genuine connection and collaboration. Healthcare applications benefit from empathetic robotic companions capable of providing emotional support and personalized care, potentially improving patient well-being and adherence to treatment plans. Furthermore, within educational settings, SeMe2’s emotionally aware systems can adapt to students’ emotional states, creating tailored learning experiences that enhance engagement and knowledge retention – effectively moving beyond simple instruction to a more holistic and supportive learning environment. The ability to convey and respond to emotion allows these systems to build trust and rapport, critical elements for successful long-term interaction and integration into daily life.

SeMe2’s architecture prioritizes accessibility through Application Programming Interface (API)-based deployment, a design choice that dramatically simplifies its integration into existing robotic systems. This modular approach allows developers to bypass complex, platform-specific coding, instead utilizing standardized API calls to incorporate SeMe2’s emotionally expressive capabilities into a wide array of hardware and software ecosystems. Consequently, researchers and engineers can rapidly prototype and test emotionally intelligent robotic interactions on diverse platforms – from humanoid robots and virtual assistants to therapeutic devices and educational tools – fostering innovation and accelerating the development of more engaging and effective human-robot collaborations. The ease of integration not only lowers the barrier to entry for researchers but also promises to expedite the translation of laboratory findings into real-world applications.

The next phase of development for emotionally expressive AI centers on tailoring interactions to the individual. Researchers anticipate systems capable of dynamically adjusting emotional displays – vocal tone, facial expressions, and even simulated body language – based on a user’s established preferences and real-time emotional state. This personalization extends beyond simply mirroring emotions; the goal is to create a communication style that resonates uniquely with each person, fostering stronger rapport and trust. Such adaptive systems promise to move beyond generalized emotional responses, recognizing that what is perceived as supportive or comforting varies significantly between individuals and cultural backgrounds. Ultimately, this pursuit of personalized interaction aims to maximize the effectiveness of human-robot collaboration in diverse fields, from therapeutic applications to educational settings.

Advancing human-robot communication necessitates a shift towards more nuanced multimodal perception and response systems. Current interactions often rely on limited sensory input – primarily visual or auditory cues – and correspondingly simplistic reactions. Future development will integrate a broader spectrum of signals, including facial expressions, body language, speech intonation, and even physiological data, allowing robots to perceive emotional states with greater accuracy. This enriched understanding will enable the generation of responses that are not only contextually relevant but also emotionally appropriate, mirroring the subtleties of human interaction. Such advancements promise to move beyond purely functional communication, fostering genuine rapport and trust, and ultimately creating robotic companions capable of providing truly personalized and effective assistance in diverse settings.

The pursuit of seamless human-robot interaction, as detailed in this framework, demands a ruthless simplification of complex processes. The authors rightly focus on distilling information to achieve real-time performance on edge devices. This echoes G.H. Hardy’s sentiment: “A mathematician, like a painter or a poet, is a maker of patterns.” SeM2 doesn’t merely present data – speech, emotion, motion – it crafts a coherent pattern from them. The Semantic-Sequence Aligning Mechanism is a testament to this, reducing the noise and isolating the essential elements needed for effective communication, ultimately mirroring the elegance Hardy sought in mathematical truths.

Further Refinements

The presented work addresses a necessary, if predictable, convergence. Linking language, affect, and embodied action is not innovation, but rather a reduction of existing redundancies. The core challenge remains not generating more multimodal output, but ensuring semantic fidelity-that the generated action genuinely reflects the underlying intent, and isn’t merely a statistically plausible sequence. Current metrics are insufficient. Evaluating ‘coherence’ through proxy measures obscures the fundamental problem: meaning is not a surface feature.

Future efforts will likely necessitate a shift from model scale to representational efficiency. The pursuit of ever-larger language models, while yielding superficial gains, postpones the critical work of building systems that reason with, rather than merely correlate, data. Knowledge distillation, as explored here, offers a path, but only if coupled with a more rigorous formalization of what constitutes ‘knowledge’ in this context. The edge-deployment aspect is pragmatic, but secondary. Real-time performance is valuable only if the output is, fundamentally, meaningful.

Ultimately, the field must confront the limitations of purely data-driven approaches. Embodied intelligence demands a grounding in physical reality, a constraint currently absent from these models. Clarity is the minimum viable kindness; the next iteration must prioritize a reduction of complexity, not its amplification.

Original article: https://arxiv.org/pdf/2602.07434.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

See also:

- Clash of Clans Unleash the Duke Community Event for March 2026: Details, How to Progress, Rewards and more

- Gold Rate Forecast

- Jason Statham’s Action Movie Flop Becomes Instant Netflix Hit In The United States

- Kylie Jenner squirms at ‘awkward’ BAFTA host Alan Cummings’ innuendo-packed joke about ‘getting her gums around a Jammie Dodger’ while dishing out ‘very British snacks’

- KAS PREDICTION. KAS cryptocurrency

- eFootball 2026 Jürgen Klopp Manager Guide: Best formations, instructions, and tactics

- Hailey Bieber talks motherhood, baby Jack, and future kids with Justin Bieber

- Jujutsu Kaisen Season 3 Episode 8 Release Date, Time, Where to Watch

- Christopher Nolan’s Highest-Grossing Movies, Ranked by Box Office Earnings

- How to download and play Overwatch Rush beta

2026-02-11 04:26