Author: Denis Avetisyan

Researchers have developed a new interface that lets users communicate animation ideas to artificial intelligence through intuitive, hand-drawn sketches and iterative refinement.

This work introduces SketchDynamics, a system leveraging sketch-based input and clarification cues for dynamic intent expression in animation generation.

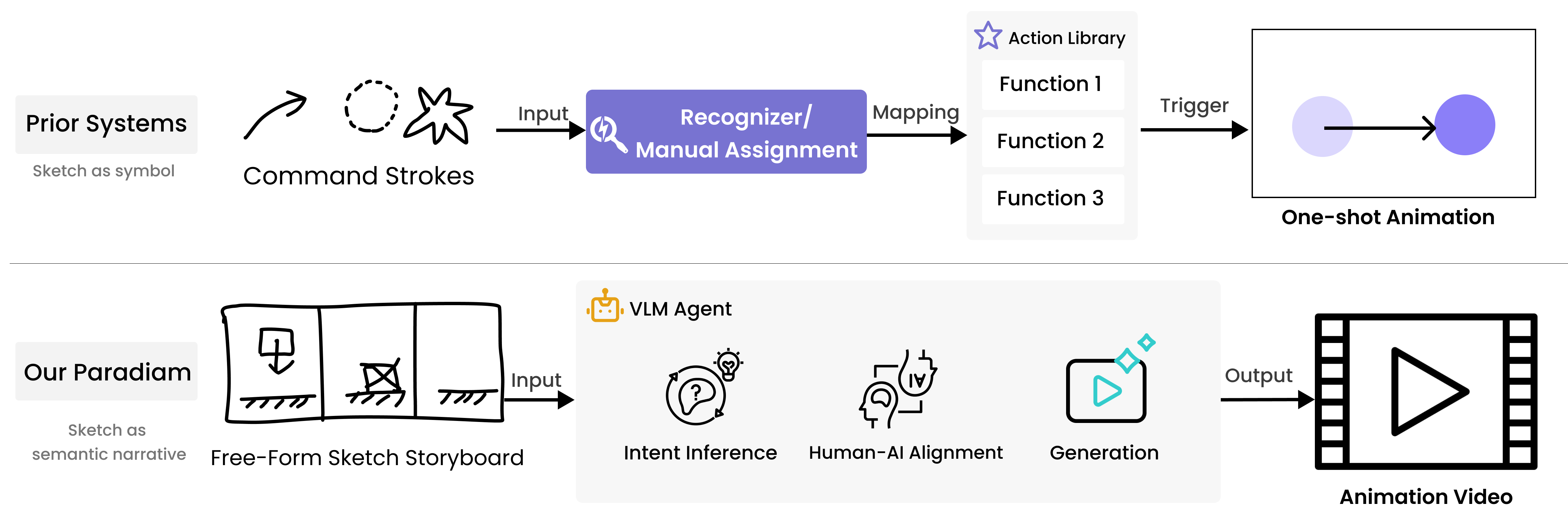

While sketching is an intuitive way to convey motion, its use for directing animation generation has been hampered by systems that require rigid inputs or predefined forms. This limitation motivates ‘SketchDynamics: Exploring Free-Form Sketches for Dynamic Intent Expression in Animation Generation’, which introduces an interactive paradigm that lets users communicate dynamic intent to a vision-language model through free-form sketches. The work demonstrates that this approach, instantiated as a sketch storyboard workflow, supports animation generation through iterative refinement guided by clarification cues and post-generation visual editing. Could this sketch-and-AI interaction bridge the gap between creative intention and automated outcome, unlocking new possibilities for 3D animation and video creation?

The Fragility of Expression: Bridging the Gap Between Sketch and Screen

The creation of compelling animation is frequently hampered by the steep learning curves associated with industry-standard software. These tools, while powerful, often prioritize technical precision over artistic intuition, demanding extensive training in complex interfaces and specialized workflows. This necessitates a considerable investment of time and resources before an animator can fully realize their creative vision, effectively acting as a barrier to entry for newcomers and slowing down the iterative process of experimentation. Consequently, the initial spark of an idea can be lost in translation as artists navigate layers of menus and parameters, ultimately diminishing the immediacy and fluidity of their expressive intent. The challenge lies in developing systems that empower artists, rather than requiring them to become technicians, fostering a more direct connection between conception and execution.

The translation of an artist’s initial sketch to finished animation often presents a significant hurdle, as current digital workflows frequently fail to capture the inherent nuance and spontaneity of hand-drawn work. Existing software often requires meticulous redrawing and refinement of sketched lines, effectively stripping away the natural imperfections and energetic qualities that define the original artistic intent. This process not only demands considerable time and technical expertise, but also introduces a disconnect between the conceptual fluidity of the sketch and the often rigid precision of the final animation. Researchers are actively investigating methods to preserve these subtle qualities – the slight wobble in a line, the varying pressure of a pencil stroke – and seamlessly integrate them into the digital pipeline, aiming for a system where the artist’s initial vision is more faithfully realized on screen.

The steep learning curve of professional animation software also raises the barrier to entry, excluding people without extensive technical training or access to specialized education. This is not merely a matter of accessibility: the time needed to master these tools directly slows the iterative process of animation development. Prototypes, which are crucial for testing ideas and refining visual storytelling, take much longer to produce, and this discourages experimentation. As a result, rapid visualization and creative exploration are curtailed, the production pipeline slows, and fewer people can readily turn their ideas into animated form.

SketchDynamics: A System for Direct Expression

SketchDynamics enables the creation of animated content through direct input from freehand sketches, circumventing traditional workflows that require the construction of precise 3D models or the manual definition of keyframes. This is achieved by allowing users to draw directly to define object trajectories and behaviors; the system then interprets these sketches as animation directives. Consequently, content creation is expedited, as the time-consuming steps of explicit modeling and keyframing are eliminated, and users can focus on the conceptual design of the animation rather than the technical implementation.
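The article does not say how a drawn stroke becomes motion; per the paper's framing, a vision-language model interprets the sketch. With that caveat, the Python sketch below shows one simple way a motion stroke *could* be turned into evenly spaced positions, for example one per frame: resample the stroke along its arc length. The function name `resample_stroke` and the constant-speed assumption are hypothetical, not taken from SketchDynamics.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def resample_stroke(stroke: List[Point], n: int) -> List[Point]:
    """Hypothetical helper: resample a freehand stroke into n points spaced
    evenly along its arc length (i.e. assuming constant speed)."""
    if n < 2 or len(stroke) < 2:
        raise ValueError("need at least 2 input points and n >= 2")
    # Cumulative arc length at each input vertex.
    cum = [0.0]
    for (x0, y0), (x1, y1) in zip(stroke, stroke[1:]):
        cum.append(cum[-1] + math.hypot(x1 - x0, y1 - y0))
    total = cum[-1]
    if total == 0.0:
        # Degenerate stroke (a single tap): the object stays in place.
        return [stroke[0]] * n
    out: List[Point] = []
    seg = 0
    for i in range(n):
        target = total * i / (n - 1)
        # Advance to the segment that contains the target distance.
        while seg < len(stroke) - 2 and cum[seg + 1] < target:
            seg += 1
        seg_len = cum[seg + 1] - cum[seg]
        t = 0.0 if seg_len == 0 else (target - cum[seg]) / seg_len
        (x0, y0), (x1, y1) = stroke[seg], stroke[seg + 1]
        # Linear interpolation inside the current segment.
        out.append((x0 + t * (x1 - x0), y0 + t * (y1 - y0)))
    return out
```

For example, `resample_stroke([(0, 0), (1, 0), (1, 1)], 5)` returns `[(0.0, 0.0), (0.5, 0.0), (1.0, 0.0), (1.0, 0.5), (1.0, 1.0)]`. Arc-length resampling keeps the implied speed constant; easing or pauses would need extra input from the user, which is the kind of ambiguity clarification cues are meant to resolve.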

SketchDynamics addresses the challenge of interpreting imprecise user input through Clarification Cues. These are interactive prompts shown to the user when the system detects ambiguity in a sketch; examples include requests to specify object relationships, confirm object types, or define motion paths. The system employs a combination of geometric reasoning and machine learning to predict likely user intentions and presents the most probable interpretations as options. User responses refine the system’s understanding before generation, so the resulting dynamic content is more likely to reflect the creator’s intent and fewer rounds of correction are needed afterward. This clarification step matters because freehand sketches inherently lack the precision of traditional modeling input, yet they must be translated into concrete animation parameters.
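To make the idea of a clarification cue concrete, here is a minimal Python sketch. It assumes the system already has scored candidate interpretations of a sketch and asks the user only when the top two readings are too close to call. The `Interpretation` class, the `margin` threshold, and the question wording are all hypothetical; the article does not describe how ambiguity is detected.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Interpretation:
    label: str    # hypothetical description, e.g. "arrow = motion path"
    score: float  # assumed model confidence in [0, 1]


def clarification_cue(candidates: List[Interpretation],
                      margin: float = 0.15,
                      max_options: int = 3) -> Optional[str]:
    """Return a question for the user if the sketch is ambiguous, else None."""
    if not candidates:
        return "I could not interpret this sketch. What should it show?"
    ranked = sorted(candidates, key=lambda c: c.score, reverse=True)
    # Unambiguous: only one reading, or a clear gap between the top two.
    if len(ranked) == 1 or ranked[0].score - ranked[1].score >= margin:
        return None
    # Ambiguous: offer the most probable readings as numbered options.
    options = "; ".join(
        f"({i + 1}) {c.label}" for i, c in enumerate(ranked[:max_options])
    )
    return f"Which did you mean? {options}"
```

With scores 0.9 and 0.4 the function returns `None` and generation proceeds. With 0.55 and 0.5 it returns a question that lists both readings, highest-scoring first.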

SketchDynamics utilizes the Manim animation library to convert user-created sketches into finished animations. This integration is achieved through a direct translation of sketched elements into Manim scene objects and animation sequences; geometric shapes, paths, and textual input are parsed and represented as Manim objects. The system then automatically generates the necessary Manim code to create animations based on the sketch, including transitions, movements, and visual effects. This process eliminates the need for manual coding of animations within Manim, streamlining content creation and enabling rapid prototyping of visual explanations and presentations. The resulting animations are rendered using Manim’s standard rendering pipeline, outputting high-quality video files.
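The generated output is ordinary Manim code. The sketch below assumes a hypothetical, already parsed sketch representation (`SketchObject`, with a shape name and a motion path) and emits a Manim Community Edition scene from a fixed template. The emitted calls (`Scene`, `Circle`, `Square`, `Dot`, `VMobject.set_points_as_corners`, `MoveAlongPath`) are real Manim names. The template itself is only an illustration; the article does not show how SketchDynamics actually produces its code.

```python
from dataclasses import dataclass
from typing import List, Tuple

# Hypothetical mapping from recognized shape names to Manim constructors.
SHAPES = {"circle": "Circle()", "square": "Square()", "dot": "Dot()"}


@dataclass
class SketchObject:
    name: str                        # identifier used in the generated code
    shape: str                       # one of the keys in SHAPES
    path: List[Tuple[float, float]]  # sketched motion path in scene units


def to_manim_source(objects: List[SketchObject], run_time: float = 2.0) -> str:
    """Emit a Manim scene that moves each sketched object along its path."""
    lines = ["from manim import *", "", "",
             "class SketchScene(Scene):",
             "    def construct(self):"]
    if not objects:
        lines.append("        self.wait()")  # keep the method body non-empty
    for obj in objects:
        x0, y0 = obj.path[0]
        # Manim points are 3D, so append z = 0 to each 2D sketch point.
        corners = ", ".join(f"[{x}, {y}, 0]" for x, y in obj.path)
        lines += [
            f"        {obj.name} = {SHAPES[obj.shape]}.move_to([{x0}, {y0}, 0])",
            f"        {obj.name}_path = VMobject()",
            f"        {obj.name}_path.set_points_as_corners([{corners}])",
            f"        self.add({obj.name})",
            f"        self.play(MoveAlongPath({obj.name}, {obj.name}_path), run_time={run_time})",
        ]
    return "\n".join(lines) + "\n"
```

Writing the returned string to `scene.py` and running `manim -pql scene.py SketchScene` should render a low-quality preview, assuming Manim Community Edition is installed.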

Refinement as Dialogue: Shaping Motion with Precision

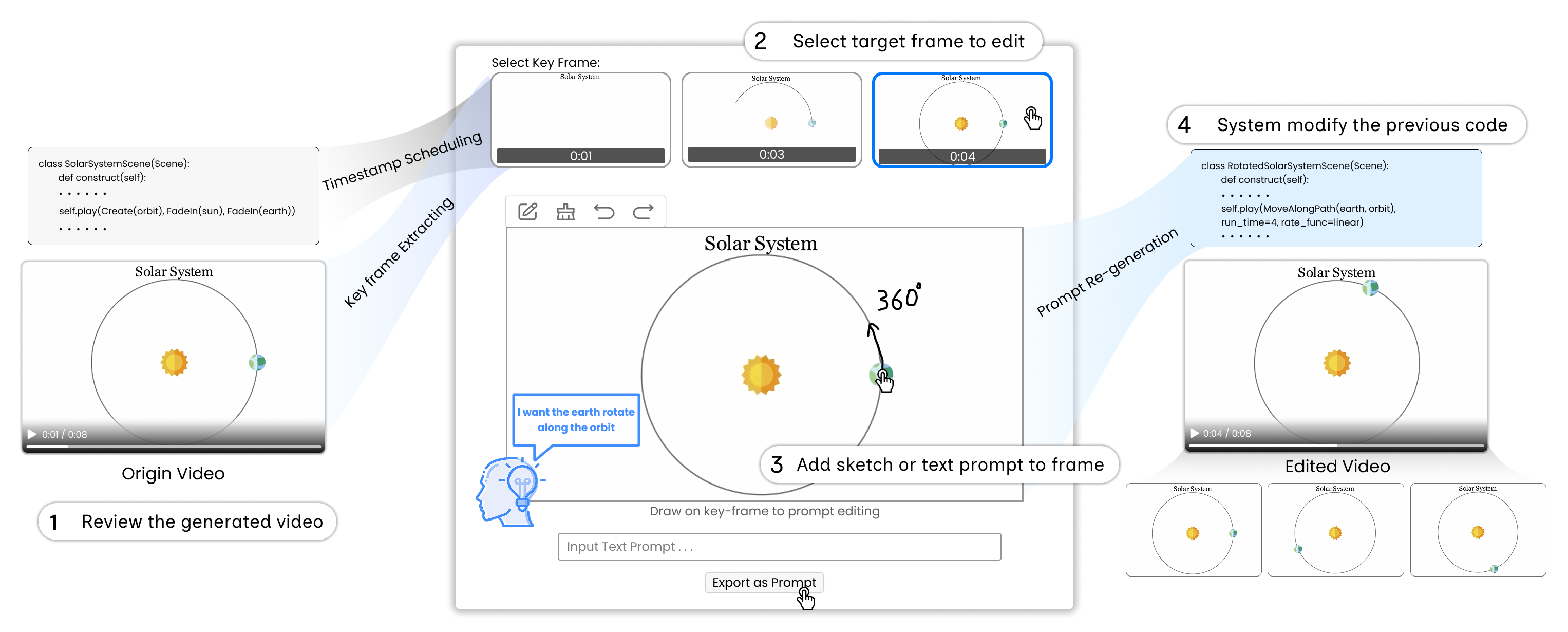

SketchDynamics enables iterative refinement of generated animations through the implementation of Refinement Cues. These cues provide users with direct access to edit the generated video output, allowing for precise adjustments to animation details such as timing, trajectory, and object interactions. This functionality moves beyond initial sketch-based generation by offering a feedback loop where users can actively shape the animation to meet their specific requirements. The system supports granular control, enabling alterations to individual frames or segments within the generated video, and facilitating a more tailored and accurate final product.
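As one hedged illustration of a timing refinement, suppose the generated animation is described as a list of contiguous segments. A user edit that changes one segment's duration must then shift every later segment. The `Segment` type and the `retime` function are hypothetical; the article states only that timing can be adjusted, not how the edit is represented.

```python
from dataclasses import dataclass, replace
from typing import List


@dataclass(frozen=True)
class Segment:
    label: str       # hypothetical segment name, e.g. "bounce"
    start: float     # seconds
    duration: float  # seconds


def retime(segments: List[Segment], label: str, new_duration: float) -> List[Segment]:
    """Apply a timing edit to one segment and shift all later segments so the
    timeline stays contiguous. Assumes segments are ordered and back to back."""
    if new_duration <= 0:
        raise ValueError("duration must be positive")
    if label not in {s.label for s in segments}:
        raise KeyError(label)
    out: List[Segment] = []
    t = segments[0].start
    for seg in segments:
        d = new_duration if seg.label == label else seg.duration
        # Build a new Segment instead of mutating the input (frozen dataclass).
        out.append(replace(seg, start=t, duration=d))
        t += d
    return out
```

Shortening a 2-second "bounce" to 0.5 seconds moves the following "exit" segment 1.5 seconds earlier while leaving the original list untouched.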

Keyframe Extraction within SketchDynamics identifies and isolates specific frames within a generated animation that represent significant poses or transitions. These extracted keyframes serve as discrete editing points, enabling users to directly manipulate the animation’s content at crucial moments rather than requiring adjustments to the entire video sequence. This targeted approach streamlines the refinement workflow by reducing the scope of editing required for each adjustment, and facilitates precise control over animation details. The system automatically identifies these keyframes based on motion and content changes, presenting them to the user as editable states.
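The article says keyframes are chosen from motion and content changes but does not give the rule. A minimal sketch under that uncertainty treats each frame as a flat list of grayscale pixel values. It starts a new keyframe whenever a frame differs from the previous keyframe by more than a threshold, measured as the mean absolute difference. The function names and the threshold are assumptions, and frame differencing is a swapped-in stand-in technique, not necessarily what SketchDynamics uses.

```python
from typing import List, Sequence


def frame_difference(a: Sequence[float], b: Sequence[float]) -> float:
    """Mean absolute per-pixel difference between two equally sized frames."""
    if len(a) != len(b):
        raise ValueError("frames must have the same size")
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def extract_keyframes(frames: List[Sequence[float]], threshold: float) -> List[int]:
    """Return indices of frames that differ from the last keyframe by more
    than threshold; the first and last frames are always included."""
    if not frames:
        return []
    keys = [0]  # the opening pose is always an editing point
    for i in range(1, len(frames)):
        if frame_difference(frames[keys[-1]], frames[i]) > threshold:
            keys.append(i)
    if keys[-1] != len(frames) - 1:
        keys.append(len(frames) - 1)  # keep the final pose editable too
    return keys
```

For example, `extract_keyframes([[0, 0], [0, 0.1], [1, 1]], threshold=0.2)` returns `[0, 2]`. The middle frame differs from the first by only 0.05 on average, so it is not treated as a new pose.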

The study reports that a three-stage iterative refinement workflow, using both clarification and refinement cues, improves how well the generated animations align with user intent. Ratings collected at each stage showed substantial increases in perceived alignment with the generated content, perceived control over the animation, and overall ease of use. Statistical analysis confirmed that these improvements were significant relative to generating animations from sketches alone, supporting the efficacy of the iterative refinement approach.

Unlocking Potential: A New Horizon for Creative Expression

SketchDynamics substantially lowers the technical skill required to produce compelling visuals. Traditionally, animation has demanded expertise in complex software and painstaking frame-by-frame work; this system instead translates simple hand-drawn sketches into animations with comparatively little manual effort. This lower barrier to entry lets people without formal training, from educators and hobbyists to designers and storytellers, rapidly prototype ideas and express themselves through animation. By abstracting away technical complexity, SketchDynamics shifts the focus from how to animate to what to animate, fostering a more inclusive and accessible creative environment.

SketchDynamics dramatically shortens the timeline from initial concept to tangible prototype, enabling a significantly more agile creative process. By rapidly translating rough sketches into functional animations, the system allows designers and artists to quickly test ideas, receive feedback, and iterate on designs without the time-consuming effort of traditional animation techniques. This accelerated experimentation isn’t simply about speed; it facilitates a deeper exploration of possibilities, encouraging creators to embrace failure as a learning opportunity and refine their vision through continuous improvement. The result is a dynamic workflow where innovation is fostered not through painstaking production, but through a rapid cycle of sketching, prototyping, and refinement, ultimately leading to more polished and impactful creations.

Ongoing development of SketchDynamics prioritizes a more nuanced comprehension of user input, moving beyond simple shape recognition to interpret intricate sketch details and artistic intent. Researchers aim to enable the system to handle increasingly complex drawings, including those with overlapping elements, varying line weights, and implied depth. Crucially, future iterations will focus on seamless integration with industry-standard creative software – such as Adobe Animate and Blender – allowing artists to incorporate SketchDynamics as a powerful tool within existing workflows, rather than a standalone application. This interoperability will unlock new possibilities for hybrid animation techniques, blending the immediacy of hand-drawn sketches with the precision and control of digital tools, ultimately broadening the scope of creative expression.

The pursuit of seamless human-AI collaboration, as detailed in SketchDynamics, acknowledges the inherent ephemerality of design. Systems, even those built upon advanced vision-language models, are not static endpoints but rather evolve within a continuous cycle of refinement. As Linus Torvalds observed, “Talk is cheap. Show me the code.” This sentiment resonates deeply with the research’s emphasis on iterative sketching and visual editing: tangible demonstrations of intent that surpass mere verbal descriptions. The interface isn’t about achieving a perfect, finished animation immediately, but facilitating a conversation where the system learns and adapts, mirroring the organic progression of any creative endeavor. Every architecture, including these AI models, lives a life, and SketchDynamics offers a glimpse into how we might witness, and guide, its evolution.

The Horizon Recedes

The pursuit of translating intention into motion is, at its core, a negotiation with entropy. This work, by attempting to externalize dynamic intent through sketch, doesn’t so much solve the problem of communication as delay its inevitable decay. Systems of representation, however elegant, are always approximations: a sketch is not the animation, merely a transient map. The clarity gained through iterative refinement and clarification cues is not a destination, but a temporary reprieve from ambiguity.

Future development will likely focus on the illusion of responsiveness – crafting algorithms that appear to understand nuance, even when operating on fundamentally incomplete data. The true challenge isn’t generating visually appealing motion, but managing the increasing complexity of the interface between human desire and computational execution. The system’s capacity for post-generation visual editing highlights a tacit acknowledgement: perfect translation is unlikely, and control will always remain, to some degree, an exercise in damage mitigation.

Ultimately, the field edges toward a question of sustainability. How much refinement can a system withstand before the cost of maintaining the illusion of understanding outweighs its benefits? Stability, it seems, is often simply a prolonged prelude to more complex failure. The horizon of perfect animation generation recedes with every step forward.

Original article: https://arxiv.org/pdf/2601.20622.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/


2026-01-29 15:03