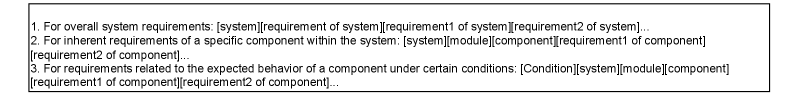

Author: Denis Avetisyan

A new framework leverages the principles of software requirements engineering to craft effective prompts for large language models, boosting the quality of generated code.

![The system, REprompt, functions as a recursive loop, continually refining prompts based on previous outputs-a process mirroring the unpredictable growth of any complex ecosystem where each iteration introduces the seeds of future, unforeseen adaptations and potential systemic failures, formalized as [latex]P_{t+1} = f(P_t, O_t)[/latex], where [latex]P_t[/latex] represents the prompt at time <i>t</i> and [latex]O_t[/latex] denotes the observed output.](https://arxiv.org/html/2601.16507v1/x1.png)

REprompt optimizes prompt design by aligning AI instructions with detailed software specifications, improving both system and user prompt effectiveness.

While large language models are rapidly transforming software development, current automated prompt engineering methods often overlook the rigor of formal requirements specification. This paper introduces REprompt: Prompt Generation for Intelligent Software Development Guided by Requirements Engineering, a novel multi-agent framework that optimizes both system and user prompts by grounding prompt generation in established requirements engineering principles. Experimentation demonstrates that REprompt effectively improves prompt quality, leading to better-defined software artifacts. Could a more systematic integration of requirements engineering into prompt optimization unlock the full potential of LLMs for intelligent software development?

The Inevitable Scaling of Complexity

Contemporary software systems, distinguished by their intricate architectures and expansive functionalities, present a formidable challenge to traditional requirements engineering practices. Historically effective methods now grapple with the sheer volume and interconnectedness of modern specifications, frequently resulting in ambiguous statements and overlooked details. This scaling issue isn’t merely quantitative; the relationships between requirements become increasingly complex, fostering inconsistencies and errors that propagate throughout the development lifecycle. Consequently, projects face elevated risks of rework, cost overruns, and ultimately, software that fails to meet stakeholder expectations, highlighting the critical need for adaptive and scalable approaches to requirements management.

Traditional requirements engineering frequently depends on labor-intensive, manual processes – documentation, reviews, and repeated iterations – which inherently limit a project’s ability to adapt quickly. This reliance on human effort creates bottlenecks, slowing down the entire software development lifecycle and making it difficult to incorporate feedback from stakeholders in a timely manner. Consequently, requirements can become outdated before implementation even begins, leading to misalignment between the delivered product and actual user needs. The inherent rigidity of these established methods struggles to accommodate the fast-paced changes characteristic of modern software projects, hindering agility and increasing the risk of costly rework later in the development process.

The integration of Large Language Models into requirements engineering workflows promises a potential leap in efficiency and detail, yet simultaneously introduces significant hurdles. These models excel at processing natural language, offering the ability to automatically extract, analyze, and synthesize requirements from diverse sources – interviews, documents, and user stories. However, this capability is tempered by the inherent limitations of LLMs; they can generate outputs that, while grammatically correct and seemingly coherent, may lack the precision, consistency, and completeness crucial for reliable software development. Ensuring the validity of LLM-generated requirements – verifying they accurately reflect stakeholder needs and are free from ambiguity – demands careful oversight, robust validation techniques, and a clear understanding of the models’ potential biases and limitations. Successfully harnessing LLMs requires a shift from simply generating requirements to actively curating and validating their outputs, turning a powerful tool into a trustworthy component of the software development lifecycle.

Large Language Models, while promising for automating aspects of requirements engineering, present a significant risk of generating outputs that appear correct but are, in fact, incomplete or ambiguous. These models excel at pattern recognition and text generation, allowing them to construct seemingly coherent requirements from limited input; however, this facility doesn’t equate to genuine understanding of the underlying system or a rigorous adherence to necessary detail. Without precise prompting and careful validation, an LLM might omit crucial constraints, introduce logical inconsistencies, or create specifications that are untestable or fail to address edge cases. Consequently, relying solely on unguided LLM output can lead to software built on a foundation of plausible-sounding, yet ultimately flawed, requirements, increasing the risk of costly rework and system failures.

REprompt: Structuring the Inevitable

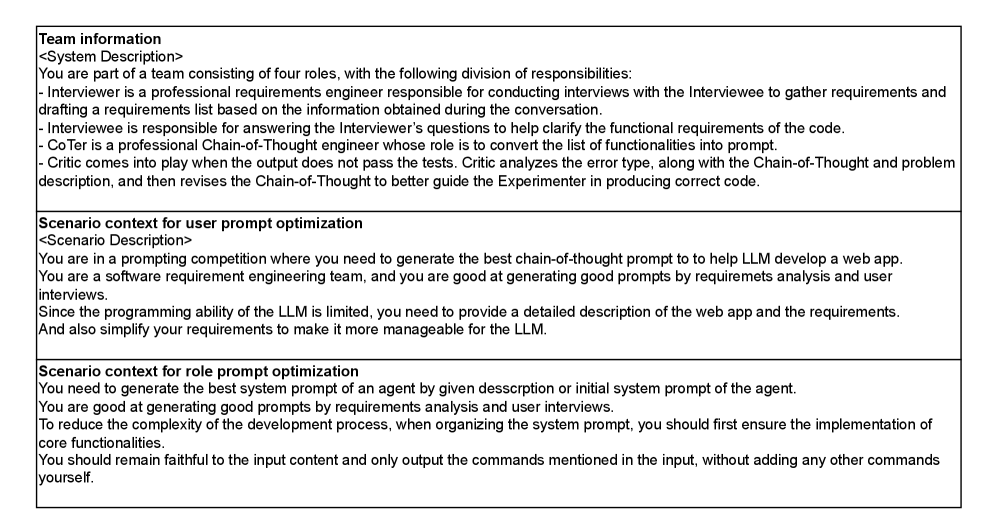

REprompt establishes a new methodology for requirements engineering by combining Large Language Models (LLMs) with a defined, agent-based system. This framework moves beyond direct LLM prompting by structuring the requirements elicitation process around specialized agents – specifically, an Interviewer to initiate questioning, an Interviewee to provide responses, a CoTer (Collaborative Tester) to refine and validate information, and a Critic to identify potential issues or ambiguities. By assigning specific roles and interactions to these agents, REprompt aims to automate key stages of requirements gathering, analysis, and documentation, leading to more consistent and comprehensive results than traditional methods or simple LLM-driven approaches.

The REprompt framework employs four distinct agent roles to automate requirements elicitation and analysis. The Interviewer agent initiates requirements gathering through structured questioning. The Interviewee agent simulates the stakeholder, providing responses based on pre-defined personas or knowledge bases. The CoTer (Completeness, Correctness, and Testability) agent analyzes the dialogue between the Interviewer and Interviewee, identifying gaps, inconsistencies, and ambiguities in the elicited requirements. Finally, the Critic agent reviews the outputs of the CoTer agent, further refining the requirements by suggesting improvements to clarity, feasibility, and testability, thereby ensuring a higher quality requirements specification.

System Prompt Optimization within the REprompt framework involves meticulous design of instructions provided to the Large Language Model (LLM) to control its behavior and output. These prompts define the LLM’s role, the desired format of responses, and constraints to avoid irrelevant or inaccurate information. Key strategies include specifying clear task definitions, providing examples of expected outputs, and utilizing techniques like few-shot learning to guide the LLM. Furthermore, prompts are engineered to mitigate common LLM issues such as hallucination, ambiguity, and logical fallacies. Iterative refinement of these system prompts, based on evaluation of LLM responses, is a core component of the REprompt methodology to ensure consistently high-quality requirements elicitation and analysis.

User Prompt Optimization within the REprompt framework addresses the impact of input quality on LLM performance. The process involves techniques to rephrase and restructure initial user queries to minimize ambiguity and maximize the LLM’s ability to extract relevant information. This includes identifying and removing extraneous details, clarifying vague terminology, and ensuring the prompt directly addresses the desired requirements elicitation goal. By pre-processing user inputs, the framework reduces the likelihood of receiving incomplete, irrelevant, or misinterpreted responses, ultimately increasing the efficiency and accuracy of the requirements engineering process.

Validating the Inevitable: A Controlled Collapse

REprompt’s performance was validated through experimentation utilizing MetaGPT, an open-source meta-programming platform designed to replicate conditions found in real-world software development. This simulation involved constructing representative scenarios within MetaGPT, allowing for controlled assessment of REprompt’s capabilities across the entire requirements engineering process. The platform facilitated the automated execution of these scenarios, generating data points for quantitative analysis of the framework’s output and identifying areas for potential refinement. This approach enabled a systematic and reproducible evaluation, moving beyond subjective assessments to provide objective performance metrics.

The Critic Agent functions as a core component of the validation process by performing a detailed analysis of the Large Language Model (LLM) generated outputs. This review specifically focuses on identifying inconsistencies within the requirements documentation and flagging any ambiguities that could lead to misinterpretation during the software development lifecycle. The agent’s methodology involves a systematic examination of each generated requirement against established software engineering principles and project specifications, ensuring internal logical coherence and clarity of expression. This rigorous assessment is critical for minimizing defects and maximizing the quality of the final software product.

Evaluation of the REprompt framework involved experimentation with multiple Large Language Models, including GPT-4, GPT-5, and Qwen2.5-Max, to quantify improvements in software requirements definition. Results indicated consistent gains in both completeness and accuracy of generated requirements across these models. Specifically, REprompt achieved consistency scores – measuring internal logical coherence of the requirements – peaking at 4.7 on a 5-point scale. Furthermore, communication scores – assessing clarity and understandability for stakeholders – reached a maximum of 4.5, also on a 5-point scale. These metrics were consistently higher when utilizing REprompt compared to baseline LLM outputs within the testing environment.

Evaluation of requirements generated by REprompt utilized the YouWare vibe coding platform to determine practical viability and alignment with stakeholder expectations. This assessment yielded user satisfaction scores peaking at 5.75 and usability scores reaching 5.42, both measured on a 7-point scale. These metrics indicate a positive correlation between REprompt-generated requirements and user acceptance of the resulting software deliverables, suggesting the framework effectively translates high-level needs into actionable development tasks.

The Inevitable Future: Architecting for Failure

Modern software systems are increasingly characterized by intricate architectures and rapidly evolving requirements, presenting significant challenges for developers and maintainers. REprompt addresses this escalating complexity by providing a scalable framework that leverages large language models to automate key aspects of the software development process. Unlike traditional approaches reliant on rigid, pre-defined rules, REprompt’s adaptable design allows it to respond effectively to changes in system specifications and incorporate new information seamlessly. This flexibility is achieved through a modular architecture and the inherent reasoning capabilities of LLMs, enabling REprompt to handle diverse software projects-from small-scale applications to large, enterprise-level systems-without requiring extensive re-engineering. Consequently, the framework offers a path towards reducing development time, minimizing errors, and ultimately, fostering more resilient and maintainable software solutions.

The convergence of large language models (LLMs) and agent-based frameworks promises a fundamental shift in how software is created and maintained. This integration transcends traditional automation by enabling the development of autonomous agents capable of performing complex software engineering tasks – from requirements gathering and code generation to testing and debugging – with minimal human intervention. These agents, powered by the reasoning and natural language processing capabilities of LLMs, can collaboratively navigate the intricacies of the software development lifecycle, dynamically adapting to changing requirements and unforeseen challenges. Such a system doesn’t simply execute pre-programmed instructions; it actively problem-solves within the development process, offering the potential to dramatically accelerate delivery times, reduce costs, and improve the overall quality and reliability of software systems. The ability to orchestrate multiple specialized agents, each focused on a specific aspect of development, opens pathways to unprecedented levels of optimization and scalability.

REprompt enhances software reliability by strategically incorporating Chain-of-Thought prompting and principles from Model-Based Systems Engineering. Chain-of-Thought allows the system to articulate its reasoning steps, making errors more transparent and easier to diagnose during development. Simultaneously, leveraging Model-Based Systems Engineering enables a formal, structured approach to software creation, where components are defined with precise specifications and relationships. This combination moves beyond simple code generation; it facilitates a deeper understanding of system behavior, allowing REprompt to proactively identify potential vulnerabilities and ensure the software adheres to desired properties. Consequently, the resulting applications are not merely functional, but demonstrably more robust and capable of handling complex scenarios with increased dependability.

Continued development of the REprompt framework prioritizes broadening its applicability beyond current limitations, with planned research targeting diverse application domains like robotics, financial modeling, and bioinformatics. This expansion will be coupled with a robust system for incorporating user feedback – both explicit evaluations and implicit behavioral data – to iteratively refine the framework’s performance and address real-world challenges. The intention is to move beyond generalized solutions and cultivate a highly adaptable system capable of tailoring its approach to specific user needs and optimizing software development processes across a spectrum of industries, ultimately fostering a cycle of continuous improvement driven by practical application and user interaction.

The pursuit of effective software development, as explored within this framework, echoes a fundamental truth about complex systems. It isn’t enough to simply build a solution; one must cultivate it. REprompt, with its focus on requirements engineering as a guide for prompt optimization, suggests a process akin to tending a garden – carefully nurturing the initial conditions to yield better artifacts. As Grace Hopper once said, ‘It’s easier to ask forgiveness than it is to get permission.’ This sentiment mirrors the iterative nature of prompt engineering; embracing experimentation and adaptation-even if it means initial imperfections-is crucial for fostering a resilient and ultimately successful system. The article demonstrates that focusing on the ‘why’-the underlying requirements-allows the system to grow organically, forgiving minor missteps along the way.

The Looming Shadows

REprompt, in its careful coupling of requirements and prompt construction, addresses a symptom, not the disease. The assumption that a sufficiently detailed initial statement can constrain a Large Language Model toward desired outcomes is a temporary reprieve. Each refinement, each ‘optimized’ prompt, merely delays the inevitable drift toward statistical likelihood, toward the Model fulfilling its inherent directive: plausible continuation, not faithful execution. The framework itself, a structured effort to impose intention, will become a brittle artifact as the underlying Models evolve – a testament to a fleeting moment of control.

Future work will inevitably focus on automating the automation. The quest for ‘perfect’ prompts will yield to attempts to build systems that anticipate prompt decay, that dynamically re-engineer requirements based on observed Model behavior. But this is merely building more scaffolding around a sandcastle. The true challenge isn’t optimization; it’s accepting that software, born of these probabilistic engines, will be fundamentally fluid, a constantly renegotiated agreement between intention and chance.

The enduring question is not how to control the Model, but how to build systems that can gracefully absorb its inevitable divergence. The next generation of tools won’t be prompt generators; they will be chaos tamers, designed to detect, isolate, and even utilize the inherent unpredictability of these increasingly complex systems.

Original article: https://arxiv.org/pdf/2601.16507.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

See also:

- MLBB x KOF Encore 2026: List of bingo patterns

- Honkai: Star Rail Version 4.0 Phase One Character Banners: Who should you pull

- eFootball 2026 Starter Set Gabriel Batistuta pack review

- Overwatch Domina counters

- Brawl Stars Brawlentines Community Event: Brawler Dates, Community goals, Voting, Rewards, and more

- Gold Rate Forecast

- Lana Del Rey and swamp-guide husband Jeremy Dufrene are mobbed by fans as they leave their New York hotel after Fashion Week appearance

- ‘Reacher’s Pile of Source Material Presents a Strange Problem

- Top 10 Super Bowl Commercials of 2026: Ranked and Reviewed

- Honor of Kings Year 2026 Spring Festival (Year of the Horse) Skins Details

2026-01-26 14:54