Author: Denis Avetisyan

Researchers have developed a new method to bridge the gap between human dexterity and robotic manipulation by effectively translating tactile information across different sensor types.

TactAlign enables robust policy transfer from human demonstrations to robots via cross-sensor alignment of tactile data in a learned latent space.

Leveraging human demonstrations for robotic manipulation is often hampered by the significant differences in sensing modalities between people and robots. The paper "TactAlign: Human-to-Robot Policy Transfer via Tactile Alignment" introduces an approach that bridges this gap by transferring human tactile signals to robots with a different embodiment. TactAlign achieves this through a rectified flow that maps both human and robot tactile observations into a shared latent space, eliminating the need for paired datasets or manual labeling. By enabling cross-sensor alignment, can this method unlock truly generalizable and scalable human-to-robot learning for increasingly complex dexterous tasks?

Decoding the Sensory Gap: The Robot’s Struggle to Feel

Effective robotic manipulation frequently depends on a robot’s ability to “feel” its environment through tactile sensing, yet a fundamental challenge lies in translating information gathered from diverse sensor types. These sensors, which might include force/torque sensors, vision systems, and tactile arrays, each provide unique data streams that are often incompatible. Establishing a reliable bridge between these disparate inputs proves difficult, as the data formats, coordinate systems, and even the underlying physical principles differ considerably. This incompatibility prevents a cohesive understanding of the manipulation task and restricts the robot’s capacity to integrate sensory information for robust and adaptable control, essentially hindering the creation of a unified “sense of touch” for the robot.

A core difficulty in teaching robots through human demonstration lies in the inherent inconsistencies of those demonstrations and a critical data scarcity. Human movements, even when repeating the same action, exhibit natural variations in speed, force, and trajectory; capturing and translating this variability into robotic control is a substantial challenge. Compounding this issue is the lack of simultaneously recorded data from diverse sensor types – a robot might receive visual input and force feedback, but rarely are these streams precisely synchronized and paired during a human teaching session. This absence of aligned multi-sensor data severely limits the robot’s ability to correlate different sensory inputs and construct a robust understanding of the demonstrated task, hindering its capacity to adapt to new situations or generalize beyond the specific examples it has been shown.

A significant obstacle to effective robotic learning lies in the difficulty of transferring knowledge gained from human instruction to novel situations. Current robotic systems, even those equipped with sophisticated tactile sensors, struggle to extrapolate beyond the specific parameters of a demonstrated task; a robot expertly guided through one instance may falter when presented with even slight variations. This inflexibility stems from a reliance on precisely matched sensor data during the learning process, meaning the robot doesn’t inherently understand how to adapt its actions when encountering unforeseen circumstances. Consequently, robots often require extensive retraining for each new scenario, hindering their potential for truly versatile manipulation and limiting their usefulness in dynamic, real-world environments where adaptability is paramount.

![A human demonstration dataset was collected using the OSMO [45] tactile glove, enabling full dexterity and capturing both shear and normal tactile signals.](https://arxiv.org/html/2602.13579v1/x9.png)

TactAlign: Bridging the Sensory Divide

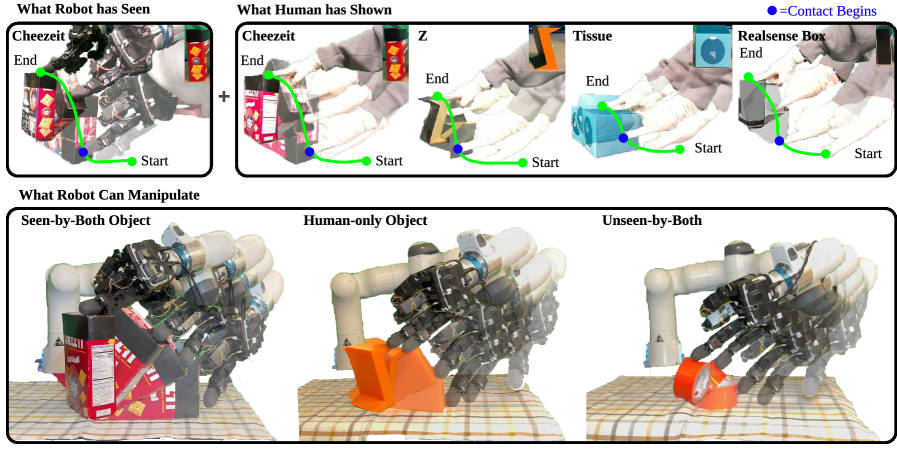

TactAlign addresses the challenge of transferring tactile data learned from human demonstrations to robotic systems when direct sensor correspondence is unavailable. This method enables knowledge transfer from unpaired demonstrations by aligning tactile data across different sensors, even without pre-defined mappings between them. The system achieves this by learning a representation that minimizes the discrepancy between tactile inputs from a human and a robot, effectively bridging the gap caused by differing sensor characteristics and mounting configurations. This allows a robot to leverage human demonstrations for tasks such as manipulation and assembly, even when the robot’s tactile sensors are not identical to those used by the human demonstrator.

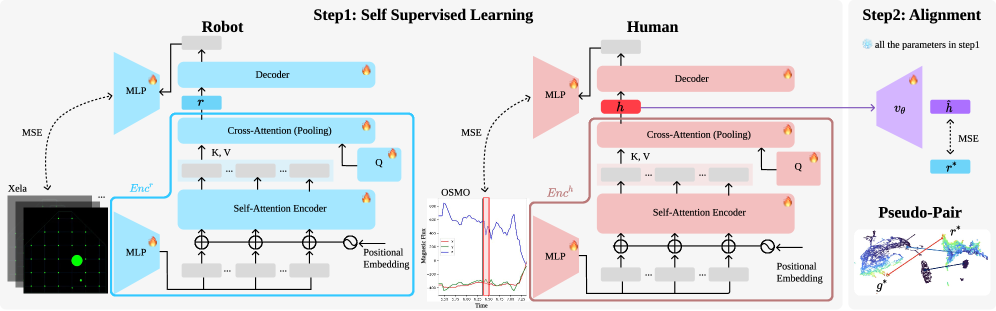

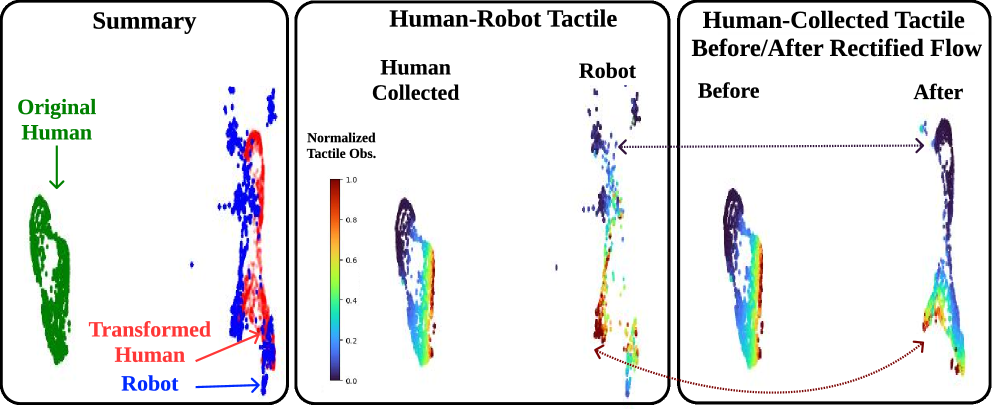

Rectified flow, the central component of TactAlign, establishes a learned correspondence between tactile data originating from human and robotic sensors. A rectified flow learns a velocity field that transports samples from one distribution toward another along nearly straight paths, and TactAlign uses it to bring both datasets into a shared latent space. By learning this latent mapping, the system can relate tactile sensations experienced by a human demonstrator to the corresponding sensor readings from a robot. The model is designed to minimize the distributional divergence between the human and robot tactile data within this latent space, enabling cross-sensor knowledge transfer despite the inherent differences in sensor characteristics and data acquisition methods. This approach circumvents the need for precisely synchronized or geometrically aligned sensor data, a common limitation in traditional tactile learning systems.
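
The core rectified-flow recipe can be sketched on toy data. The following is a minimal illustration, not the paper's implementation: the 1-D Gaussian "latents", the human-to-robot transport direction, the random coupling of samples, and the tiny affine velocity model `v(x) = a * x + c` are all assumptions chosen so the example runs with the Python standard library alone. A real system would presumably use learned encoders and a neural velocity network.

```python
import random
import statistics

random.seed(0)

# Toy 1-D "latents": assumed stand-ins for human and robot tactile features.
human = [random.gauss(0.0, 1.0) for _ in range(2000)]
robot = [random.gauss(3.0, 1.0) for _ in range(2000)]


def make_training_pairs(source, target):
    """Build (x_t, velocity target) pairs from randomly coupled samples."""
    inputs, targets = [], []
    for x0 in source:
        x1 = random.choice(target)               # random coupling, no paired data
        t = random.random()                      # time in [0, 1)
        inputs.append((1.0 - t) * x0 + t * x1)   # point on the straight path
        targets.append(x1 - x0)                  # constant velocity of that path
    return inputs, targets


def fit_affine_velocity(inputs, targets):
    """Closed-form least-squares fit of v(x) = a * x + c."""
    mean_x = statistics.fmean(inputs)
    mean_y = statistics.fmean(targets)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(inputs, targets))
    var = sum((x - mean_x) ** 2 for x in inputs)
    a = cov / var
    c = mean_y - a * mean_x
    return a, c


def transport(x, a, c, steps=20):
    """Euler-integrate dx/dt = a * x + c from t = 0 to t = 1."""
    dt = 1.0 / steps
    for _ in range(steps):
        x += dt * (a * x + c)
    return x


inputs, targets = make_training_pairs(human, robot)
a, c = fit_affine_velocity(inputs, targets)
aligned = [transport(x, a, c) for x in human]

gap_before = abs(statistics.fmean(human) - statistics.fmean(robot))
gap_after = abs(statistics.fmean(aligned) - statistics.fmean(robot))
print(f"mean gap before: {gap_before:.2f}, after: {gap_after:.2f}")
```

The regression target `x1 - x0` is what makes the flow "rectified": every training path is a straight line, so a few Euler steps are enough to carry a sample from one distribution to the other.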

Pseudo-Pairs are artificially created data points used to train the TactAlign system when direct correspondence between human and robot tactile sensor readings is unavailable. These pairs are generated by identifying the closest human tactile data sample to a given robot tactile data sample within a learned latent space. This process effectively establishes a temporary, albeit synthetic, alignment that allows the rectified flow network to learn the underlying mapping between the two sensor modalities. The generation of Pseudo-Pairs circumvents the need for expensive and time-consuming synchronized data collection from both human demonstrators and robotic systems, enabling knowledge transfer even with unpaired datasets.
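
The nearest-neighbor step described above can be sketched in a few lines. This is an illustrative sketch only: the function names, the use of Euclidean distance, and the hand-written 2-D latent vectors are assumptions, since the article does not specify the distance metric or the latent dimensionality.

```python
import math


def nearest_human_index(robot_latent, human_latents):
    """Index of the human latent closest (Euclidean) to one robot latent."""
    return min(
        range(len(human_latents)),
        key=lambda i: math.dist(robot_latent, human_latents[i]),
    )


def build_pseudo_pairs(robot_latents, human_latents):
    """Pair every robot latent with its nearest human latent."""
    return [
        (r, human_latents[nearest_human_index(r, human_latents)])
        for r in robot_latents
    ]


# Hypothetical latent vectors, already encoded into a common space.
human_latents = [(0.0, 0.0), (1.0, 1.0), (4.0, 0.5)]
robot_latents = [(0.9, 1.2), (3.5, 0.0)]

pairs = build_pseudo_pairs(robot_latents, human_latents)
for robot_vec, human_vec in pairs:
    print(robot_vec, "->", human_vec)
```

A brute-force search like this costs one distance computation per robot-human combination; for larger datasets an approximate nearest-neighbor index would be the usual substitute.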

Proof of Concept: Demonstrating Robustness Across Tasks

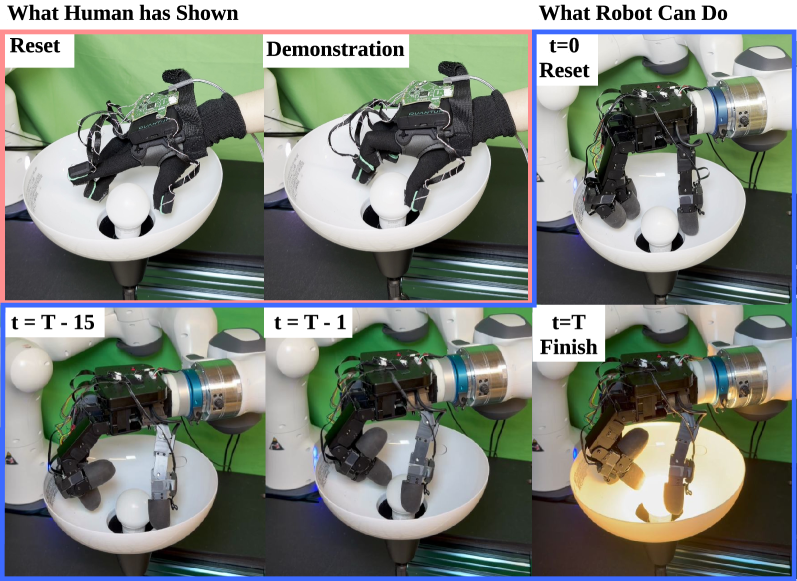

TactAlign was evaluated across a diverse set of robotic manipulation tasks to assess its generalizability. These tasks included fundamental skills like ‘Insertion’ and ‘Pivoting’, intermediate complexity actions such as ‘Lid Closing’, and a high-difficulty task – ‘Light Bulb Screwing’ – requiring precise force control and dexterity. This suite of tasks was selected to represent a range of challenges commonly encountered in robotic manipulation and to provide a comprehensive assessment of TactAlign’s performance beyond any single, specialized skill.

TactAlign demonstrably improves the success rate of Human-to-Robot (H2R) co-training. Quantitative results indicate a +59% improvement in H2R co-training success when utilizing TactAlign compared to training without tactile input. Furthermore, the implementation of TactAlign yields a +51% improvement in success rate when contrasted with H2R co-training performed without data alignment. These gains indicate that incorporating tactile data, specifically when aligned using the proposed method, significantly enhances the effectiveness of the co-training process.

Evaluation of TactAlign on the ‘Light Bulb Screwing’ task demonstrated a 100% improvement in success rate when compared to policies trained without tactile input. This indicates a complete transition from failure to success with the implementation of TactAlign for this specific, complex manipulation task. The improvement was measured by assessing the number of successful task completions with and without the integration of tactile data and alignment techniques.
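
Because "improvement" can mean either a percentage-point change or a relative change, a short sketch may help separate the two readings. The helper names and the 25% and 75% success rates are illustrative assumptions, not values from the paper; the 0%-to-100% case simply encodes the "complete transition from failure to success" described above.

```python
from typing import Optional


def percentage_point_gain(baseline: float, new: float) -> float:
    """Absolute change in success rate, in percentage points."""
    return (new - baseline) * 100.0


def relative_gain(baseline: float, new: float) -> Optional[float]:
    """Relative change in percent; undefined (None) when the baseline is zero."""
    if baseline == 0:
        return None
    return (new - baseline) / baseline * 100.0


# Failure-to-success case: 0% success without tactile input, 100% with it.
failure_to_success_pp = percentage_point_gain(0.0, 1.0)
failure_to_success_rel = relative_gain(0.0, 1.0)

# Hypothetical contrast case showing how far the two readings can diverge.
example_pp = percentage_point_gain(0.25, 0.75)
example_rel = relative_gain(0.25, 0.75)

print(failure_to_success_pp, failure_to_success_rel, example_pp, example_rel)
```

When the baseline is zero, only the percentage-point reading is well defined, which is the sense in which a move from complete failure to complete success amounts to a 100-point gain.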

The tactile alignment method reduced the Earth Mover’s Distance (EMD) between the two data distributions by 78% after alignment. EMD quantifies the dissimilarity between two probability distributions; a lower EMD value indicates a greater degree of correspondence. This substantial decrease shows that the alignment process narrowed the distributional gap between the human and robot tactile data, which in turn suggests improved generalization and transfer learning capabilities and more effective co-training and policy transfer.
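
To make the metric concrete, here is a minimal sketch of EMD for the simplest case: two equally sized sets of 1-D samples, where the distance reduces to the mean absolute difference between the sorted values. The sample values are invented for illustration and do not come from the paper, and real tactile features are multi-dimensional, which would require a general optimal-transport solver instead of this shortcut.

```python
def emd_1d(samples_a, samples_b):
    """Earth Mover's Distance between two equal-size 1-D empirical samples."""
    if len(samples_a) != len(samples_b):
        raise ValueError("this shortcut assumes equally sized samples")
    # In 1-D the optimal transport plan matches sorted values in order.
    matched = zip(sorted(samples_a), sorted(samples_b))
    return sum(abs(x - y) for x, y in matched) / len(samples_a)


# Invented toy features: robot readings, and human readings before and after
# a hypothetical alignment step.
robot = [0.0, 1.0, 2.0, 3.0]
human_raw = [5.0, 6.0, 7.0, 8.0]
human_aligned = [1.0, 2.0, 3.0, 4.0]

before = emd_1d(robot, human_raw)
after = emd_1d(robot, human_aligned)
reduction = (before - after) / before

print(f"EMD before: {before}, after: {after}, reduction: {reduction:.0%}")
```

A relative reduction computed this way is the kind of quantity the reported 78% figure refers to: the remaining distance after alignment, expressed as a fraction of the distance before it.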

Beyond Mimicry: Towards True Sensory Integration

The capacity for robots to interact with the physical world hinges on robust tactile perception, and recent work has focused on bolstering this ability through self-supervised learning. Researchers pretrained a ‘Tactile Encoder’ – a neural network designed to interpret tactile sensor data – using a technique where the system learns from unlabeled data by predicting inherent properties of the tactile input itself. This approach sidesteps the need for extensive human-labeled datasets, a significant bottleneck in robotics. By exposing the encoder to a wealth of raw tactile information and tasking it with reconstructing or predicting elements within that data, the network develops a more nuanced understanding of texture, shape, and force. Consequently, the pretrained encoder exhibits improved feature extraction capabilities, enabling more accurate and efficient tactile perception when applied to downstream tasks like object recognition and manipulation.

The acquisition of robust tactile perception relies heavily on the quality of the training data, and this research used the OSMO Tactile Glove and Xela Sensor to collect high-fidelity data from both human subjects and robotic platforms. These sensors, chosen for their sensitivity and precision, enabled the capture of nuanced tactile information, including pressure distribution, texture, and slippage, that is crucial for building accurate models of touch. By gathering data from both humans and robots, without requiring the two to be recorded in sync, the study created a diverse dataset that accounted for variations in grasping strategies and sensor characteristics, ultimately enhancing the generalizability and robustness of the developed tactile perception systems. This dual approach not only broadened the scope of learning but also allowed for valuable cross-validation between human and robotic performance.

Co-training offered a novel approach to bolstering tactile perception by strategically leveraging data from both human and robotic sources. This technique involved training separate models – one on human-collected data from the OSMO Tactile Glove and Xela Sensor, and another on robot-generated data – and then having each model teach the other. The process iteratively refined both models’ abilities, as each learned from the other’s strengths and compensated for its own weaknesses, resulting in a significantly accelerated learning curve and demonstrably improved performance compared to training on a single dataset. This synergy not only enhanced the robustness of the tactile perception system but also highlighted the potential for cross-domain learning between humans and robots in complex sensory tasks.

The research detailed in ‘TactAlign’ embodies a fundamental principle: understanding arises from controlled disruption. The paper doesn’t simply accept the limitations of differing robotic sensors; it actively seeks to bridge the gap through latent space mapping and rectified flow, essentially reverse-engineering the sensing process. This mirrors a core tenet of knowledge acquisition: one must dismantle the established system to truly comprehend its workings. As John Maynard Keynes observed, “The difficulty lies not so much in developing new ideas as in escaping from old ones.” TactAlign demonstrates this perfectly, challenging the assumption that direct sensor correspondence is necessary for successful human-to-robot transfer, and instead forging a path through abstract alignment. The very act of cross-sensor alignment is a deliberate ‘break’ from conventional methodologies, yielding improved dexterous manipulation.

Pushing Beyond the Surface

The presented work, while demonstrating a functional bridge between human tactile experience and robotic systems, implicitly highlights the vast gulf that remains. TactAlign establishes a mapping – a translation, if one will – but translation always involves loss. The crucial question isn’t simply whether the robot responds to tactile input, but whether it genuinely understands it – a distinction rarely addressed in robotics. Future efforts must move beyond correlating sensor data and begin to model the underlying physics of contact, the nuances of material properties, and the inherent uncertainty in real-world interaction.

One wonders if the current focus on latent space alignment isn’t a sophisticated form of hand-waving. Aligning data is useful, but it sidesteps the problem of representation. What is actually being aligned? Is it a true equivalence of sensory experience, or merely a statistical correlation? A more rigorous approach would involve actively breaking the system – introducing perturbations, varying materials, and deliberately creating failure cases – to reveal the limits of the learned mapping and expose the assumptions embedded within it.

Ultimately, the true test of this line of inquiry won’t be in achieving smoother robotic manipulation, but in building systems capable of predicting their own sensory experience. If a robot cannot anticipate the consequences of its actions, it remains, at its core, a complex automaton. The pursuit of genuine tactile intelligence demands a willingness to dismantle established paradigms and embrace the inherent messiness of the physical world.

Original article: https://arxiv.org/pdf/2602.13579.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-17 23:44