Author: Denis Avetisyan

Researchers have released a comprehensive dataset designed to accelerate the development of learning-based control systems for challenging, high-speed robots.

This dataset provides high-fidelity data from a Mini Wheelbot robot to benchmark and improve dynamics learning, reinforcement learning, and nonlinear control algorithms.

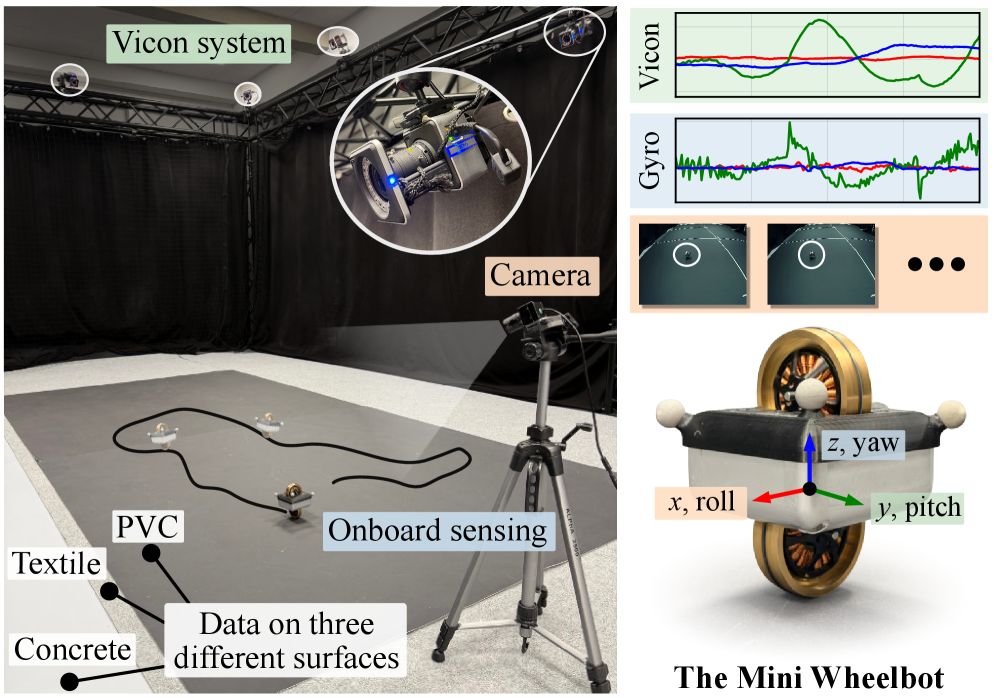

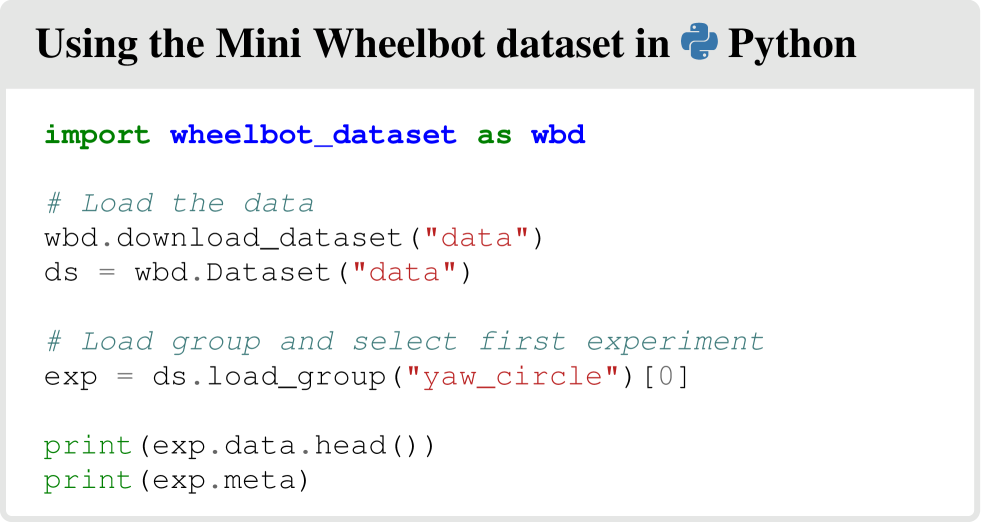

Developing robust learning algorithms for dynamic robots is often hampered by limited access to comprehensive, real-world data. This paper addresses this challenge by introducing the Mini Wheelbot Dataset, a high-fidelity collection of synchronized sensor readings, motion capture data, and video logs from an open-source, balancing reaction wheel unicycle. The dataset facilitates benchmarking of control, state estimation, and dynamics learning algorithms on a fast, unstable, and nonlinear robotic system, featuring experiments across diverse surfaces and control paradigms. Will this resource accelerate progress in learning-based robotic control and enable more adaptable and resilient autonomous systems?

Understanding Dynamics: A Foundation for Reliable Robotics

The development of truly reliable robotic systems hinges on a deep understanding of dynamics – how forces and motion interact to govern a robot’s behavior. Accurate modeling and prediction of these dynamic interactions are not merely academic exercises; they are fundamental to achieving stable locomotion, precise manipulation, and robust responses to unpredictable environments. Without a predictive capability, robots struggle to maintain balance, execute complex movements, or adapt to changing conditions, leading to failures and limitations in real-world applications. Consequently, significant research effort is devoted to refining dynamic models and developing control algorithms that can effectively leverage these models, ultimately paving the way for more capable and dependable robotic technologies.

The Mini Wheelbot presents a compelling solution for researchers seeking reliable dynamics data, achieving this through a carefully considered balance of simplicity and accessibility. Unlike many complex robotic systems, the Mini Wheelbot’s quasi-symmetric, balancing design minimizes extraneous variables, allowing for focused data collection on core dynamic principles. This streamlined approach significantly eases the process of model identification and validation. Crucially, the robot’s open-source nature fosters collaboration and reproducibility; researchers can freely access, modify, and extend the platform, sharing improvements and ensuring the robustness of findings. This combination of a manageable physical system and an open design paradigm positions the Mini Wheelbot as an invaluable tool for advancing the field of robotic dynamics and control.

The Mini Wheelbot’s computational core is a Raspberry Pi Compute Module 4 (CM4), a compact system-on-module that handles both data acquisition and real-time control. This onboard processing capability is crucial: it allows the robot to log sensor data (such as wheel velocities, inertial measurement unit readings, and motor currents) at high frequencies and with minimal latency. Furthermore, the CM4 supports the implementation and testing of diverse control algorithms, from simple proportional-integral-derivative (PID) controllers to more sophisticated model predictive control schemes. This flexibility lets researchers rapidly prototype and evaluate different control strategies directly on the physical robot. Because both the hardware and the software stack are open source, results are easier to reproduce and the community can contribute improvements.

Data Acquisition: Establishing Ground Truth Through Controlled Excitation

During data collection, the Mini Wheelbot was driven by a linear state-feedback controller in conjunction with pseudo-random binary signals (PRBS). PRBS were selected because they provide broadband excitation, so the robot visits a diverse set of operating states rather than hovering near one equilibrium. The state-feedback controller kept the robot balanced while the PRBS input induced variations in velocity and direction, so the recorded response covers much of the system’s operating envelope. This coverage is what makes the data useful for learning and identifying the robot’s dynamics.
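The excitation scheme described above can be sketched in a few lines. This is a minimal illustration, not the authors' implementation: it assumes a PRBS7 generator (polynomial x^7 + x^6 + 1), a single scalar input, and a probe signal added to the feedback term. The gain `K`, the amplitude, and the hold time are hypothetical placeholders.

```python
def prbs7_bits(n_bits, state=0x7F):
    """Generate bits from a 7-bit LFSR (x^7 + x^6 + 1); the sequence repeats every 127 bits."""
    bits = []
    for _ in range(n_bits):
        new_bit = ((state >> 6) ^ (state >> 5)) & 1
        state = ((state << 1) | new_bit) & 0x7F
        bits.append(new_bit)
    return bits


def excitation(bits, amplitude, hold):
    """Map bits {0, 1} to levels {-amplitude, +amplitude}, holding each level for `hold` samples."""
    return [amplitude * (2 * b - 1) for b in bits for _ in range(hold)]


def control(K, x, probe):
    """Linear state feedback plus additive probe signal: u = -K x + probe (assumed structure)."""
    return -sum(k * xi for k, xi in zip(K, x)) + probe


# Hypothetical usage: two-state system, gain and amplitude chosen arbitrarily.
probe_signal = excitation(prbs7_bits(127), amplitude=0.5, hold=20)
u0 = control(K=[1.0, 2.0], x=[0.05, 0.0], probe=probe_signal[0])
```

The hold time sets how much of the signal's energy sits at low frequencies, so in practice it would be chosen relative to the robot's dominant time constants.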

A Motion Capture System was utilized to provide precise ground truth data regarding the Mini Wheelbot’s pose during experimentation. This system tracked the robot’s position and orientation in three-dimensional space, generating a reference trajectory independent of the onboard sensors. The high accuracy of the Motion Capture System is essential for objectively evaluating the performance of the robot’s state estimation algorithms, allowing for quantitative comparison between estimated and actual states. This data serves as the benchmark against which the efficacy of various estimation techniques can be rigorously assessed and validated.
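One common way to make this comparison quantitative is a root-mean-square error between the onboard estimate and the motion capture reference. The sketch below assumes the two signals are already time-aligned and sampled at the same instants; the function names are illustrative and not part of the dataset's tooling. Angular quantities such as yaw need their error wrapped so that a jump across ±π is not counted as a large error.

```python
import math


def wrap_angle(a):
    """Wrap an angle in radians to the interval [-pi, pi)."""
    return (a + math.pi) % (2 * math.pi) - math.pi


def rmse(estimates, ground_truth, angular=False):
    """Root-mean-square error between two equally long, time-aligned sequences."""
    if len(estimates) != len(ground_truth) or not estimates:
        raise ValueError("sequences must be non-empty and of equal length")
    errors = [e - g for e, g in zip(estimates, ground_truth)]
    if angular:
        errors = [wrap_angle(err) for err in errors]
    return math.sqrt(sum(err * err for err in errors) / len(errors))


# Hypothetical yaw samples (radians) straddling the +/- pi boundary.
yaw_estimate = [3.10, 3.12, -3.13]
yaw_mocap = [3.11, 3.13, -3.14]
print(rmse(yaw_estimate, yaw_mocap, angular=True))
```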

The resulting dataset comprises 11 GB of data, representing 13 million state transitions recorded from the Mini Wheelbot during excitation via a Pseudo-Random Binary Signal. Data was logged at a sampling rate of 1 kHz, capturing sensor readings, state estimations derived from the onboard controller, and ground truth pose measurements obtained from the Motion Capture System. This comprehensive dataset serves as the primary resource for identifying and modeling the robot’s dynamic behavior, facilitating the development and validation of control and estimation algorithms.

From Data to Prediction: Modeling Dynamics for Anticipatory Control

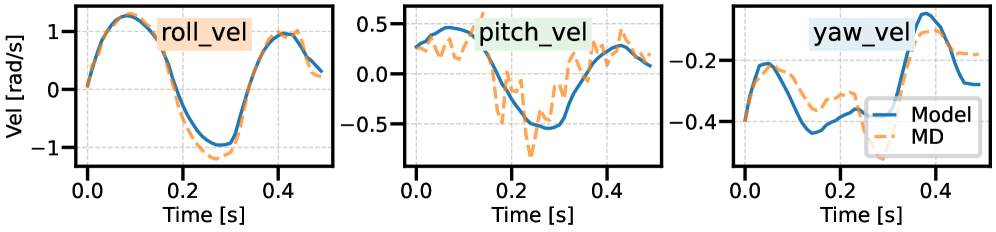

Dynamics learning was performed on a dataset of 13 million state transitions recorded from robot operation. The goal is a predictive model that takes the robot’s current state and the applied action as inputs and returns the subsequent state. Because the model is fitted to observed data, it can capture the relationships governing the robot’s behavior without requiring an explicit physical model.

The dynamics learning process utilized a Multi-Layer Perceptron (MLP) to predict future robot states based on current state and action inputs. This MLP functions as a regression model, mapping the high-dimensional state and action space to a predicted subsequent state. To manage computational demands and accelerate training, the original dataset of state transitions was sub-sampled to 100 Hz, effectively reducing the data volume while maintaining sufficient temporal resolution for accurate dynamics modeling. This downsampling involved selecting one state transition every 10 milliseconds, resulting in a reduced dataset suitable for efficient MLP training and subsequent prediction.
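The data preparation and model interface described here might look roughly as follows. This is a sketch under stated assumptions: the state and action dimensions, layer sizes, and weight initialization are hypothetical, the network is untrained, and keeping every tenth sample assumes the action at that instant is representative of the whole 10 ms window.

```python
import math
import random


def subsample(samples, factor=10):
    """Keep every `factor`-th sample: 1 kHz becomes 100 Hz for factor=10."""
    return samples[::factor]


def make_transitions(states, actions):
    """Pair each (state, action) with the state one step later."""
    return [(states[k], actions[k], states[k + 1]) for k in range(len(states) - 1)]


def init_mlp(sizes, seed=0):
    """Small random weights and zero biases for layer sizes like [n_in, n_hidden, n_out]."""
    rng = random.Random(seed)
    return [
        ([[rng.uniform(-0.1, 0.1) for _ in range(n_in)] for _ in range(n_out)],
         [0.0] * n_out)
        for n_in, n_out in zip(sizes[:-1], sizes[1:])
    ]


def mlp_forward(params, x):
    """Forward pass with tanh hidden layers and a linear output layer."""
    for i, (weights, biases) in enumerate(params):
        x = [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, biases)]
        if i < len(params) - 1:
            x = [math.tanh(v) for v in x]
    return x


def predict_next(params, state, action):
    """Regression interface: concatenated (state, action) in, predicted next state out."""
    return mlp_forward(params, list(state) + list(action))


# Hypothetical 1 kHz log: one second of 2-D states and 1-D actions.
states_1khz = [[0.001 * k, 0.0] for k in range(1000)]
actions_1khz = [[0.0] for _ in range(1000)]
transitions = make_transitions(subsample(states_1khz), subsample(actions_1khz))
params = init_mlp([3, 16, 2])
prediction = predict_next(params, *transitions[0][:2])
```

Training would then adjust `params` to reduce the error between `prediction` and the recorded next state in each transition; the optimizer and loss are left out because the text does not specify them.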

The learned dynamics model functions as a predictive component within advanced control architectures, enabling improved robot performance through more accurate state estimation and control signal generation. By anticipating future states based on current conditions and actions, control algorithms can proactively adjust behavior, leading to increased robustness against disturbances and uncertainties. This predictive capability is critical for implementing model predictive control (MPC) and reinforcement learning techniques, where accurate predictions are essential for optimizing control policies and achieving desired task outcomes. Furthermore, the model facilitates trajectory planning and simulation, allowing for offline validation of control strategies and improved safety during real-world deployment.
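Inside a predictive controller, a one-step model is usually applied repeatedly to forecast a whole horizon. The sketch below shows that rollout pattern with a generic `model(state, action)` callable; the `toy_model` is a stand-in double integrator used only to make the example runnable and is not the Wheelbot's dynamics.

```python
def rollout(model, x0, actions):
    """Apply a one-step model repeatedly; returns the trajectory [x0, x1, ..., xN]."""
    trajectory = [list(x0)]
    for u in actions:
        trajectory.append(model(trajectory[-1], u))
    return trajectory


def toy_model(x, u, dt=0.01):
    """Stand-in double integrator (position, velocity) at 100 Hz; NOT the learned Wheelbot model."""
    position, velocity = x
    return [position + dt * velocity, velocity + dt * u[0]]


# Predict three steps ahead under a constant hypothetical action.
predicted = rollout(toy_model, x0=[0.0, 0.0], actions=[[1.0]] * 3)
```

Because each prediction feeds the next, one-step errors compound over the horizon, which is why multi-step accuracy is often a more telling benchmark for a learned model than one-step error alone.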

Beyond Prediction: Impact and Future Trajectories for Autonomous Systems

To enhance the Mini Wheelbot’s maneuverability, researchers implemented a control strategy based on nonlinear Model Predictive Control (MPC). This approach predicts the robot’s future behavior over a short horizon and adjusts the control inputs to optimize performance. However, solving the underlying optimization problem online can be too computationally expensive for a fast system; therefore, an approximate MPC (AMPC) was realized using a neural network. In approximate MPC, the network is typically trained to imitate the MPC’s control law from collected data, so that a single fast forward pass replaces the online optimization. The result was a control system capable of navigating challenging terrains and executing intricate movements with improved precision and responsiveness, demonstrating the potential of data-driven control for robotics.

To further assess the fidelity of the dataset and the learned dynamic models, researchers employed Reinforcement Learning (RL) techniques to train the Mini Wheelbot to autonomously navigate predefined racing tracks. This approach treated the robot’s control as a sequential decision-making problem, where an RL agent learned optimal policies – sets of actions – to maximize its progress around the track. Successful training demonstrated the capacity of the learned models to generalize beyond the initial data used for their creation, and provided a practical validation of their accuracy in a more complex, dynamic environment. The resulting policies not only showcased the robot’s ability to race, but also offered insights into the effectiveness of the learned dynamics in supporting sophisticated behaviors beyond simple trajectory tracking.

Despite advancements in controlling the Mini Wheelbot’s movements, limitations persist in precise yaw angle management – the robot’s rotation around a vertical axis. Future research will concentrate on minimizing the impact of uncontrolled yaw, potentially through algorithmic compensation or hardware modifications. Alternatively, integrating yaw control directly into the overall control strategy offers a path toward a fully controllable system, enabling more complex maneuvers and improved performance on challenging terrains. This could involve developing sophisticated sensor fusion techniques to accurately estimate yaw or implementing advanced control algorithms capable of simultaneously managing both translational and rotational movements, ultimately unlocking the Mini Wheelbot’s full potential for autonomous navigation and racing.

The pursuit of robust robotic control, as demonstrated by the Mini Wheelbot dataset, often leads to intricate models. However, abstractions age, principles don’t. Blaise Pascal observed, “The eloquence of angels is silence.” This resonates with the dataset’s core aim: to move beyond overly complex control schemes. The data allows researchers to focus on fundamental dynamics learning – finding the essential principles governing the robot’s behavior. Every complexity needs an alibi, and this dataset provides a path toward simpler, more effective solutions for controlling fast, unstable systems. It’s a reduction, a paring away of the unnecessary.

Where to Next?

The proliferation of robotic datasets often feels less like progress and more like accumulation. The Mini Wheelbot dataset, however, offers a pointed simplicity. It resists the temptation to model everything, instead focusing on a system genuinely demanding of learned control. This is a virtue. Yet, the core challenge remains stubbornly unaddressed: transfer. A controller expertly tuned for this specific robot, on this specific surface, still reveals little about navigating the irreducible chaos of the real world.

Future work must confront this directly. Not with ever-larger datasets – a palliative, at best – but with methods that actively discount unnecessary detail. The Wheelbot’s dynamics, though nonlinear, are fundamentally tractable. Scaling this approach to systems exhibiting true complexity (those riddled with unmodeled friction, sensor noise, and actuator imperfections) demands a different calculus. A willingness to sacrifice precision for robustness, to embrace approximation as a principle, not a failing.

Ultimately, the value of this dataset, and others like it, lies not in what they contain, but in what they compel researchers to leave out. The pursuit of perfect simulation is a fool’s errand. The true test of robotic intelligence isn’t mastering a known universe, but learning to thrive in an unknowable one.

Original article: https://arxiv.org/pdf/2601.11394.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/


2026-01-20 22:26