Author: Denis Avetisyan

Researchers have unveiled a new dataset and tracking method to improve how drones understand and follow multiple objects based on natural language descriptions.

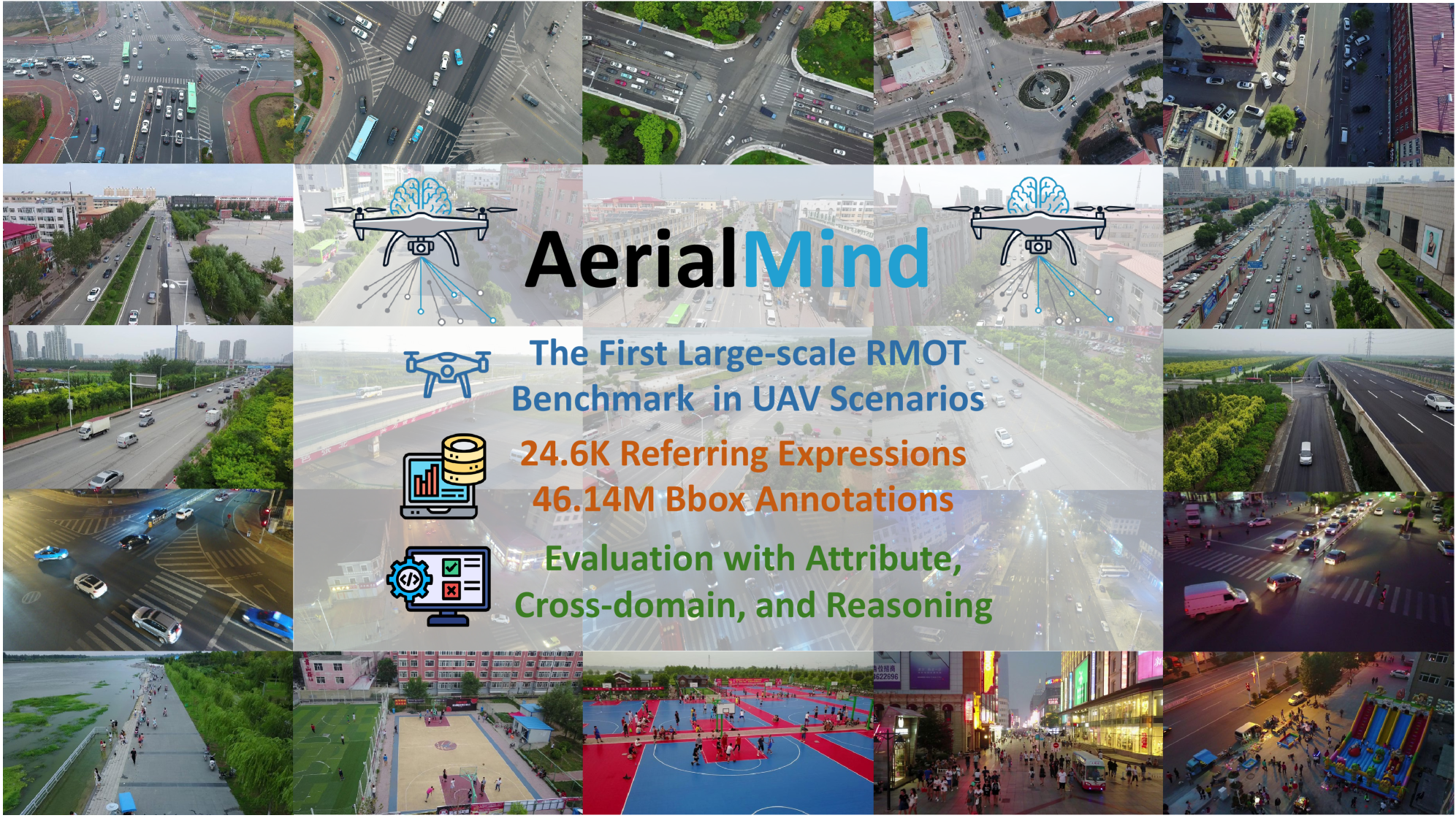

This work introduces the AerialMind dataset and HETrack, a novel approach for referring multi-object tracking in challenging UAV scenarios.

While significant progress has been made in referring multi-object tracking, current benchmarks largely overlook the complexities of aerial observation and language interaction that are crucial for unmanned aerial vehicle (UAV) applications. The paper "AerialMind: Towards Referring Multi-Object Tracking in UAV Scenarios" introduces a large-scale dataset and an accompanying method, HawkEyeTrack, designed to bridge this gap by focusing on language-guided perception in dynamic aerial environments. The authors demonstrate enhanced cross-modal fusion and scale-adaptive contextual refinement, facilitating robust tracking of multiple objects based on natural language queries. Could this advance pave the way for truly intelligent, interactive UAV systems capable of complex, real-world tasks?

The Limits of Sight: Confronting Aerial Tracking Challenges

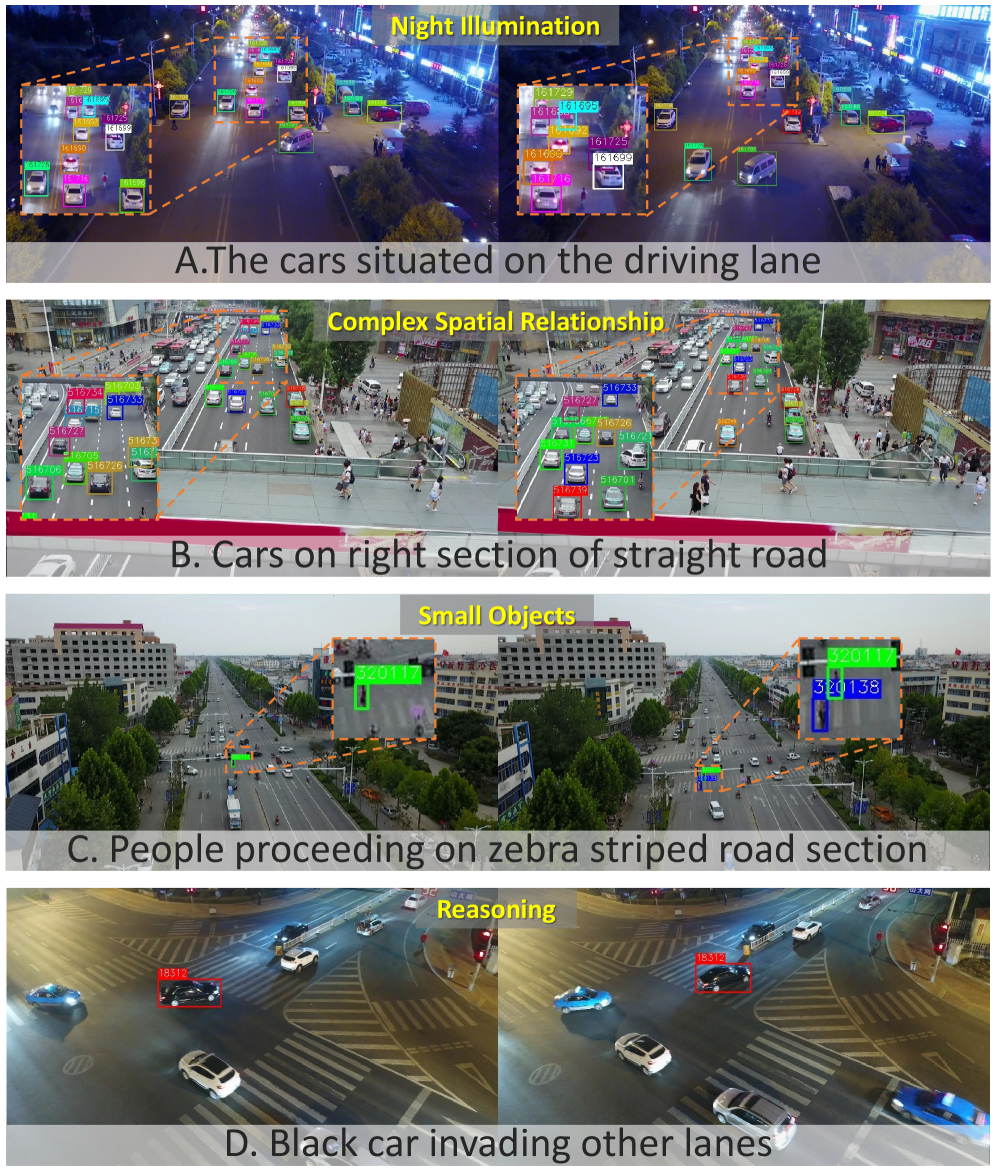

Conventional multi-object tracking systems, while effective in many scenarios, encounter significant difficulties when applied to aerial footage. The inherent perspective of unmanned aerial vehicles (UAVs) introduces extreme scale variations – an object appearing large nearby can rapidly diminish in size as distance increases – challenging algorithms designed for relatively consistent object sizes. Simultaneously, occlusion, where objects are partially or fully hidden by others or environmental features, is dramatically more frequent in aerial views due to the expansive field of vision and the potential for dense scenes. These combined factors lead to frequent identity switches and tracking failures, necessitating the development of specialized algorithms capable of handling these unique challenges and maintaining robust performance in dynamic, overhead environments.

The development of reliable aerial tracking systems is significantly hampered by a critical shortage of comprehensive training data. Current datasets used to train these systems often fall short in both size and the detail of their annotations. Robust trackers, particularly those intended for use with unmanned aerial vehicles (UAVs), require exposure to vast numbers of annotated aerial images and videos to accurately identify and follow multiple objects amidst complex backgrounds and varying conditions. Existing datasets frequently lack sufficient examples of occluded objects, diverse lighting scenarios, and the wide range of scales inherent in aerial footage. This scarcity of richly annotated data forces researchers to rely on limited resources, ultimately hindering the creation of trackers capable of consistently performing in real-world applications like search and rescue operations or automated infrastructure inspection, where precision and reliability are paramount.

The ability to consistently and accurately track multiple objects in aerial video is paramount for a growing number of real-world applications. In time-sensitive scenarios like search and rescue operations, reliable tracking algorithms can dramatically improve the speed and efficiency of locating individuals in distress, potentially meaning the difference between life and death. Similarly, infrastructure inspection – encompassing power lines, bridges, and pipelines – benefits from automated tracking that identifies defects and anomalies with precision, reducing inspection times and associated costs. Perhaps most significantly, robust aerial tracking is a foundational element for the development of truly autonomous navigation systems in unmanned aerial vehicles (UAVs), allowing these platforms to safely and effectively operate in complex environments without constant human intervention, opening doors to applications ranging from package delivery to environmental monitoring.

AerialMind: Forging a New Benchmark in UAV Perception

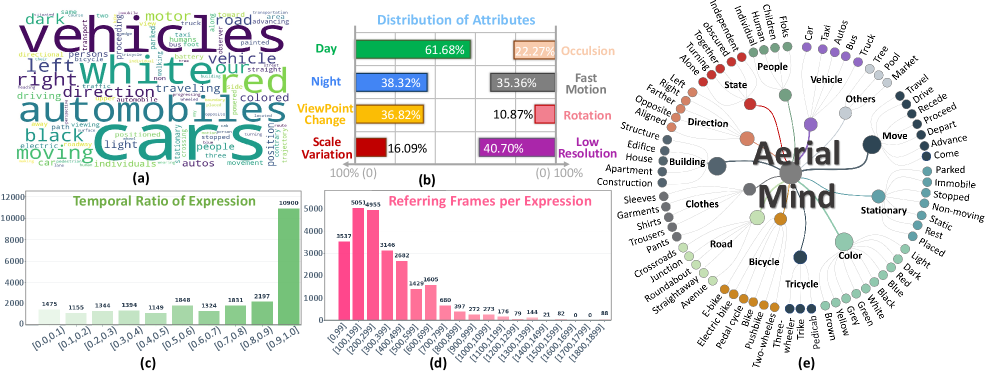

The AerialMind dataset represents a substantial advancement in resources for Unmanned Aerial Vehicle (UAV) tracking research by providing a large-scale benchmark composed of over 140,000 frames captured from multiple UAV platforms and diverse environments. This scale surpasses existing datasets like VisDrone and UAVDT, facilitating the training and evaluation of more robust and generalizable tracking algorithms. The dataset specifically addresses the challenges inherent in aerial tracking, including varying viewpoints, scale changes, and complex backgrounds, and is designed to support research into both single-target and multi-target tracking methodologies. Its size and diversity enable more comprehensive performance assessments and encourage the development of algorithms capable of handling real-world deployment scenarios.

AerialMind addresses limitations in existing UAV tracking datasets, VisDrone and UAVDT, by significantly increasing both the volume of video data and the variability of represented scenarios. While VisDrone provides a large quantity of data, its focus is broader than dedicated tracking, and UAVDT is limited in scale. AerialMind incorporates over 20 hours of 1080p60 video, a 2.6x increase over UAVDT and comparable to VisDrone, but with a greater emphasis on complex tracking challenges. This expansion includes diverse environments – urban, rural, and coastal – and varied operational conditions, such as different weather patterns, times of day, and camera viewpoints, ultimately providing a more robust benchmark for evaluating UAV tracking algorithms.

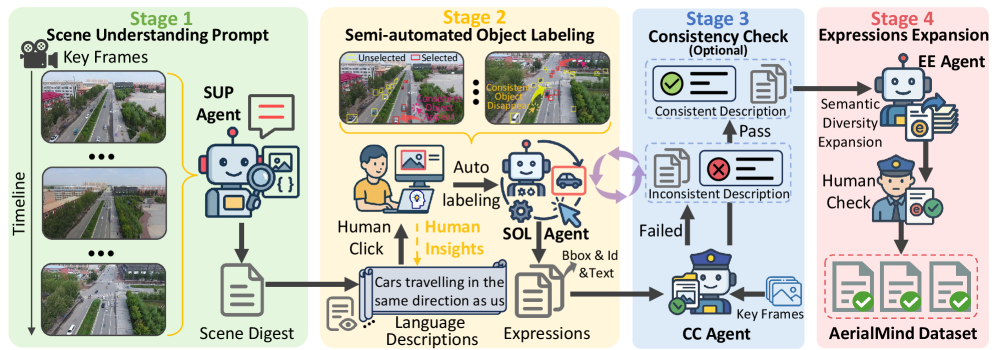

The AerialMind dataset utilizes the COALA (Consistent Object Annotation for Large-scale datasets) Framework to streamline the annotation process and maintain label fidelity. COALA employs a semi-automated approach, combining machine learning-based pre-annotation with human-in-the-loop verification and correction. This methodology significantly reduces annotation time compared to fully manual labeling, while also minimizing inconsistencies and errors. Specifically, COALA facilitates consistent bounding box annotation across diverse aerial video sequences, and includes quality control measures such as inter-annotator agreement checks and automated consistency validation, resulting in a dataset with demonstrably high-quality labels suitable for robust UAV tracking algorithm training and evaluation.
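One way to picture the agreement checks mentioned above is a bounding-box overlap test. The sketch below is illustrative only: it assumes agreement is measured with intersection-over-union (IoU), and the `needs_review` helper and its 0.7 threshold are hypothetical, not details taken from the article or from COALA.

```python
from typing import Tuple

# A box is (x_min, y_min, x_max, y_max) in pixels.
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def needs_review(box_a: Box, box_b: Box, threshold: float = 0.7) -> bool:
    """Flag a label when two annotations overlap less than the threshold.

    The threshold value is an assumption chosen for illustration.
    """
    return iou(box_a, box_b) < threshold
```

In a semi-automated pipeline of this kind, a machine pre-annotation and a human correction that disagree strongly would be routed back for another look, while close matches pass through.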

HawkEyeTrack: Synthesizing Vision and Language for Aerial Dominance

HawkEyeTrack (HETrack) represents a new approach to visual object tracking specifically engineered for the challenges presented by aerial imagery. Traditional tracking algorithms often struggle with the significant scale changes, occlusions, and complex backgrounds common in aerial environments. HETrack addresses these limitations through a design prioritizing robustness in these conditions, enabling reliable tracking of targets across extended sequences and varying viewpoints. The method aims to provide improved performance in applications such as drone-based surveillance, search and rescue operations, and autonomous navigation where maintaining accurate target identification is crucial despite the inherent difficulties of aerial tracking scenarios.

The Co-evolutionary Fusion Encoder (CFE) within HawkEyeTrack facilitates the integration of heterogeneous data modalities – specifically, visual features and language instructions – for improved target tracking. Visual information is extracted using a ResNet50 convolutional neural network, providing a feature vector representing the observed scene. Simultaneously, language instructions are processed by a RoBERTa transformer model to generate a contextual embedding. The CFE then employs a co-evolutionary mechanism to iteratively refine the fusion of these feature vectors, allowing for reciprocal influence between visual and linguistic representations. This process enables the model to effectively leverage both visual cues and semantic guidance during tracking, enhancing robustness and accuracy, particularly in challenging aerial environments.
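The reciprocal influence described above can be illustrated with bidirectional cross-attention. This is a minimal sketch under stated assumptions, not the CFE itself: it omits learned projections and normalization, and the function names `cross_attend` and `co_evolve` are hypothetical. Rows of `visual` stand in for ResNet50 feature vectors and rows of `text` for RoBERTa token embeddings.

```python
import math
from typing import List, Tuple

Vector = List[float]
Matrix = List[Vector]


def softmax(scores: Vector) -> Vector:
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attend(queries: Matrix, context: Matrix) -> Matrix:
    """Add to each query row an attention-weighted mix of the context rows."""
    dim = len(queries[0])
    updated = []
    for q in queries:
        scores = [sum(qi * ci for qi, ci in zip(q, c)) / math.sqrt(dim)
                  for c in context]
        weights = softmax(scores)
        mixed = [sum(w * c[j] for w, c in zip(weights, context))
                 for j in range(dim)]
        updated.append([qi + mi for qi, mi in zip(q, mixed)])  # residual update
    return updated


def co_evolve(visual: Matrix, text: Matrix, rounds: int = 2) -> Tuple[Matrix, Matrix]:
    """Alternate updates so each modality is refined by the other."""
    for _ in range(rounds):
        # Both updates read the previous round's features, then swap in together.
        new_visual = cross_attend(visual, text)
        new_text = cross_attend(text, visual)
        visual, text = new_visual, new_text
    return visual, text
```

The point of the sketch is the symmetry: language reshapes the visual features while the visual features reshape the language embedding, repeated over several rounds, rather than a single one-way injection of text into the image branch.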

The Scale Adaptive Contextual Refinement (SACR) module addresses the challenges posed by substantial scale variations inherent in aerial imagery. This module operates by dynamically adjusting the receptive field size based on the estimated scale of the target object. Specifically, SACR employs deformable convolutions with learnable offsets to adapt to differing object sizes without requiring explicit multi-scale feature extraction. This adaptive approach allows the model to maintain consistent performance across a range of altitudes and distances, improving tracking accuracy and robustness in scenarios where target appearance changes significantly with scale. The module’s design minimizes information loss during scale transitions, contributing to more reliable tracking in complex aerial environments.
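To make the idea of offset-driven sampling concrete, here is a deliberately simplified one-dimensional sketch. It assumes the offsets are supplied by hand, whereas a deformable convolution would predict them from the input; the names `sample_linear` and `deformable_conv1d` are hypothetical and the code is not the SACR module.

```python
import math
from typing import List, Sequence


def sample_linear(signal: Sequence[float], pos: float) -> float:
    """Linearly interpolate the signal at a fractional position (zero outside)."""
    left = math.floor(pos)
    frac = pos - left

    def at(i: int) -> float:
        return signal[i] if 0 <= i < len(signal) else 0.0

    return (1.0 - frac) * at(left) + frac * at(left + 1)


def deformable_conv1d(signal: Sequence[float],
                      weights: Sequence[float],
                      offsets: Sequence[Sequence[float]]) -> List[float]:
    """1-D convolution whose taps are shifted by per-position offsets.

    offsets[i][k] moves tap k of the kernel when computing output i.
    With all offsets at zero this is an ordinary zero-padded convolution.
    """
    half = len(weights) // 2
    out = []
    for i in range(len(signal)):
        acc = 0.0
        for k, w in enumerate(weights):
            pos = i + (k - half) + offsets[i][k]
            acc += w * sample_linear(signal, pos)
        out.append(acc)
    return out
```

Spreading the taps outward (for example offsets of -1, 0, +1 on a three-tap kernel) widens the effective receptive field for a large target, while pulling them inward narrows it for a small one, without building a separate feature pyramid.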

The HawkEyeTrack training process utilizes the AdamW optimizer, a variant of the Adam algorithm incorporating weight decay for improved regularization and generalization. AdamW decouples the weight decay from the gradient update, leading to more stable training, particularly with larger learning rates. This approach addresses the limitations of standard L2 regularization when combined with adaptive learning rate methods like Adam. Specifically, the implementation employs a learning rate of $10^{-4}$ and a weight decay of $0.05$, empirically determined to facilitate efficient convergence on the training dataset and achieve optimal performance in aerial tracking scenarios.
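The decoupling is easiest to see in a single update step. The sketch below uses the learning rate and weight decay quoted above; the `beta1`, `beta2`, and `eps` values are common defaults assumed for illustration, since the article does not state them, and `adamw_step` is a hypothetical helper rather than any library's API.

```python
import math
from typing import List, Tuple


def adamw_step(params: List[float], grads: List[float],
               m: List[float], v: List[float], step: int,
               lr: float = 1e-4, weight_decay: float = 0.05,
               beta1: float = 0.9, beta2: float = 0.999,  # assumed defaults
               eps: float = 1e-8                          # assumed default
               ) -> Tuple[List[float], List[float], List[float]]:
    """One AdamW update over a flat list of parameters (step starts at 1)."""
    new_params, new_m, new_v = [], [], []
    for p, g, m_i, v_i in zip(params, grads, m, v):
        # Moment estimates use the raw gradient only, with no decay term mixed in.
        m_i = beta1 * m_i + (1.0 - beta1) * g
        v_i = beta2 * v_i + (1.0 - beta2) * g * g
        m_hat = m_i / (1.0 - beta1 ** step)
        v_hat = v_i / (1.0 - beta2 ** step)
        # Decoupled weight decay: shrink the weight directly.
        p = p * (1.0 - lr * weight_decay)
        # Adaptive gradient step.
        p = p - lr * m_hat / (math.sqrt(v_hat) + eps)
        new_params.append(p)
        new_m.append(m_i)
        new_v.append(v_i)
    return new_params, new_m, new_v
```

With plain L2 regularization the decay term would be added to the gradient and then rescaled by the adaptive denominator; here it bypasses that rescaling, so every weight shrinks by the same factor of `1 - lr * weight_decay` per step.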

Beyond Benchmarks: The Expanding Impact of Robust Aerial Tracking

The newly developed HETrack system sets a new reference point for unmanned aerial vehicle (UAV) tracking performance, achieving state-of-the-art results on a standard industry benchmark. Trained using the extensive AerialMind dataset, HETrack attained a HOTA (Higher Order Tracking Accuracy) score of 31.46%, surpassing previous methods in its ability to accurately locate and follow objects over time. This metric combines detection and association accuracy, meaning a high score requires the system not only to identify targets, but also to keep their identities consistent through temporary occlusions or challenging conditions. The improvement demonstrated by HETrack highlights the potential of large-scale datasets and advanced algorithms to drive progress in UAV autonomy and open doors to more reliable and intelligent aerial systems.
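For readers unfamiliar with the metric, the sketch below shows how HOTA balances the two components at a single localization threshold. It follows the standard HOTA definition; the function names and the toy numbers in the usage note are illustrative and are not results from the paper.

```python
import math
from typing import Sequence


def det_a(num_tp: int, num_fn: int, num_fp: int) -> float:
    """Detection accuracy: TP / (TP + FN + FP)."""
    denom = num_tp + num_fn + num_fp
    return num_tp / denom if denom else 0.0


def ass_a(assoc_scores: Sequence[float]) -> float:
    """Association accuracy: mean of A(c) over all true-positive detections.

    A(c) = TPA / (TPA + FNA + FPA) measures how well the track containing
    detection c lines up with the matching ground-truth trajectory.
    """
    return sum(assoc_scores) / len(assoc_scores) if assoc_scores else 0.0


def hota_alpha(assoc_scores: Sequence[float], num_fn: int, num_fp: int) -> float:
    """HOTA at one threshold: the geometric mean of DetA and AssA."""
    return math.sqrt(det_a(len(assoc_scores), num_fn, num_fp) * ass_a(assoc_scores))
```

For example, four true positives with association scores `[1.0, 1.0, 0.5, 0.5]`, two misses, and two false alarms give a detection accuracy of 0.5 and an association accuracy of 0.75. The final HOTA score averages this quantity over a range of localization thresholds, so a tracker cannot score well by excelling at detection while swapping identities, or the reverse.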

HETrack demonstrates robustness in challenging real-world scenarios, as evidenced by its scores on the HOTAS and HOTAM metrics. Achieving a HOTAS (Scene-Robustness) score of 34.37%, the algorithm maintains accurate tracking even amidst cluttered backgrounds and varying lighting conditions. Complementing this, the 31.12% HOTAM (Motion-Resilience) score indicates HETrack’s ability to reliably follow targets undergoing rapid or erratic movements, a critical capability for applications involving fast-moving objects or unstable platforms. Together, these scores highlight the algorithm’s resilience to common disturbances and mark a notable step towards dependable autonomous tracking in dynamic environments.

The development of unmanned aerial vehicle (UAV) systems capable of interpreting natural language represents a significant leap toward truly interactive and autonomous operation. Rather than relying on pre-programmed flight paths or manual control, these systems can respond to verbal commands – for instance, “follow the red car” or “scan the field for injured livestock.” This capability, demonstrated through the HETrack algorithm, allows for dynamic task assignment and real-time adjustments based on changing conditions, moving beyond simple object tracking to contextual understanding. The implications extend to a wide range of applications, enabling more flexible and efficient use of UAVs in complex environments and paving the way for intuitive human-machine collaboration in fields like search and rescue, environmental monitoring, and precision agriculture.

The development of HETrack and its demonstrated performance unlock significant potential across several critical sectors. Automated search and rescue operations stand to benefit from a system capable of reliably tracking individuals in complex environments, increasing the speed and effectiveness of aid delivery. Precision agriculture can leverage this technology for detailed crop monitoring and targeted intervention, optimizing resource allocation and improving yields. Furthermore, the ability to autonomously monitor infrastructure – such as bridges, power lines, and pipelines – promises to enhance safety, reduce maintenance costs, and prevent potentially catastrophic failures through early detection of anomalies. These applications represent just a fraction of the possibilities arising from advancements in robust, language-directed UAV tracking systems.

The advancement of unmanned aerial vehicle (UAV) autonomy is significantly propelled by the synergistic effect of extensive datasets and robust tracking algorithms. Prior limitations in available data often hindered the development of truly adaptable and intelligent UAV systems; however, the creation of large-scale datasets, such as AerialMind, provides the necessary foundation for training sophisticated algorithms like HETrack. This pairing enables more accurate and resilient object tracking, even in challenging real-world conditions. The resulting improvements aren’t merely incremental; they establish a new benchmark for performance and facilitate more rapid innovation within the field, encouraging further exploration of advanced techniques in areas like scene understanding, motion prediction, and ultimately, the creation of fully autonomous UAVs capable of tackling complex tasks.

The development of AerialMind and HETrack exemplifies a deliberate dismantling of conventional tracking limitations. This pursuit isn’t about creating a flawless system, but rather, rigorously testing the boundaries of what’s possible in language-guided perception. As Paul Erdős once stated, “A mathematician knows a lot of things, but he doesn’t know everything.” This sentiment mirrors the iterative process of refining HETrack; each benchmark achieved isn’t a final solution, but a stepping stone revealing new challenges within the AerialMind dataset. The scale adaptive contextual refinement component, for instance, isn’t simply added to a system; it actively challenges established methods, forcing a re-evaluation of how contextual information should be integrated for robust tracking in complex UAV scenarios.

What Breaks Down Next?

The introduction of AerialMind, and methods like HETrack, doesn’t resolve the problem of aerial perception; it merely shifts the point of failure. Current systems, even those leveraging language guidance, still struggle with the inherent ambiguity of real-world scenarios. A UAV’s view isn’t pristine; it’s noise, occlusion, and rapidly changing perspectives. The dataset’s scale is commendable, but scale alone doesn’t address the fundamental challenge: can a machine truly understand what it is tracking, or is it simply becoming proficient at pattern matching? The system will inevitably encounter descriptions (referring expressions) designed to be deliberately misleading or subtly ambiguous, exposing the limitations of current cross-modal fusion techniques.

Future work must move beyond simply improving tracking accuracy. The real test lies in adversarial scenarios – crafting inputs designed to exploit the system’s weaknesses. Attribute-based evaluation is a step in the right direction, but the attributes themselves are human constructs, imposed upon a reality that rarely conforms to neat categorization. A truly robust system needs to be able to question the validity of those attributes, to identify when a description is incongruent with observed data.

Ultimately, the goal shouldn’t be to create a perfect tracker, but to build a system that knows when it is failing and can articulate why. Until a machine can gracefully admit its limitations, the pursuit of language-guided perception remains a sophisticated game of deception, rather than a genuine understanding of the world below.

Original article: https://arxiv.org/pdf/2511.21053.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2025-11-27 22:12