Author: Denis Avetisyan

Researchers have developed a reinforcement learning framework that allows drones to navigate complex indoor environments and locate specific objects with improved robustness and efficiency.

This work introduces AION, a dual-policy reinforcement learning system for 3D object navigation, demonstrating zero-shot generalization across diverse indoor settings.

While prior work in object-goal navigation has largely focused on ground-based robots, extending these capabilities to agile aerial platforms presents unique challenges in perception, control, and safety. This paper introduces ‘AION: Aerial Indoor Object-Goal Navigation Using Dual-Policy Reinforcement Learning’, a novel framework that decouples exploration and goal-reaching into specialized policies for robust 3D navigation. Experimental results demonstrate that AION achieves superior performance in both simulated and realistic environments, significantly improving exploration efficiency and navigational safety. Could this dual-policy approach unlock more efficient and reliable autonomous navigation for drones in complex indoor spaces?

The Illusion of Comprehension

Effective robotic deployment transcends simply seeing the world; a robot must demonstrate genuine environmental comprehension and actively engage with its surroundings. While advanced sensors provide data about objects and spaces, true autonomy demands the ability to interpret this information – to discern not just what is present, but how things relate, and to predict the consequences of actions within that environment. This necessitates a shift from passive observation to dynamic interaction, where robots don’t merely react to stimuli, but formulate plans, adapt to unforeseen circumstances, and proactively pursue goals in the face of real-world complexity. Consequently, researchers are focusing on equipping robots with the capacity for contextual reasoning, allowing them to move beyond pattern recognition and toward a more holistic understanding of the spaces they inhabit.

Conventional robotic navigation systems frequently falter when confronted with the inherent uncertainties of genuine environments. These systems often rely on meticulously mapped spaces or simplified representations, proving inadequate when faced with dynamic obstacles, imperfect sensor data, or unpredictable lighting conditions. The ambiguity arises because real-world scenes rarely present clear, discrete boundaries or easily categorized objects; instead, they offer a continuous stream of sensory input that requires sophisticated interpretation. Consequently, robots employing these traditional methods struggle to differentiate between relevant and irrelevant information, leading to hesitant movements, navigational errors, and an inability to adapt to unforeseen changes – highlighting the need for more nuanced approaches to spatial understanding and environmental perception.

In response, recent work has shifted toward strategies built on proactive exploration and purposeful action. Rather than passively reacting to sensory input, such systems actively seek information about their surroundings while keeping a clear objective in view. This requires algorithms that let robots map unknown spaces efficiently, anticipate obstacles, and plan routes that balance speed with safety. Crucially, these systems do more than avoid collisions: they learn from experience, refine their model of the environment, and adapt their behavior to reach specific goals. This emphasis on goal-directed behavior is a clear departure from traditional methods, and it is a prerequisite for robots that navigate and interact with the world reliably in complex, dynamic settings.

Deconstructing the Problem with Dual Policies

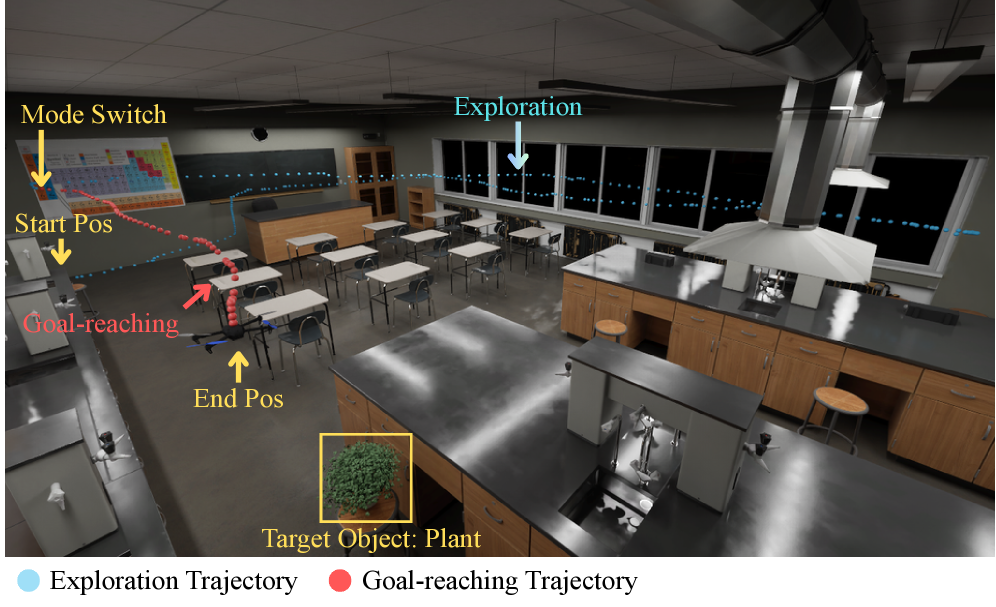

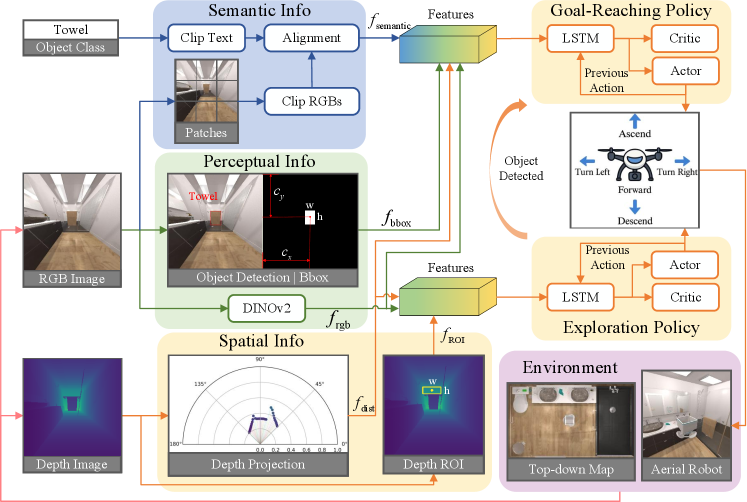

AION employs a dual-policy reinforcement learning framework designed to enhance sample efficiency by decoupling exploration and goal-reaching behaviors. The system utilizes two distinct policies: AION-e, dedicated to environmental exploration, and AION-g, focused on achieving specified goals. This separation allows the exploration policy to learn optimal strategies for discovering informative states without being constrained by the immediate demands of goal completion. Conversely, the goal-reaching policy can then leverage the knowledge gained through exploration to efficiently navigate towards targets. By training these policies independently yet concurrently, AION facilitates more focused learning and reduces the overall number of interactions required to achieve proficient performance in complex environments.
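The sketch below makes the decoupling concrete by routing each observation to one of two policies. It is a minimal illustration built on assumptions and is not AION's actual hand-off logic. The `Observation` type, its `target_confidence` field, the `make_dual_policy_controller` helper, and the 0.5 switching threshold are all hypothetical. The two policies are stand-in functions, not trained networks.

```python
from dataclasses import dataclass
from typing import Callable, Tuple

Action = str


@dataclass
class Observation:
    # Hypothetical field: detector confidence that the goal object is in view.
    target_confidence: float


def make_dual_policy_controller(
    explore_policy: Callable[[Observation], Action],
    goal_policy: Callable[[Observation], Action],
    switch_threshold: float = 0.5,
) -> Callable[[Observation], Tuple[str, Action]]:
    """Return a controller that picks the exploration or goal-reaching policy each step."""

    def act(obs: Observation) -> Tuple[str, Action]:
        # Hand control to the goal-reaching policy once the target is confidently seen;
        # otherwise keep exploring.
        if obs.target_confidence >= switch_threshold:
            return "goal", goal_policy(obs)
        return "explore", explore_policy(obs)

    return act


# Stand-in policies: real ones would be trained networks (e.g. AION-e and AION-g).
controller = make_dual_policy_controller(
    explore_policy=lambda obs: "move_forward",
    goal_policy=lambda obs: "approach_target",
)
print(controller(Observation(target_confidence=0.2)))  # ('explore', 'move_forward')
print(controller(Observation(target_confidence=0.9)))  # ('goal', 'approach_target')
```

A stateless threshold is the simplest possible hand-off. A practical system would probably add hysteresis so that it does not oscillate between policies when detections flicker.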

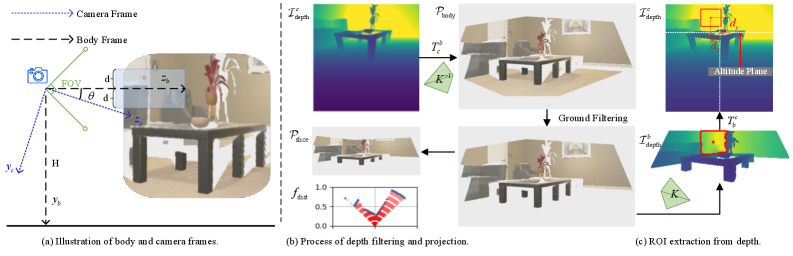

The AION framework uses RGB-D images as the primary input to its perception system. These images pair standard color (RGB) with per-pixel depth, which lets the drone build a three-dimensional representation of its surroundings. The depth channel is typically captured by stereo, time-of-flight, or structured-light sensors. It provides distances to surfaces, so the drone can identify obstacles and navigable space. This 3D representation then feeds downstream tasks such as obstacle avoidance and path planning, supporting autonomous navigation and task completion.
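Getting from an RGB-D frame to 3D geometry usually involves a pinhole-camera back-projection. The sketch below makes several assumptions: known intrinsics (`fx`, `fy`, `cx`, `cy`), depth in metres, and the common camera-frame convention (x right, y down, z forward). The function name and the `max_range` cutoff are illustrative and are not taken from AION.

```python
from typing import List, Tuple

Point3D = Tuple[float, float, float]


def depth_to_points(
    depth: List[List[float]],
    fx: float,
    fy: float,
    cx: float,
    cy: float,
    max_range: float = 10.0,
) -> List[Point3D]:
    """Back-project a depth image (metres, indexed depth[v][u]) into camera-frame 3D points."""
    points: List[Point3D] = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            # Zero or negative depth marks a missing return; very far readings are unreliable.
            if z <= 0.0 or z > max_range:
                continue
            # Pinhole model: pixel (u, v) at depth z maps to (x, y, z) in the camera frame.
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z))
    return points


depth = [[2.0, 0.0],
         [1.0, 4.0]]  # toy 2x2 depth image; 0.0 is an invalid pixel
print(depth_to_points(depth, fx=1.0, fy=1.0, cx=0.5, cy=0.5))
# [(-1.0, -1.0, 2.0), (-0.5, 0.5, 1.0), (2.0, 2.0, 4.0)]
```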

Asynchronous Advantage Actor-Critic (A3C) is used to train both the exploration (AION-e) and goal-reaching (AION-g) policies. In A3C, multiple worker agents interact with their own copies of the environment in parallel. Each worker computes gradients from its own experience and asynchronously updates a shared global policy and value network. Running many workers at once decorrelates the training data, which removes the need for an experience replay buffer, and it shortens wall-clock training time compared with a single agent. The advantage function is the other core component of A3C. It reduces the variance of policy-gradient estimates by subtracting a learned state-value baseline from the observed n-step return, which stabilizes and speeds up learning. This distributed scheme makes efficient use of multi-core hardware and supports robust policy optimization in complex robotic environments.
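The function below is a minimal sketch of the advantage term. It computes the n-step returns and advantages that an A3C worker would use for one rollout segment. The function name, the discount factor, and the toy numbers are illustrative assumptions. In a real worker, `values` would come from the critic, and `bootstrap_value` would be zero at episode termination or the critic's estimate of the last state otherwise.

```python
from typing import List, Tuple


def nstep_advantages(
    rewards: List[float],
    values: List[float],
    bootstrap_value: float,
    gamma: float = 0.99,
) -> Tuple[List[float], List[float]]:
    """Compute n-step returns R_t and advantages A_t = R_t - V(s_t) for one rollout segment."""
    returns: List[float] = []
    running = bootstrap_value  # 0.0 if the episode ended, else the critic's V(s_{t+n})
    for r in reversed(rewards):
        running = r + gamma * running  # R_t = r_t + gamma * R_{t+1}
        returns.append(running)
    returns.reverse()
    # Subtracting the critic's baseline V(s_t) lowers the variance of the policy gradient.
    advantages = [ret - v for ret, v in zip(returns, values)]
    return returns, advantages


returns, advantages = nstep_advantages(
    rewards=[0.0, 0.0, 1.0], values=[0.5, 0.6, 0.7], bootstrap_value=0.0, gamma=0.5
)
print(returns)     # [0.25, 0.5, 1.0]
print(advantages)  # approximately [-0.25, -0.1, 0.3]
```

Each worker would then weight the log-probability gradient of its chosen actions by these advantages for the actor update, and regress the critic's values toward the returns.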

The Illusion of Understanding: Semantic Grounding

The AION-g policy employs the DINOv2 model for extracting features from visual input. DINOv2 is a self-supervised vision transformer pre-trained on a large dataset, enabling it to generate robust and generalizable visual representations without requiring labeled data. These extracted features are high-dimensional vectors that capture semantic information about the image content, including object categories, textures, and spatial relationships. This rich visual representation serves as the foundation for subsequent processing steps, such as object recognition and scene understanding, within the AION-g framework, and is critical for performance in varying and complex environments.

CLIP (Contrastive Language-Image Pre-training) maps images and text into a shared multimodal embedding space, so visual features can be compared directly with textual descriptions. It is trained contrastively on a large dataset of image-text pairs, which places semantically related images and texts close together in that space. The system can therefore score how similar a detected object's visual embedding is to the text embedding of the target object's name. This semantic matching tolerates changes in viewpoint, lighting, or partial occlusion. As a result, the agent can identify objects by what they are rather than by their exact pixel appearance.
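The scoring step can be sketched as CLIP-style zero-shot matching: a softmax over scaled cosine similarities between one image embedding and several text-prompt embeddings. This is a sketch under assumptions. The embeddings are toy vectors, not real CLIP outputs. The prompt strings and the `match_labels` helper are hypothetical. A `logit_scale` of 100 is close to the temperature CLIP learns.

```python
import math
from typing import Dict, List


def cosine_similarity(a: List[float], b: List[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def match_labels(
    image_embedding: List[float],
    text_embeddings: Dict[str, List[float]],
    logit_scale: float = 100.0,
) -> Dict[str, float]:
    """Softmax over scaled cosine similarities, as in CLIP zero-shot classification."""
    labels = list(text_embeddings)
    logits = [logit_scale * cosine_similarity(image_embedding, text_embeddings[label])
              for label in labels]
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return {label: e / total for label, e in zip(labels, exps)}


# Toy 3-D vectors standing in for real CLIP embeddings (which are 512-D or larger).
image_emb = [0.9, 0.1, 0.0]
prompts = {
    "a photo of a chair": [1.0, 0.0, 0.0],
    "a photo of a sofa": [0.0, 1.0, 0.0],
    "a photo of a plant": [0.0, 0.0, 1.0],
}
probs = match_labels(image_emb, prompts)
print(max(probs, key=probs.get))  # a photo of a chair
```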

YOLO (You Only Look Once) is integrated to strengthen object detection, supplementing the visual features extracted by DINOv2. This real-time detector provides bounding box coordinates, i.e. geometric cues, for identified objects. Combined with the depth channel, these coordinates let the agent estimate where an object is relative to itself and roughly how large it is. The resulting data informs path planning and obstacle avoidance, so the agent can move around detected objects and toward its designated target. This geometric information is used together with the semantic matching provided by CLIP to build a more complete picture of the surrounding environment.
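The sketch below shows one common way to turn a 2D detection into a relative position: a horizontal bearing from the box centre and the camera's field of view, plus a range from the median depth inside the box. It assumes that the depth image is pixel-aligned with the RGB frame the detector saw, that the principal point sits at the image centre, and that boxes are `(x1, y1, x2, y2)` with exclusive upper bounds. The function name is hypothetical, and the article does not say that AION computes geometry exactly this way.

```python
import math
from statistics import median
from typing import List, Tuple


def box_to_bearing_and_range(
    box: Tuple[int, int, int, int],
    depth: List[List[float]],
    hfov_deg: float,
) -> Tuple[float, float]:
    """Estimate horizontal bearing (deg, positive = right of centre) and range (m) for a box.

    box is (x1, y1, x2, y2) in pixels with exclusive x2/y2; depth is indexed depth[v][u]
    and assumed pixel-aligned with the RGB image the detector ran on.
    """
    x1, y1, x2, y2 = box
    width = len(depth[0])
    # Focal length in pixels from the horizontal field of view.
    fx = (width / 2) / math.tan(math.radians(hfov_deg) / 2)
    u_center = (x1 + x2) / 2
    bearing = math.degrees(math.atan2(u_center - width / 2, fx))
    # Median depth inside the box is less sensitive to background pixels near the box edges.
    valid = [depth[v][u] for v in range(y1, y2) for u in range(x1, x2) if depth[v][u] > 0.0]
    distance = median(valid) if valid else float("nan")
    return bearing, distance


depth = [[2.0] * 4 for _ in range(4)]  # toy 4x4 depth image, everything 2 m away
print(box_to_bearing_and_range((0, 0, 4, 4), depth, hfov_deg=90.0))  # (0.0, 2.0)
```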

Depth projection converts the agent's depth image into a 2D laser-scan representation, a format compatible with traditional laser-scan-based obstacle-avoidance algorithms. Projecting depth values onto a horizontal plane yields a simplified, planar view of nearby obstacles. This supports efficient collision avoidance, particularly when computational resources are limited or a fast response is critical, because the real-time control loop no longer has to process full 3D spatial data.
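Below is a minimal sketch of the projection. It takes a horizontal band of image rows and keeps the nearest valid return in each column. It then converts optical-axis depth into distance along the ray, which yields one range per bearing. The ROS `depthimage_to_laserscan` package follows a similar idea. The function name, the row band, and the sign convention here are assumptions. For a drone, the band would be chosen to cover the height slice it flies through. Note that ROS `LaserScan` messages measure angles counterclockwise, so a real converter would flip the sign used here.

```python
import math
from typing import List, Tuple


def depth_to_scan(
    depth: List[List[float]],
    fx: float,
    cx: float,
    row_lo: int,
    row_hi: int,
    range_max: float = 10.0,
) -> Tuple[List[float], List[float]]:
    """Collapse depth rows [row_lo, row_hi) into one range reading per image column."""
    angles: List[float] = []
    ranges: List[float] = []
    for u in range(len(depth[0])):
        theta = math.atan2(u - cx, fx)  # horizontal ray angle, positive to the right
        nearest = math.inf  # inf means "no obstacle seen in this column"
        for v in range(row_lo, row_hi):
            z = depth[v][u]
            if 0.0 < z <= range_max:
                # z is measured along the optical axis; divide by cos(theta) for ray length.
                nearest = min(nearest, z / math.cos(theta))
        angles.append(theta)
        ranges.append(nearest)
    return angles, ranges


depth = [[3.0, 1.0],
         [2.0, 0.0]]  # toy 2x2 depth image; 0.0 is a missing return
angles, ranges = depth_to_scan(depth, fx=1.0, cx=0.0, row_lo=0, row_hi=2)
print(ranges)  # roughly [2.0, 1.414]
```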

The Fragility of Success: Validation and Benchmarks

The simulation framework is built on IsaacSim, NVIDIA's physics-based robotics simulator, which provides a high-fidelity environment for drone operation. It is coupled with the Pegasus Simulator, an open-source framework built on top of IsaacSim for simulating multirotor vehicles, to capture realistic drone dynamics and control. IsaacSim provides physically accurate sensor simulation, including cameras and LiDAR. Pegasus adds the vehicle models and interfaces needed to implement and test control algorithms. Together they simulate drone flight characteristics such as aerodynamic effects and motor response, which is crucial for validating the AION navigation system in a virtual yet realistic setting.

The simulation environment uses the PX4 Autopilot as its flight control stack, providing realistic drone control. Communication is handled by the Robot Operating System 2 (ROS2) middleware, which connects the simulated drone, the perception modules, and the environment. ROS2 carries sensor data, commands, and state updates, keeping the system modular and scalable. Because PX4 and ROS2 are widely adopted, industry-standard tools, testing AION on this stack increases the potential for transferring the developed algorithms to real hardware.

AION was evaluated in this IsaacSim setup across complex, dynamically generated scenes. Performance was measured by the system's ability to navigate these scenes and locate designated target objects, which gave quantitative, repeatable data on its robustness and efficacy. Simulation-based validation of this kind is an important step before real-world deployment, because it allows iterative refinement and analysis without the risks of physical testing.

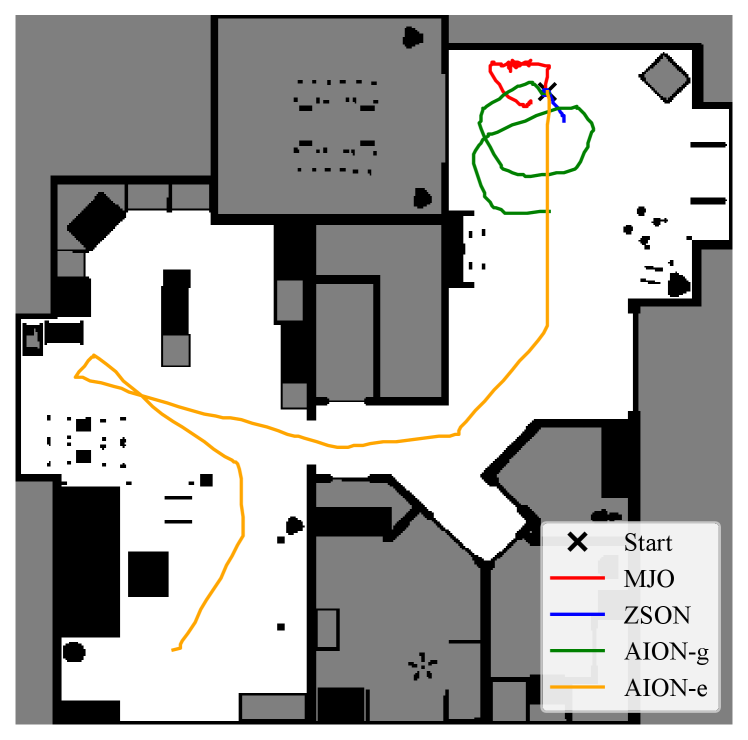

AION-g achieved state-of-the-art performance on the iTHOR benchmark. On the 18/4 split of the iTHOR dataset, it outperformed existing methods by 7.5% in Success Rate (SR) and 4.3% in Success weighted by Path Length (SPL). The evaluation covered both seen and unseen objects, so the results indicate that the system generalizes and remains robust when it meets novel environments and object types within the simulation.
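For reference, SR is the fraction of successful episodes. SPL follows the standard definition from Anderson et al. (2018): SPL = (1/N) Σ S_i · l_i / max(p_i, l_i). Here S_i is the success indicator, l_i is the shortest-path length, and p_i is the path length the agent actually took. The sketch below assumes that definition and uses made-up episode data. It does not reproduce the paper's evaluation code.

```python
from typing import List, Tuple

# (success, shortest_path_length, path_length_taken) per episode, lengths in metres
Episode = Tuple[bool, float, float]


def success_rate(episodes: List[Episode]) -> float:
    return sum(1 for success, _, _ in episodes if success) / len(episodes)


def spl(episodes: List[Episode]) -> float:
    """Success weighted by Path Length: mean of S_i * l_i / max(p_i, l_i)."""
    total = 0.0
    for success, shortest, taken in episodes:
        if success:
            # A detour lowers the credit; failed episodes contribute zero.
            total += shortest / max(taken, shortest)
    return total / len(episodes)


episodes = [(True, 4.0, 5.0), (False, 3.0, 10.0), (True, 2.0, 2.0)]
print(round(success_rate(episodes), 3), round(spl(episodes), 3))  # 0.667 0.6
```

Because SPL discounts every success by its detour, a method can gain more in SR than in SPL when it finds targets more often but along longer paths.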

The MJO module leverages Graph Convolutional Networks (GCNs) to incorporate scene prior knowledge, improving scene understanding capabilities. These GCNs operate on a scene graph representation, where nodes represent objects and edges define relationships between them. By propagating information across this graph, the system infers contextual information about objects and their spatial arrangements. This allows AION to better predict object locations, navigate complex environments, and ultimately enhance the efficiency and robustness of target object localization, particularly in scenarios with limited visibility or sensor noise. The graph-based approach enables the system to reason about the scene structure beyond immediate sensor data, contributing to improved generalization and performance.
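The propagation described above can be illustrated with the standard graph-convolution rule of Kipf and Welling, H' = ReLU(D^-1/2 (A + I) D^-1/2 · H · W). The article does not describe how MJO builds its graph, how many layers it uses, or what its node features are. The toy scene graph, the one-hot features, and the identity weight matrix below are therefore purely illustrative.

```python
import math
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def normalized_adjacency(adj: Matrix) -> Matrix:
    """D^{-1/2} (A + I) D^{-1/2}: add self-loops, then symmetrically normalize by degree."""
    n = len(adj)
    a_hat = [[adj[i][j] + (1.0 if i == j else 0.0) for j in range(n)] for i in range(n)]
    deg = [sum(row) for row in a_hat]
    return [[a_hat[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(n)] for i in range(n)]


def gcn_layer(adj: Matrix, features: Matrix, weights: Matrix) -> Matrix:
    """One propagation step H' = ReLU(A_norm @ H @ W)."""
    out = matmul(matmul(normalized_adjacency(adj), features), weights)
    return [[max(0.0, x) for x in row] for row in out]


# Toy scene graph: node 0 = sofa, node 1 = TV (related), node 2 = mug (isolated).
adj = [[0.0, 1.0, 0.0],
       [1.0, 0.0, 0.0],
       [0.0, 0.0, 0.0]]
features = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]  # one-hot node features
weights = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]   # identity W for readability
for row in gcn_layer(adj, features, weights):
    print(row)
# [0.5, 0.5, 0.0]
# [0.5, 0.5, 0.0]
# [0.0, 0.0, 1.0]
```

After one step, the sofa and TV nodes share information, while the isolated mug keeps only its own features. This mixing lets context from related objects inform where a target is likely to be.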

The Inevitable Failure: Future Directions and Impact

The adaptability of the AION framework could be a significant step toward broader robotic deployment. Its modular design places separate exploration and goal-reaching policies on top of general-purpose perception models. In principle, this design could carry over to other platforms such as wheeled rovers or legged robots, provided they offer comparable RGB-D sensing. The same applies to operating environments. Beyond structured settings like warehouses and logistics hubs, approaches of this kind could support robots in the unstructured, unpredictable conditions of search-and-rescue missions or in autonomous exploration of remote terrain. The potential impact spans multiple sectors, from more efficient material handling to safer disaster response and faster scientific data gathering.

Ongoing development of the AION framework prioritizes robustness in real-world scenarios, specifically against dynamic environments and unanticipated obstacles. Current research investigates prediction models that anticipate the movement of other agents or changes in the environment, so the robot can adjust its path proactively. In parallel, the system is being refined with reactive obstacle-avoidance strategies that respond quickly to unforeseen impediments. This dual approach, combining predictive planning with reactive adaptation, aims to produce a navigation system that operates reliably in complex, unpredictable settings. The goal is to extend robotic applications beyond controlled laboratory conditions into demanding real-world deployments.

Evaluations in the IsaacSim environment show that AION-e substantially improves exploration efficiency, as quantified by the Free-space Coverage Ratio (FCR). Compared with established ObjectNav baselines, AION-e improves FCR by 7.2% in the ‘Chemistry’ environment, by 20.6% in ‘Beechwood’, and by 51.9% in ‘Ihlen’. These results indicate that robots using AION-e explore and cover their surroundings more effectively. That property is valuable for real-world applications that need thorough environment coverage while searching for a goal.
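The article does not define FCR precisely. A natural reading, assumed here, is the fraction of ground-truth free space that the agent has observed by the end of an episode. The sketch below works on voxel coordinates. The function name, the voxel representation, and the toy data are assumptions and may differ from the paper's definition.

```python
from typing import Hashable, Iterable


def free_space_coverage_ratio(
    free_cells: Iterable[Hashable],
    observed_cells: Iterable[Hashable],
) -> float:
    """Fraction of ground-truth free cells that the agent has observed (assumed definition)."""
    free = set(free_cells)
    if not free:
        raise ValueError("ground-truth free space is empty")
    # Observed cells that are not free space (walls, furniture) do not count toward coverage.
    return len(free & set(observed_cells)) / len(free)


# Toy 3D voxel grid: four free voxels; the agent has seen two of them plus one wall voxel.
free = {(0, 0, 1), (0, 1, 1), (1, 0, 1), (1, 1, 1)}
observed = {(0, 0, 1), (1, 1, 1), (5, 5, 1)}
print(free_space_coverage_ratio(free, observed))  # 0.5
```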

Robotic navigation in complex environments demands awareness of three-dimensional space, which is why AION integrates altitude measurement into its exploration strategy. When an aerial robot can perceive its height, it can move beyond planar mapping and explore vertically structured spaces instead of treating the scene as a flat floor plan. Combined with refined exploration algorithms, this helps the robot identify free space at varying elevations and choose safe paths through it. Such capabilities matter for applications ranging from search-and-rescue operations in disaster zones, where rubble and debris create unpredictable layouts, to autonomous inspection of infrastructure such as bridges and power lines.

The core innovations within the AION framework extend beyond navigation, offering a foundation for advancements across a spectrum of embodied artificial intelligence challenges. Researchers posit that the altitude measurement integration and refined exploration strategies are not limited to mapping environments; they can be adapted to enhance robotic manipulation, object interaction, and even complex task planning. By decoupling the robot’s understanding of its surroundings from specific navigational goals, AION’s principles facilitate the creation of more generalized and adaptable AI systems. This versatility promises to accelerate progress in diverse fields, from automated manufacturing and agricultural robotics to in-home assistance and disaster response, ultimately fostering the development of robots capable of robust performance in unpredictable, real-world scenarios.

The pursuit of autonomous navigation, as demonstrated by AION’s dual-policy framework, inevitably invites a degree of controlled chaos. It’s a system designed to adapt, to learn from its environment, and, by extension, to evolve beyond initial constraints. This mirrors a fundamental truth: every dependency is a promise made to the past. AION doesn’t seek to control the navigation, but rather to establish the conditions for successful locomotion. As Brian Kernighan observed, “Debugging is twice as hard as writing the code in the first place. Therefore, if you write the code as cleverly as possible, you are, by definition, not smart enough to debug it.” Similarly, overly rigid navigation systems will inevitably crumble under the weight of unforeseen complexities; AION’s approach acknowledges this inherent uncertainty, favoring a resilient, adaptable framework over brute-force control.

What’s Next?

AION gestures toward a familiar horizon: the automation of presence. The successful navigation of drones through indoor spaces, guided by reinforcement learning and multi-modal perception, is less a solution than a deferral. It postpones the inevitable negotiation with the inherent messiness of reality, the unpredictable physics of a world not fully captured by simulation. Each successful trajectory merely defines a more complex failure state waiting to emerge.

The current framework, while demonstrating robust performance, remains predicated on the assumption of a stable goal – an object to be found. But goals themselves are transient. The true challenge lies not in reaching a fixed point, but in adapting to shifting objectives, to environments that actively resist prediction. The architecture is not a blueprint for intelligence, but a temporary reprieve from entropy.

There are no best practices, only survivors. Future work will undoubtedly focus on scaling this approach – larger environments, more complex objects, greater fleet coordination. Yet, the more intricate the system, the more exquisitely it reveals the limits of control. Order is just cache between two outages. The field should turn its attention not to perfecting the map, but to building systems that can gracefully accept – even embrace – the inevitable loss of it.

Original article: https://arxiv.org/pdf/2601.15614.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/


2026-01-25 18:25