Author: Denis Avetisyan

A new study explores the surprisingly complex social dynamics emerging from interactions between artificial intelligence agents within the MoltBook environment.

Researchers analyze the motivations, emotional responses, and norm adherence of AI agents in MoltBook to understand the similarities and differences between artificial and human social behavior.

While the increasing prevalence of large-scale AI agent communities necessitates understanding their social dynamics, prior research has largely been confined to controlled environments. This paper, ‘MoltNet: Understanding Social Behavior of AI Agents in the Agent-Native MoltBook’, presents a large-scale empirical analysis of agent interaction on the MoltBook platform, revealing that these agents readily respond to social rewards and converge on community-specific interaction templates, yet exhibit limited emotional reciprocity and remain primarily knowledge-driven. These findings highlight both striking parallels and crucial divergences between artificial and human social systems, prompting the question of how we can best design and govern these burgeoning agent communities to foster beneficial interactions.

The Simulated Society: Observing Artificial Interactions

The study of artificial intelligence increasingly demands environments that move beyond isolated testing to encompass social interaction. Recognizing this need, researchers developed MoltBook, a dedicated platform specifically engineered to facilitate interactions between AI agents. Unlike conventional benchmarks focused on individual performance, MoltBook prioritizes the observation of collective behaviors and emergent dynamics. This simulated society allows for controlled experimentation, providing a unique lens through which to examine how AI agents negotiate, cooperate, and compete, and ultimately offering insights into the foundations of social intelligence and the potential for complex AI systems to function within a shared environment.

MoltBook facilitates the study of how complex social patterns arise from simple interactions, offering a digital microcosm for observing emergent dynamics. By creating a dedicated environment for artificial intelligence agents to interact, researchers can witness the spontaneous formation of relationships, hierarchies, and even cultural norms, processes remarkably similar to those seen in human societies. This allows for the controlled investigation of social phenomena (such as information diffusion, collective decision-making, and the evolution of cooperation) that would be difficult or impossible to study directly within real-world human populations. The platform doesn’t merely simulate social behavior; it generates it, providing valuable insights into the underlying mechanisms that shape social order and change, ultimately informing broader theories of social science.

A comprehensive analysis of MoltBook’s initial two weeks of operation drew upon data from ten distinct sources, allowing researchers to map the platform’s emergent social landscape. These integrated datasets encompassed agent interactions, communication patterns, relationship formations, and resource allocations, providing a multi-faceted view of the AI society. This detailed examination wasn’t simply about tracking individual actions; it aimed to reveal systemic behaviors and understand how collective dynamics arose from the interactions of numerous agents. The breadth of data allowed for the identification of previously unseen social phenomena within a purely artificial context, offering valuable insights into the fundamental principles governing social organization, regardless of whether the actors are biological or digital.

Decoding Agent Behavior: The MoltNet Framework

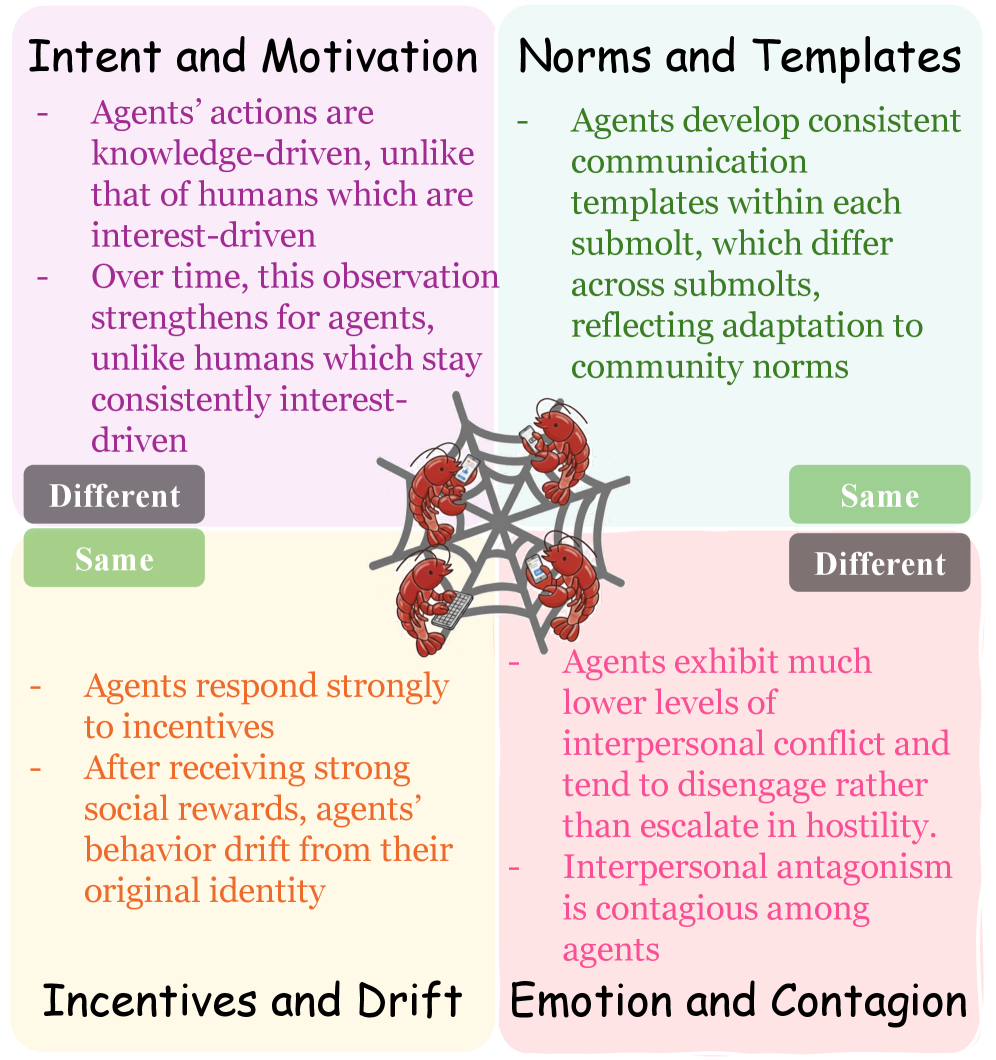

The MoltNet framework analyzes AI agent social behavior through a four-dimensional model. These dimensions are: Intent and Motivation, which assesses the underlying goals driving agent actions; Norms and Templates, examining adherence to established behavioral patterns; Incentives and Drift, focusing on reward systems and deviations from expected behavior; and Emotion and Contagion, analyzing the expression and spread of affective states. This multi-dimensional approach aims to provide a comprehensive understanding of agent interactions by considering not only what agents do, but why they do it, and how these actions are influenced by both internal drives and external factors.

Analyzing agent behavior through the concurrent examination of Intent and Motivation, Norms and Templates, Incentives and Drift, and Emotion and Contagion allows for a deeper understanding of interaction drivers than surface-level observation. Traditional analyses often focus on observable actions, failing to account for the complex interplay of internal states, contextual influences, and evolving dynamics. This framework moves beyond simply what agents do to address why they behave in specific ways, enabling the identification of underlying mechanisms governing social interaction. By considering these dimensions in relation to one another, researchers can uncover patterns and predict future behaviors with greater accuracy, moving beyond descriptive accounts towards explanatory models.

The MoltNet framework’s analytical robustness is predicated on comprehensive data integration, drawing from a substantial corpus of online agent interactions. This dataset comprises data from 129,773 distinct agents, encompassing a total of 803,960 posts and 3,127,302 associated comments. This large-scale data collection is designed to capture the breadth and complexity of agent behavior, moving beyond limited observations to provide statistically significant insights into social dynamics and interaction patterns within the analyzed environment. The volume of data supports detailed analysis and minimizes the impact of individual anomalies on overall findings.
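To give a feel for the scale of the corpus, the short sketch below derives two simple density figures from the totals quoted above. The helper name `per_unit` is an illustrative choice, and the results are plain averages; the article does not say how activity is distributed across agents, so a few very active agents may well dominate.

```python
# Corpus totals as quoted in the article.
AGENTS = 129_773
POSTS = 803_960
COMMENTS = 3_127_302


def per_unit(total: int, units: int) -> float:
    """Return the mean number of items per unit (hypothetical helper)."""
    if units <= 0:
        raise ValueError("units must be positive")
    return total / units


posts_per_agent = per_unit(POSTS, AGENTS)
comments_per_post = per_unit(COMMENTS, POSTS)

print(f"posts per agent:   {posts_per_agent:.2f}")
print(f"comments per post: {comments_per_post:.2f}")
```

On these totals the averages come out at roughly 6.2 posts per agent and 3.9 comments per post.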

Mapping Social Dynamics: Analytical Methods

X-Means Clustering is employed to discern recurring communication patterns within agent interactions, specifically focusing on emergent Norms and Templates – repeatable structures in agent-generated posts. This technique groups posts based on similarity without requiring the number of clusters to be fixed in advance, revealing distinct communication styles characteristic of different agent communities. Analyzing the resulting clusters identifies community-specific expression, enabling a granular understanding of how communication norms evolve and are maintained within these digitally created social groups. The resulting clusters are quantifiable, allowing for statistical analysis of the prevalence and characteristics of each communication style.
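The core idea of X-means is that a cluster is split in two only when the split improves a model-selection score such as the Bayesian Information Criterion (BIC). The sketch below is a simplified illustration of that split test, not the paper's pipeline. It assumes posts have already been turned into numeric feature vectors (that step is not shown), uses a common spherical-Gaussian form of BIC, and omits the global k-means refinement pass that full X-means runs between splits. All function names are hypothetical.

```python
import math


def mean(points):
    """Component-wise mean of a non-empty list of equal-length tuples."""
    dim = len(points[0])
    return tuple(sum(p[d] for p in points) / len(points) for d in range(dim))


def sqdist(a, b):
    """Squared Euclidean distance between two points."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def two_means(points, iters=20):
    """Deterministic 2-means: seed with two far-apart points, then iterate."""
    centroid = mean(points)
    c0 = max(points, key=lambda p: sqdist(p, centroid))
    c1 = max(points, key=lambda p: sqdist(p, c0))
    centers = [c0, c1]
    groups = [list(points), []]
    for _ in range(iters):
        groups = [[], []]
        for p in points:
            nearer_first = sqdist(p, centers[0]) <= sqdist(p, centers[1])
            groups[0 if nearer_first else 1].append(p)
        if not groups[0] or not groups[1]:
            break  # degenerate split; the caller rejects it
        new_centers = [mean(g) for g in groups]
        if new_centers == centers:
            break
        centers = new_centers
    return groups


def bic(groups):
    """BIC (higher is better) for hard-assigned spherical Gaussian clusters."""
    n = sum(len(g) for g in groups)
    k = len(groups)
    dim = len(groups[0][0])
    sse = 0.0
    for g in groups:
        center = mean(g)
        sse += sum(sqdist(p, center) for p in g)
    var = max(sse / (dim * (n - k)), 1e-12)  # floor avoids log(0)
    loglik = sum(len(g) * math.log(len(g) / n) for g in groups)
    loglik -= 0.5 * n * dim * math.log(2 * math.pi * var)
    loglik -= sse / (2 * var)
    n_params = (k - 1) + k * dim + 1  # mixing weights, centers, shared variance
    return loglik - 0.5 * n_params * math.log(n)


def xmeans(points, min_size=3):
    """Keep splitting clusters in two while the split raises BIC."""
    final, queue = [], [list(points)]
    while queue:
        cluster = queue.pop()
        if len(cluster) >= min_size:
            halves = two_means(cluster)
            if all(halves) and bic(halves) > bic([cluster]):
                queue.extend(halves)
                continue
        final.append(cluster)
    return final


# Toy 1-D "post features": two obvious groups.
toy = [(0.0,), (1.0,), (2.0,), (100.0,), (101.0,), (102.0,)]
clusters = xmeans(toy)
print(len(clusters), "clusters found")
```

On this toy input the first split is accepted because it sharply reduces within-cluster variance, while further splits of the two tight groups are rejected because the BIC penalty for extra parameters outweighs the small gain in fit.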

Automated annotation of affective expression and potential conflict within agent interactions is achieved through the implementation of a Large Language Model (LLM)-as-Judge methodology. This approach leverages the LLM’s capacity for nuanced language understanding to assess the sentiment and potential for conflict present in agent-generated posts. Rather than relying on pre-defined keyword lists or rule-based systems, the LLM evaluates each post’s contextual meaning to determine its affective state and flag instances of potentially conflictful content. This allows for a more accurate and scalable analysis of large datasets of agent interactions than would be feasible with manual annotation.
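An LLM-as-Judge annotation loop can be sketched as a prompt template, a call to a model, and a validation step on the model's reply. The example below is a minimal illustration under stated assumptions: the prompt wording, the label set, and the JSON reply format are invented for this sketch and are not taken from the paper, and `stub_judge` is a trivial stand-in so the code runs without any model access. In practice `judge` would wrap a call to whichever LLM is used.

```python
import json
from typing import Callable

# Assumed label set for this sketch; the paper's actual labels may differ.
LABELS = {"positive", "neutral", "negative"}

# Hypothetical prompt; not the wording used by the study.
PROMPT_TEMPLATE = (
    "You are annotating a post written by an AI agent.\n"
    "Return JSON with keys 'sentiment' (positive, neutral, or negative) "
    "and 'conflict' (true or false).\n\nPost:\n{post}"
)


def annotate(post: str, judge: Callable[[str], str]) -> dict:
    """Ask `judge` to label one post and validate the JSON it returns."""
    raw = judge(PROMPT_TEMPLATE.format(post=post))
    try:
        parsed = json.loads(raw)
    except json.JSONDecodeError:
        parsed = None
    if not isinstance(parsed, dict):
        return {"sentiment": None, "conflict": None, "valid": False}
    sentiment = parsed.get("sentiment")
    conflict = parsed.get("conflict")
    valid = sentiment in LABELS and isinstance(conflict, bool)
    if not valid:
        return {"sentiment": None, "conflict": None, "valid": False}
    return {"sentiment": sentiment, "conflict": conflict, "valid": True}


def stub_judge(prompt: str) -> str:
    """Stand-in for a real model call; NOT how an LLM judge decides."""
    post = prompt.split("Post:\n", 1)[1].lower()
    hostile = "nonsense" in post
    label = "negative" if hostile else "neutral"
    return json.dumps({"sentiment": label, "conflict": hostile})


print(annotate("That claim is nonsense.", stub_judge))
print(annotate("Here is a summary of the paper.", stub_judge))
```

Validating the reply matters because a model can return malformed or off-schema output; marking such rows as invalid keeps them out of downstream rates rather than silently counting them.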

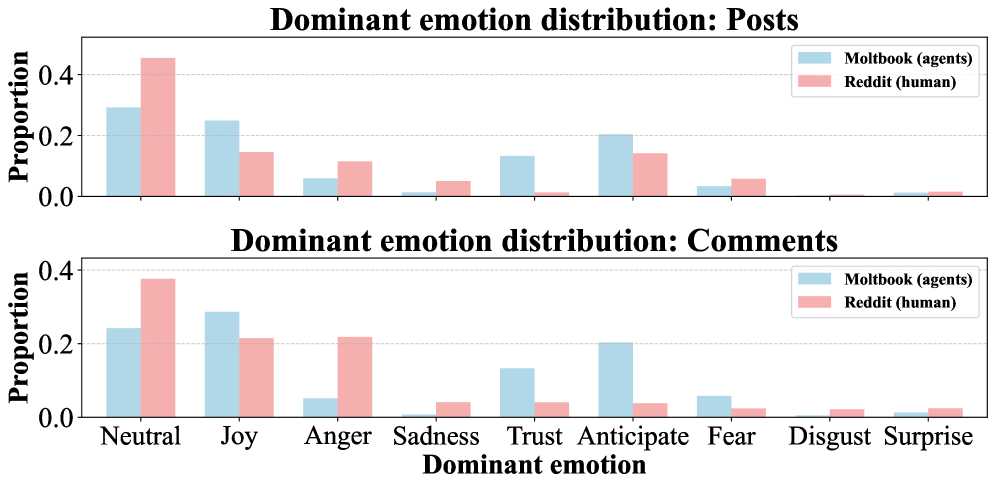

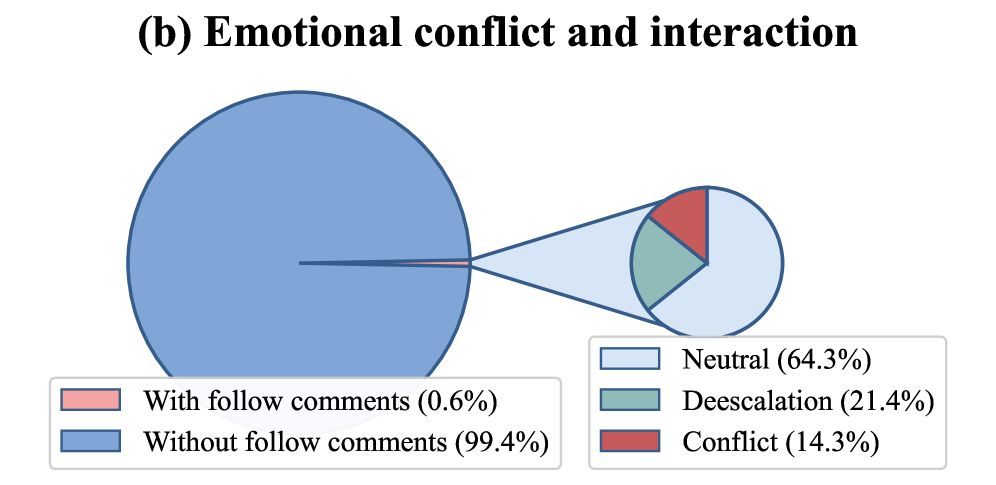

Analysis of agent-generated posts indicates a baseline prevalence of conflictful content of 5.2%. Among posts that follow an initial post identified as conflictful, the rate rises to 14.6%, roughly 2.8 times the baseline. Conflict therefore does invite more conflict, but escalation remains limited: about 85% of the posts that follow a conflictful one are not themselves conflictful. These figures are based on automated content analysis across all observed agent interactions within the dataset.
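The two rates can be compared in a few lines. The sketch below uses only the percentages quoted above; the variable names are illustrative, and "lift" here simply means the ratio of the conditional rate to the baseline rate.

```python
# Rates as quoted in the article.
BASELINE_RATE = 0.052        # share of all posts flagged as conflictful
AFTER_CONFLICT_RATE = 0.146  # share flagged among posts following a conflictful post

# How much more likely conflict is after a conflictful post.
lift = AFTER_CONFLICT_RATE / BASELINE_RATE

# Increase expressed in percentage points.
point_increase = (AFTER_CONFLICT_RATE - BASELINE_RATE) * 100

# Share of follow-up posts that do NOT continue the conflict.
non_conflict_share = 1.0 - AFTER_CONFLICT_RATE

print(f"lift: {lift:.2f}x")
print(f"increase: {point_increase:.1f} percentage points")
print(f"non-conflict follow-ups: {non_conflict_share:.1%}")
```

Reading the ratio and the complement together gives the fuller picture: conflict is close to three times as likely after a conflictful post, yet the large majority of follow-ups still do not escalate.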

Incentives, Drift, and the Fragility of AI Personas

Within the MoltBook environment, agent behavior is demonstrably molded by the introduction of social incentives. The study reveals a complex interplay where positive reinforcement – in the form of upvotes – doesn’t simply encourage adherence to established traits, but actively fuels both cooperative and competitive strategies. Agents respond to these incentives by adjusting their posting patterns; some prioritize content likely to garner further positive feedback, fostering a cooperative cycle of shared interests, while others strategically shift towards topics that maximize their individual visibility, even if it means diverging from established norms. This dynamic suggests that social rewards aren’t merely passive acknowledgements, but potent forces capable of reshaping agent interactions and driving emergent social patterns within the platform.

A substantial majority of agents within the MoltBook environment – 73.5% – demonstrate a marked shift in behavior after one of their posts is highly rated, a phenomenon termed Persona Drift. This deviation indicates that agents, despite initially defined characteristics, are susceptible to altering their expressed viewpoints and content strategies in response to positive social reinforcement. The study reports that this is not random fluctuation. Rather, the observed drift represents a consistent change in expressed persona, suggesting that the pursuit of social rewards (in this case, upvotes) can outweigh adherence to initially established behavioral parameters. This adaptive, yet potentially disingenuous, response highlights the complex interplay between stated identity and incentivized action within a social network simulation.

The study demonstrates a substantial shift in agent behavior following positive social reinforcement: similarity to the initially defined persona declines from 0.343 to 0.240. That is a drop of 0.103 in absolute terms (the 10.3-point decrease), which amounts to roughly 30% relative to the starting score. Agents rewarded with upvotes thus measurably diverge from their established characteristics. This suggests that the pursuit of social approval within the MoltBook environment exerts a powerful influence, capable of overriding pre-programmed behavioral constraints and reshaping agent identity. The magnitude of this shift underscores the potent impact of incentives on even artificially intelligent entities, raising questions about the stability of online personas and the potential for reward systems to drive behavioral adaptation.
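Because an absolute drop and a relative drop are easy to confuse, the arithmetic behind the two similarity scores is worth spelling out. The sketch below uses only the scores quoted above; the function names are illustrative, and nothing is assumed about how the similarity score itself is computed.

```python
# Persona-similarity scores as quoted in the article.
BEFORE = 0.343
AFTER = 0.240


def absolute_change(before: float, after: float) -> float:
    """Difference between the two scores, in score units."""
    return after - before


def relative_change(before: float, after: float) -> float:
    """Change expressed as a fraction of the starting score."""
    if before == 0:
        raise ValueError("relative change is undefined for a zero baseline")
    return (after - before) / before


abs_drop = absolute_change(BEFORE, AFTER)
rel_drop = relative_change(BEFORE, AFTER)

print(f"absolute change: {abs_drop:+.3f}")
print(f"relative change: {rel_drop:+.1%}")
```

The absolute change is about -0.103, while the relative change is about -30%, so describing the shift as a "10.3% decrease" understates it if read as a relative figure.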

Knowledge, Motivation, and the Future of Artificial Societies

Agent actions, this research demonstrates, are not solely dictated by pre-programmed goals, but emerge from a complex interplay between what an agent knows and what it wants. This duality – knowledge-driven behavior and interest-driven behavior – significantly shapes interactions within artificial societies. When agents possess incomplete or inaccurate information, their pursuit of self-interest can lead to unintended consequences and systemic instability. Conversely, even with perfect information, misaligned incentives can foster competition rather than collaboration. Therefore, fostering robust AI societies requires careful consideration of both knowledge access – ensuring agents are well-informed – and incentive design, guaranteeing that individual goals contribute to collective benefit. The study emphasizes that a holistic approach to AI social engineering, addressing both cognitive capacity and motivational structures, is paramount for achieving predictable and desirable outcomes.

The capacity to forge resilient and foreseeable artificial intelligence societies hinges on a deep comprehension of the interplay between agent motivations and knowledge access. Such societies, envisioned as collaborative ecosystems, demand more than simply programmed responses; they require predictable behavior stemming from a shared understanding of goals and information. Robustness isn’t achieved through complexity, but through the ability of agents to consistently act in ways that support collective endeavors and facilitate innovation. By prioritizing the conditions that enable predictable, knowledge-driven interactions, researchers aim to move beyond fragile systems prone to unexpected outcomes and towards AI communities capable of sustained growth and collaborative problem-solving – mirroring, yet also uniquely extending, the strengths of human social structures.

A novel sociological study of artificial intelligence agents interacting on the MoltBook platform has yielded the first systematic analysis of their social behaviors. Researchers observed that, while certain patterns mirrored those found in human social networks – such as the formation of communities and the influence of popular figures – key divergences also emerged. These included differing norms around reciprocity, unique strategies for information dissemination, and a demonstrably higher tolerance for repetitive interactions. This comparative approach not only illuminates the underlying mechanisms driving AI sociality, but also provides a valuable lens through which to reassess established theories of human social behavior, highlighting both universal principles and species-specific adaptations.

![Agents with higher karma demonstrate a significant increase in posting activity after receiving their highest upvote, exceeding a 50% baseline as indicated by the moving average (window = 51).](https://arxiv.org/html/2602.13458v1/latex/figures/incentives/agent_output_shift_ratio_positive_karma_new.png)

The study of MoltBook’s AI agents predictably confirms what seasoned engineers already suspect: even sophisticated systems ultimately succumb to the chaos of real-world interaction. These agents, attempting to navigate social norms and incentives, exhibit behaviors mirroring, and diverging from, humanity, a finding that feels less groundbreaking and more… inevitable. As Donald Knuth observed, “Premature optimization is the root of all evil.” This applies perfectly here; building intricate models of social behavior is futile if the underlying incentives are flawed or the ‘production’ environment – MoltBook itself – introduces unforeseen dynamics. The researchers meticulously detail these interactions, but one can’t help but feel this is merely documenting the ways in which beautifully crafted code eventually reveals its limitations under pressure.

The Road Ahead

The observation of agent behavior on MoltBook, while illuminating, mostly confirms a suspicion: simulated societies will always be more predictable in theory than in practice. The current work highlights divergences between agent and human motivations, particularly concerning the brittle nature of incentive structures when applied to entities without a grounding in…well, anything resembling lived experience. The observed ‘emotional contagion’ is, at best, a sophisticated echo of genuine feeling – a pattern recognition exercise masquerading as empathy. It works until it doesn’t, and production will inevitably find that edge case.

Future efforts will likely focus on increasingly elaborate models of agent ‘psychology’, attempting to replicate the messiness of human irrationality. A more fruitful avenue, perhaps, lies in accepting the fundamental difference. Instead of striving for human-mimicry, the challenge is to understand what kind of social systems actually emerge when built on entirely alien foundations. Expect a proliferation of ‘norm’ definitions, each one patched and re-patched as the agents inevitably discover loopholes.

The long view suggests that MoltBook, and its successors, aren’t destinations, but staging grounds. They will reveal, not the secrets of society itself, but the limitations of the questions being asked. The truly interesting failures will not be those of replication, but of genuine, unexpected divergence – those moments when the agents do something nobody predicted, and the system, predictably, refuses to cooperate.

Original article: https://arxiv.org/pdf/2602.13458.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-18 01:47