Author: Denis Avetisyan

A new study examines real-world student interactions with AI-powered teaching assistants in a cybersecurity course, revealing key insights into learning patterns and tutor effectiveness.

Large-scale analysis of student conversations and performance data shows a correlation between reactive dialogue and lower challenge completion rates, informing best practices for AI tutor design and educational strategies.

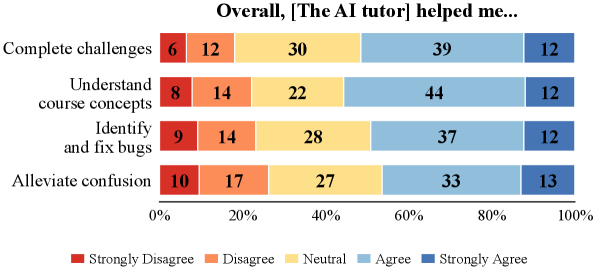

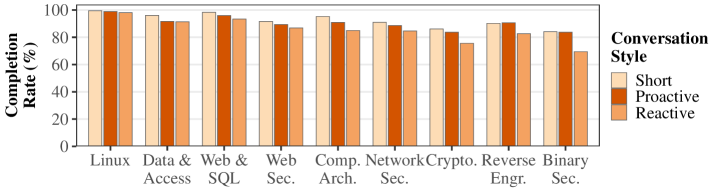

Despite growing demand for skilled cybersecurity professionals, scalable and effective educational approaches remain a challenge. The paper ‘Do Hackers Dream of Electric Teachers?: A Large-Scale, In-Situ Evaluation of Cybersecurity Student Behaviors and Performance with AI Tutors’ addresses this with an observational study of 309 students interacting with an embedded AI tutor in an upper-division cybersecurity course. It identifies three distinct conversational styles (Short, Reactive, and Proactive) that significantly correlate with challenge completion. Notably, students employing reactive strategies demonstrated lower success rates, suggesting a need to refine AI tutor design and pedagogical integration. How can we best leverage AI tutors to foster proactive problem-solving and enhance cybersecurity education for all learners?

The Inevitable Scalability Paradox of Cybersecurity Education

Cybersecurity education frequently encounters a scalability issue when attempting to deliver individualized support to students grappling with intricate problems. The field’s rapidly evolving threat landscape demands constant curriculum updates and necessitates that students develop highly specialized skills, yet traditional course formats – often relying on lectures and generalized lab exercises – struggle to address the unique learning needs and varying skill levels within a class. This creates a significant challenge, as students requiring additional assistance may not receive it promptly, hindering their progress and potentially leading to frustration. The inherent complexity of cybersecurity concepts, coupled with the hands-on nature of effective learning in the domain, often overwhelms available resources, making truly personalized guidance – crucial for mastering the subject – difficult to provide at scale.

The very strength of cybersecurity education – its emphasis on practical, hands-on experience – paradoxically presents a significant logistical challenge. Because students learn best by actively engaging with systems and tackling realistic problems, instructors and teaching assistants face an overwhelming demand for individualized support. Each student’s unique approach to a challenge, and the inevitable troubleshooting required, quickly creates a bottleneck, limiting the number of students an instructor can effectively mentor. This isn’t simply a matter of time; the depth of expertise required to guide students through complex security scenarios means that scaling support necessitates either significantly increasing staff or developing innovative approaches to facilitate self-directed learning, both of which present substantial hurdles for many institutions.

The process of mastering cybersecurity demands active problem-solving, yet learners often encounter significant hurdles when independently navigating complex challenges. While exploration fosters deeper understanding, unguided attempts can quickly lead to frustration and stalled progress. This is because cybersecurity isn’t simply about memorizing facts; it requires applying knowledge to novel situations, a skill honed through iterative practice and informed experimentation. Without sufficient scaffolding – targeted feedback, hints, or curated resources – students may spend excessive time pursuing unproductive paths, hindering their learning momentum and potentially diminishing their confidence. Consequently, the effectiveness of hands-on cybersecurity education hinges not only on providing opportunities for active engagement, but also on strategically supporting students as they chart their course through these intricate landscapes.

![The AI Tutor operates by capturing a student's terminal and file context alongside their conversation with the system, sending this information to a large language model (LLM) to generate context-aware responses.](https://arxiv.org/html/2602.17448v1/x1.png)

Augmenting the Curriculum: Introducing a Proactive Learning Partner

The AI Tutor functions as an integrated support system within the cybersecurity curriculum, offering students immediate assistance as needed. This intelligent assistant is designed to augment the learning experience by providing on-demand guidance and clarification of concepts. Unlike static learning materials, the AI Tutor responds directly to student input and adapts to their individual progress through the course. Its availability throughout the learning modules aims to reduce roadblocks and encourage continuous engagement with the cybersecurity content, fostering a more proactive learning environment.

The AI Tutor functions directly within the student’s Linux Environment, enabling it to analyze System Context to deliver targeted assistance. This context includes details of the current terminal session, such as the student’s working directory, the commands recently executed, and the output generated. By monitoring these elements, the tutor can infer the student’s current task and identify potential areas of difficulty. This allows the AI to offer help specifically tailored to the student’s immediate situation, rather than providing generic advice, and ensures relevance to the active learning process.
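To make the idea of System Context concrete, the following is a minimal sketch of how terminal details might be bundled with a student's question before being sent to a language model. The `TerminalContext` class, the `build_prompt` function, the field names, and the sample session are all hypothetical illustrations; the study's actual implementation is not described here.

```python
from dataclasses import dataclass, field


@dataclass
class TerminalContext:
    """Hypothetical snapshot of a student's terminal session."""
    working_dir: str
    recent_commands: list[str] = field(default_factory=list)
    last_output: str = ""


def build_prompt(context: TerminalContext, question: str, max_commands: int = 5) -> str:
    """Combine system context and the student's question into one prompt string."""
    commands = context.recent_commands[-max_commands:]  # keep only the latest few
    command_lines = "\n".join(f"$ {cmd}" for cmd in commands) or "(none)"
    return (
        f"Working directory: {context.working_dir}\n"
        f"Recent commands:\n{command_lines}\n"
        f"Last output:\n{context.last_output or '(empty)'}\n"
        f"Student question: {question}"
    )


# Illustrative session data (assumed, not taken from the study).
context = TerminalContext(
    working_dir="/home/student/challenge",
    recent_commands=["ls -la", "cat notes.txt", "./run"],
    last_output="bash: ./run: Permission denied",
)
prompt = build_prompt(context, "Why can't I run this file?")
print(prompt)
```

Capping the number of recent commands is one simple way to keep the prompt focused on the student's immediate situation rather than the whole session history.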

The AI Tutor utilizes the Socratic Method to foster independent problem-solving skills. Rather than directly providing solutions to cybersecurity challenges, the tutor responds to student commands and errors with clarifying questions and prompts. This approach encourages students to analyze their current approach, identify the root cause of issues, and formulate their own solutions. By guiding students through a series of targeted questions, the tutor facilitates a deeper understanding of the underlying concepts and promotes critical thinking, ultimately enhancing their ability to independently address future challenges within the Linux Environment.

The Echo of Agency: Fostering Proactive Learning Through Conversation

The AI Tutor is designed to support student-led exploration through a proactive conversation style. This approach differs from traditional tutoring systems by prioritizing student initiative in directing the learning process. Rather than directly presenting information, the AI Tutor responds to student queries and elaborates on their statements, offering targeted clarification only when necessary to address misconceptions or knowledge gaps. This method encourages students to actively construct their understanding, fostering a more engaged and self-directed learning experience, while the tutor functions as a responsive support mechanism rather than a primary lecturer.

Data collected from student interactions with the AI Tutor indicates a positive correlation between adopting a proactive conversation style and both engagement levels and conceptual understanding. Specifically, students who actively led the conversation and directed the learning process exhibited greater persistence in problem-solving and a more nuanced grasp of the subject matter as evidenced by their responses and the depth of their inquiries. These students consistently asked clarifying questions that went beyond surface-level comprehension, demonstrating an ability to identify knowledge gaps and formulate targeted requests for assistance, a behavior less frequently observed in students who relied more passively on the tutor’s guidance.

Analysis of student interactions with the AI Tutor indicates a positive correlation between the proactive conversation style and challenge completion rates, demonstrating a measurable improvement in learning outcomes. Statistical analysis revealed a significant interaction between conversation style and module difficulty [χ²(16) = 46.67, p < 0.001]. This indicates that the effect of the proactive conversation style on challenge completion is not uniform across all modules; its impact is demonstrably higher in more difficult modules, suggesting the approach is particularly effective when students are grappling with complex concepts.
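As a sanity check on the reported statistic, the tail probability of a chi-square variable can be computed directly. The sketch below uses the closed form that holds for even degrees of freedom, so it needs only the standard library; the function name `chi2_sf_even_df` is introduced here for illustration and is not from the study.

```python
import math


def chi2_sf_even_df(x: float, df: int) -> float:
    """Upper-tail probability P(X >= x) for a chi-square variable with even df.

    For df = 2m the tail has the closed form
    exp(-x/2) * sum_{k=0}^{m-1} (x/2)^k / k!.
    """
    if df <= 0 or df % 2 != 0:
        raise ValueError("this closed form only holds for positive even df")
    half = x / 2.0
    term = 1.0   # (x/2)^0 / 0!
    total = 1.0
    for k in range(1, df // 2):
        term *= half / k  # builds (x/2)^k / k! incrementally
        total += term
    return math.exp(-half) * total


# Values reported in the text: chi-square = 46.67 with 16 degrees of freedom.
p_value = chi2_sf_even_df(46.67, 16)
print(f"p = {p_value:.2e}")
```

The computed value falls well below 0.001, which is consistent with the significance level stated above.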

The Inherent Risk: Enhancing Security Knowledge and Mitigating System Exposure

The very foundation of practical cybersecurity education – the Linux environment – inherently introduces students to potential system weaknesses. While crucial for developing hands-on skills, these systems are susceptible to vulnerabilities like command injection, where malicious code can be inserted into seemingly legitimate commands. This isn’t a flaw in the educational approach, but rather an intentional aspect of the learning process; students are directly exposed to the kinds of vulnerabilities security professionals encounter in real-world scenarios. The challenge lies in creating a safe and controlled environment where these weaknesses can be explored, understood, and ultimately, defended against, without compromising system integrity or creating actual security breaches.
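For readers unfamiliar with command injection, here is a small, harmless illustration. It assumes a POSIX system with a shell and an `echo` command available; the input string is an invented example, not one from the course.

```python
import subprocess

# Attacker-controlled text: the ";" ends the intended command and starts a new one.
user_input = "hello; echo INJECTED"

# Vulnerable: the input is spliced into a string that a shell then interprets.
vulnerable = subprocess.run(
    "echo " + user_input, shell=True, capture_output=True, text=True
)

# Safer: pass an argument list so no shell parses the input.
safe = subprocess.run(["echo", user_input], capture_output=True, text=True)

print("vulnerable:", vulnerable.stdout.splitlines())
print("safe:      ", safe.stdout.splitlines())
```

In the vulnerable call the shell runs two commands, so the injected `echo` executes; in the safer call the whole string is treated as a single argument and printed literally.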

Rather than merely blocking potentially harmful code, the AI Tutor is designed as a controlled environment for students to actively investigate security vulnerabilities like command injection. This approach allows learners to experiment with flawed code – and experience the consequences – without compromising system security or encountering real-world risks. By intentionally permitting, then analyzing, unsuccessful attempts, the system reinforces secure coding principles through direct experience. This methodology shifts the focus from simply avoiding errors to understanding why certain code is insecure, fostering a deeper and more lasting comprehension of secure development practices and building confidence in recognizing and mitigating future threats.

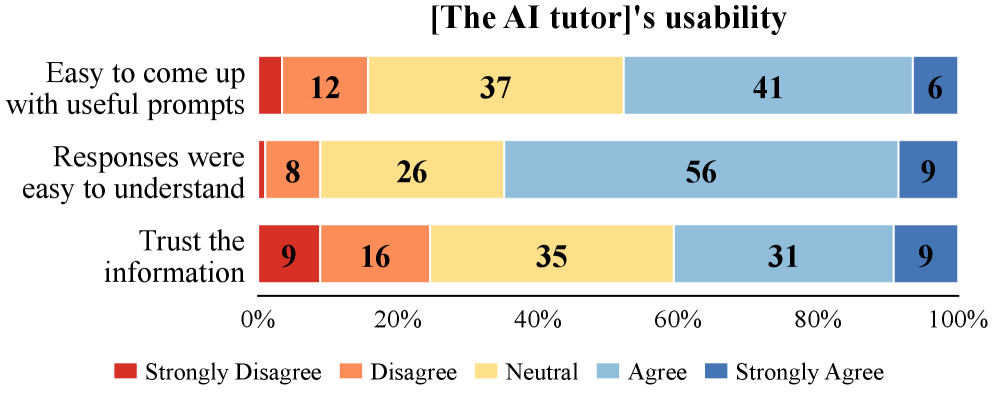

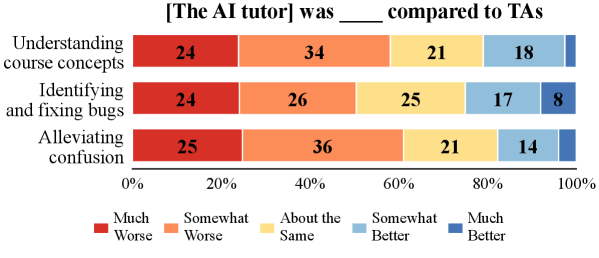

The implementation of an AI Tutor within a cybersecurity curriculum demonstrably reshapes the support structure for students, freeing up valuable time for Course Teaching Assistants to address more nuanced and challenging problems. Data analysis reveals a significant correlation between conversational style and student success: reactive conversational styles (those responding solely to immediate student actions) were associated with substantially lower challenge completion rates, with the odds of success 34% to 61% lower. This suggests that a more proactive, guiding approach is crucial for effective learning. However, current levels of student trust in the AI Tutor remain moderate, with only 40.6% reporting confidence in the information provided, highlighting a continued need to refine the system’s credibility and ensure alignment with established pedagogical principles.
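A reduction in odds is not the same as an equal reduction in probability, so it can help to work one case through. The sketch below converts a 34% and a 61% drop in odds (odds ratios of 0.66 and 0.39) into completion probabilities. The 80% baseline is a hypothetical value chosen for illustration and does not come from the study.

```python
def probability_after_odds_change(baseline_prob: float, odds_ratio: float) -> float:
    """Scale the odds implied by baseline_prob and convert back to a probability."""
    if not 0.0 < baseline_prob < 1.0:
        raise ValueError("baseline_prob must be strictly between 0 and 1")
    odds = baseline_prob / (1.0 - baseline_prob)
    new_odds = odds * odds_ratio
    return new_odds / (1.0 + new_odds)


baseline = 0.80  # hypothetical completion probability, not from the study
for reduction in (0.34, 0.61):
    odds_ratio = 1.0 - reduction
    adjusted = probability_after_odds_change(baseline, odds_ratio)
    print(f"odds reduced by {reduction:.0%}: {baseline:.0%} -> {adjusted:.1%}")
```

Under this assumed baseline, the completion probability falls to roughly 73% and 61% respectively; a different baseline would give different absolute changes for the same odds ratios.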

The study illuminates a cyclical pattern – a system attempting to correct its own shortcomings. It observes how reactive conversational styles correlate with diminished completion rates, a clear indication that the initial design, while functional, necessitates refinement. This echoes Ken Thompson’s sentiment: “Everything built will one day start fixing itself.” The AI tutor, in essence, is an ecosystem in development. Its evolution isn’t about achieving perfect control, but about fostering an environment where adjustments emerge organically from student interactions, a dance between intention and unforeseen consequence. The system doesn’t deliver instruction; it reveals the gaps in its own logic, prompting a continuous process of self-correction.

The Silent Curriculum

The study illuminates not a triumph of intelligent tutoring, but the predictable contours of student entropy. Completion rates, those pale indicators of engagement, shift with conversational style, with a reactive exchange proving more corrosive than proactive guidance. It is not merely a matter of ‘better’ AI, but of acknowledging the system isn’t built; it becomes. Each prompt answered, each query resolved, is a seed sown in the garden of future misunderstandings. The architecture of the interaction prophesies a specific mode of failure, a particular shape of ignorance.

The data whispers of a hidden curriculum, a pedagogy of learned helplessness subtly reinforced by the very tools intended to empower. The challenge isn’t to ‘fix’ the tutor, but to design for graceful degradation: to anticipate the points of systemic friction, the inevitable loops of confusion. The metrics of success (clicks, completions) are insufficient. The true measure lies in the quality of the questions students cease to ask.

Future work must abandon the pursuit of perfect instruction. Instead, it should focus on cultivating systems that diagnose their own limitations, that confess their inability to know. A tutor that admits its fallibility may, paradoxically, be the most effective teacher of all. The system remains incomplete, and so, too, must its evaluation be. Debugging never ends; only attention does.

Original article: https://arxiv.org/pdf/2602.17448.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-22 11:29