Author: Denis Avetisyan

A new framework leverages smart meter data to build detailed behavioral profiles of energy consumers, moving beyond simple demographic segmentation.

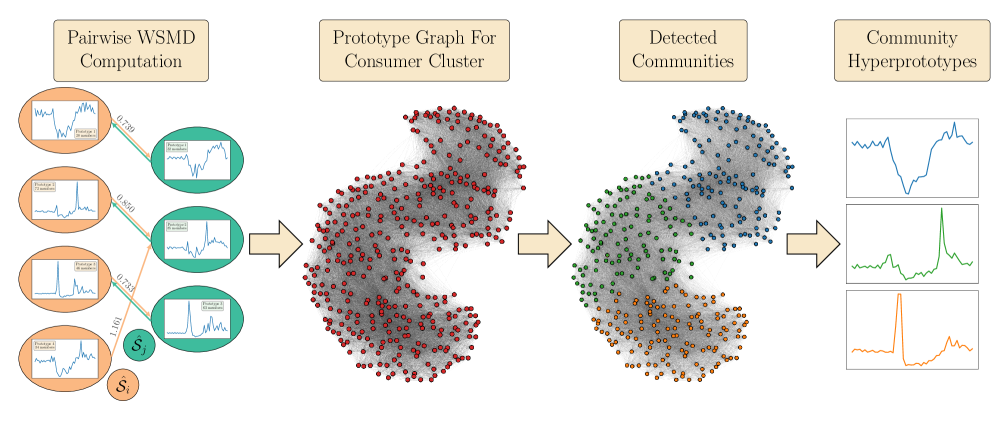

This paper presents CROCS, a two-stage clustering approach for behaviour-centric consumer segmentation using smart meter data.

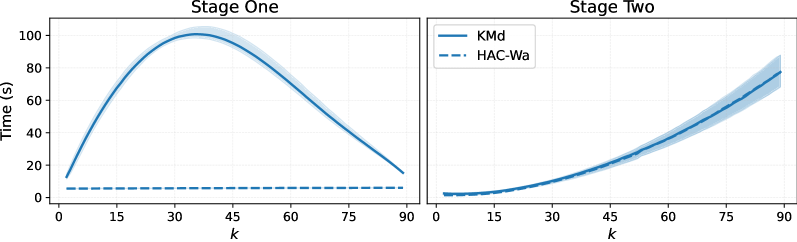

Effective demand-side management is increasingly vital for balancing modern electricity grids, yet existing consumer segmentation methods struggle to capture the diversity of real-world usage behaviours. The paper "CROCS: A Two-Stage Clustering Framework for Behaviour-Centric Consumer Segmentation with Smart Meter Data" introduces an approach that addresses these limitations by first summarizing each consumer's consumption patterns and then clustering consumers by the similarity of those summaries. CROCS uses a weighted set-to-set distance metric and community detection to reveal interpretable consumer groups with shared diurnal behaviours, and is reported to be robust to data anomalies and to scale efficiently. Will this framework enable more targeted and effective demand response programs, ultimately optimizing grid stability and reducing costs?
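To make the idea of a weighted set-to-set distance concrete, here is a minimal Python sketch. It is not the metric defined in the paper, whose exact form is not given here. It assumes each consumer is summarized as a set of representative daily load shapes, each with a weight (for example, the share of days it covers), and it uses a symmetrized, weight-averaged nearest-neighbour distance. The names `Summary`, `directed_distance`, and `set_distance` are hypothetical, and the community detection stage is omitted.

```python
import math

# Assumption: a consumer summary is a list of (weight, load shape) pairs,
# where weights sum to 1 and every load shape has the same length.
Profile = list[float]
Summary = list[tuple[float, Profile]]


def euclidean(p: Profile, q: Profile) -> float:
    """Plain Euclidean distance between two load shapes."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(p, q)))


def directed_distance(a: Summary, b: Summary) -> float:
    """Weighted average, over shapes in a, of the distance to the closest shape in b."""
    return sum(w * min(euclidean(p, q) for _, q in b) for w, p in a)


def set_distance(a: Summary, b: Summary) -> float:
    """Symmetrized weighted set-to-set distance (illustrative choice only)."""
    return 0.5 * (directed_distance(a, b) + directed_distance(b, a))


# Toy two-slot "days": consumer_a mostly peaks in the second slot,
# consumer_b always does.
consumer_a: Summary = [(0.7, [0.0, 1.0]), (0.3, [1.0, 0.0])]
consumer_b: Summary = [(1.0, [0.0, 1.0])]
print(set_distance(consumer_a, consumer_b))
```

In a full pipeline, pairwise distances of this kind would feed a similarity graph on which a community detection algorithm is run; that second stage is left out because its details are not described in this article.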

The Limits of Pattern Recognition

Despite their remarkable ability to generate human-quality text, Large Language Models frequently falter when confronted with problems demanding more than simple pattern recognition. These models excel at mimicking language structure and identifying correlations within vast datasets, yet struggle to perform reliable multi-step inference – the ability to connect disparate pieces of information across several logical steps. Tasks requiring logical deduction, such as solving mathematical word problems or evaluating the validity of arguments, reveal this limitation; while a model might grasp individual sentences, it often fails to synthesize them into a coherent and accurate conclusion. This isn’t a matter of insufficient data, but rather a fundamental challenge in moving beyond statistical association to genuine understanding and reasoning capability, highlighting a crucial gap between fluency and true intelligence.

Conventional natural language processing techniques often falter when confronted with the subtleties of human reasoning. These methods, frequently reliant on statistical correlations within text, struggle to grasp the underlying logic, context, and common sense knowledge necessary for complex tasks. Consequently, performance on reasoning benchmarks tends to be brittle – meaning even slight variations in problem phrasing or the introduction of irrelevant information can lead to significant errors. This fragility stems from an inability to truly understand the problem, instead relying on pattern matching that breaks down when faced with novelty or ambiguity. The limitations highlight a critical need to move beyond superficial textual analysis towards systems capable of genuine inferential thought.

Current progress in Large Language Models (LLMs) increasingly demonstrates that simply increasing the number of parameters, a strategy known as ‘scaling’, yields diminishing returns when tackling complex reasoning challenges. While larger models can store and recall more information, they don’t necessarily understand it in a way that facilitates reliable multi-step inference. This suggests a fundamental shift is needed; future advancements must prioritize techniques that improve the quality of reasoning itself, rather than solely focusing on model size. Researchers are now exploring methods like incorporating symbolic reasoning, knowledge graphs, and more robust training paradigms designed to explicitly teach LLMs how to deduce, analyze, and solve problems – moving beyond pattern recognition to genuine cognitive ability. The pursuit of better reasoning isn’t about making models bigger; it’s about making them smarter.

Guiding Models Toward Logical Thought

Prompt engineering leverages the sensitivity of Large Language Models (LLMs) to input phrasing to elicit desired behaviors, specifically improving reasoning capabilities. LLMs, while possessing vast knowledge, do not inherently demonstrate robust reasoning; however, carefully constructed prompts can activate and guide the model’s existing parameters towards more logical and coherent outputs. This is achieved not through modifying the model’s weights, but through strategically formatting the input text to encourage the model to access and utilize its internal knowledge in a structured manner. Techniques include providing specific instructions, defining the desired output format, and incorporating examples to demonstrate the reasoning process, effectively shaping the model’s response without retraining.

Chain of Thought (CoT) prompting is a technique used to improve the reasoning capabilities of Large Language Models (LLMs) by explicitly requesting the model to demonstrate its reasoning process. Instead of directly asking for a final answer, CoT prompts instruct the LLM to first articulate the intermediate steps taken to arrive at a conclusion. This is achieved by including examples in the prompt that showcase a problem, followed by a detailed, step-by-step explanation of how to solve it, and finally the answer. By mimicking human cognitive processes, CoT prompting not only enhances the accuracy of LLM responses, particularly in complex reasoning tasks, but also increases the interpretability of the model’s decision-making process, allowing users to understand how the model arrived at a given conclusion.
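The few-shot format described above can be sketched as a small prompt builder. This is a minimal illustration in Python, not a prescribed template: the `Exemplar` class, the `build_cot_prompt` function, the `Q:`/`A:` layout, and the sample questions are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class Exemplar:
    question: str
    reasoning: str  # step-by-step explanation shown to the model
    answer: str


def build_cot_prompt(exemplars: list[Exemplar], question: str) -> str:
    """Assemble a few-shot Chain of Thought prompt as plain text."""
    blocks = []
    for ex in exemplars:
        # Each worked example shows the problem, the reasoning, then the answer.
        blocks.append(
            f"Q: {ex.question}\n"
            f"A: {ex.reasoning} The answer is {ex.answer}."
        )
    # The final block leaves the answer open so the model continues
    # with its own reasoning before stating a result.
    blocks.append(f"Q: {question}\nA:")
    return "\n\n".join(blocks)


demo = [
    Exemplar(
        question="A shelf holds 3 boxes with 4 books each. How many books are there?",
        reasoning="Each box has 4 books and there are 3 boxes, so 3 * 4 = 12.",
        answer="12",
    )
]
prompt = build_cot_prompt(
    demo, "A train has 5 cars with 20 seats each. How many seats are there?"
)
print(prompt)
```

The resulting string would be sent to whichever model is being used; the call itself is omitted because it depends on the provider.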

Self-Consistency improves the accuracy of Large Language Model outputs by leveraging the inherent stochasticity of the decoding process. Instead of relying on a single generated reasoning path, the model generates multiple independent reasoning chains from the same prompt. These chains are then evaluated to determine a final answer via aggregation, typically through majority voting. This process mitigates the impact of potentially flawed or misleading reasoning in any single chain, increasing the overall reliability and robustness of the result, particularly in complex reasoning tasks where a single, correct path may not be immediately obvious. The technique does not require retraining the model, but rather adjusts the decoding strategy at inference time.
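The aggregation step can be sketched as a majority vote over sampled final answers. This minimal Python sketch assumes the final answer has already been extracted from each reasoning chain as a string. `self_consistent_answer` and `replay` are hypothetical names, and `replay` stands in for repeated model calls with sampling enabled.

```python
from collections import Counter
from typing import Callable, Iterator


def self_consistent_answer(
    sample_answer: Callable[[], str], n_samples: int
) -> tuple[str, float]:
    """Draw n_samples answers and return the majority answer with its vote share."""
    if n_samples < 1:
        raise ValueError("n_samples must be at least 1")
    votes = Counter(sample_answer() for _ in range(n_samples))
    answer, count = votes.most_common(1)[0]
    return answer, count / n_samples


def replay(answers: list[str]) -> Callable[[], str]:
    """Hypothetical stand-in for a stochastic model call: replays canned answers."""
    it: Iterator[str] = iter(answers)
    return lambda: next(it)


# Final answers extracted from five independently sampled reasoning chains.
sampled = ["100", "90", "100", "120", "100"]
best, share = self_consistent_answer(replay(sampled), n_samples=len(sampled))
print(best, share)
```

Here three of the five chains agree on `100`, so that answer wins with a vote share of 0.6. The share can also serve as a rough confidence signal, though that use goes beyond what the text above describes.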

Demonstrating Gains Across Reasoning Tasks

Empirical evaluations across multiple complex reasoning benchmarks consistently indicate substantial performance improvements when employing Chain of Thought and Self-Consistency decoding methods. Gains have been documented on datasets designed to assess arithmetic, commonsense, and symbolic reasoning capabilities. Specifically, these methods move beyond traditional language model approaches by generating intermediate reasoning steps, allowing for more accurate solutions to complex problems. Performance is measured via metrics such as accuracy and, in some cases, exact match, demonstrating statistically significant improvements over baseline models that do not utilize these decoding strategies.
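As a rough illustration of how an exact-match accuracy score might be computed, the Python sketch below compares predicted and reference answers after light normalization. The normalization rules (trimming, lowercasing, collapsing whitespace) are an assumption. Benchmarks define their own, and the function names are hypothetical.

```python
def normalize(text: str) -> str:
    """Lowercase, trim, and collapse internal whitespace (assumed rules)."""
    return " ".join(text.strip().lower().split())


def exact_match_accuracy(predictions: list[str], references: list[str]) -> float:
    """Fraction of predictions that equal their reference after normalization."""
    if len(predictions) != len(references):
        raise ValueError("predictions and references must have the same length")
    if not references:
        raise ValueError("at least one example is required")
    hits = sum(normalize(p) == normalize(r) for p, r in zip(predictions, references))
    return hits / len(references)


preds = ["12", " Paris ", "blue"]
refs = ["12", "paris", "red"]
print(exact_match_accuracy(preds, refs))
```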

Chain of Thought and Self-Consistency decoding methods exhibit pronounced efficacy in reasoning tasks categorized as Arithmetic, Commonsense, and Symbolic. Arithmetic Reasoning involves solving mathematical problems requiring multi-step calculations; these methods improve accuracy beyond direct solution attempts. Commonsense Reasoning, which necessitates understanding implicit knowledge about the world, benefits from the structured inference facilitated by these techniques. Similarly, Symbolic Reasoning – manipulating abstract symbols and applying logical rules – sees performance gains through the methods’ ability to trace reasoning steps. This contrasts with models relying on pattern matching, which struggle with problems demanding compositional generalization and systematic reasoning; these methods enable the model to move beyond identifying surface-level correlations to applying underlying principles.

Performance enhancements achieved through Chain of Thought and Self-Consistency prompting are not limited to scenarios with extensive training data or fine-tuning. Empirical evidence indicates significant gains are also realized in zero-shot settings, where the model receives no prior examples for the specific task, and in few-shot settings, where only a limited number of examples are provided. This demonstrates a capacity for generalization beyond memorization of training data, suggesting these techniques enable the model to apply reasoning skills to novel problem instances with minimal adaptation. The observed improvements in both zero-shot and few-shot contexts highlight the adaptability of these prompting methods and their potential for broader application across diverse reasoning tasks.

Scale and the Path to True Intelligence

Investigations into advanced prompting techniques, such as Chain of Thought and Self-Consistency, reveal a strong correlation between model scale and performance gains. These methods, which encourage models to articulate their reasoning steps, appear significantly more effective when implemented with larger language models. The increased capacity of these models allows them to not only generate more extensive and coherent reasoning chains but also to benefit from the iterative refinement inherent in Self-Consistency, where multiple reasoning paths are explored and a consensus is reached. This suggests that simply increasing model size isn’t a complete solution for enhancing reasoning abilities; rather, larger models provide a fertile ground for these prompting techniques to flourish, unlocking a greater capacity for complex problem-solving and nuanced understanding.

Recent advancements in large language models demonstrate that simply increasing model scale isn’t the sole pathway to improved reasoning capabilities. While larger models often exhibit a greater capacity for complex thought, the methods used to elicit that reasoning are equally crucial. Studies reveal that intelligently designed prompting techniques, which guide the model through a deliberate reasoning process, can significantly enhance performance, sometimes even surpassing the gains achieved by increasing model size alone. This suggests that focusing on how a model reasons – refining its internal thought processes through carefully crafted prompts – is paramount. Consequently, future progress hinges not only on building larger models, but on developing innovative methods to unlock and optimize their inherent reasoning potential, paving the way for truly intelligent systems.

Continued advancement in large language model reasoning capabilities necessitates a multi-pronged research approach extending beyond simply increasing parameter counts. Future studies should prioritize the development of innovative prompt engineering techniques, moving past current methods to unlock more sophisticated reasoning pathways within existing models. Crucially, these prompting strategies must be integrated with architectural improvements – exploring how model design can synergistically enhance the effects of carefully crafted prompts. Ultimately, the true test lies in applying these combined advancements to increasingly complex reasoning tasks – those demanding nuanced understanding, common sense, and the ability to navigate ambiguity – pushing the boundaries of what these systems can achieve and demonstrating genuine progress toward artificial general intelligence.

The pursuit of precise control over typesetting elements, as demonstrated by this framework for customizing float behavior, echoes a fundamental human need for order. Hannah Arendt associated the banality of evil with an inability to think. This research, while focused on technical specifications (naming conventions, placement rules, file extensions), represents a rejection of that banality through deliberate, thoughtful construction. By meticulously defining these seemingly minor details, the framework avoids the ‘evil’ of ambiguity and ensures clarity in the final presentation of data, establishing a predictable and logical structure from the raw input.

Beyond Arrangement

The preoccupation with precise placement – the meticulous dictation of where a thing should be – reveals a fundamental anxiety. This work, while elegantly addressing the mechanics of float control, implicitly acknowledges the ongoing struggle to impose order on inherently chaotic data. The question is not whether these elements can be positioned, but whether that positioning truly elucidates meaning, or merely creates the illusion of it. Subsequent effort should not dwell on further refinement of placement algorithms, but on methods to assess the informational value of alternative arrangements.

The current focus on naming conventions and file extensions feels, inevitably, like a preoccupation with taxonomy. The impulse to categorize, to label, is strong, yet often obscures the underlying relationships. Future research would benefit from moving beyond mere organization and exploring dynamic systems – where the placement of these elements is not predetermined, but emerges from the data itself. Perhaps the true advancement lies not in controlling the float, but in allowing it to find its own level.

Ultimately, this work represents a step towards a more deliberate aesthetic in data presentation. However, the field must resist the temptation to equate precision with understanding. The goal is not to create a perfectly ordered document, but one that, in its arrangement, reveals a glimpse of the underlying truth – however messy or unpredictable that truth may be.

Original article: https://arxiv.org/pdf/2601.10494.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-01-18 19:45