Author: Denis Avetisyan

A new pipeline leverages the power of artificial intelligence to automate and accelerate the analysis of animal behavior, offering a deeper understanding of complex neural processes.

This review details an AI-enhanced approach combining in-context learning and tensor decomposition for improved analysis of fear generalization in mice.

Traditional pipelines in behavioral neuroscience are often hampered by time-consuming data preparation and rigid analytical workflows. This limitation motivates the work presented in ‘Transforming Behavioral Neuroscience Discovery with In-Context Learning and AI-Enhanced Tensor Methods’, which introduces an AI-enhanced pipeline that uses In-Context Learning and novel tensor decomposition methods to accelerate insights from complex experimental data, specifically the study of fear generalization in mice. The authors report that the approach outperforms standard practices and machine learning baselines, letting domain experts focus on interpretation rather than pipeline maintenance. Could this shift open a more efficient era of discovery in behavioral neuroscience and beyond?

The Elusive Signature of Fear: Decoding Behavioral Nuance

The neural underpinnings of fear are central to understanding how animals and humans respond to threats, yet dissecting these circuits presents a significant challenge. Traditional behavioral analyses often fall short in capturing the nuanced ways in which organisms discriminate between varying degrees of threat, or generalize fear responses to similar stimuli. This limitation stems from the complexity of fear – it isn’t simply a binary ‘threat/no threat’ response, but rather a spectrum influenced by contextual cues, prior experience, and individual variability. Consequently, researchers struggle to bridge the gap between observable behavior and the intricate neural activity driving it, hindering progress in fully decoding how the brain processes and responds to danger. A more detailed approach is needed to map the neural pathways involved in fear discrimination and to understand the precise computations the brain performs when assessing risk.

The detailed study of animal – and ultimately human – fear relies heavily on observing and categorizing behaviors, but current methods present significant bottlenecks. Traditionally, researchers meticulously watch hours of video footage, manually annotating each instance of a specific behavior like freezing, vocalization, or approach/avoidance. This process is not only incredibly time-consuming, limiting the scale of investigations, but also introduces a considerable degree of subjectivity – different observers may interpret the same behavior differently, impacting the reliability of the data. Consequently, obtaining statistically robust results from large datasets becomes challenging, and the potential for bias in interpreting nuanced fear responses is increased, hindering progress in understanding how animals discriminate between threats and generalize fear memories.

The nuanced process of threat processing forms the cornerstone of understanding how fear responses develop and spread beyond initial stimuli. It isn’t simply a matter of identifying danger, but rather a complex evaluation involving sensory input, contextual analysis, and internal states – all contributing to whether a stimulus is perceived as threatening and how strongly the organism reacts. This evaluation dictates not only the immediate fear response, but also the generalization of fear to similar, yet potentially harmless, situations. A complete picture of threat processing requires disentangling the neural circuits responsible for detecting, assessing, and reacting to danger, as well as understanding how these circuits interact with learning and memory systems to create lasting fear associations. Ultimately, unraveling these intricacies is crucial for developing targeted interventions for anxiety disorders and other fear-related conditions, as it allows for a precise understanding of how fear takes hold and propagates.

![The fear conditioning and discrimination task utilizes three environments: a safe environment ([latex]CS^{-}[/latex]) indicating safety, a threatening environment ([latex]CS^{+}[/latex]) paired with mild foot shocks, and a neutral home-cage analog ([latex]NS[/latex]).](https://arxiv.org/html/2602.17027v1/FIG/Environments.jpg)

Automated Insight: A Data-Driven Pipeline for Behavioral Analysis

Data preparation is the initial and critical phase of our analytical pipeline, encompassing data cleaning, transformation, and validation to guarantee high data quality for subsequent machine learning applications. This process includes handling missing values through imputation or removal, correcting inconsistencies, and normalizing data formats. Feature engineering is also performed to create relevant variables from raw data, improving model performance and interpretability. Rigorous validation checks are implemented to identify and address outliers and errors, ensuring the reliability and accuracy of the data used for training and evaluation. The emphasis on meticulous preparation directly correlates with the robustness and generalizability of the resulting machine learning models.
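
To make the cleaning and normalization steps concrete, here is a minimal sketch of mean imputation followed by z-score normalization. The `speed` trace, the function names, and the choice of mean imputation are illustrative assumptions, not details taken from the paper.

```python
from statistics import mean, pstdev


def impute_missing(values):
    """Replace None entries with the mean of the observed values."""
    observed = [v for v in values if v is not None]
    if not observed:
        raise ValueError("cannot impute a column with no observed values")
    fill = mean(observed)
    return [fill if v is None else v for v in values]


def zscore(values):
    """Rescale to zero mean and unit (population) standard deviation."""
    mu = mean(values)
    sigma = pstdev(values)
    if sigma == 0:
        # A constant column carries no information; map it to zeros.
        return [0.0 for _ in values]
    return [(v - mu) / sigma for v in values]


# Hypothetical per-frame speed trace (arbitrary units) with two dropped frames.
speed = [1.0, None, 3.0, 5.0, None]
clean = impute_missing(speed)
normalized = zscore(clean)
```

Mean imputation is only one option; dropping incomplete frames or interpolating between neighbors may suit time series better.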

In-Context Learning (ICL) facilitates model adaptation to novel datasets by leveraging a few demonstrations directly within the input prompt, eliminating the need for parameter updates or full retraining cycles. This approach relies on the model’s pre-existing knowledge and its ability to generalize from a limited number of examples provided in the prompt itself. By framing the task with illustrative instances, ICL allows the model to infer the desired behavior and apply it to unseen data, significantly reducing the computational cost and time associated with traditional fine-tuning methods. The process is particularly beneficial when dealing with frequently changing datasets or when rapid prototyping and iteration are required, as it enables quick deployment of analytical pipelines without extensive model redevelopment.
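
The idea of placing demonstrations directly in the prompt can be sketched as follows. The feature strings, the label names, and the `build_icl_prompt` helper are hypothetical; the paper's actual prompt format is not given in this article.

```python
def build_icl_prompt(task, demonstrations, query):
    """Assemble a few-shot prompt: task text, labeled examples, then the query."""
    lines = [task, ""]
    for features, label in demonstrations:
        lines.append(f"Input: {features}")
        lines.append(f"Label: {label}")
        lines.append("")
    # The query is left unlabeled so the model completes the final "Label:".
    lines.append(f"Input: {query}")
    lines.append("Label:")
    return "\n".join(lines)


# Hypothetical demonstrations; no model weights are updated at any point.
demos = [
    ("speed=0.0 cm/s, posture=crouched", "freezing"),
    ("speed=12.4 cm/s, posture=extended", "locomotion"),
]
prompt = build_icl_prompt(
    "Classify the mouse behavior in each input.",
    demos,
    "speed=0.3 cm/s, posture=crouched",
)
```

Adapting to a new dataset then means swapping the demonstrations, not retraining.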

The automated behavioral analysis pipeline uses Vision Language Models (VLMs) to label video data, increasing processing speed and reducing the subjective bias inherent in manual annotation. Behaviors are identified and categorized directly from video footage. Performance was evaluated on imbalanced behavioral datasets, yielding a Macro F1 score of 0.545. Because Macro F1 averages per-class F1 scores with equal weight, rare behaviors count as much as common ones, so the figure reflects performance across all classes and not only the frequent ones; it also leaves clear room for improvement.
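
For readers unfamiliar with the metric, the sketch below computes Macro F1 from scratch on made-up labels. The label names and counts are illustrative assumptions and are unrelated to the paper's data.

```python
def macro_f1(y_true, y_pred):
    """Unweighted mean of per-class F1 scores."""
    labels = sorted(set(y_true) | set(y_pred))
    scores = []
    for label in labels:
        pairs = list(zip(y_true, y_pred))
        tp = sum(1 for t, p in pairs if t == label and p == label)
        fp = sum(1 for t, p in pairs if t != label and p == label)
        fn = sum(1 for t, p in pairs if t == label and p != label)
        denom = 2 * tp + fp + fn
        # F1 = 2TP / (2TP + FP + FN); defined as 0 when the class never appears.
        scores.append(2 * tp / denom if denom else 0.0)
    return sum(scores) / len(scores)


# Hypothetical imbalanced labels: 8 "rest" frames, 2 "freeze" frames.
y_true = ["rest"] * 8 + ["freeze"] * 2
y_pred = ["rest"] * 8 + ["rest", "freeze"]

accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
score = macro_f1(y_true, y_pred)
```

In this toy case a single missed "freeze" frame barely moves plain accuracy but pulls Macro F1 down noticeably, which is why the metric suits imbalanced behavior data.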

Unveiling Neural Dynamics: A Window into the Language of Fear

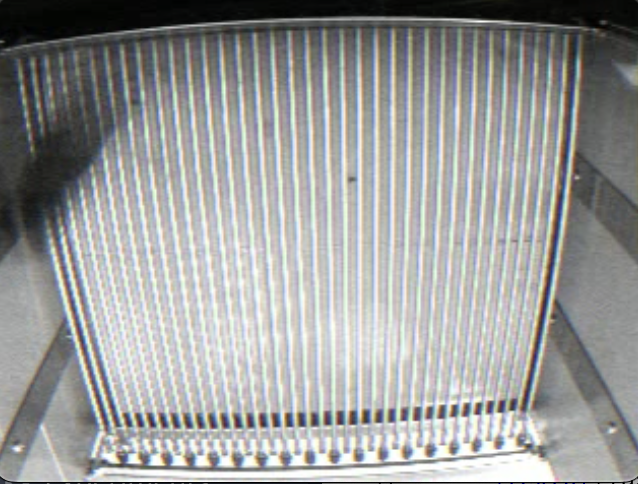

Calcium imaging is a neuroscientific technique used to monitor neuronal activity by measuring changes in intracellular calcium concentration. This method leverages the principle that neuronal firing leads to an influx of calcium ions, which can be detected using fluorescent indicators. These indicators emit light when bound to calcium, allowing researchers to visualize and quantify neuronal activity in real-time with single-cell resolution. The technique is typically implemented using microscopy to observe neuronal populations in vivo or in vitro, providing a direct measure of neuronal responses to various stimuli and enabling the study of neural circuits underlying behavior.
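
Fluorescence traces from calcium imaging are commonly normalized as ΔF/F, the relative change from a baseline fluorescence F0. The sketch below assumes a simple baseline (the mean of the lowest 20% of samples); the article does not say which baseline or preprocessing the authors used, and the trace values are invented.

```python
def delta_f_over_f(trace, baseline_fraction=0.2):
    """Return (F - F0) / F0, with F0 the mean of the lowest samples."""
    if not trace:
        raise ValueError("trace must not be empty")
    n_base = max(1, int(len(trace) * baseline_fraction))
    f0 = sum(sorted(trace)[:n_base]) / n_base
    if f0 <= 0:
        raise ValueError("baseline fluorescence must be positive")
    return [(f - f0) / f0 for f in trace]


# Hypothetical raw fluorescence for one neuron (arbitrary units).
raw = [100.0, 100.0, 150.0, 200.0, 120.0, 100.0, 100.0, 100.0, 100.0, 100.0]
dff = delta_f_over_f(raw)
peak_index = max(range(len(dff)), key=dff.__getitem__)
```

Here the transient peaks at the fourth sample, where fluorescence doubles relative to baseline.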

Neural Additive Tensor Decomposition (NATD) was implemented to model the non-linear relationships present in neuronal activity data and its correlation to observed behaviors. This approach extends traditional methods, such as Canonical Polyadic Decomposition (CPD), by allowing for additive combinations of tensor components, thereby capturing more intricate data structures. Quantitative evaluation demonstrated that NATD achieves a 46% reduction in Root Mean Squared Error (RMSE) when compared to CPD. This improvement in model accuracy indicates NATD’s superior ability to represent the underlying data and predict behavioral outcomes based on neuronal activity patterns.
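
As a point of reference, the CPD baseline approximates a three-way tensor as a sum of rank-one terms, X[i][j][k] ≈ Σ_r A[i][r]·B[j][r]·C[k][r]. The sketch below shows only that baseline reconstruction and how RMSE and a relative reduction are computed; it does not implement NATD, whose architecture is not described here. The factor matrices, the neurons/time/trials interpretation of the modes, and the RMSE values passed to `relative_reduction` are made up for illustration.

```python
from math import sqrt


def cp_reconstruct(A, B, C):
    """Rebuild a 3-way tensor from CP factor matrices (one column per component)."""
    rank = len(A[0])
    return [[[sum(A[i][r] * B[j][r] * C[k][r] for r in range(rank))
              for k in range(len(C))]
             for j in range(len(B))]
            for i in range(len(A))]


def rmse(X, Y):
    """Root mean squared error over all entries of two equally shaped tensors."""
    sq = [(x - y) ** 2
          for Xi, Yi in zip(X, Y)
          for Xj, Yj in zip(Xi, Yi)
          for x, y in zip(Xj, Yj)]
    return sqrt(sum(sq) / len(sq))


def relative_reduction(baseline, improved):
    """Fractional error reduction of `improved` relative to `baseline`."""
    return (baseline - improved) / baseline


# Hypothetical rank-1 factors for a 2 x 2 x 2 tensor (e.g. neurons x time x trials).
A = [[1.0], [2.0]]
B = [[1.0], [0.5]]
C = [[1.0], [3.0]]
X_hat = cp_reconstruct(A, B, C)

# Hypothetical observed tensor: the reconstruction shifted by a constant offset of 1.
X_obs = [[[v + 1.0 for v in row] for row in mat] for mat in X_hat]
error = rmse(X_obs, X_hat)

# Illustrative numbers only: an RMSE falling from 0.50 to 0.27 is a 46% reduction.
example_reduction = relative_reduction(0.50, 0.27)
```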

Analysis of neuronal circuitry, utilizing calcium imaging data, has identified specific activity patterns correlated with fear discrimination behaviors. These patterns were observed during behavioral tasks designed to elicit fear responses, and subsequent analysis revealed a statistically significant relationship between neuronal activation and the ability to discriminate between threatening and non-threatening stimuli. Performance evaluation of this analysis on imbalanced behavioral datasets – where the representation of fear responses differs significantly from neutral states – yielded a Balanced Accuracy of 0.801, indicating robust performance in classifying fear-related behaviors despite data asymmetry. This metric accounts for unequal class distribution, providing a more reliable assessment of the model’s ability to accurately detect fear responses compared to standard accuracy measures.
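
Balanced accuracy is the mean of per-class recall. The toy example below, with invented labels, shows why it is more informative than plain accuracy when one class dominates.

```python
def balanced_accuracy(y_true, y_pred):
    """Mean recall over the classes present in y_true."""
    recalls = []
    for label in sorted(set(y_true)):
        idx = [i for i, t in enumerate(y_true) if t == label]
        hits = sum(1 for i in idx if y_pred[i] == label)
        recalls.append(hits / len(idx))
    return sum(recalls) / len(recalls)


# Hypothetical labels: 9 neutral frames and 1 fear frame.
y_true = ["neutral"] * 9 + ["fear"]
# A degenerate classifier that always predicts the majority class.
y_pred = ["neutral"] * 10

plain = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
balanced = balanced_accuracy(y_true, y_pred)
```

The always-neutral classifier reaches 90% plain accuracy but only 50% balanced accuracy, the chance level for two classes.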

Predictive Power and Future Trajectories: Towards a Deeper Understanding

The accuracy of behavioral prediction models benefits significantly from autoregressive in-context learning. This technique leverages the sequential nature of behavior, allowing models to consider past actions when forecasting future ones – essentially, learning to predict what happens next based on a history of events. By incorporating this temporal dimension, predictions become more consistent and reliable, as the model doesn’t treat each moment in isolation. This approach moves beyond simply identifying what might happen to understanding how a sequence of behaviors unfolds, resulting in a more nuanced and ultimately more accurate representation of complex actions and reactions. The enhancement in temporal consistency proves particularly valuable in fields like neuroscience, where understanding the progression of states is crucial for interpreting underlying biological processes.
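
The autoregressive idea, feeding earlier predictions back in as context for the next frame, can be sketched with a stand-in predictor. `toy_predict`, its speed thresholds, and the label names are hypothetical placeholders for a real model call; only the feedback loop is the point.

```python
def autoregressive_label(frames, predict, history_size=3):
    """Label frames in order, passing the most recent predictions as context."""
    labels = []
    for frame in frames:
        context = labels[-history_size:]
        labels.append(predict(frame, context))
    return labels


def toy_predict(frame, previous_labels):
    """Hypothetical stand-in for a model call that sees past labels."""
    speed = frame["speed"]
    if speed < 0.5:
        return "freezing"
    if speed > 2.0:
        return "moving"
    # Ambiguous frame: fall back on the most recent label for consistency.
    return previous_labels[-1] if previous_labels else "moving"


# Hypothetical per-frame speeds; frames 3 and 5 are ambiguous on their own.
frames = [{"speed": s} for s in [5.0, 0.2, 1.0, 0.1, 1.5, 4.0]]
labels = autoregressive_label(frames, toy_predict)
```

Without the history, the two ambiguous frames would have no principled label; with it, the freezing bout stays contiguous.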

Retrieval-Augmented Generation (RAG) represents a significant advancement in predictive modeling by moving beyond inherent model knowledge and actively incorporating relevant information from external sources during the prediction process. This technique doesn’t simply rely on patterns learned during training; instead, it dynamically searches for and integrates contextual data – such as prior experiences, environmental cues, or specific situational details – that directly inform the prediction. By grounding its assessments in this retrieved information, the model achieves a more nuanced and accurate understanding of complex behaviors. The benefit of this approach lies in its ability to address ambiguity and account for factors not explicitly present in the initial training data, leading to predictions that are not only more reliable but also more readily interpretable and aligned with real-world observations.
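
A minimal retrieval step might look like the sketch below: rank stored examples by cosine similarity to a query embedding and place the top matches in the prompt. The two-dimensional vectors, clip descriptions, and helper names are invented for illustration; a real system would use learned embeddings and an actual model call.

```python
from math import sqrt


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = sqrt(sum(a * a for a in u)) * sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def retrieve(query_vec, store, k=2):
    """Return the k stored items most similar to the query vector."""
    ranked = sorted(store, key=lambda item: cosine(query_vec, item["vec"]),
                    reverse=True)
    return ranked[:k]


def augment_prompt(question, retrieved):
    """Prepend retrieved snippets as context for the downstream model."""
    context = "\n".join(f"- {item['text']}" for item in retrieved)
    return f"Context:\n{context}\n\nQuestion: {question}\nAnswer:"


# Hypothetical store of previously annotated clips with toy embeddings.
store = [
    {"text": "Clip 12: mouse immobile in CS+ context, labeled freezing.", "vec": [0.9, 0.1]},
    {"text": "Clip 07: mouse exploring CS- context, labeled locomotion.", "vec": [0.1, 0.9]},
    {"text": "Clip 31: mouse grooming in NS context, labeled grooming.", "vec": [0.5, 0.5]},
]
hits = retrieve([1.0, 0.0], store, k=2)
prompt = augment_prompt("What is the behavior in the new clip?", hits)
```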

The developed framework shows promise for deciphering the neural underpinnings of fear, offering a possible pathway toward more effective treatments for anxiety disorders and personalized interventions. Validation of the model’s interpretations yields a Matthews Correlation Coefficient (MCC) of 0.517, a moderate positive correlation between predictions and reference labels when distinguishing fear-related from neutral stimuli (MCC ranges from -1 to 1, with 0 corresponding to chance-level prediction). In addition, Cohen’s Kappa between the output of the Vision Language Model (VLM) and assessments provided by domain experts in fear and anxiety research was 0.59, which is conventionally read as moderate agreement. This concordance suggests the model is capturing patterns that experts also consider relevant, a step toward objective biomarkers and, potentially, predictive tools to guide therapeutic strategies.
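
Both agreement statistics can be computed from paired labels. The sketch below uses the binary form of MCC and the standard two-rater Cohen's Kappa on invented labels; whether the paper used a binary or multiclass MCC is not stated in this article.

```python
from collections import Counter
from math import sqrt


def mcc_binary(y_true, y_pred, positive):
    """Matthews Correlation Coefficient for a two-class problem."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    tn = sum(1 for t, p in pairs if t != positive and p != positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    denom = sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    return (tp * tn - fp * fn) / denom if denom else 0.0


def cohens_kappa(rater_a, rater_b):
    """Agreement between two raters, corrected for chance agreement."""
    n = len(rater_a)
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    count_a, count_b = Counter(rater_a), Counter(rater_b)
    expected = sum(count_a[label] * count_b[label]
                   for label in set(count_a) | set(count_b)) / (n * n)
    if expected == 1:
        return 1.0  # Both raters used a single identical label throughout.
    return (observed - expected) / (1 - expected)


# Hypothetical expert and model labels for ten clips.
expert = ["fear"] * 5 + ["neutral"] * 5
model = ["fear"] * 4 + ["neutral"] + ["neutral"] * 4 + ["fear"]

mcc = mcc_binary(expert, model, positive="fear")
kappa = cohens_kappa(expert, model)
```

With perfectly balanced classes and symmetric errors, as in this toy case, the two statistics coincide; on imbalanced data they generally differ.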

![The proposed AR-ICL method labels behavior by analyzing data at the [latex]t[/latex]-th frame.](https://arxiv.org/html/2602.17027v1/x2.png)

The pursuit of understanding fear generalization, as detailed within the study, reveals a predictable arc. Systems, even those as complex as neural networks within a mammalian brain, exhibit decay and eventual transformation. This aligns with the observation that ‘the only thing that is constant is change.’ The pipeline presented isn’t about achieving a perfect, static understanding of fear; instead, it’s a method for gracefully observing the evolution of neural patterns. Tensor decomposition, combined with in-context learning, doesn’t halt the system’s trajectory but offers a more refined lens through which to witness its unfolding, recognizing that improvements in analytical methods themselves are subject to the same temporal constraints. The study acknowledges that architectures, be they biological or computational, live a life, and researchers are merely charting its course.

What Remains to Be Seen?

The presented pipeline, while demonstrating a capacity to accelerate analysis of behavioral data, does not erase the fundamental challenge: every failure is a signal from time. The refinement of algorithms for tensor decomposition, coupled with In-Context Learning, offers a more efficient mapping of neural states, but does not resolve the inherent ambiguity within those states themselves. The architecture, like all architectures, will accrue entropy. The crucial question isn’t simply what patterns are revealed, but how those patterns degrade, and how gracefully.

Future iterations will inevitably confront the limits of current Vision Language Models. The transfer of knowledge from large datasets, while expedient, may introduce biases: ghosts in the machine that reflect the assumptions embedded within the training data. Refactoring is a dialogue with the past; each attempt to improve the model necessitates a careful reckoning with its origins. A focus on explainability, on understanding why a model arrives at a particular conclusion, will prove essential, even if complete transparency remains an asymptotic goal.

Ultimately, the value of this approach lies not in its predictive power, but in its capacity to frame new questions. The true measure of success won’t be the number of insights generated, but the quality of the questions that remain unanswered-those that resist easy resolution, and compel a deeper engagement with the complexities of neural systems.

Original article: https://arxiv.org/pdf/2602.17027.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-20 11:01