Author: Denis Avetisyan

A new framework enables teams of aerial and ground robots to navigate challenging environments more safely and efficiently by understanding the world around them.

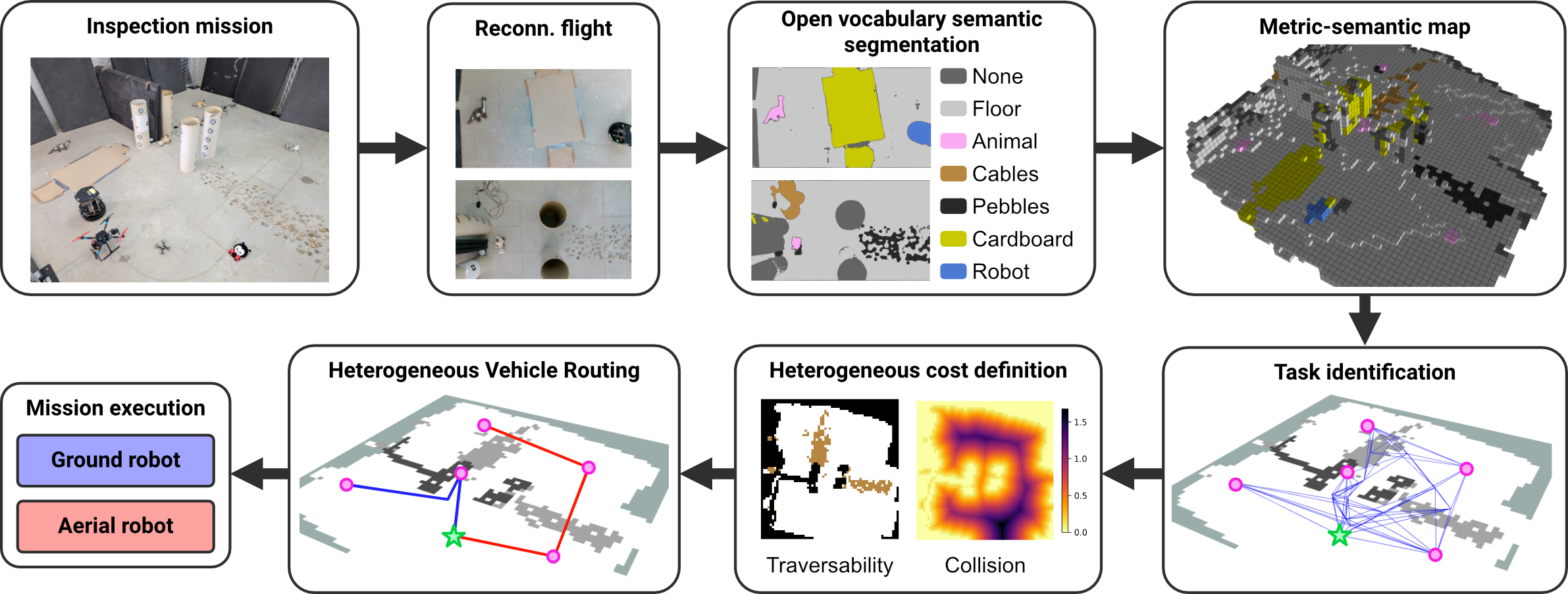

This work presents CHORAL, a traversal-aware planning system for heterogeneous multi-robot routing leveraging semantic mapping and platform-specific cost functions within a ROS 2 environment.

Effective autonomous monitoring of complex environments demands robotic teams capable of adapting to unknown spaces, yet current approaches often treat robots as homogeneous entities or neglect continuous routing based on detailed environmental understanding. This work introduces CHORAL: Traversal-Aware Planning for Safe and Efficient Heterogeneous Multi-Robot Routing, a framework that integrates semantic mapping, built from open-vocabulary vision, with a capability-aware vehicle routing formulation. By explicitly modeling each robot’s traversal costs, we demonstrate significant improvements in both safety and efficiency for heterogeneous teams performing inspection tasks. Could this integrated approach unlock more robust and adaptable robotic solutions for real-world monitoring and exploration?

Decoding the Static: The Challenge of Dynamic Environments

Conventional robotic inspection systems frequently encounter difficulties when deployed in real-world settings characterized by unpredictable layouts and constant change. Unlike the controlled conditions of factory floors, environments such as construction sites, disaster zones, or even aging infrastructure present a significant challenge due to the presence of obstacles, varying lighting conditions, and moving elements. This often results in decreased operational efficiency, as robots require frequent human intervention to navigate unforeseen circumstances or correct errors. Furthermore, the inability to reliably adapt to dynamic changes introduces substantial safety risks, potentially leading to collisions, damage to equipment, or harm to personnel, thereby limiting the scope of autonomous inspection and necessitating cautious, often slow, operational protocols.

Reliable robotic operation hinges on a system’s capacity not only to traverse varied landscapes but also to build an accurate understanding of its surroundings. Uneven ground, obstacles, and changing conditions all demand sophisticated navigation and perception, and a robot that cannot adapt to them will struggle to complete tasks efficiently, or even safely. Consequently, significant research focuses on equipping robots with sensors and algorithms that let them map, analyze, and react to environmental changes in real time, effectively maintaining a dynamic internal representation of the world around them. This ability to perceive and understand, rather than simply react, is the foundation for truly autonomous and dependable performance.

Existing autonomous inspection systems frequently encounter limitations when confronted with real-world complexities. Traditional approaches, reliant on pre-programmed routes or meticulously mapped environments, struggle to adjust to unexpected obstacles, shifting conditions, or novel situations. This inflexibility stems from a dependence on rigid algorithms and a lack of robust perception capabilities, hindering their ability to generalize beyond controlled settings. Consequently, these systems often require frequent human intervention, negating the benefits of full autonomy and increasing operational costs. The pursuit of true autonomous inspection therefore calls for systems that combine dynamic replanning, sensor fusion, and machine learning to navigate and interpret unpredictable environments with resilience and efficiency.

Ensuring operational safety in complex environments demands precise hazard assessment and robust collision avoidance. Recent advancements have focused on minimizing the probability of accidents during autonomous operation, and a novel system has demonstrated significant progress in this area. Through a combination of advanced sensor fusion and predictive algorithms, the system accurately identifies potential hazards – ranging from unexpected obstacles to unstable terrain – and dynamically adjusts its trajectory to mitigate risk. Rigorous testing, encompassing both detailed simulations and real-world experiments across diverse landscapes, reveals a substantial reduction in accident probability compared to conventional methods. This heightened level of safety not only protects valuable assets but also paves the way for wider adoption of autonomous inspection in challenging and unpredictable settings.

Orchestrated Perception: Heterogeneous Teams and Semantic Mapping

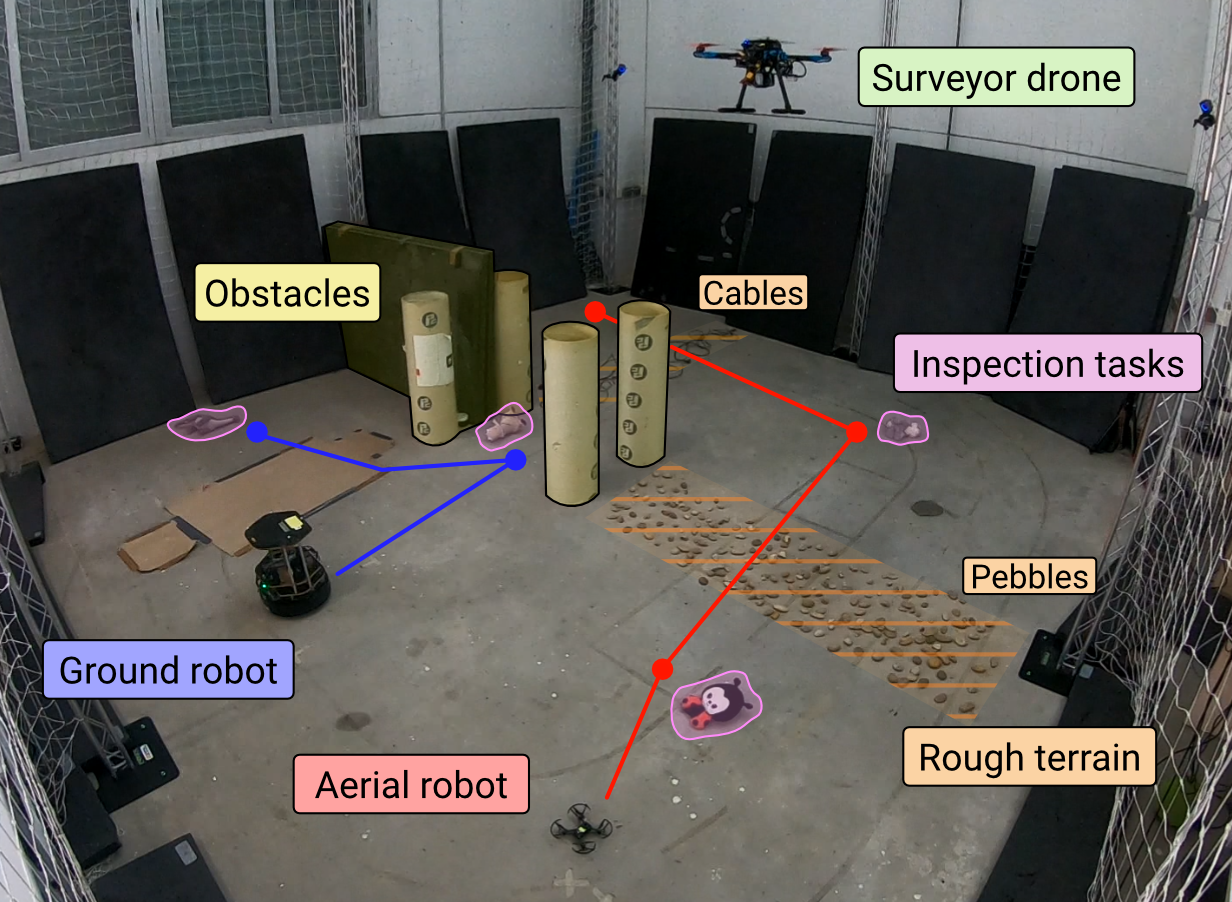

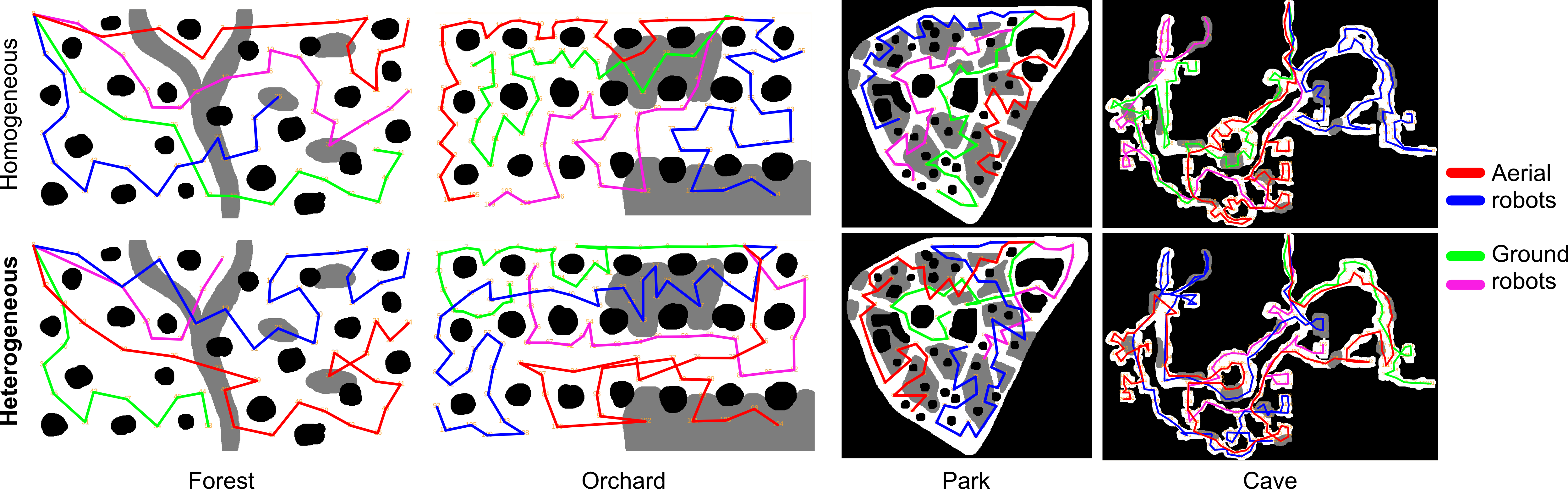

The proposed Heterogeneous Multi-Robot Inspection system utilizes a collaborative approach, deploying robots with complementary capabilities to achieve more thorough environmental assessments than could be accomplished by a single robot. This involves coordinating robots possessing differing strengths – for example, aerial drones for broad overviews and rapid navigation, coupled with ground robots for detailed close-up inspection and manipulation. By leveraging these varied capabilities, the system aims to improve inspection speed, accuracy, and the ability to access difficult-to-reach areas, ultimately providing a more comprehensive understanding of the inspected environment. The architecture is designed to dynamically allocate tasks based on each robot’s specific strengths and the requirements of the inspection process.

The Metric-Semantic Map integrates precise geometric data, typically obtained from sensors like LiDAR and cameras, with high-level semantic understanding of the environment. This is achieved by associating measured geometric primitives – points, lines, planes – with identified objects and their attributes. The map doesn’t simply represent where things are, but what those locations contain and their functional relevance; for example, distinguishing between a ‘wall’, a ‘pipe’, and a ‘valve’. This combined representation enables advanced robotic reasoning, allowing the system to not only navigate effectively but also to interpret the inspected environment in a manner analogous to human inspectors, ultimately improving inspection efficiency and accuracy.
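To make the pairing of geometry and semantics concrete, here is a minimal sketch of a 2D grid map. The class names (`MapCell`, `MetricSemanticMap`), the fixed grid resolution, and the keep-the-most-confident-label fusion rule are hypothetical choices for illustration, not the data structure used in CHORAL.

```python
from dataclasses import dataclass, field


@dataclass
class MapCell:
    """One cell of a hypothetical 2D metric-semantic grid."""
    x: float            # metric layer: observed position in metres
    y: float
    height: float       # e.g. obstacle height from depth data, metres
    label: str          # semantic layer: e.g. "wall", "pipe", "valve"
    confidence: float   # detector confidence in [0, 1]


@dataclass
class MetricSemanticMap:
    resolution: float                            # cell size in metres
    cells: dict = field(default_factory=dict)    # (i, j) -> MapCell

    def key(self, x: float, y: float) -> tuple:
        # Quantise a metric position to an integer grid index.
        return (int(x // self.resolution), int(y // self.resolution))

    def insert(self, x, y, height, label, confidence):
        # Fuse observations: keep the most confident label per cell.
        k = self.key(x, y)
        current = self.cells.get(k)
        if current is None or confidence > current.confidence:
            self.cells[k] = MapCell(x, y, height, label, confidence)

    def cells_with_label(self, label):
        # Semantic query: which grid cells contain a given class?
        return [k for k, c in self.cells.items() if c.label == label]
```

For example, detections at (1.2, 0.3) and (1.4, 0.1) fall into the same 0.5 m cell, so a later 0.95-confidence "pipe" observation replaces an earlier 0.9-confidence "floor" one.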

The Metric-Semantic Map is initially constructed via a Reconnaissance Flight phase, utilizing aerial data acquisition to establish a preliminary environmental representation. This flight prioritizes broad area coverage and the capture of foundational geometric data, including spatial coordinates and scale. Simultaneously, the flight identifies key features and semantic elements within the environment, creating a base layer for subsequent detailed inspection by other robotic platforms. The data gathered during Reconnaissance Flight serves as a crucial prerequisite, enabling accurate localization, path planning, and targeted data collection during the detailed inspection phases, and minimizes the need for extensive re-mapping by slower ground-based robots.
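The article does not describe the reconnaissance flight pattern. One common way to get broad coverage is a boustrophedon (lawnmower) sweep; the sketch below assumes a rectangular area, a fixed altitude, and a known camera footprint width (`swath`), all of which are hypothetical parameters rather than details from the paper.

```python
import math


def lawnmower_waypoints(width, depth, altitude, swath):
    """Boustrophedon sweep over a width x depth rectangle (metres).

    Adjacent passes are one `swath` apart, so camera footprints of
    width `swath` just touch; a pass that would fall beyond the far
    edge is clamped to it.
    """
    if swath <= 0:
        raise ValueError("swath must be positive")
    passes = max(1, math.ceil(depth / swath))
    waypoints = []
    for i in range(passes):
        y = min((i + 0.5) * swath, depth)  # centre line of the i-th footprint
        # Alternate direction each pass to avoid dead transits.
        xs = (0.0, width) if i % 2 == 0 else (width, 0.0)
        waypoints.extend((x, y, altitude) for x in xs)
    return waypoints
```

The returned list is a flat sequence of (x, y, z) waypoints that a drone controller could follow in order.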

The system uses Open-Vocabulary Vision Models for environmental analysis, identifying and categorizing features without requiring a predefined label for each object. This supports higher-level reasoning: the robots can interpret the context of their surroundings and plan inspections accordingly. In the reported evaluations, the downstream routing solver converged in under 2 minutes on complex maps for a team of one drone and one ground robot, indicating that the full perception-to-plan pipeline is computationally practical.
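One way open-vocabulary labels can feed platform-specific traversal costs is a per-platform lookup table, sketched below. The label names, cost values, the `UNKNOWN_PENALTY` for unseen labels, and the confidence-blending rule are all hypothetical. They only illustrate how a single semantic map can yield different costs for an aerial and a ground robot.

```python
import math

# Hypothetical per-platform cost multipliers keyed by open-vocabulary label.
# math.inf marks a class the platform must never traverse.
TRAVERSAL_COST = {
    "ground": {"floor": 1.0, "gravel": 2.0, "stairs": math.inf, "water": math.inf},
    "aerial": {"floor": 1.0, "gravel": 1.0, "stairs": 1.0, "water": 1.2},
}
UNKNOWN_PENALTY = 5.0  # hypothetical: unseen labels are costly, not forbidden


def cell_cost(platform: str, label: str, confidence: float) -> float:
    """Cost multiplier of one map cell for one platform type."""
    base = TRAVERSAL_COST[platform].get(label, UNKNOWN_PENALTY)
    if math.isinf(base):
        return base  # hard constraint regardless of detector confidence
    # Blend toward the unknown-label penalty when the detector is unsure.
    c = min(max(confidence, 0.0), 1.0)
    return c * base + (1.0 - c) * UNKNOWN_PENALTY
```

Treating impassable classes as infinite cost, even at low confidence, is a deliberately conservative choice: a ground robot never plans through a cell that might be stairs.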

The Algorithmic Skeleton: Efficient Path Planning and Navigation

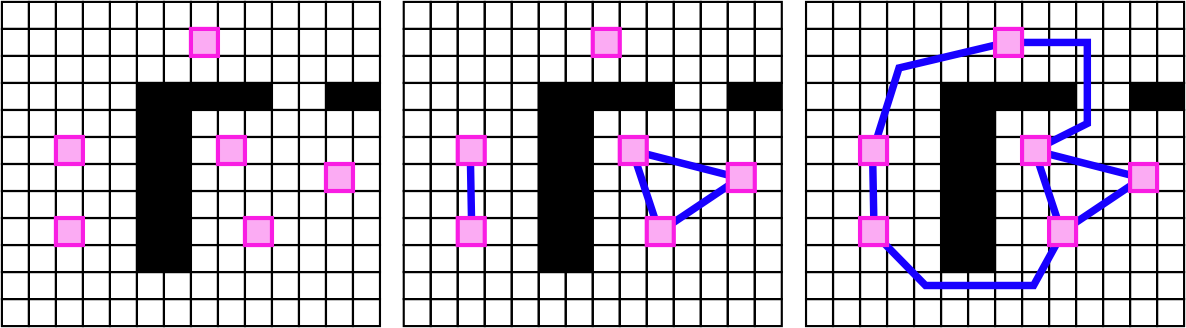

Probabilistic Roadmap (PRM) construction is utilized to pre-compute a network of feasible paths for inspection robots operating within a defined environment. This involves randomly sampling configurations within the robot’s configuration space and connecting them to form a graph, with edges representing collision-free paths. The sampling process continues until a sufficiently dense roadmap is generated, enabling efficient path planning between any two points in the environment. Collision detection is performed to validate the feasibility of each potential connection. The resulting PRM serves as a static representation of the navigable space, reducing the computational burden of real-time path planning during robot operation.
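A minimal PRM sketch for a 2D square world with circular obstacles is shown below. The k-nearest-neighbour connection strategy, the fixed sampling step used for edge collision checks, and all parameter values are assumptions for illustration; a real planner would sample the robot's full configuration space and use a proper collision checker.

```python
import math
import random


def collision_free(p, q, obstacles, step=0.05):
    """Check the straight segment p->q by sampling points along it.

    obstacles: list of circles (cx, cy, radius).
    """
    n = max(1, int(math.dist(p, q) / step))
    for i in range(n + 1):
        t = i / n
        x = p[0] + t * (q[0] - p[0])
        y = p[1] + t * (q[1] - p[1])
        if any(math.hypot(x - cx, y - cy) <= r for cx, cy, r in obstacles):
            return False
    return True


def build_prm(n_samples, k, size, obstacles, seed=0):
    """Return (nodes, edges); edges maps node index -> [(neighbour, length)]."""
    rng = random.Random(seed)
    nodes = []
    while len(nodes) < n_samples:
        p = (rng.uniform(0, size), rng.uniform(0, size))
        if collision_free(p, p, obstacles):  # reject samples inside obstacles
            nodes.append(p)
    edges = {i: [] for i in range(len(nodes))}
    for i, p in enumerate(nodes):
        # Try to connect each node to its k nearest other nodes.
        others = (j for j in range(len(nodes)) if j != i)
        for j in sorted(others, key=lambda j: math.dist(p, nodes[j]))[:k]:
            already = any(nb == j for nb, _ in edges[i])
            if not already and collision_free(p, nodes[j], obstacles):
                d = math.dist(p, nodes[j])
                edges[i].append((j, d))  # undirected: store both directions
                edges[j].append((i, d))
    return nodes, edges
```

All the expensive collision checking happens once, offline; queries later only search the resulting graph.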

The RRT* algorithm builds upon the initial probabilistic roadmap (PRM) by iteratively refining path connections to improve solution quality and exploration efficiency. Unlike standard RRT, RRT* employs a rewiring strategy: when a new random sample is connected to the tree, the algorithm first attaches it to the nearby node that gives it the cheapest path, then reconnects nearby existing nodes through the new node whenever that shortens their paths. Sampling continues until a specified iteration or convergence criterion is met. RRT* is asymptotically optimal: as the number of samples grows, the cost of the returned path converges toward the optimum. The algorithm explores high-dimensional configuration spaces efficiently while providing provable guarantees on path cost, making it suitable for complex robotic environments.
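The choose-parent and rewire steps are what distinguish RRT* from RRT. The sketch below is a standalone 2D RRT* with circular obstacles and a fixed neighbourhood `radius` (the theoretical analysis uses a radius that shrinks as the tree grows). It does not reproduce how the paper combines RRT* with its roadmap, and all parameter values are hypothetical. Note that rewiring a node must also lower the costs of its descendants, which the stack loop handles.

```python
import math
import random


def segment_clear(p, q, obstacles, step=0.05):
    """True if the segment p->q stays outside every circle (cx, cy, r)."""
    n = max(1, int(math.dist(p, q) / step))
    return all(
        math.hypot(p[0] + i / n * (q[0] - p[0]) - cx,
                   p[1] + i / n * (q[1] - p[1]) - cy) > r
        for i in range(n + 1) for cx, cy, r in obstacles)


def rrt_star(start, goal, obstacles, size=10.0, iters=1500,
             step=0.5, radius=1.0, goal_tol=0.5, seed=0):
    rng = random.Random(seed)
    nodes, parent, cost, children = [start], {0: None}, {0: 0.0}, {0: set()}
    for _ in range(iters):
        sample = (rng.uniform(0, size), rng.uniform(0, size))
        nn = min(range(len(nodes)), key=lambda i: math.dist(nodes[i], sample))
        d = math.dist(nodes[nn], sample)
        if d == 0.0:
            continue
        t = min(1.0, step / d)  # steer at most `step` toward the sample
        new = (nodes[nn][0] + t * (sample[0] - nodes[nn][0]),
               nodes[nn][1] + t * (sample[1] - nodes[nn][1]))
        if not segment_clear(nodes[nn], new, obstacles):
            continue
        near = [i for i, p in enumerate(nodes) if math.dist(p, new) <= radius]
        # Choose parent: cheapest collision-free connection among neighbours.
        best, best_cost = nn, cost[nn] + math.dist(nodes[nn], new)
        for i in near:
            c = cost[i] + math.dist(nodes[i], new)
            if c < best_cost and segment_clear(nodes[i], new, obstacles):
                best, best_cost = i, c
        k = len(nodes)
        nodes.append(new)
        parent[k], cost[k], children[k] = best, best_cost, set()
        children[best].add(k)
        # Rewire: reroute neighbours through the new node when cheaper.
        for i in near:
            c = best_cost + math.dist(new, nodes[i])
            if c < cost[i] and segment_clear(new, nodes[i], obstacles):
                children[parent[i]].discard(i)
                parent[i] = k
                children[k].add(i)
                delta, stack = c - cost[i], [i]
                while stack:  # propagate the cost drop through the subtree
                    j = stack.pop()
                    cost[j] += delta
                    stack.extend(children[j])
    # Finish at the cheapest tree node that can see the goal.
    ends = [i for i, p in enumerate(nodes)
            if math.dist(p, goal) <= goal_tol and segment_clear(p, goal, obstacles)]
    if not ends:
        return None, math.inf
    end = min(ends, key=lambda i: cost[i] + math.dist(nodes[i], goal))
    path, j = [goal], end
    while j is not None:
        path.append(nodes[j])
        j = parent[j]
    return path[::-1], cost[end] + math.dist(nodes[end], goal)
```

Because the cost dictionary is kept consistent by the propagation step, the returned cost equals the summed segment lengths of the returned path.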

A* search is implemented as a pathfinding algorithm operating within the pre-constructed Probabilistic Roadmap (PRM) to refine initial path suggestions. It ranks candidate paths by the cost function f(n) = g(n) + h(n), where g(n) is the distance traveled so far and h(n) is a heuristic estimate of the remaining distance to the goal. The heuristic used is the Euclidean distance, which is admissible and consistent when edge costs are straight-line lengths. A* systematically explores the PRM, expanding the node with the lowest estimated total cost until the goal is reached, which yields the minimum-cost path within the roadmap. This localized optimization complements the global path planning provided by PRM construction and RRT* refinement.
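A compact A* over a roadmap stored as node coordinates plus a weighted adjacency list, using the Euclidean heuristic described above, can look like this. The graph representation and the function name are assumptions; the heuristic stays admissible only as long as edge weights are straight-line lengths.

```python
import heapq
import math


def astar(nodes, edges, start, goal):
    """A* over a roadmap.

    nodes: list of (x, y) coordinates; edges: index -> [(neighbour, cost)].
    Returns (path as node indices, cost) or (None, inf) if unreachable.
    """
    def h(i):  # Euclidean distance to the goal
        return math.dist(nodes[i], nodes[goal])

    g = {start: 0.0}
    came_from = {start: None}
    frontier = [(h(start), start)]  # priority queue ordered by f = g + h
    closed = set()
    while frontier:
        _, u = heapq.heappop(frontier)
        if u == goal:
            path = []
            while u is not None:  # walk parent links back to the start
                path.append(u)
                u = came_from[u]
            return path[::-1], g[goal]
        if u in closed:
            continue  # stale queue entry
        closed.add(u)
        for v, w in edges[u]:
            cand = g[u] + w
            if cand < g.get(v, math.inf):
                g[v] = cand
                came_from[v] = u
                heapq.heappush(frontier, (cand + h(v), v))
    return None, math.inf
```

On a unit square of nodes 0 to 3 with a spur from node 2 to node 4 at (2, 1), a query from node 0 to node 4 returns the cost-3 path `[0, 1, 2, 4]`.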

The system solves a Heterogeneous Vehicle Routing Problem (HVRP) to optimize task assignment for the inspection robot fleet. This approach considers the unique capabilities of each robot when allocating inspection tasks, moving beyond simple distance-based routing. The HVRP formulation consistently reduces overall inspection time compared to standard formulations. Solver convergence times remain consistent at approximately 120 seconds across varied map configurations, demonstrating predictable performance and scalability of the routing solution.
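The paper's HVRP formulation and solver are not reproduced here. As a hedged illustration of capability-aware routing, the brute-force sketch below assigns each task only to robots whose capability set contains the task's requirement, orders each robot's stops by exhaustive search, and minimises the makespan (the longest route time). The robot and task encoding, the makespan objective, and straight-line travel at constant speed are assumptions; brute force only works for a handful of tasks, and realistic instances need a dedicated solver.

```python
import itertools
import math


def best_route(depot, stops, speed):
    """Cheapest closed tour over `stops` ({name: (x, y)}) by brute force."""
    best_t, best_order = 0.0, ()
    if stops:
        best_t = math.inf
        for order in itertools.permutations(stops):
            pts = [depot, *(stops[n] for n in order), depot]
            t = sum(math.dist(a, b) for a, b in zip(pts, pts[1:])) / speed
            if t < best_t:
                best_t, best_order = t, order
    return best_t, best_order


def solve_hvrp(robots, tasks):
    """robots: name -> (depot, speed, capabilities)
    tasks:  name -> (position, required capability)
    Returns (makespan, {robot: (route_time, stop_order)}) or (inf, None)."""
    names = list(tasks)
    # Capability filter: a task may only go to robots able to perform it.
    options = [[r for r, (_, _, caps) in robots.items() if tasks[t][1] in caps]
               for t in names]
    best = (math.inf, None)
    for choice in itertools.product(*options):
        plan, makespan = {}, 0.0
        for r, (depot, speed, _) in robots.items():
            stops = {t: tasks[t][0] for t, owner in zip(names, choice) if owner == r}
            plan[r] = best_route(depot, stops, speed)
            makespan = max(makespan, plan[r][0])
        if makespan < best[0]:
            best = (makespan, plan)
    return best
```

For example, with a drone (speed 2, capabilities {"aerial", "any"}) and a ground robot (speed 1, {"ground", "any"}) sharing a depot at the origin, a flexible "any" task next to an aerial-only task goes to the drone, because loading it onto the slower ground robot would lengthen the makespan.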

From Theory to Deployment: System Integration and Real-World Impact

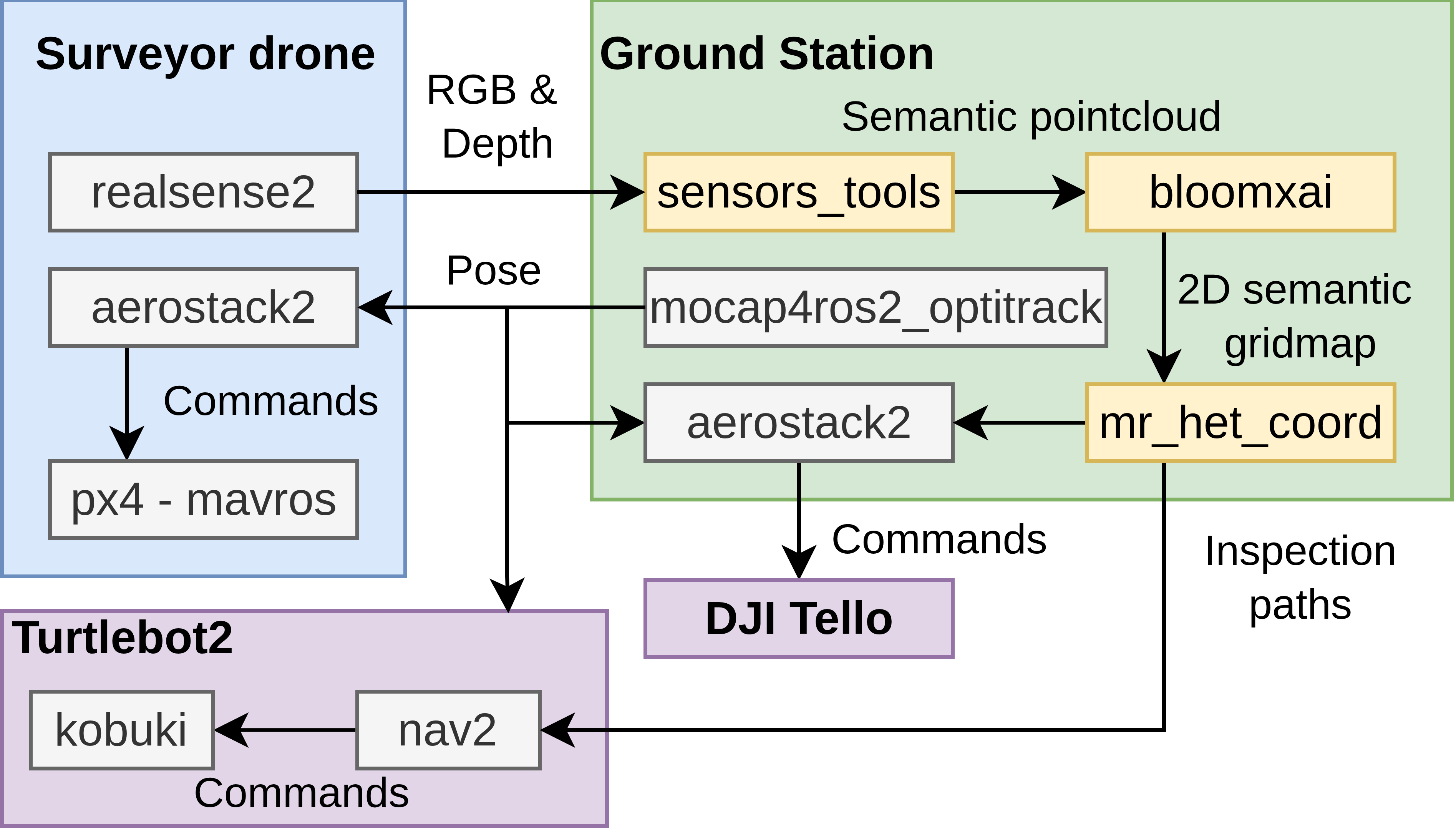

The architecture of this robotic system leverages the Robot Operating System 2 (ROS 2) framework, a key design choice that facilitates modularity and robust communication between all hardware and software components. ROS 2 provides a flexible publish-subscribe model, allowing different modules, such as those handling sensor data, localization, mapping, and robot control, to exchange information asynchronously. This distributed approach not only simplifies development and debugging but also enhances the system’s scalability and resilience. By adhering to ROS 2 standards, the system benefits from a large open-source community, pre-built tools, and readily available libraries, accelerating the development process and promoting interoperability with other robotic systems. The framework’s real-time capabilities are critical for accurate robot navigation and inspection, ensuring reliable performance in dynamic environments.

Precise robot localization is achieved through OptiTrack motion capture, a critical component for reliable map building and autonomous navigation. OptiTrack’s infrared cameras track the robot’s position and orientation in three-dimensional space with sub-millimeter accuracy, sidestepping the accumulated error of odometry or SLAM-based estimation. By providing a ground-truth reference for the robot’s pose, OptiTrack minimizes drift and ensures consistent, accurate maps, which are essential for successful path planning and task execution. Because motion capture requires an instrumented capture volume, this high-fidelity localization applies to the experimental arena; within it, the accurate pose data translates directly into improved navigational performance.

The system’s capacity for detailed environmental understanding hinges on the integration of RealSense D435i cameras, which deliver dense depth information and high-resolution imagery. These cameras aren’t simply visual sensors; their data forms the foundation for both the creation of accurate three-dimensional maps and the execution of detailed inspection routines. Point cloud data generated by the D435i is processed to identify features, obstacles, and areas of interest within the robot’s operating environment. This rich perceptual input enables the system to navigate complex spaces autonomously and perform tasks such as identifying structural defects or monitoring changes in the environment with a high degree of precision, ultimately enhancing the reliability and scope of robotic operations.
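Turning a depth image into a point cloud is a pinhole-camera back-projection. The sketch below assumes undistorted pixels, hypothetical intrinsics (`fx`, `fy`, `cx`, `cy`), and a depth scale of 1 mm per raw unit, which is a common default for D400-series cameras but should be read from the device. The RealSense SDK also ships its own deprojection helper (`rs2_deproject_pixel_to_point`) that works directly from the camera's stored intrinsics.

```python
def deproject(u, v, depth_m, fx, fy, cx, cy):
    """Back-project pixel (u, v) at depth_m metres into the camera frame."""
    x = (u - cx) * depth_m / fx
    y = (v - cy) * depth_m / fy
    return (x, y, depth_m)


def depth_to_points(depth, fx, fy, cx, cy, scale=0.001):
    """Convert a depth image (rows of raw integer values) to 3D points.

    scale: metres per raw unit (assumed 1 mm); raw 0 means no reading.
    """
    points = []
    for v, row in enumerate(depth):
        for u, raw in enumerate(row):
            if raw == 0:
                continue  # skip invalid pixels instead of placing them at the origin
            points.append(deproject(u, v, raw * scale, fx, fy, cx, cy))
    return points
```

Downstream steps such as obstacle detection or height estimation for the map would then operate on these camera-frame points after transforming them with the robot's pose.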

Validation of the integrated system involved deployment on both a ground-based TurtleBot2 robot and a DJI Tello drone, showing its adaptability across distinct robotic platforms. In real-world tests, the TurtleBot2 completed its inspection tasks in approximately 25 seconds, while the more agile DJI Tello finished in around 10 seconds. These results demonstrate timely data acquisition on platforms with very different mobility, highlighting the system’s potential for applications ranging from indoor facility monitoring to aerial infrastructure inspection.

The system detailed in this research doesn’t merely navigate; it challenges the established routes, much like a curious mind dissecting a complex machine. CHORAL’s integration of semantic mapping and traversability costs isn’t simply about finding a path, but about understanding why certain paths are preferred or avoided. This echoes Bertrand Russell’s sentiment: “The whole problem with the world is that fools and fanatics are so certain of themselves.” The framework doesn’t assume a pre-defined ‘correct’ route; instead, it actively assesses the environment, questioning initial assumptions about robot capabilities and space accessibility. It’s a system built on intellectual exploration, treating potential ‘bugs’ – or unexpected traversability assessments – as signals to refine its understanding of the operational landscape.

Beyond the Horizon

The presented framework, while demonstrably effective in coordinating heterogeneous robot teams, merely scratches the surface of true environmental understanding. The reliance on semantic mapping, though a step removed from purely geometric approaches, still presupposes a world neatly divisible into traversable and non-traversable regions. Reality, of course, delights in gradients – surfaces offering varying degrees of risk, dynamic obstacles shifting the cost of passage in real-time. Future work must dismantle this binary, embracing probabilistic traversability and actively seeking the edge of what’s possible, rather than cautiously avoiding the unknown.

Furthermore, the current formulation treats the vehicle routing problem as a pre-mission optimization. A more robust system will necessitate continuous replanning, not in response to failure, but as a core operational principle. The robots should be permitted, even encouraged, to deviate from the prescribed path if sensor data reveals a more efficient, or even a more interesting, route. This implies a shift from centralized control to a degree of distributed intelligence, a willingness to cede some predictability for the sake of adaptability.

Ultimately, the challenge lies not in simply navigating a known space, but in collaboratively constructing a map of possibility. The robots are not merely tools for inspection; they are probes, pushing the boundaries of understanding. The true metric of success will not be the number of tasks completed, but the number of assumptions overturned.

Original article: https://arxiv.org/pdf/2601.10340.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/


2026-01-16 19:13