Author: Denis Avetisyan

New research explores how an adaptive robotic interview coach balances supportive feedback with targeted skill development to improve user performance and reduce anxiety.

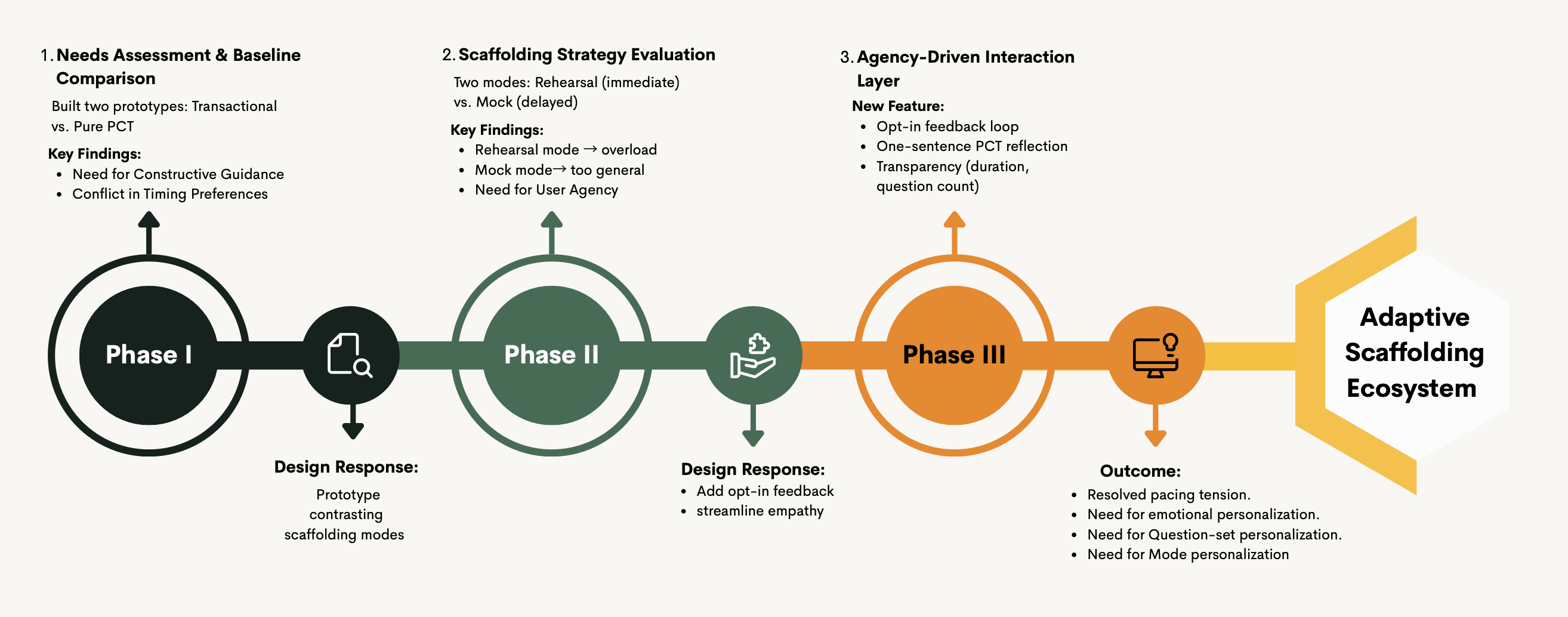

An iterative design process produced a robotic system that effectively leverages psychological safety and adaptive scaffolding to optimize the interview preparation experience.

Balancing empathetic support with actionable guidance remains a key challenge in automated coaching systems. This research, detailed in ‘Bridging Psychological Safety and Skill Guidance: An Adaptive Robotic Interview Coach’, investigates how social robots can prepare individuals for job interviews without inducing anxiety or cognitive overload. Through an iterative design process, the authors show that an adaptive system, one that lets users control the timing of feedback, navigates this tension and fosters both psychological safety and skill development. Could this approach redefine robotic coaching as a dynamic ecosystem, prioritizing user agency and a responsive balance between support and challenge?

The Illusion of Scaffolding: Why ‘Help’ Often Hinders

Conventional educational scaffolding, while intending to support learning, frequently proves ineffective due to its standardized approach. Often, these strategies deliver a uniform level of assistance irrespective of a student’s existing knowledge or skill set, inadvertently fostering dependency rather than independent problem-solving. This one-size-fits-all methodology overlooks the crucial variability in individual learning paces and pre-existing capabilities, potentially hindering progress for both struggling and advanced learners. Consequently, students may become reliant on the provided support, unable to confidently tackle challenges when assistance is gradually withdrawn – a situation that ultimately undermines the goal of cultivating self-directed learning and genuine understanding.

Scaffolding therefore presents a curious paradox: both excessive guidance and a complete lack of support can impede a student’s development. Overly directive scaffolding, though seemingly helpful, can foster dependency and keep the learner from developing problem-solving skills and self-reliance. Conversely, a detached approach, which assumes the learner is ready for independent work before they have grasped the foundational concepts, can lead to frustration, disengagement, and stalled progress. Knowing when to provide assistance and, crucially, when to withdraw it is central to effective instruction, and it requires educators to assess individual needs and adjust their support dynamically.

Truly impactful educational strategies navigate a delicate equilibrium between offering necessary support and fostering independent learning, a complexity heightened within sensitive social dynamics. Research demonstrates that interventions which rigidly dictate steps, or conversely, abruptly abandon guidance, often prove ineffective; instead, successful programs dynamically adjust to the learner’s evolving capabilities and the specific social landscape. This necessitates careful consideration of cultural norms, pre-existing relationships, and potential power imbalances, ensuring that assistance empowers individuals without undermining their agency or creating unintended dependencies. Ultimately, the goal is to cultivate self-directed learning within a supportive framework, allowing individuals to confidently navigate challenges and contribute meaningfully to their communities.

Giving Learners the Reins: Agency-Driven Interaction

The Agency-Driven Interaction Layer is a system designed to grant learners direct control over their feedback experience. This is achieved by allowing users to determine when they receive feedback and, crucially, what type of feedback is delivered – for example, choosing between hints, solutions, or explanatory materials. This design is predicated on principles of Self-Determination Theory, specifically the tenets of autonomy, competence, and relatedness; by fostering a sense of control over the learning process, the system aims to increase intrinsic motivation and enhance learning outcomes. The layer functions as an intermediary between the learning environment and the learner, enabling personalized support that aligns with individual preferences and promotes self-directed learning.
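The learner-controlled delivery described above can be sketched in a few lines. This is a minimal illustration, not the study's implementation: the names `AgencyLayer`, `FeedbackItem`, and `FeedbackKind` are hypothetical, and the only idea taken from the text is that feedback is held back until the learner asks for a particular type.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List


class FeedbackKind(Enum):
    # Feedback types named in the text; the enum itself is an assumption.
    HINT = "hint"
    SOLUTION = "solution"
    EXPLANATION = "explanation"


@dataclass
class FeedbackItem:
    kind: FeedbackKind
    text: str


@dataclass
class AgencyLayer:
    """Hypothetical layer that holds feedback until the learner requests it."""
    pending: List[FeedbackItem] = field(default_factory=list)

    def queue(self, item: FeedbackItem) -> None:
        # The coach generates feedback, but nothing is delivered yet.
        self.pending.append(item)

    def request(self, kind: FeedbackKind) -> List[str]:
        # Deliver only the kind the learner chose; everything else stays queued.
        chosen = [item.text for item in self.pending if item.kind is kind]
        self.pending = [item for item in self.pending if item.kind is not kind]
        return chosen

    def pending_count(self) -> int:
        return len(self.pending)
```

In this sketch the learner decides both the moment (`request` is never called by the system) and the type of feedback, which is the control the layer is meant to provide.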

The system employs ‘Implicit Sensing’ techniques, using data streams from learner interactions, such as response times, error rates, and interaction patterns, to infer anxiety levels without requiring explicit self-reporting. Algorithms analyze these data to identify indicators of cognitive load or frustration. Based on these inferences, the system dynamically adjusts the level and type of support it offers, ranging from hints and simplified explanations to more extensive guidance, with the goal of maintaining psychological safety and preventing learner disengagement. This adaptive approach differs from traditional methods in that it responds to inferred emotional states rather than relying solely on learner-initiated requests for help.
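One way to picture this kind of inference is a simple heuristic over recent turns. The sketch below is an assumption-laden illustration, not the method used in the study: the `Turn` record, the 8-second slowness threshold, the equal weighting, and the 0.3 and 0.6 cut-offs are all invented for the example. Only the input signals (response times, errors) and the three support levels come from the text.

```python
from dataclasses import dataclass
from statistics import mean
from typing import List


@dataclass
class Turn:
    response_seconds: float  # time the learner took to start answering
    made_error: bool         # whether the answer was flagged as an error


def strain_score(turns: List[Turn], slow_seconds: float = 8.0) -> float:
    """Return a 0..1 heuristic: the average of the slow-response rate and the error rate.

    The threshold and the equal weighting are illustrative assumptions.
    """
    if not turns:
        return 0.0
    slow_rate = mean(1.0 if t.response_seconds > slow_seconds else 0.0 for t in turns)
    error_rate = mean(1.0 if t.made_error else 0.0 for t in turns)
    return (slow_rate + error_rate) / 2


def support_level(score: float) -> str:
    # Map the score to the support levels named in the text; cut-offs are hypothetical.
    if score >= 0.6:
        return "extended guidance"
    if score >= 0.3:
        return "simplified explanation"
    return "hint"
```

A real system would likely combine more signals and calibrate per user; the point here is only that support can be selected from behavior without asking the learner to report how they feel.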

Traditional adaptive scaffolding systems adjust support levels based on inferred learner performance; this design extends that functionality by explicitly granting learners control over the scaffolding process itself. Rather than relying solely on automated adjustments, the system allows learners to directly request specific types of assistance, such as hints, examples, or simplified explanations, and to regulate the timing of these interventions. This learner-directed approach shifts the focus from reactive support determined by the system to proactive self-regulation facilitated by the system, letting learners tailor the experience to their own needs and preferences and fostering a sense of autonomy.

Testing the Waters: A Robotic Coaching System

An iterative design study was used to develop and refine the robotic coaching system. The system, built on the Misty II social robot platform, was repeatedly designed, implemented, evaluated, and refined based on user interaction and collected data. Each cycle addressed shortcomings identified in the previous one, improving the system’s functionality and user experience over the course of the study. The methodology treated real-world use and user feedback as integral to design and refinement, with the aim of a final system that was both effective and user-centered.

The robotic coaching system’s core functionality leveraged the OpenAI GPT-4 Realtime API to facilitate dynamic, conversational interactions. This API was integrated with a software stack utilizing Node.js for backend logic and React.js for the user interface. Node.js handled API requests, data processing, and system control, while React.js provided a responsive and interactive front-end for users to engage with the coaching prompts and receive feedback delivered via the Misty II robot. This combination enabled real-time natural language processing and generation, allowing the robot to adapt its coaching strategy based on user input and progress.

The robotic coaching system was evaluated against a transactional control system as a baseline. The study incorporated varied scaffolding strategies, specifically ‘Rehearsal Mode’ and ‘Mock Mode’, to assess their impact on user experience. Results indicated a statistically significant reduction in user-reported social anxiety (p < 0.001) with the agency-driven system. User satisfaction, as measured by post-session surveys, averaged 91.3 out of 100, indicating a positive response to the robotic coaching approach.

The Illusion of Progress: What We’ve Learned (And What Remains)

The study’s results point to a link between empowering learners and positive educational outcomes, especially when addressing sensitive challenges like social anxiety. An agency-driven intervention, in which individuals actively shape their learning experience, yielded a statistically significant reduction in reported social anxiety (p < 0.001), which indicates the change is unlikely to be due to chance, though it does not by itself describe the size of the effect. The approach also earned high user satisfaction, with a score of 91.3 out of 100, suggesting that learners not only benefited from the intervention but also found the experience valuable and engaging. These findings suggest that opportunities for self-direction are not simply a pedagogical preference, but an important component of learning environments where individuals feel safe, supported, and capable of growth.

The robotic platform distinguishes itself as a uniquely adaptable system for delivering individualized coaching and support, offering a departure from traditional, often rigid, educational interventions. Its modular design facilitates easy deployment across diverse learning environments and accommodates a wide range of learner needs, circumventing logistical hurdles associated with human-led coaching. This scalability isn’t merely about reaching more individuals; the platform’s architecture allows for the customization of coaching strategies, tailoring the experience to specific learning goals and psychological profiles. Furthermore, the robotic form factor presents opportunities for consistent delivery of support, minimizing variability introduced by human factors and enabling researchers to isolate the impact of specific interventions with greater precision – a crucial asset for future studies seeking to refine and optimize personalized learning approaches.

Continued investigation into this robotic coaching platform should prioritize assessing its adaptability beyond the initial study’s scope, extending to diverse subjects and varied groups of learners. While current findings indicate that rapport was preserved, as evidenced by a statistically non-significant difference in RoSAS Warmth scores, future studies can focus on actively enhancing learner competence. Initial data suggest a modest increase in competence scores, from a baseline of 5.81 to 6.25 (a gain of 0.44 points), indicating room to refine the intervention further and maximize skill development across a broader range of educational applications and individual needs.

The pursuit of seamless human-robot interaction often overlooks the messy reality of deployment. This research, focusing on adaptive scaffolding within an interview coach, highlights a crucial tension: balancing psychological safety with genuine skill development. It’s a pragmatic approach, acknowledging that even the most elegant algorithms must yield to user agency. As Edsger W. Dijkstra observed, “It’s not that we need more intelligence, but that we need less.” The system doesn’t attempt to solve interview anxiety, but rather provides a controlled environment where users can practice, adapting to their cognitive load and maintaining a sense of control – a compromise that, at least for now, has survived the inevitable chaos of real-world application. Everything optimized will one day be optimized back, and this work seems to accept that fate with a cautious, iterative design.

What’s Next?

The demonstrated balance between psychological safety and skill guidance, while encouraging, feels less like a solution and more like a temporary reprieve. The system functions within a constrained domain – interview practice. Extending this adaptive scaffolding to more complex, less structured learning environments will undoubtedly reveal the brittleness inherent in any attempt to model ‘person-centered’ interaction. Tests are, after all, a form of faith, not certainty.

Future iterations will likely grapple with the inevitable problem of edge cases. How does the system respond to a user deliberately sabotaging the process, or exhibiting signs of distress outside the pre-defined parameters? More fundamentally, the research sidesteps the question of why someone would consistently choose robotic guidance over human interaction. Automation doesn’t ‘save’ anyone; it simply shifts the points of failure.

The true measure of this work won’t be elegant algorithms, but the frequency of Monday morning incidents. Will these systems ultimately reduce cognitive load, or merely redistribute it onto a support team tasked with untangling the consequences of unanticipated user behavior? The interesting problems, predictably, lie not in the design, but in the deployment.

Original article: https://arxiv.org/pdf/2601.10824.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-01-19 16:10