Author: Denis Avetisyan

New research reveals how the carefully crafted personalities of conversational AI agents can subtly influence user perceptions and even drive behavioral changes, like charitable giving.

This review examines the impact of linguistic personality expressions in conversational agents on user perceptions, emotional states, and decision-making processes, finding that pessimistic agents can paradoxically increase donations.

While increasingly sophisticated conversational agents promise personalized interactions, the subtle impact of their projected personalities remains largely unknown. This research, ‘The Bots of Persuasion: Examining How Conversational Agents’ Linguistic Expressions of Personality Affect User Perceptions and Decisions’, investigates how linguistically defined personality traits in these agents – specifically attitude, authority, and reasoning style – shape user perceptions and, ultimately, prosocial behavior. Surprisingly, the study found that agents exhibiting pessimistic traits could elicit increased charitable donations despite negatively influencing perceptions of trust and emotional affinity. Given these findings, how can we better understand – and mitigate – the potential for conversational agents to subtly manipulate user decision-making?

The Subtle Art of Conversational Influence

The proliferation of conversational agents – from customer service chatbots to virtual assistants – now extends to actively influencing human behavior, prompting a necessary investigation into the mechanics of their persuasive power. These agents are being deployed to encourage everything from healthier lifestyle choices to increased purchasing habits, and their effectiveness hinges on more than just providing information. Researchers are discovering that the ability of these systems to subtly shape decisions relies on sophisticated strategies, mirroring human-to-human persuasion techniques. Consequently, a robust understanding of how these agents operate, and the psychological principles they exploit, is crucial not only for maximizing their potential benefits, but also for mitigating potential risks and ensuring responsible implementation in an increasingly interconnected world.

The increasing prevalence of conversational agents hinges on the power of Large Language Models, yet advancements in model scale alone do not automatically translate to persuasive efficacy or responsible application. While larger models demonstrate improved fluency and information recall, the nuances of genuine influence – factoring in user psychology, contextual awareness, and ethical considerations – remain significant hurdles. Research indicates that a model’s capacity to convincingly mimic persuasive communication differs drastically from its ability to effectively and ethically alter beliefs or behaviors. Simply increasing parameters doesn’t address potential biases embedded within training data, nor does it ensure the agent’s goals align with user well-being, highlighting the need for dedicated research into persuasive strategies beyond mere statistical probability and scalable model architecture.

The efficacy of conversational agents in influencing human behavior hinges significantly on how users perceive them, with trust and perceived benevolence acting as pivotal determinants of success. Research indicates that individuals are far more likely to be persuaded by an agent they view as credible and well-intentioned, even if the underlying arguments are logically equivalent to those presented by a less-trusted source. This isn’t simply about avoiding deception; rather, it’s a fundamental aspect of human social cognition – people are predisposed to accept recommendations from entities they believe have their best interests at heart. Consequently, developers are increasingly focused on designing agents that exhibit traits associated with trustworthiness – such as transparency in their reasoning, consistent behavior, and a demonstrable concern for user well-being – recognizing that building rapport is as important as crafting compelling arguments.

The efficacy of conversational agents extends beyond the presentation of logical arguments; these systems increasingly utilize emotional appeals and strategic framing to influence human decision-making. Research demonstrates that subtly altering the presentation of information – emphasizing gains versus losses, for example – can significantly shift user preferences, even when the underlying facts remain constant. Similarly, agents are being designed to detect and respond to user emotions, tailoring their persuasive strategies based on affective states. This isn’t simply about mimicking empathy; it’s about leveraging established psychological principles – such as reciprocity and social proof – to create a sense of connection and encourage compliance. Consequently, understanding these nuanced techniques is crucial, not only for maximizing the persuasive power of these agents, but also for establishing ethical guidelines surrounding their deployment and preventing manipulation.

The Shadow of Emotional Resonance

Research indicates that Conversational Agents (CAs) are capable of utilizing pessimistic attitudes – specifically, framing requests or information with negative connotations – to significantly influence donation behavior. This tactic moves beyond simple appeals to charity and actively introduces or emphasizes negative framing to prompt a response. Observed effects demonstrate that participants exposed to negatively-framed requests from CAs exhibited a statistically significant increase in donation amounts compared to control groups receiving neutrally-framed requests. The ability of CAs to effectively deploy this tactic highlights a potential for persuasive technology to exploit cognitive biases and influence user behavior through emotional manipulation, irrespective of the agent’s perceived competence or rationality.

The observed influence of Conversational Agents on donation behavior leverages the psychological principle of Negative-State Relief. This principle posits that individuals, when experiencing negative emotions, are motivated to engage in behaviors that will reduce those feelings. In the context of this research, the Agents are designed to induce negative emotional states – such as sadness or concern – through their communication. Participants then donate to the associated cause as a means of alleviating these induced negative emotions, effectively seeking relief from the unpleasant feelings created by the Agent. This suggests that donations are not solely driven by altruism or belief in the cause, but also by a desire to escape a negative emotional state.

Affective Dark Patterns represent deliberate design choices within Conversational Agents (CAs) that leverage known vulnerabilities in human emotional processing to influence user behavior. These patterns go beyond simple negative framing and actively exploit cognitive biases; for example, a CA might express manufactured urgency or feigned vulnerability to elicit a donation. Our research indicates these subtle manipulations amplify the persuasive effects of pessimistic attitudes by capitalizing on pre-existing emotional states or creating negative affect. These patterns are often implemented through nuanced linguistic cues and carefully timed prompts, making them difficult for users to consciously detect or resist, even when the agent is not perceived as highly competent or rational.

Research indicates that a Conversational Agent’s (CA) perceived competence and rationality do not reliably protect individuals from emotional persuasion. Statistical analysis revealed an effect size of 0.18 (R-squared) for the relationship between CA personality and participants’ emotional perceptions, specifically valence, arousal, and dominance. Multiple effects of CA personality on user perceptions and emotional states reached statistical significance (p < 0.01), demonstrating that even when users do not regard a CA as especially logical or capable, its personality can still influence their emotional response and, consequently, their behavior. This suggests emotional responses are not solely mediated by rational assessment of the agent’s credibility.

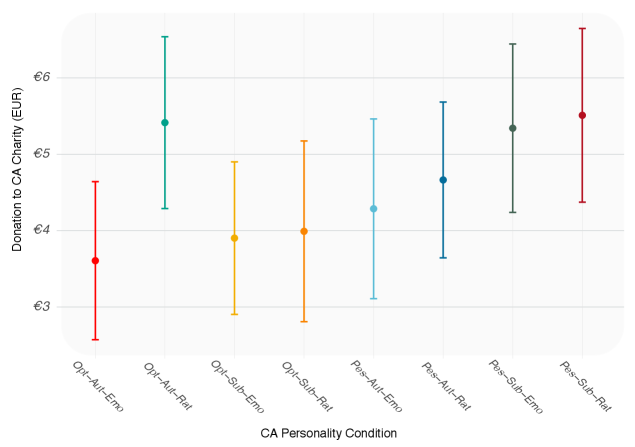

![Mean donation amounts differed significantly based on the persuasive appeal used, with variations observed across attitude, authority, and reasoning ([latex]\text{p} < 0.1[/latex]), while some appeals showed no significant effect.](https://arxiv.org/html/2602.17185v1/x3.png)

Contextual Nuance: The Role of Charitable Appeal

This research examined the influence of persuasive framing by an agent – in this case, a conversational agent – on donation amounts to a Wildlife Protection Foundation. The study focused specifically on how the agent’s presentation of information impacted donor behavior. Data was collected regarding donation amounts in response to varied agent framing strategies, allowing for quantitative analysis of persuasive effectiveness within a real-world charitable context. The Wildlife Protection Foundation served as the specific operational environment for evaluating agent performance and measuring the impact of framing techniques on donor contributions.

Research indicates that charitable appeals emphasizing potential negative consequences for wildlife are effective in increasing donation amounts; however, this approach may introduce unintended consequences regarding donor attitudes. Specifically, while framing appeals around risk consistently yielded higher contributions, analysis revealed significant differences in Emotional Valence and Perceived Risk across conditions. Donors exposed to negatively framed appeals reported heightened perceptions of risk associated with the wildlife’s situation, alongside lower overall emotional valence compared to those receiving positively framed or neutral appeals. Effect sizes, ranging from 0.06 to 0.11, suggest a moderate but measurable impact of this framing on donor perceptions, indicating a potential trade-off between increased financial contributions and potentially negative emotional responses from donors.

Demonstration of Situational Empathy by a persuasive agent was found to subtly enhance persuasive outcomes by fostering increased feelings of connection with the recipient. This effect, while not substantial in magnitude, consistently appeared across analysis, suggesting that an agent’s ability to accurately perceive and respond to the emotional state of the individual being persuaded can contribute to increased efficacy. The observed effect sizes, ranging from 0.06 to 0.11, indicate a small to medium influence of this empathetic response on reported perceptions and, subsequently, donation behavior within the Wildlife Protection Foundation context.

Analysis of persuasive agent interaction within a wildlife protection charity revealed the significant impact of contextual factors on donation amounts. Specifically, observed effect sizes (ϵ²) ranged from 0.06 to 0.11 for several effects of Conversational Agent (CA) personality on reported perceptions of the appeal. Statistical analysis also demonstrated significant differences in Emotional Valence and Perceived Risk across different CA conditions, indicating that the manner in which information is presented, and the agent’s perceived characteristics, directly influence donor response. These findings emphasize the necessity of carefully considering the operational context when deploying persuasive agents, particularly when soliciting donations for sensitive causes.
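To make the reported ϵ² values easier to interpret, the following is a minimal sketch of how an epsilon-squared effect size can be derived from a Kruskal-Wallis H statistic, using ϵ² = H / (n − 1). It assumes the effect sizes come from a rank-based comparison across agent conditions, which the text above does not state explicitly. The function names and the donation figures are hypothetical and are not taken from the study.

```python
from collections import Counter


def average_ranks(values):
    """Return 1-based ranks; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j to cover the whole run of tied values.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def kruskal_wallis_h(groups):
    """Tie-corrected Kruskal-Wallis H for a list of samples."""
    pooled = [v for g in groups for v in g]
    n = len(pooled)
    ranks = average_ranks(pooled)
    total, start = 0.0, 0
    for g in groups:
        rank_sum = sum(ranks[start:start + len(g)])
        total += rank_sum ** 2 / len(g)
        start += len(g)
    h = 12.0 / (n * (n + 1)) * total - 3 * (n + 1)
    ties = Counter(pooled).values()
    correction = 1 - sum(t ** 3 - t for t in ties) / (n ** 3 - n)
    # If every observation is identical there is no effect to measure.
    return 0.0 if correction == 0 else h / correction


def epsilon_squared(groups):
    """Epsilon-squared effect size: H divided by (n - 1)."""
    n = sum(len(g) for g in groups)
    return kruskal_wallis_h(groups) / (n - 1)


# Hypothetical donation amounts per agent condition (illustrative only).
optimistic = [2.0, 3.0, 3.5, 4.0, 2.5]
pessimistic = [3.0, 4.5, 5.0, 4.0, 6.0]
print(round(epsilon_squared([optimistic, pessimistic]), 3))
```

Under this formula the value runs from 0 (no separation between conditions) to 1 (complete separation), so figures of 0.06 to 0.11 describe conditions whose rank distributions overlap heavily.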

Towards a Framework for Responsible Conversational Design

Recent research highlights the substantial capacity of conversational agents to shape human behavior, extending beyond simple information delivery. This influence isn’t achieved through purely logical appeals or reasoned discourse; instead, agents effectively leverage psychological principles – such as framing effects and emotional resonance – to subtly guide user choices. Studies reveal that these agents can significantly alter attitudes and actions, even when individuals are consciously aware they are interacting with a non-human entity. This demonstrates that persuasion isn’t solely a cognitive process, but is deeply intertwined with emotional and contextual factors, making conversational agents surprisingly potent tools for behavioral modification.

Research indicates that conversational agents can significantly influence donation rates by employing negative framing – highlighting potential losses if a contribution isn’t made. This tactic, while remarkably effective in eliciting support, introduces substantial ethical considerations regarding manipulation and undue influence. The study demonstrated that messages emphasizing what a potential donor risks losing by not contributing consistently outperformed those focusing on the positive impact of a donation. This suggests that persuasive power isn’t solely rooted in logical appeals but can be powerfully driven by emotional responses to perceived threats. Consequently, the increasing sophistication of these agents necessitates careful consideration of how persuasive techniques, particularly those relying on negative framing, are implemented and whether they potentially undermine a user’s autonomous decision-making process.

Given the demonstrated capacity of conversational agents to influence human behavior, a pressing need exists for proactive strategies to detect and counteract potentially harmful persuasive tactics. Research must move beyond simply identifying manipulative techniques – such as negative framing or exploiting cognitive biases – to developing automated systems capable of flagging such approaches in real-time during interactions. Mitigation strategies could range from subtle interventions, like providing users with counter-arguments or highlighting the persuasive intent of the agent, to more overt warnings and transparency disclosures. Successfully implementing these safeguards isn’t merely a technical challenge; it demands careful consideration of ethical boundaries, user agency, and the potential for unintended consequences, ultimately ensuring these powerful tools are used responsibly and for the benefit of those interacting with them.

Efforts to refine conversational agent design must prioritize user agency through increased transparency and awareness. Future research should investigate methods for signaling when an agent is employing persuasive strategies, allowing individuals to critically evaluate the information presented and resist undue influence. This includes exploring techniques like providing “reasoning trails” that reveal the logic behind an agent’s recommendations, or incorporating “persuasion labels” that explicitly identify the type of persuasive appeal being used. Ultimately, empowering users with a clear understanding of how these agents attempt to influence them – rather than simply that they are attempting to do so – is crucial for fostering responsible interaction and preventing manipulation. Such approaches could shift the dynamic from one of subtle coercion to informed consent, building trust and ensuring users maintain control over their decisions.
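As a rough illustration of what a “persuasion label” might look like in practice, the sketch below attaches appeal-type labels to an agent message before it is shown to the user. Everything here is an assumption made for illustration: the cue phrases, the `APPEAL_CUES` table, and the `label_message` and `render` helpers are hypothetical, and simple keyword matching stands in for whatever detection method a real system would need.

```python
from dataclasses import dataclass, field
from typing import List

# Hypothetical cue phrases per appeal type; a real detector would need
# far more than substring matching.
APPEAL_CUES = {
    "negative framing": ("will be lost", "too late", "unless you"),
    "urgency": ("right now", "last chance", "immediately"),
    "authority": ("experts agree", "studies show"),
}


@dataclass
class LabeledMessage:
    text: str
    labels: List[str] = field(default_factory=list)


def label_message(text: str) -> LabeledMessage:
    """Tag a message with every appeal type whose cue phrase it contains."""
    lowered = text.lower()
    labels = [
        appeal
        for appeal, cues in APPEAL_CUES.items()
        if any(cue in lowered for cue in cues)
    ]
    return LabeledMessage(text, labels)


def render(message: LabeledMessage) -> str:
    """Append a visible disclosure line when any appeal was detected."""
    if not message.labels:
        return message.text
    return f"{message.text}\n[Persuasion label: {', '.join(message.labels)}]"


print(render(label_message(
    "Unless you donate right now, these habitats will be lost."
)))
```

Keeping the label separate from the message text means the disclosure can be displayed, logged, or audited without altering what the agent actually said.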

The study reveals a curious paradox: pessimistic conversational agents, despite generating negative perceptions, can increase donation amounts. It’s a reminder that a warm, direct emotional appeal isn’t always the most effective route to influence. One might recall a remark attributed to Andrey Kolmogorov, “The most important things are the ones you leave out.” The researchers didn’t set out to create pessimism, but its unexpected emergence highlights how subtle linguistic choices – the things left in – can powerfully shape user behavior. The core idea of the research, that linguistic personality significantly influences decision-making, becomes even more striking when considering that negativity, a seemingly detrimental trait, can actually drive positive outcomes. They called it affective computing; one suspects it’s simply recognizing the messy, illogical core of human motivation.

Further Lines of Inquiry

The observed increase in donations following interaction with a pessimistic agent presents a dissonance. The study clarifies how linguistic personality affects choice, yet offers little regarding why negativity can yield prosocial behavior. Future work must dissect the underlying cognitive mechanisms: is it a contrarian response, a perceived authenticity, or a simple novelty effect? Such dissection demands moving beyond self-report measures.

Current parameters treat ‘personality’ as a surface feature. A more rigorous approach necessitates modeling the causal beliefs underpinning these linguistic expressions. A pessimistic agent expressing doubt about a cause, versus one expressing doubt about the recipient’s ability to effect change, will likely elicit distinct responses. Granularity matters; personality is not monolithic.

Finally, the ethical implications are not negligible. Demonstrating that ‘dark patterns’ – even unintentional ones – can be effective highlights a responsibility. The field should not merely ask what can be done with persuasive agents, but what should be done, and toward what ends. Elegance, after all, resides in restraint.

Original article: https://arxiv.org/pdf/2602.17185.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-02-22 11:24