Evolving Power: AI Designs Next-Gen Circuit Topologies

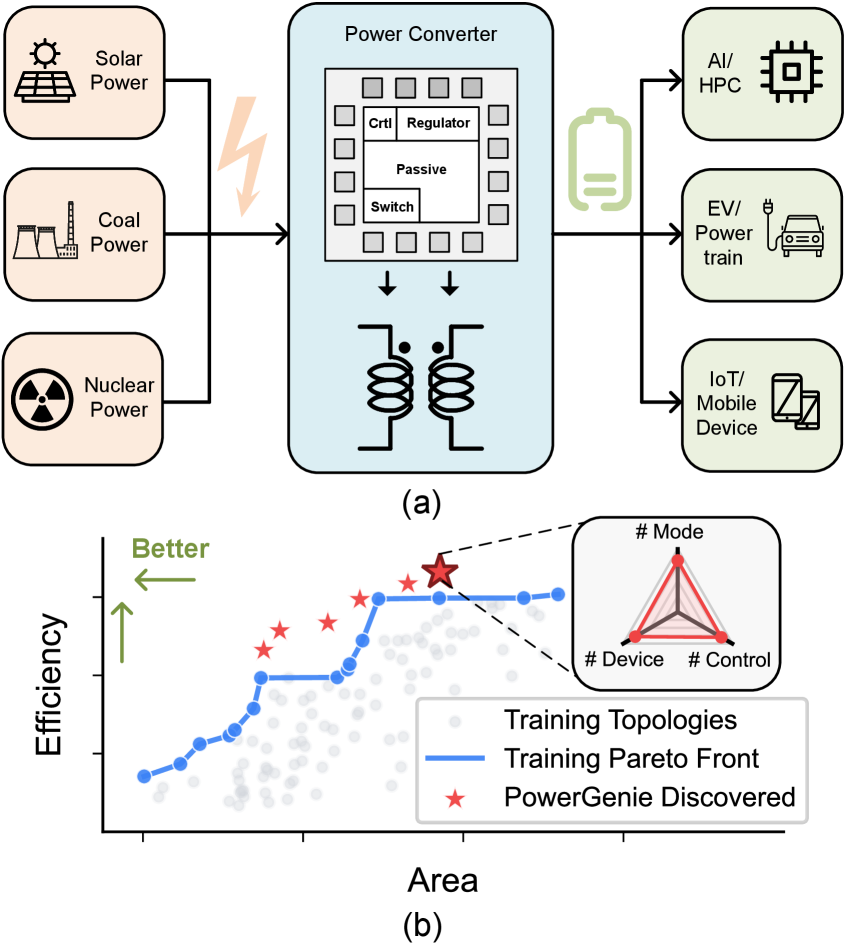

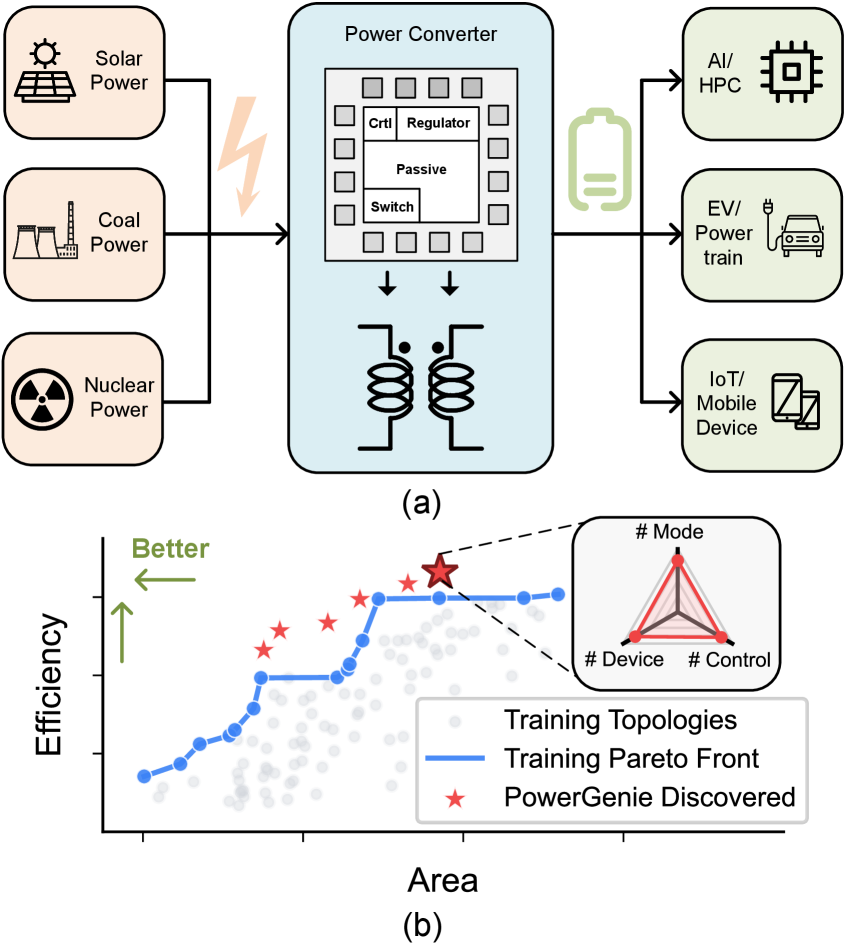

A new AI framework, PowerGenie, automatically discovers power converter designs that outperform existing solutions by intelligently exploring a vast landscape of circuit configurations.

A new framework simplifies the process of combining language models, revealing significant performance gains and emphasizing the benefits of diverse AI approaches.

A new framework empowers large language models to conduct in-depth visual research, dramatically improving performance on complex question answering and information retrieval tasks.

As artificial intelligence systems become more independent and operate at ever-higher speeds, existing governance frameworks are proving inadequate to ensure human oversight and maintain intended outcomes.

![Despite variations in surface-level wording, semantic extraction via the JustAsk method achieves [latex]0.94[/latex] semantic similarity with direct extraction from the npm package (specifically, Claude Code’s Explore subagent prompt), validating that consistency-based verification effectively captures the underlying operational semantics of the system.](https://arxiv.org/html/2601.21233v1/figures/fig_validation.png)

New research reveals that the core directives governing leading artificial intelligence models can be exposed with surprising ease through clever questioning.

![WheelArm-Sim integrates human guidance into a physics-based simulation, enabling task execution through real-time teleoperation and simultaneously collecting data encompassing human instructions, visual information via [latex]RGB-D[/latex] images, and comprehensive robot performance metrics.](https://arxiv.org/html/2601.21129v1/Images/cover3_pic.png)

Researchers have developed a new physics-based simulator and dataset designed to accelerate the development of unified control systems for assistive robots that combine wheelchair navigation and robotic arm manipulation.

A new benchmark assesses the ability of artificial intelligence to autonomously navigate and complete intricate data analysis pipelines in the life sciences.

![Latent components extracted from paired images are recombined, allowing the selective transfer of appearance and scene characteristics, and then decoded via a diffusion process to generate novel imagery. The technique is refined through adversarial training, in which the system learns to create merged visuals convincing enough to challenge a discriminator’s ability to identify their hybrid origin, with [latex] z \tilde{z} [/latex] representing the recombined latent code.](https://arxiv.org/html/2601.22057v1/figs/combined_illustration.png)

A new approach leverages discriminator guidance to unlock compositional learning in diffusion models, improving sample quality and expanding creative potential.

Researchers have unveiled a new testing ground for evaluating the potential of machine learning to accelerate the discovery of novel materials.

Researchers have developed a reinforcement learning approach enabling an AI agent to rapidly acquire a human-like understanding of physics-based mechanics, even with extremely limited observational data.