Smart Robotics: Minimizing Energy in Infrastructure Maintenance

![An end-to-end reinforcement learning framework achieves effective manipulation of articulated objects while actively regulating energy consumption by integrating RGB-D part segmentation, masked point-cloud sampling, and PointNet-based visual encoding with proprioceptive states, and enforcing an explicit energy constraint through a constrained SAC controller utilizing a Lagrangian mechanism [latex] \mathcal{L} [/latex].](https://arxiv.org/html/2602.12288v1/images/architecture.png)

A new approach to robotic manipulation uses artificial intelligence to efficiently operate and maintain complex components, reducing energy consumption and downtime.

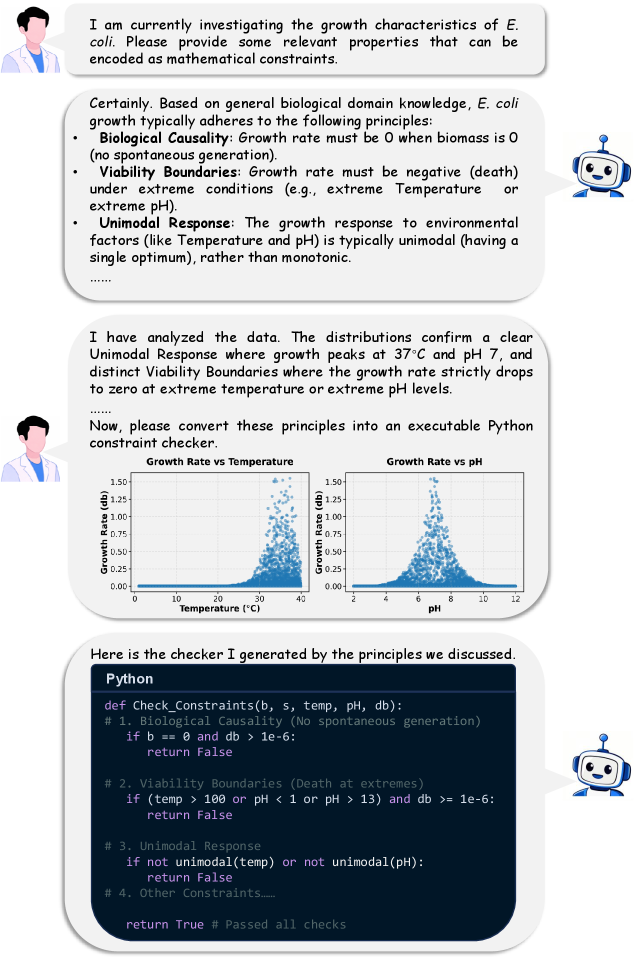

A new framework addresses the challenge of spurious correlations in equation discovery, ensuring that derived formulas align with established scientific principles.

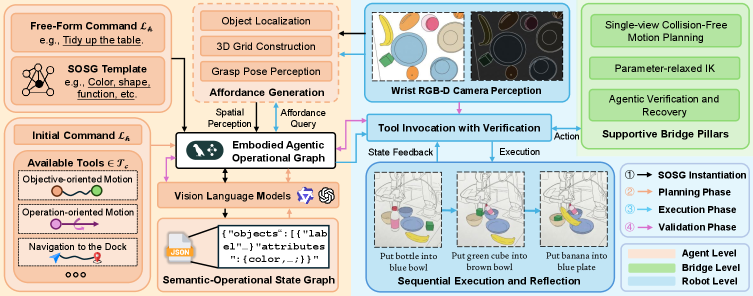

Researchers have developed a novel framework that empowers robots to tackle complex manipulation tasks without prior training by combining high-level reasoning with real-time feedback.

![CellScape constructs a cellular landscape by jointly modeling spatial proximity and gene co-expression, employing a dual-branch architecture that generates both spatial embeddings [latex]Z_{\text{spatial}}[/latex] and intrinsic gene expression embeddings [latex]Z_{\text{intrinsic}}[/latex], thereby enabling a nuanced understanding of cellular organization and facilitating diverse downstream analyses in spatial omics data.](https://arxiv.org/html/2602.12651v1/figs/fig1_v3.png)

Researchers have developed a powerful deep learning framework to decode the complex spatial arrangement of cells and their genomic interactions within tissues.

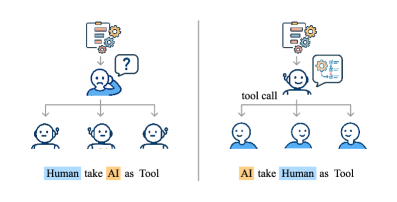

New research proposes a shift in how humans and AI work together, suggesting AI-driven workflows can boost efficiency and reduce mental strain.

![Across five manipulation tasks, a curriculum informed by force feedback (specifically, the CRAFT enhancement to both [latex]\pi_0[/latex]-base and RDT models) consistently elevates task success rates, with particularly pronounced improvements observed in scenarios demanding substantial physical contact, thus demonstrating the efficacy of force-aware fine-tuning.](https://arxiv.org/html/2602.12532v1/x4.png)

A new framework prioritizes tactile feedback to help robots master complex, contact-rich manipulation tasks.

![Agent skills are structured through a progressive disclosure architecture, loading information in stages to efficiently manage context window limitations while retaining access to complex procedural knowledge, with token estimations reflecting per-skill averages as detailed in prior work [35].](https://arxiv.org/html/2602.12430v1/x1.png)

A new paradigm is emerging where large language models aren’t just responding to prompts, but actively learning and applying specific skills to complete complex tasks.

Researchers have enhanced robotic tactile sensing by fusing internal vision with audio input, allowing robots to identify fabrics with human-like accuracy.

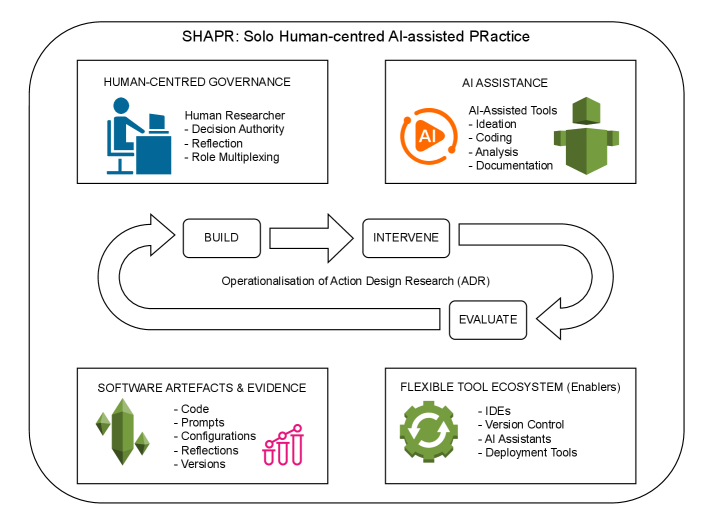

A new framework offers practical guidance for researchers leveraging artificial intelligence to develop software independently, prioritizing both methodological rigor and personal learning.

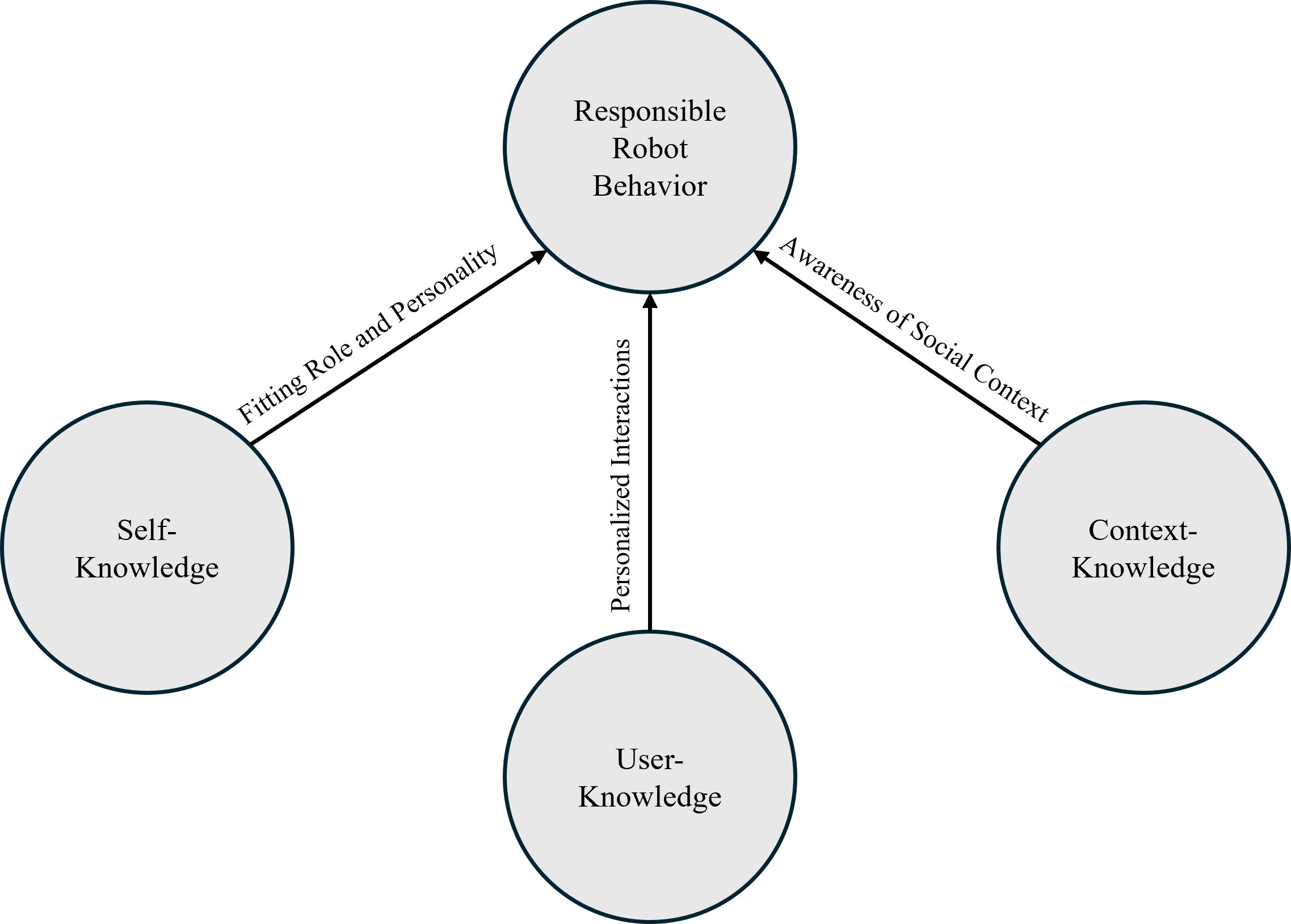

As generative social robots move into classrooms, careful consideration of their underlying knowledge is crucial for effective and responsible learning support.