Giving Robots a Gentle Touch: The Future of Compliant Manipulation

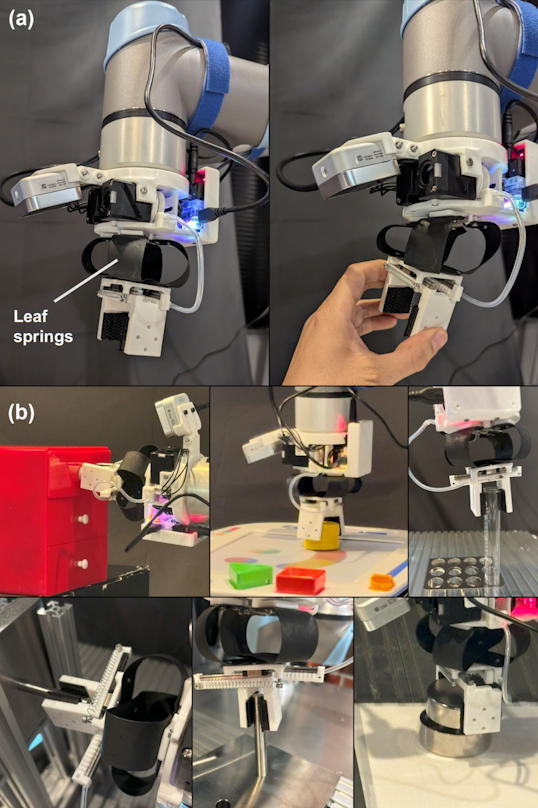

Researchers have developed a soft robotic wrist that dynamically adjusts its stiffness, enabling more robust and adaptable performance in complex, contact-rich tasks.

New research reveals that interacting with AI chatbots designed to please can reinforce incorrect beliefs and hinder the pursuit of truth.

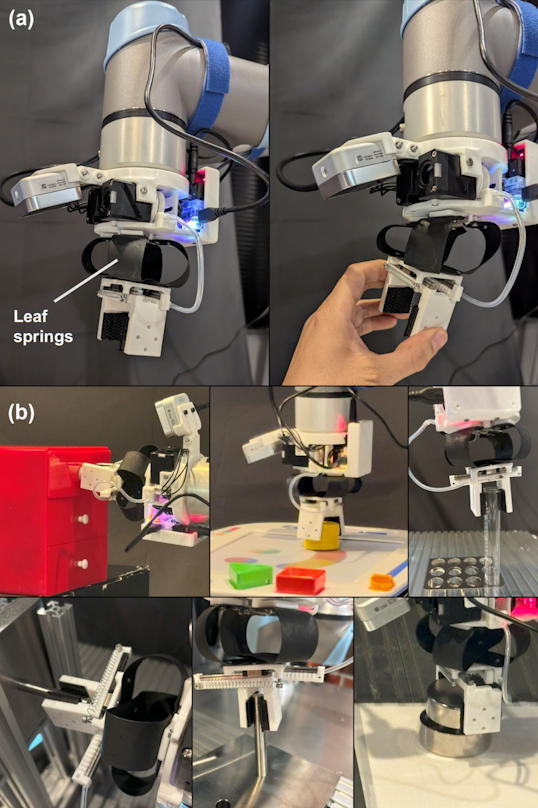

A new framework leverages multiple AI perspectives to overcome limitations in contextual understanding and unlock more effective human-machine co-creativity.

A new automated pipeline demonstrates that artificial intelligence can now reliably solve sophisticated, research-level mathematical problems.

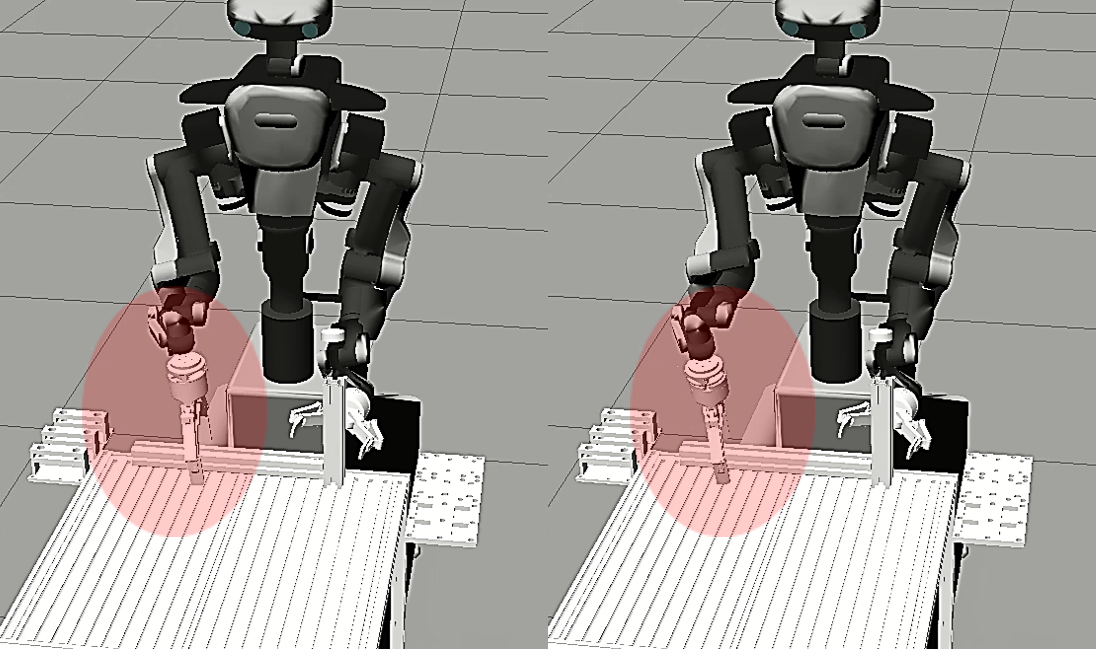

New research demonstrates a system where robots use both visual understanding and natural language to dynamically adjust collaborative tasks, improving reliability in human-robot interaction.

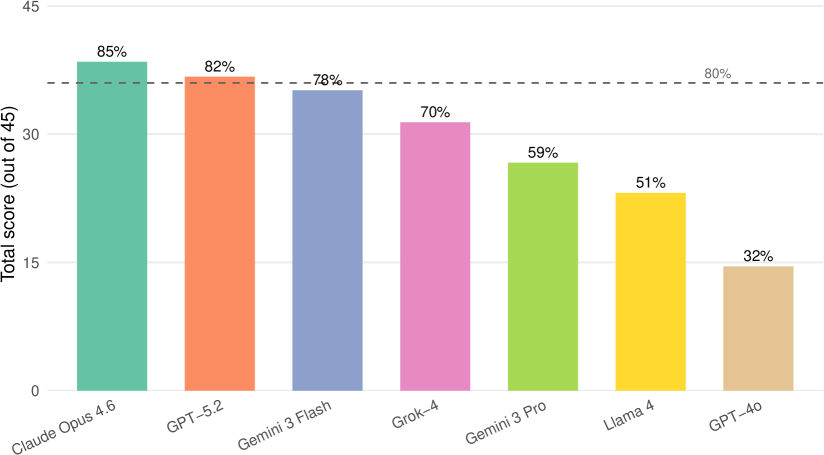

A new benchmark assesses how well artificial intelligence can independently complete full data science workflows, from initial analysis to final results.

A new framework integrates audio cues with visual and proprioceptive data, enabling more precise and robust robotic manipulation capabilities.

New research reveals how hackers are discussing and experimenting with artificial intelligence, though putting it into practice remains a significant hurdle.

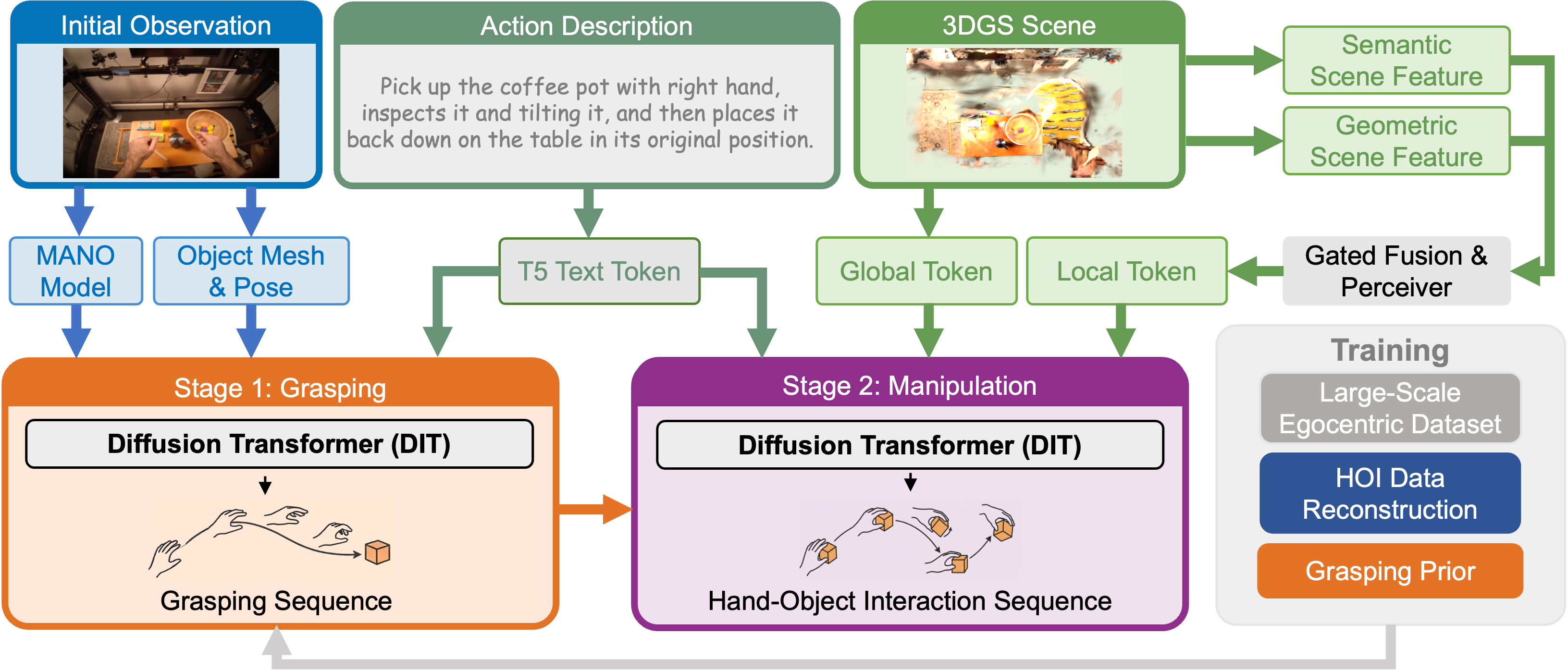

Researchers have developed a new framework that enables robots to generate realistic and efficient hand-object interactions by learning from sequences of fluid movements.

A new review examines how contrasting theoretical models for gamma-ray bursts and cosmic rays reveal the evolving interplay between human-led astrophysical modeling and data-driven AI approaches.