Author: Denis Avetisyan

Researchers have developed a novel method for enabling artificial intelligence to learn and utilize communication strategies that mirror human concept building.

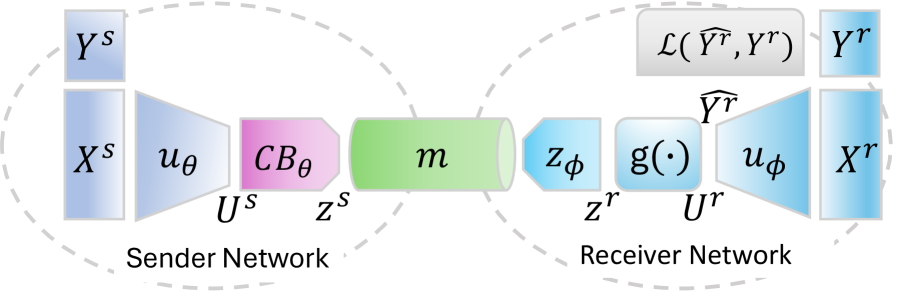

This paper introduces ‘Composition through Decomposition,’ a two-step reinforcement learning framework that achieves robust compositional generalization in emergent communication by first learning discrete concepts and then composing them.

Humans readily combine known concepts to understand novelty, a capacity often lacking in artificial neural networks. This challenge is addressed in ‘CtD: Composition through Decomposition in Emergent Communication’, which introduces a two-step method enabling agents to learn and utilize compositional generalization for describing images. By first decomposing visual input into discrete concepts and subsequently composing these into complex descriptions, the approach achieves robust zero-shot generalization, performing well on unseen images without additional training. Could this ‘decompose-then-compose’ strategy unlock more flexible and human-like communication capabilities in artificial intelligence?

The Fragile Dance of Accuracy and Composition

The very essence of communication hinges on a delicate balance between faithfully representing information – accuracy – and the capacity to build complex ideas from simpler components – compositionality. However, these two qualities frequently exist in tension; improvements in one often come at the expense of the other. A system capable of flawlessly recalling individual facts might struggle to synthesize them into a coherent narrative, while a system adept at combining ideas may sacrifice precision in the details. This inherent trade-off presents a significant challenge in fields ranging from artificial intelligence to linguistics, as truly effective communication requires not just the transmission of data, but the nuanced construction of meaning from its constituent parts. Achieving both accuracy and compositionality remains a central goal in the pursuit of robust and versatile communication systems.

Contemporary neural networks, despite achieving remarkable feats in areas like image recognition and natural language processing, often falter when tasked with complex reasoning. The core of this difficulty lies in their struggle to simultaneously maintain both accuracy and compositional ability; while adept at recognizing patterns, they can stumble when required to combine simple concepts into nuanced understandings of multi-faceted scenarios. This isn’t simply a matter of scale; increasing network size doesn’t reliably solve the problem. Instead, the architecture itself frequently limits the network’s capacity to disentangle relevant information and build logical connections, resulting in outputs that, while superficially plausible, lack the rigorous coherence needed for dependable reasoning in complex domains. Consequently, applications demanding precise, structured thought – such as scientific discovery or legal analysis – continue to pose significant challenges for current artificial intelligence systems.

The challenge of maintaining both accuracy and compositional reasoning in neural networks is significantly compounded by a phenomenon akin to superposition in quantum physics. As information is processed through successive layers, representations don’t remain neatly separated; instead, they become entangled, effectively blurring the lines between distinct concepts. This entanglement hinders clear signal transmission, making it difficult for the network to isolate and accurately convey specific details. The result is a diminished capacity for complex reasoning, as the network struggles to disentangle these superimposed representations and reliably combine simple elements into meaningful, coherent outputs. Consequently, even slight variations in input can trigger unpredictable and inaccurate responses, highlighting the need for architectures that promote representational clarity and prevent this debilitating entanglement.

Building from the Ground Up: Decomposition as a Principle

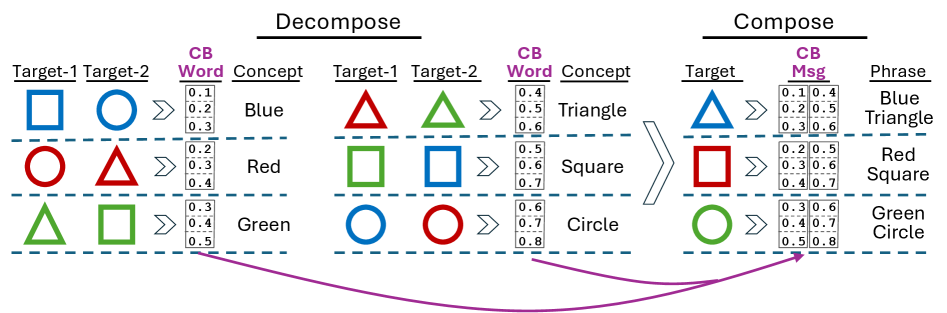

Composition Through Decomposition is a learning strategy wherein agents initially acquire distinct representations for fundamental concepts before learning to combine these representations to convey more complex information. This contrasts with end-to-end learning, where complex messages are learned directly without explicit conceptual grounding. The method focuses on building a modular system; individual concept representations are established and refined independently, allowing for greater flexibility and generalization when constructing novel messages. This approach facilitates the creation of complex communications from a limited set of basic elements, improving efficiency and interpretability compared to holistic message learning.

A Discrete Codebook functions as a foundational element in compositional communication by establishing a finite set of vector representations for fundamental concepts. This approach contrasts with continuous vector spaces, offering a discrete and interpretable communication channel. Each vector within the codebook corresponds to a specific concept, and messages are constructed by combining these vectors. The use of a finite set simplifies the learning process and allows for explicit representation of compositional structure, as the space of possible combinations is constrained and more readily navigable during training and inference. This structured space facilitates the development of agents capable of both encoding and decoding complex messages built from these core conceptual units.
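As a rough illustration of the idea, the sketch below builds a message by looking up codebook vectors by index. The codebook size, the vector dimension, the random values, and the concept names are all hypothetical placeholders chosen for this example; they are not taken from the paper.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical codebook: K concept vectors of dimension D (random placeholder values).
K, D = 6, 4
codebook = rng.normal(size=(K, D))

# Hypothetical concept names, one per codebook row.
concepts = ["red", "blue", "green", "circle", "square", "triangle"]


def compose(indices, codebook):
    """Build a message by stacking the codebook vectors selected by `indices`."""
    return codebook[np.asarray(indices)]


# A two-symbol message describing a "blue square".
message_indices = [concepts.index("blue"), concepts.index("square")]
message = compose(message_indices, codebook)

print(message_indices)  # [1, 4]
print(message.shape)    # (2, 4)
```

With K entries and messages of length two, only K² = 36 messages exist in this toy setting, which is the sense in which a finite codebook constrains the space of combinations.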

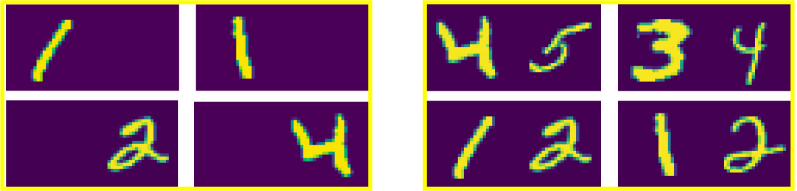

The MultiTargetCoordinationGame is a simulated environment designed to train agents in compositional generalization. It presents agents with scenarios requiring them to coordinate actions to achieve goals involving multiple, dynamically positioned targets. The game’s structure forces agents to learn representations of individual concepts – such as target identification and spatial relationships – and then combine these representations to formulate novel, complex behaviors. Crucially, the environment features variable numbers of targets and randomized target locations during each training episode, preventing agents from relying on memorized sequences and instead necessitating the development of a compositional understanding of the task. Performance is evaluated based on the agent’s ability to successfully navigate to and interact with the designated targets, providing a quantitative measure of its compositional skill.

The Art of Abstraction: Discretization and Efficient Learning

Vector Quantization (VQ) is a data compression technique that maps a continuous input vector to the closest vector within a finite set of pre-defined codebook vectors. This process effectively discretizes the input space, reducing the amount of information required for representation. The codebook, consisting of K vectors, each of dimension D, serves as a lookup table; for any input vector, the algorithm identifies the codebook vector with the minimum distance, typically calculated using Euclidean distance. The index of this selected codebook vector then represents the original input, creating a manageable and potentially lower-dimensional communication channel. This discretization is lossy, as information is lost in the mapping, but it allows for efficient representation and transmission of data, particularly in scenarios where bandwidth or storage is limited.
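The nearest-neighbour lookup described above can be sketched in a few lines of NumPy. The function name `quantize` and the toy codebook values are assumptions made for this example only.

```python
import numpy as np


def quantize(x, codebook):
    """Map each row of x (N, D) to its nearest codebook row (K, D) by Euclidean distance."""
    # Pairwise squared distances between inputs and codebook vectors, shape (N, K).
    diffs = x[:, None, :] - codebook[None, :, :]
    dists = np.sum(diffs ** 2, axis=-1)
    # Index of the closest codebook vector for every input.
    indices = np.argmin(dists, axis=1)
    return indices, codebook[indices]


# Toy codebook with K = 3 vectors of dimension D = 2 (illustrative values).
codebook = np.array([[0.0, 0.0], [1.0, 1.0], [4.0, 0.0]])
x = np.array([[0.2, -0.1], [0.9, 1.3], [3.0, 0.5]])

indices, x_q = quantize(x, codebook)
print(indices)  # [0 1 2]

# The mapping is lossy: the quantized vectors differ from the inputs.
print(np.mean((x - x_q) ** 2))
```

Only the integer indices need to be transmitted; the receiver recovers the quantized vectors by indexing into the same codebook.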

VQ-VAE (Vector Quantized Variational Autoencoder) builds upon vector quantization by learning the codebook itself during training. Traditional vector quantization relies on a pre-defined codebook; however, VQ-VAE integrates the codebook learning process into the autoencoder architecture. This is achieved by treating the codebook as a set of learnable embedding vectors and using a straight-through estimator to enable gradient propagation through the discrete quantization step. By learning a quantized latent space, VQ-VAE creates a more efficient data representation by reducing the dimensionality and forcing the model to learn a compact set of representative features, thereby improving both compression and generative capabilities.
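For reference, the training objective commonly given for VQ-VAE can be written as follows. The notation is the standard one from the VQ-VAE literature and is an assumption here, since the article does not state the exact loss used in this work: $z_e(x)$ is the encoder output, $e_k$ the selected codebook vector, $\mathrm{sg}[\cdot]$ the stop-gradient operator, and $\beta$ a commitment weight.

$$
\mathcal{L} = \mathcal{L}_{\text{rec}}(x, \hat{x}) + \big\lVert \mathrm{sg}[z_e(x)] - e_k \big\rVert_2^2 + \beta \, \big\lVert z_e(x) - \mathrm{sg}[e_k] \big\rVert_2^2,
\qquad k = \arg\min_j \lVert z_e(x) - e_j \rVert_2
$$

The straight-through estimator is usually expressed as

$$
z_q(x) = z_e(x) + \mathrm{sg}\big[e_k - z_e(x)\big],
$$

so the forward pass outputs $e_k$ while the backward pass treats quantization as the identity and copies the decoder's gradient to the encoder unchanged.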

Gumbel-Softmax is a technique used to address the non-differentiability of discrete variables during gradient-based training in neural networks. It operates by re-parameterizing a discrete variable, typically representing a category selection, as a continuous relaxation. This is achieved by adding Gumbel noise to the logits and applying a softmax function, resulting in a probability distribution over the discrete categories. The temperature parameter within the softmax controls the smoothness of this distribution; a lower temperature approaches a one-hot encoding, representing a discrete choice, while a higher temperature yields a softer, more continuous distribution. This allows gradients to flow through the discrete sampling process, enabling the network to learn effectively despite the inherent non-differentiability of discrete choices. The resulting gradient estimate is a continuous proxy for the discrete operation, facilitating optimization via standard backpropagation algorithms.
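A minimal NumPy sketch of the sampling step is shown below. It covers only the forward computation (no gradients), and the function name, logits, and temperatures are illustrative assumptions rather than settings from the paper.

```python
import numpy as np


def gumbel_softmax(logits, temperature, rng):
    """Draw one relaxed categorical sample from `logits` at the given temperature."""
    # Gumbel(0, 1) noise via inverse transform sampling: g = -log(-log(u)).
    u = rng.uniform(low=1e-10, high=1.0, size=logits.shape)
    g = -np.log(-np.log(u))
    # Perturb the logits, scale by temperature, then apply a numerically stable softmax.
    y = (logits + g) / temperature
    y = y - np.max(y)
    e = np.exp(y)
    return e / np.sum(e)


logits = np.log(np.array([0.1, 0.6, 0.3]))

# Re-seeding gives both calls the same noise, so only the temperature differs.
soft = gumbel_softmax(logits, temperature=5.0, rng=np.random.default_rng(0))
sharp = gumbel_softmax(logits, temperature=0.1, rng=np.random.default_rng(0))

print(soft)   # spread across categories
print(sharp)  # concentrated on one category, close to one-hot
```

Both outputs are valid probability vectors with the same most likely category; lowering the temperature only moves mass toward that category.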

Reinforcement Learning (RL) is integrated into the framework to optimize agent behavior within the constraints of the discretized communication channel established through vector quantization and learned latent spaces. Specifically, agents are trained via RL algorithms to effectively encode and decode messages using the limited, discrete codebook. This approach allows the system to learn optimal communication strategies, maximizing information transfer despite the imposed discretization. The RL training process focuses on rewarding agents for successful communication, resulting in policies that efficiently utilize the available discrete representation for both sending and receiving information.

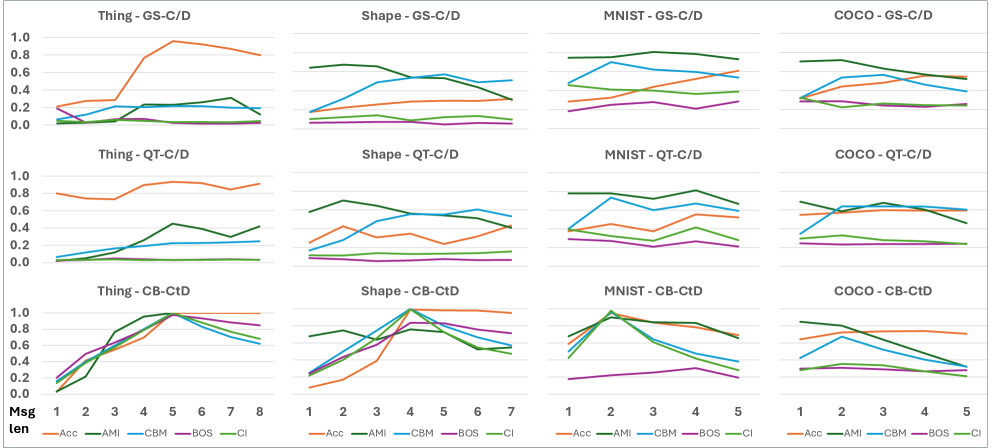

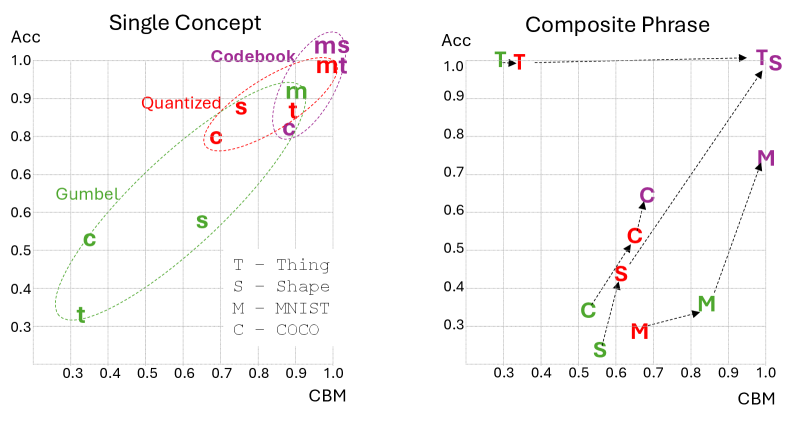

Evaluation of the Composition through Decomposition (CtD) method across multiple datasets yielded perfect Accuracy (1.00), indicating flawless performance in task completion. Furthermore, the method achieved a Compositional Bootstrapping Measure (CBM) of 1.00 and a Compositional Generalization Index (CI) of 1.00. These results demonstrate the method’s ability to not only perform accurately on observed data but also to generalize effectively to novel compositional structures, validating the framework’s efficacy in learning and applying compositional representations.

The Echo of Meaning: Emergent Communication and System Demonstration

Emergent communication offers a compelling paradigm for investigating the origins of language and coordination, sidestepping the need for pre-programmed linguistic structures. Rather than relying on existing vocabulary or grammatical rules, this framework allows agents to develop communication systems from scratch through repeated interaction and reinforcement learning. The process begins with agents attempting to convey information – be it about their internal states or the external environment – and receiving feedback based on the success of that transmission. This iterative cycle encourages the spontaneous creation of signals and shared meanings, fostering a communication protocol uniquely tailored to the specific task and the interacting agents. By observing how these systems evolve, researchers gain valuable insights into the fundamental principles governing language acquisition and the conditions necessary for effective collaboration, even in the absence of explicit instruction or shared prior knowledge.

Index-based communication offers a compelling solution to the challenges of building communication systems between agents. Rather than relying on complex, pre-defined linguistic structures, this approach utilizes a discrete codebook – a finite set of symbols or ‘indices’ – allowing agents to reference concepts in a remarkably efficient manner. Each index within the codebook corresponds to a specific concept, and agents learn to associate these indices with the relevant information during interaction. This streamlined process circumvents the need for intricate language parsing and generation, enabling rapid and effective information exchange. By focusing on referencing concepts directly through these indices, the system achieves a level of clarity and conciseness that is crucial for successful coordination and collaborative problem-solving between agents.

The ContextConsistentObverter serves as a compelling demonstration of the communication framework’s capabilities, revealing how agents can achieve effective coordination through learned communication. This system challenges agents to navigate a shared environment and accomplish tasks reliant on successfully conveying information about object locations, essentially building a language from scratch. Through repeated interactions and a discrete codebook, agents develop a shared understanding, enabling them to request and provide information with increasing accuracy. The Obverter’s success isn’t merely about transmitting data; it highlights the emergence of a functional, context-dependent communication system where meaning is derived from shared experience and the need to solve a common problem, proving the potential for artificial agents to develop collaborative skills without pre-programmed linguistic rules.

The foundation of this research lies in the Information Bottleneck principle, a theory positing that effective communication necessitates distilling information down to its most essential components. Rather than transmitting every detail, the system prioritizes retaining only the data crucial for achieving a specific goal, thereby maximizing efficiency and minimizing redundancy. This approach ensures that agents don’t simply exchange noise, but rather focus on transmitting only the information that genuinely impacts their coordinated actions. By embracing this principle, the study fosters a streamlined communication process where messages are concise, meaningful, and directly relevant to the task at hand, ultimately enabling robust and adaptive interaction between agents.
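In its standard form, the Information Bottleneck objective is written as a trade-off between compression and relevance. The symbols below follow the usual textbook formulation and are assumptions with respect to this paper: $X$ is the observation, $T$ the compressed message, $Y$ the task-relevant variable, and $\beta > 0$ sets the trade-off.

$$
\min_{p(t \mid x)} \; I(X; T) - \beta \, I(T; Y)
$$

Minimizing $I(X; T)$ discards detail from the input, while the $-\beta \, I(T; Y)$ term rewards keeping whatever the message needs to predict the task outcome.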

The consistency of the developed communication system is quantified by a reported Adjusted Mutual Information (AMI) score of 1.00. AMI measures how closely two labelings agree, corrected for the agreement expected by chance; here it compares what a message was intended to convey with how it was interpreted. A score of 1.00 indicates complete alignment between communicative intent and understanding in the emergent language, which was developed entirely through agent interaction. This result supports the index-based communication approach and suggests that artificial agents can autonomously construct meaningful and reliable communication protocols, paving the way for more sophisticated multi-agent coordination and collaborative problem-solving.

The pursuit of compositional generalization, as demonstrated in this work, echoes a fundamental truth about complex systems. They rarely arise from pristine design, but from the accretion of discrete elements, imperfectly assembled. This paper’s ‘Composition through Decomposition’ method, with its emphasis on learning concepts before composing them, isn’t so much an architecture as a recognition of inevitable fragmentation. As Blaise Pascal observed, “The belly is an ungovernable master.” So too are the emergent properties of communication; they will find a way, even through a codebook built on imperfect, discrete foundations. The architecture isn’t structure – it’s a compromise frozen in time, and this method simply acknowledges that compromise upfront.

What Lies Ahead?

The pursuit of compositional generalization, as demonstrated through this ‘Composition through Decomposition’ method, merely reframes an ancient problem. It splits the challenge of meaning – of coordinating action across agents – into discrete steps, a tempting architectural gesture. Yet, it does not escape the fundamental truth: every element introduced to manage complexity introduces a new surface for failure. The discrete codebook, intended as a scaffold for shared understanding, is itself a dependency, a point of systemic fragility.

Future work will undoubtedly explore larger codebooks, more sophisticated reinforcement learning schemes, and attempts to scale this approach to multi-target coordination scenarios of increasing complexity. However, these expansions risk amplifying the inherent brittleness. The system learns concepts, then learns to combine them – but it does not learn to unlearn them, to adapt when the underlying assumptions prove false.

The true test will not be achieving higher scores on benchmark tasks, but observing the system’s behavior when confronted with genuine novelty, with situations that fall outside the carefully curated training distribution. It is then that the limitations of discrete representations, of forced decomposition, will become most apparent. The system will either gracefully degrade, or it will fall apart, revealing that every connection is, ultimately, a potential point of collapse.

Original article: https://arxiv.org/pdf/2601.10169.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-01-18 09:42