Author: Denis Avetisyan

This review examines the burgeoning field of AI agents designed to embody distinct personalities and engage in realistic interactions.

An analysis of current progress, persistent challenges, and future research directions in role-playing language agent development.

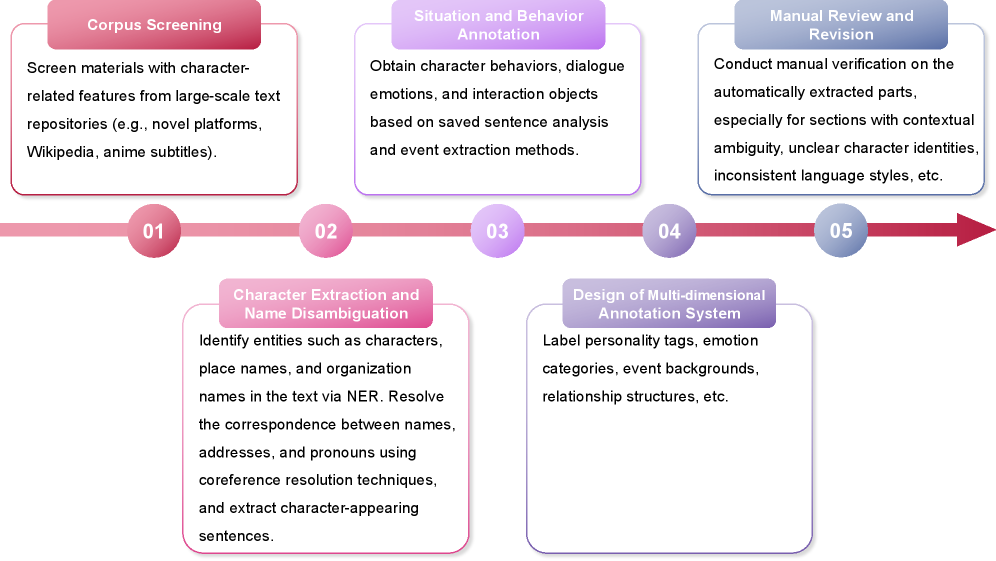

While creating truly believable virtual characters remains a significant challenge, the field of role-playing language agents, surveyed in ‘Role-Playing Agents Driven by Large Language Models: Current Status, Challenges, and Future Trends’, is advancing rapidly thanks to innovations in large language models. The paper systematically reviews the current state of these agents, tracing progress from basic template responses to sophisticated personality modeling and the memory mechanisms crucial for consistent behavior. It highlights key areas such as data construction, evaluation metrics, and emerging techniques for driving realistic interactions. What further breakthroughs will be necessary to create agents capable of truly immersive and engaging narratives?

The Illusion of Agency: Addressing the Limits of Current AI

Many contemporary conversational AI systems struggle to maintain a consistent persona throughout interactions, often delivering responses that feel disjointed or robotic. This inconsistency stems from a reliance on pattern-matching and statistical prediction, rather than a deep understanding of character and motivation. Consequently, these agents frequently fail to exhibit the believable behaviors – the subtle nuances in language, the appropriate emotional responses, and the consistent adherence to a defined identity – necessary for truly engaging interactions. The resulting experiences can feel superficial, hindering the development of rapport and limiting the potential for immersive storytelling or collaborative problem-solving, ultimately frustrating users seeking genuine connection or assistance.

The pursuit of genuinely immersive digital experiences necessitates artificial agents capable of far more than simple response generation; these agents must convincingly embody distinct roles and dynamically adjust their behavior within evolving scenarios. Current limitations in artificial intelligence often result in interactions feeling stilted or predictable, breaking the suspension of disbelief crucial for deep engagement. To overcome this, research focuses on equipping agents with the capacity for nuanced characterization, enabling them to not merely respond to situations, but to react as a specific persona would, complete with consistent motivations, beliefs, and emotional responses. This dynamic adaptation extends beyond pre-programmed scripts; it requires agents to learn from interactions, refine their understanding of the environment, and modify their behavior accordingly, fostering a sense of genuine agency and believability that transcends purely functional interactions.

Successfully imbuing artificial intelligence with believable agency requires more than simply generating coherent dialogue; it demands a robust system for representing and simulating the intricacies of personality. The challenge isn’t just defining traits like ‘optimism’ or ‘sarcasm’, but also modeling how those traits manifest in varied situations, influencing decision-making, emotional responses, and even subtle linguistic choices. Current research focuses on developing computational models that move beyond simple keyword associations, instead aiming to capture the underlying cognitive and emotional architecture that drives consistent, nuanced behavior. This involves representing traits as dynamic parameters influencing an agent’s internal state and, crucially, allowing for internal conflicts and inconsistencies – imperfections that paradoxically contribute to a sense of authenticity. The ability to simulate these complex internal processes is proving essential for creating AI agents that feel less like sophisticated chatbots and more like genuinely engaging characters.

Recent progress in Role-Playing Language Agents (RPLAs) is producing agents that portray consistent, engaging personalities. These agents go beyond basic chatbot functionality. They use large language models to generate responses from a defined character's perspective and maintain that persona across extended interactions. Earlier systems were prone to inconsistent behavior. RPLAs instead use techniques such as memory networks and persona conditioning to retain character-specific details, including backstory, motivations, and stylistic quirks, which makes exchanges more immersive and believable. This capability could reshape applications in entertainment, education, and customer service. Interactions would feel less like communicating with a machine and more like engaging with a distinct, memorable individual.

Constructing a Consistent Self: The Foundation of Believable Characters

Personality modeling for effective role-playing necessitates a system capable of representing an agent’s consistent traits and behavioral tendencies. This involves defining parameters that govern responses to stimuli, influencing decision-making, and shaping overall interaction patterns. A robust model extends beyond simple categorization, requiring the quantification of these traits to enable predictable and reproducible behavior. The system must capture both broad personality characteristics and more nuanced individual quirks, allowing for a differentiated and believable character representation. This foundation is critical for generating responses that align with the established persona across varied conversational contexts and interaction scenarios.

Personality modeling within interactive agents is quantitatively grounded through the utilization of established psychological scales. These scales, such as the Big Five Inventory (OCEAN – Openness, Conscientiousness, Extraversion, Agreeableness, Neuroticism) and the Myers-Briggs Type Indicator, provide a framework for defining and measuring personality traits along defined dimensions. By assigning numerical values to these dimensions, agent personalities become data-driven and consistently reproducible. This approach allows for the creation of profiles representing a wide spectrum of behaviors and reactions, facilitating more realistic and predictable agent responses within simulations and interactive environments. Furthermore, the use of standardized scales enables objective comparison and analysis of agent personalities, as well as the potential for generating agents with specifically targeted personality characteristics.
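The following sketch shows how numeric trait scores make a persona reproducible. The Python below stores a Big Five profile as floats in [0, 1] and renders it into a system-prompt fragment for the underlying language model. The class name `BigFiveProfile`, the 0.33/0.67 thresholds, and the prompt wording are illustrative assumptions, not details taken from the review.

```python
from dataclasses import dataclass, asdict


@dataclass(frozen=True)
class BigFiveProfile:
    """Hypothetical persona profile: each OCEAN trait scored in [0, 1]."""
    openness: float
    conscientiousness: float
    extraversion: float
    agreeableness: float
    neuroticism: float

    def __post_init__(self) -> None:
        # Reject scores outside the assumed [0, 1] range.
        for name, value in asdict(self).items():
            if not 0.0 <= value <= 1.0:
                raise ValueError(f"{name} must be in [0, 1], got {value}")


def describe(score: float) -> str:
    """Map a numeric score to a coarse verbal level (thresholds are arbitrary)."""
    if score >= 0.67:
        return "high"
    if score <= 0.33:
        return "low"
    return "moderate"


def persona_prompt(name: str, profile: BigFiveProfile) -> str:
    """Render the numeric profile as a system-prompt fragment for an LLM."""
    lines = [f"You are {name}. Stay in character."]
    for trait, score in asdict(profile).items():
        lines.append(f"- {trait}: {describe(score)} ({score:.2f})")
    return "\n".join(lines)


profile = BigFiveProfile(0.8, 0.4, 0.2, 0.6, 0.7)
print(persona_prompt("Mara", profile))
```

The profile is plain data, so the same scores always yield the same prompt. That determinism is what makes the persona reproducible across sessions and comparable across agents.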

Effective personality consistency in agents requires the implementation of robust memory mechanisms to retain and recall past interactions and character-defining events. These mechanisms function as a persistent store of experiences, allowing the agent to reference prior behavior, relationships, and stated preferences when responding to new stimuli. Specifically, these systems must log details such as conversational history, significant events experienced by the agent, and established relationships with other entities. Without this historical context, agents risk exhibiting inconsistent behavior or contradicting previously established character traits, hindering believability and immersion. The scope of memory recall should be configurable, balancing computational cost with the need for long-term consistency.
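Below is a minimal sketch of such a store. It uses two assumed parameters as the configurable scope: a bounded log (`max_entries`) and a recall limit `k`. Retrieval is naive word overlap, with recency as a tie-breaker. This is a deliberately simple stand-in for semantic (for example, embedding-based) retrieval. `CharacterMemory` and its methods are hypothetical names.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class MemoryEntry:
    turn: int
    kind: str  # illustrative labels: "dialogue", "event", "relationship"
    text: str


class CharacterMemory:
    """Hypothetical persistent memory for one character."""

    def __init__(self, max_entries: int = 1000) -> None:
        # Oldest entries are dropped automatically once max_entries is reached.
        self._entries: deque[MemoryEntry] = deque(maxlen=max_entries)
        self._turn = 0

    def log(self, kind: str, text: str) -> None:
        self._turn += 1
        self._entries.append(MemoryEntry(self._turn, kind, text))

    def recall(self, query: str, k: int = 3) -> list[MemoryEntry]:
        """Return up to k entries sharing words with the query,
        ranked by overlap size and then by recency."""
        words = set(query.lower().split())
        hits = []
        for entry in self._entries:
            overlap = len(words & set(entry.text.lower().split()))
            if overlap > 0:
                hits.append((overlap, entry.turn, entry))
        hits.sort(key=lambda h: (h[0], h[1]), reverse=True)
        return [entry for _, _, entry in hits[:k]]


mem = CharacterMemory(max_entries=100)
mem.log("relationship", "Mara distrusts the harbor guard")
mem.log("dialogue", "User asked Mara about her brother")
mem.log("event", "Mara lost her ship in the storm")
for entry in mem.recall("why does mara hate the guard", k=2):
    print(entry.turn, entry.kind, entry.text)
```

Raising `k` or `max_entries` buys longer-range consistency at the cost of a larger prompt and more computation. This is the trade-off the paragraph describes.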

Dynamic Personality Evolution addresses the non-static nature of personality by allowing quantified traits to change over time. Algorithms adjust an agent's traits and behavior in response to its interactions and experiences, simulating personal growth and change. This evolution is evaluated with established psychological assessments, and the review reports a personality consistency rate of 80.7% for current implementations. The metric captures how well an agent's responses and behaviors stay aligned with its core personality profile despite ongoing modifications. Keeping that alignment high is what keeps a character believable and coherent over extended interactions.
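The review does not define its update rule or how the consistency rate is computed. The sketch below is therefore hypothetical on both counts. Experience-driven nudges move traits, but only within `max_drift` of a fixed baseline. Consistency is measured as the fraction of per-trait assessment scores that fall within `tolerance` of that baseline. It does not reproduce the 80.7% figure.

```python
def evolve(traits: dict[str, float], baseline: dict[str, float],
           nudges: dict[str, float], max_drift: float = 0.15) -> dict[str, float]:
    """Apply experience-driven nudges while keeping each trait within
    max_drift of its baseline (and inside [0, 1]).
    Assumes traits and baseline share the same keys."""
    updated = {}
    for name, value in traits.items():
        proposed = value + nudges.get(name, 0.0)
        low = max(0.0, baseline[name] - max_drift)
        high = min(1.0, baseline[name] + max_drift)
        updated[name] = min(max(proposed, low), high)
    return updated


def consistency_rate(assessed: list[dict[str, float]], baseline: dict[str, float],
                     tolerance: float = 0.2) -> float:
    """Fraction of per-trait assessment scores within tolerance of the baseline."""
    checks = [abs(a[name] - baseline[name]) <= tolerance
              for a in assessed for name in baseline]
    return sum(checks) / len(checks)


baseline = {"extraversion": 0.2, "neuroticism": 0.7}
# A large positive social experience: extraversion is capped at baseline + max_drift.
current = evolve(baseline, baseline, {"extraversion": 0.5})
print(current)

# Scores from two hypothetical questionnaire administrations.
assessments = [
    {"extraversion": 0.25, "neuroticism": 0.65},
    {"extraversion": 0.60, "neuroticism": 0.70},
]
print(consistency_rate(assessments, baseline))  # 0.75
```

The drift bound is one design choice for letting a character grow without becoming unrecognizable. Other schemes, such as decaying nudges back toward the baseline, would be equally plausible.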

The Engine of Expression: Language Models as Embodied Minds

Large Language Models (LLMs) function as the core component enabling natural language processing within autonomous agents. These models are typically based on the transformer architecture. They are pre-trained on massive datasets of text and code to predict the probability of a sequence of words, one token at a time. This capability supports both natural language understanding (interpreting user inputs) and natural language generation (formulating coherent, contextually relevant responses). Larger models, often with billions of parameters, generally capture more linguistic nuance and produce more human-like text. In effect, they serve as the ‘brain’ for an agent's linguistic interactions.
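The sequence-probability idea above is usually written as the standard autoregressive factorization, with each next-token distribution obtained by a softmax over the model's output scores. The notation is ours, not the review's: w_t is the token at position t, z_{t,v} is the model's logit for vocabulary item v at that position, and V is the vocabulary.

```latex
P(w_1, \dots, w_T) = \prod_{t=1}^{T} P(w_t \mid w_{<t}),
\qquad
P(w_t = v \mid w_{<t}) = \frac{\exp(z_{t,v})}{\sum_{v' \in V} \exp(z_{t,v'})}
```

Generation samples or selects one token from each conditional in turn. Understanding amounts to computing these conditionals over the user's input.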

Both open-source and closed-source LLMs can serve as this linguistic core, supplying the generation and understanding that let agents formulate responses and interpret user inputs. Open-source models offer transparency and customization. Closed-source models, often larger, may perform better on specific tasks. The choice depends on resource availability, the need for control over the model, and the required level of performance. Either way, the LLM translates internal agent states into coherent, contextually relevant language.

Reinforcement Learning from Human Feedback (RLHF) is a crucial technique for tailoring agent responses to specific behavioral profiles. Human raters compare or rank the model's outputs, and these preferences are used to train a reward model. The reward model then supplies the reward signal for fine-tuning the language model with reinforcement learning, commonly with PPO. By optimizing for human preferences rather than next-token likelihood alone, RLHF encourages responses that match desired qualities such as helpfulness and honesty, or a particular persona. The resulting models tend to be more coherent, relevant, and aligned with human expectations, which makes agent interactions more engaging and believable.
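The review does not spell out the training objective. The equations below follow the widely used InstructGPT-style formulation. First, a reward model r_φ is fit to pairwise human preferences, where y_w is the preferred and y_l the rejected response to prompt x, and σ is the logistic function. Then the policy π_θ is optimized against that reward. A KL penalty with weight β keeps the policy close to a reference model π_ref, usually the supervised fine-tuned model. For persona tailoring, the preference pairs might contrast in-character with out-of-character replies; that application is our assumption.

```latex
\mathcal{L}_{\mathrm{RM}}(\phi) = -\,\mathbb{E}_{(x,\, y_w,\, y_l)}
  \Big[\log \sigma\big(r_\phi(x, y_w) - r_\phi(x, y_l)\big)\Big]

\max_{\theta}\;
  \mathbb{E}_{x,\; y \sim \pi_\theta(\cdot \mid x)}\big[r_\phi(x, y)\big]
  - \beta\, \mathrm{KL}\big(\pi_\theta(\cdot \mid x) \,\|\, \pi_{\mathrm{ref}}(\cdot \mid x)\big)
```

A larger β keeps the tuned agent closer to the reference model's behavior. A smaller β lets it move further toward whatever the reward model favors.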

Emotion modeling and multimodal integration further improve the expressiveness of AI agents. Emotion modeling lets agents recognize emotional cues and respond to them appropriately. Multimodal integration lets agents process and generate information through multiple channels, such as text, audio, and visual input. The review credits this combined approach with a 93.47% accuracy rate on the LIFECHOICE dataset. LIFECHOICE is built from character decision points in novels and tests whether an agent makes the choices its character would make.
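The review does not describe a specific emotion model. The following is a hypothetical, text-only illustration, with no multimodal input. It tracks a single valence score for the conversation, updates it from a small cue lexicon with exponential decay, and maps it to a tone instruction that the response generator could be conditioned on. The lexicon, decay rate, and thresholds are all assumptions.

```python
from dataclasses import dataclass

# Hypothetical cue lexicon mapping words to a valence shift.
CUES = {"thanks": 0.3, "great": 0.4, "sorry": -0.2, "hate": -0.6, "lost": -0.4}


@dataclass
class EmotionState:
    """Tracks the perceived emotional tone of the conversation (text only)."""
    valence: float = 0.0  # -1.0 (negative) to +1.0 (positive)
    decay: float = 0.5    # share of the previous valence carried into the next turn

    def update(self, message: str) -> None:
        # Sum the shifts of any cue words, ignoring case and trailing punctuation.
        shift = sum(CUES.get(word.strip(".,!?").lower(), 0.0)
                    for word in message.split())
        # Blend with the decayed previous state and clamp to [-1, 1].
        self.valence = max(-1.0, min(1.0, self.decay * self.valence + shift))

    def tone(self) -> str:
        """Map valence to a style instruction for the response generator."""
        if self.valence > 0.25:
            return "warm"
        if self.valence < -0.25:
            return "gentle and consoling"
        return "neutral"


state = EmotionState()
state.update("I lost my dog and I hate it!")
print(state.tone())  # gentle and consoling
state.update("Thanks.")
print(state.tone())  # neutral
```

The decay term means one kind message does not instantly erase a distressed turn. This gives the agent some emotional continuity from one exchange to the next.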

Beyond Scripted Realities: The Promise of Collaborative Storytelling

The advent of multi-agent systems represents a significant leap forward in narrative generation, moving beyond single-character storytelling to dynamic, interactive experiences. These systems utilize multiple, independent agents – each possessing its own goals, knowledge, and behaviors – to collaboratively construct a narrative. Rather than a pre-scripted plot, the story emerges from the interactions between these agents, creating unpredictable and engaging scenarios. This approach allows for a level of complexity and realism previously unattainable, as agents can respond to each other and the unfolding events in a believable manner. The result is not simply a story told to an audience, but a story created through the collective actions of autonomous entities, opening new avenues for immersive entertainment and simulation.

Effective collaborative storytelling within multi-agent systems hinges on the depth of each agent’s understanding of both the narrative world and its inhabitants. Robust character knowledge isn’t simply a memorization of facts, but a dynamic representation of relationships, motivations, and histories; each agent must maintain a consistent internal model of who knows what, and how those connections influence behavior. This necessitates going beyond simple attribute lists to encompass nuanced understandings of emotional states, past interactions, and predicted responses, allowing agents to react believably within the unfolding story. Without this foundational awareness, interactions become stilted and illogical, undermining the illusion of genuine collaboration and hindering the creation of compelling, cohesive narratives.
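One way to make "who knows what" explicit, sketched here under our own assumptions, is a registry that maps each story fact to the set of characters who know it. Each agent's prompt context is built only from its own facts, so a character cannot refer to information it never learned. `WorldKnowledge` and its methods are hypothetical.

```python
from collections import defaultdict


class WorldKnowledge:
    """Hypothetical registry of story facts and the characters who know them."""

    def __init__(self) -> None:
        self._facts: dict[str, str] = {}
        self._known_by: defaultdict[str, set[str]] = defaultdict(set)

    def add_fact(self, fact_id: str, text: str, known_by: set[str]) -> None:
        self._facts[fact_id] = text
        self._known_by[fact_id] |= known_by

    def tell(self, fact_id: str, speaker: str, listener: str) -> None:
        """Record that speaker shared a fact with listener in the story."""
        if speaker not in self._known_by[fact_id]:
            raise ValueError(f"{speaker} cannot share a fact they do not know")
        self._known_by[fact_id].add(listener)

    def context_for(self, character: str) -> list[str]:
        """Facts this character may draw on; everything else stays out of its prompt."""
        return [text for fact_id, text in self._facts.items()
                if character in self._known_by[fact_id]]


world = WorldKnowledge()
world.add_fact("map", "The map is hidden in the lighthouse", known_by={"Mara"})
print(world.context_for("Iven"))  # [] because Iven has not been told yet
world.tell("map", speaker="Mara", listener="Iven")
print(world.context_for("Iven"))
```

Routing fact transfer through `tell` gives the story an auditable record of how knowledge spread between characters.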

For multi-agent systems to convincingly co-create narratives, each agent's behavior must remain consistent and believable throughout the interaction. Grounding agent actions in established personality models provides this behavioral framework. These models are not superficial traits. They dictate how an agent interprets events, reacts to others, and pursues goals, keeping its actions aligned with a defined character. Consistent behavioral patterns avoid the jarring inconsistencies that would break immersion for an observer. Systems built this way can generate coherent multi-character stories, which suggests that personality-driven consistency is central to believable collaborative storytelling.
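The loop below is a minimal sketch of persona-grounded multi-agent storytelling. Agents take turns, and each reply is generated from that agent's own persona and goal plus the shared transcript. The `generate` callable is a hypothetical stand-in for whatever LLM API is used; a stub replaces it here so the example runs offline. Round-robin turn-taking is a simplifying assumption, since the review does not prescribe a scheduling policy.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical LLM interface: (system_prompt, transcript_so_far) -> reply text.
Generate = Callable[[str, list[str]], str]


@dataclass
class Agent:
    name: str
    persona: str  # e.g. a rendered trait profile
    goal: str

    def system_prompt(self) -> str:
        # Every turn is conditioned on the same persona, which anchors consistency.
        return (f"You are {self.name}. Persona: {self.persona}. "
                f"Goal: {self.goal}. Stay in character.")


def run_scene(agents: list[Agent], generate: Generate, turns: int) -> list[str]:
    """Round-robin scene: each agent reads the shared transcript and replies
    in character, so the plot emerges from the agents' interaction."""
    transcript: list[str] = []
    for t in range(turns):
        agent = agents[t % len(agents)]
        reply = generate(agent.system_prompt(), transcript)
        transcript.append(f"{agent.name}: {reply}")
    return transcript


def stub_generate(system_prompt: str, transcript: list[str]) -> str:
    """Offline stand-in for a model call: the reply depends only on the persona."""
    if "cynical" in system_prompt:
        return "We should not trust them."
    return "Let's try anyway!"


cast = [
    Agent("Mara", "cynical sailor", "protect her crew"),
    Agent("Iven", "hopeful apprentice", "find the treasure"),
]
for line in run_scene(cast, stub_generate, turns=4):
    print(line)
```

With a real model, the shared transcript is what lets agents respond to one another. The fixed per-agent system prompt is what keeps each of them recognizably themselves.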

Collaborative storytelling systems also have uses beyond entertainment. Training simulations can gain realism from dynamic, unscripted interactions between virtual characters, letting trainees practice interpersonal skills in unpredictable scenarios. Therapeutic applications are perhaps even more promising. Patients could engage with AI-driven characters to practice social interactions or process emotional experiences in a safe, controlled environment. Evaluations lend some support to the underlying capability. The reported 93.47% accuracy on LIFECHOICE's complex, multi-character novel scenarios indicates that agents can often reproduce the choices characters make within a coherent narrative. It does not, however, directly measure training or therapeutic benefit.

The Pursuit of Authentic Simulation: Measuring What Truly Matters

The development of robust role-playing agents hinges on the ability to objectively measure their performance, necessitating carefully designed evaluation metrics. These metrics move beyond simple task completion to assess the consistency and believability of an agent’s behavior over extended interactions. Without such quantifiable measures, progress remains anecdotal and subjective; improvements cannot be reliably tracked or compared across different agent architectures. Effective metrics must capture not only whether an agent achieves a goal, but how it does so – assessing factors like emotional responsiveness, conversational coherence, and the ability to maintain a consistent persona. This pursuit of objective assessment is paramount, as it enables researchers to iterate on designs, identify shortcomings, and ultimately build agents capable of truly immersive and engaging role-playing experiences.
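To track progress across architectures, per-turn judgments can be aggregated over an entire interaction rather than scored on single replies. The sketch assumes each turn has already been rated, by humans or an automated judge, on the three dimensions the paragraph names. The dimension keys, the 1-5 scale, and the weights are hypothetical.

```python
from statistics import mean

# Dimensions named in the text; the weights are hypothetical.
WEIGHTS = {
    "persona_consistency": 0.4,
    "coherence": 0.3,
    "emotional_responsiveness": 0.3,
}


def score_dialogue(turn_scores: list[dict[str, float]]) -> dict[str, float]:
    """Average per-turn ratings for each dimension across a whole dialogue,
    then combine the averages into one weighted overall score."""
    if not turn_scores:
        raise ValueError("need at least one rated turn")
    result = {dim: mean(turn[dim] for turn in turn_scores) for dim in WEIGHTS}
    result["overall"] = sum(WEIGHTS[dim] * result[dim] for dim in WEIGHTS)
    return result


ratings = [  # e.g. 1-5 ratings from human or LLM judges
    {"persona_consistency": 5, "coherence": 4, "emotional_responsiveness": 3},
    {"persona_consistency": 3, "coherence": 4, "emotional_responsiveness": 5},
]
print(score_dialogue(ratings))
```

Reporting the per-dimension averages alongside the overall score shows how an agent achieves its result, not just whether it does. For example, a strong overall score can hide a weak persona-consistency average.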

Existing methods for gauging the quality of role-playing agents frequently struggle to assess the subtleties that define truly believable behavior. Traditional metrics often prioritize task completion or adherence to pre-defined scripts, overlooking the importance of nuanced responses, emotional consistency, and adaptive improvisation. This limitation stems from a reliance on easily quantifiable features, while the essence of believability resides in complex, often unquantifiable aspects of human interaction – things like appropriate pauses, subtle shifts in tone, and the ability to convincingly portray internal states. Consequently, agents may ‘pass’ conventional tests while still feeling robotic or unnatural to human interactors, highlighting the need for evaluation techniques that move beyond surface-level analysis and delve into the cognitive and emotional dimensions of believable agency.

The pursuit of genuinely believable artificial intelligence benefits significantly from insights gleaned from cognitive neuroscience. Researchers are increasingly looking to the human brain – specifically, how it processes social cues, predicts behavior, and experiences empathy – to build more robust evaluation metrics. Studies examining neural responses to social interactions reveal patterns related to trust, deception, and emotional contagion; these findings can inspire algorithms that assess an agent’s ability to convincingly simulate these complex human characteristics. By incorporating principles of predictive processing – the brain’s constant attempt to anticipate sensory input – evaluation methods can move beyond simple turn-taking coherence and instead focus on whether an agent’s actions consistently align with established expectations, fostering a greater sense of realism and immersion for the user.

The ultimate benchmark for evaluating role-playing agents lies not in objective scores, but in the subjective feeling of genuine interaction. Current evaluation methods struggle to quantify the nuances of believability – the sense of presence, emotional resonance, and consistent personality that defines a truly engaging character. Future research must therefore prioritize metrics that directly assess the experience of interacting with these agents. This involves exploring techniques beyond simple behavioral analysis, potentially incorporating physiological data – such as changes in heart rate or skin conductance – or utilizing advanced surveys designed to capture the user’s sense of immersion and emotional connection. Successfully capturing this subjective dimension will be pivotal in creating agents that are not merely responsive, but truly feel alive, unlocking the potential for profoundly immersive and meaningful experiences.

The pursuit of behavioral consistency in Role-Playing Language Agents, as detailed in the study, echoes a fundamental principle of elegant design: reduce extraneous complexity and focus on the core characteristics that define a character's actions. It also resonates with an observation by Tim Berners-Lee: “The Web is more a social creation than a technical one.” The study's emphasis on crafting believable agents is not merely about technical achievement. It is about fostering richer, more intuitive interaction, a social creation built on a foundation of consistent digital personas. The goal is not to replicate every nuance of human behavior, but to establish a core set of traits that govern responses, so that the agent feels authentic and predictable.

Where Does This Leave Us?

The proliferation of Role-Playing Language Agents (RPLAs) has, predictably, outstripped genuine understanding. Current work focuses on simulating personality, a task easily mistaken for achieving it. The field's obsession with large language models, while understandable, risks mistaking a symptom (fluent text generation) for the capability it is supposed to indicate. Behavioral consistency, the holy grail of believable agents, remains elusive. It is often addressed with superficial constraints rather than fundamental architectural changes. If an agent's consistency depends on patching its inconsistencies, it is not consistent at all; it merely appears so.

Future progress necessitates a ruthless simplification. The drive for ever-larger models must yield to a demand for models that know what they do not know. True character consistency will not arise from forcing outputs to align with pre-defined traits, but from building agents capable of internal self-representation – a model of their own beliefs, desires, and limitations. Evaluation metrics, currently fixated on surface-level coherence, should prioritize demonstrable reasoning and adaptive behavior.

The current trajectory suggests a proliferation of increasingly sophisticated parrots. The challenge, then, is not to make agents speak like people, but to imbue them with the capacity to think – or, failing that, to convincingly simulate the appearance of thought. If it cannot be explained simply, it is not understood. And a character whose actions are inexplicable is, ultimately, no character at all.

Original article: https://arxiv.org/pdf/2601.10122.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-01-16 22:38