Author: Denis Avetisyan

A new library demonstrates the potential, and the challenges, of combining traditional agent programming with modern large language models.

This paper details the astra-langchain4j integration, exploring the benefits of retrieval-augmented generation within agentic systems and highlighting limitations in LLM reasoning and prompt engineering.

While the promise of agentic AI hinges on robust reasoning, current Large Language Models (LLMs) often exhibit limitations in complex problem-solving. This is explored in ‘astra-langchain4j: Experiences Combining LLMs and Agent Programming’, which details the development and evaluation of a library integrating LLMs with the ASTRA agent programming language. The authors demonstrate successful LLM integration for agentic tasks, but also highlight challenges related to prompt engineering and the inherent difficulties in eliciting reliable reasoning from LLMs. As agentic systems become increasingly sophisticated, how can we best bridge the gap between the potential of LLMs and the demands of truly autonomous, intelligent agents?

The Inevitable Limits of Reactive Systems

Conventional artificial intelligence systems often falter when confronted with real-world complexity, exhibiting limited capacity to navigate unpredictable situations or adjust to evolving circumstances. These systems, typically designed for narrow, predefined tasks, struggle with environments characterized by incomplete information, unforeseen events, and the need for flexible decision-making. Their reliance on static datasets and rigid algorithms hinders their ability to generalize knowledge or exhibit true adaptability. This limitation stems from a core inability to reason – to infer, plan, and act strategically in response to novel challenges – effectively confining them to scenarios mirroring their training data. Consequently, traditional AI frequently requires extensive retraining or human intervention when faced with even minor deviations from expected conditions, highlighting a critical gap in their capacity for robust, autonomous operation.

Agentic AI represents a shift from reactive responses toward proactive problem-solving. Unlike traditional AI systems designed for specific tasks, agentic systems use autonomous agents that can set goals, develop plans, and execute actions to achieve those goals without constant human intervention. This capability rests on an agent's ability to perceive its environment, reason about potential outcomes, and adapt its strategies based on observed results. The implications are substantial: rather than simply responding to requests, such systems can identify opportunities, address challenges, and anticipate needs, acting more like collaborators in complex, dynamic situations. This move toward proactive intelligence could open up applications ranging from automated scientific discovery to personalized assistance.

Agentic AI systems are typically built by combining Large Language Models (LLMs) with carefully designed agent architectures. The architecture supplies the structure an LLM lacks on its own, so that the system can move beyond text generation to goal-directed behavior. Rather than passively responding to prompts, such systems can formulate plans, use external tools such as search engines or APIs, and iteratively refine their approach to reach specified objectives. The astra-langchain4j library is one example: a prototype that lets developers call LLMs from agents written in ASTRA, combining LLM output with the agent's own reasoning and actions. The aim is systems that adapt to unforeseen circumstances in complex, real-world environments, although, as the paper reports, the reasoning of current LLMs remains a limiting factor.

ASTRA: Architecting for the Inevitable Complexity

ASTRA is an agent programming language, and the astra-langchain4j library extends it to address the challenges of integrating Large Language Models (LLMs) into working agent architectures. Existing agent frameworks often lack the flexibility to exploit the reasoning and generative capabilities of LLMs, while direct prompting of an LLM lacks the structure and control needed for reliable, complex tasks. With the library, developers define agent behavior by combining LLM calls with ASTRA's programmatic logic. The agent program keeps control over when the LLM is invoked and how its outputs are used, which is how the approach tries to bridge the gap between what LLMs can do and what robust agent systems require.

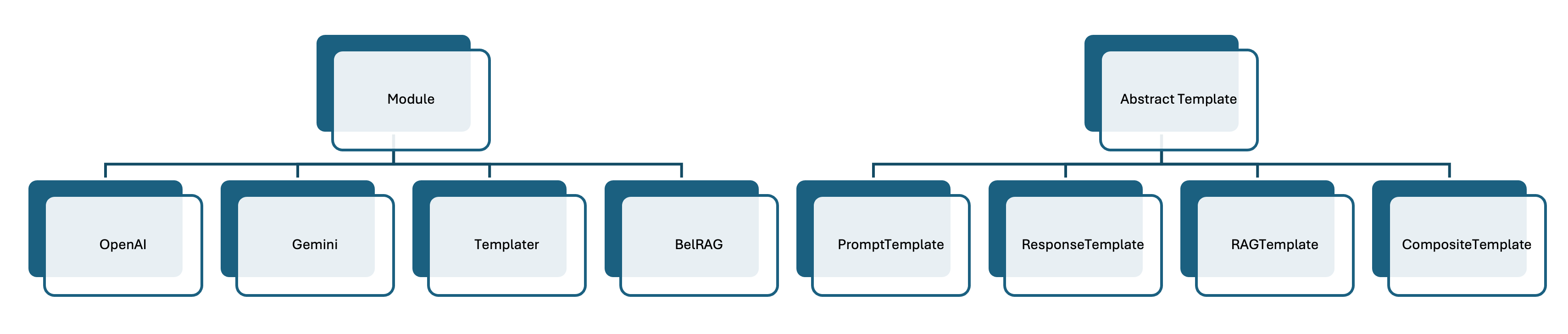

ASTRA utilizes ‘Modules’ to facilitate the extension of agent capabilities through the incorporation of custom functionalities. These Modules function as self-contained units of code, allowing developers to define and integrate specific tools, knowledge sources, or processing logic into the agent’s operational framework without modifying the core agent architecture. This modular approach promotes code reusability, simplifies maintenance, and enables dynamic adaptation of agent behavior by loading or unloading Modules as required, thereby enhancing the agent’s flexibility and scalability to address a wider range of tasks and environments.
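ASTRA's actual module API is documented with the language and is not reproduced in this article, so the following is only a hypothetical, self-contained sketch of the idea in plain Java: a module is an ordinary class whose marked methods are discovered by reflection and registered under a name, so the agent core can load or unload it without being modified. The `@AgentAction` annotation and the `MathModule` and `ModuleRegistry` classes are invented for illustration.

```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;
import java.lang.reflect.InvocationTargetException;
import java.lang.reflect.Method;
import java.util.HashMap;
import java.util.Map;

// Hypothetical marker for methods a module exposes to the agent (not ASTRA's real annotation).
@Retention(RetentionPolicy.RUNTIME)
@Target(ElementType.METHOD)
@interface AgentAction {}

// Example module: a self-contained unit of custom functionality.
class MathModule {
    @AgentAction
    public int add(int x, int y) {
        return x + y;
    }

    // Not annotated, so the registry does not expose it to the agent.
    public int helper() {
        return 0;
    }
}

// Hypothetical registry: the agent core looks actions up by "alias.method" name.
class ModuleRegistry {
    private final Map<String, Object> instances = new HashMap<>();
    private final Map<String, Method> actions = new HashMap<>();

    // Registers every @AgentAction method of the module under "alias.methodName".
    void load(String alias, Object module) {
        instances.put(alias, module);
        for (Method m : module.getClass().getMethods()) {
            if (m.isAnnotationPresent(AgentAction.class)) {
                actions.put(alias + "." + m.getName(), m);
            }
        }
    }

    // Removes the module and all of its actions; the agent core itself is untouched.
    void unload(String alias) {
        instances.remove(alias);
        actions.keySet().removeIf(key -> key.startsWith(alias + "."));
    }

    boolean has(String action) {
        return actions.containsKey(action);
    }

    Object invoke(String action, Object... args) {
        Method m = actions.get(action);
        if (m == null) {
            throw new IllegalArgumentException("Unknown action: " + action);
        }
        Object target = instances.get(action.substring(0, action.indexOf('.')));
        try {
            return m.invoke(target, args);
        } catch (IllegalAccessException | InvocationTargetException e) {
            throw new IllegalStateException("Action failed: " + action, e);
        }
    }
}
```

After `registry.load("math", new MathModule())`, calling `registry.invoke("math.add", 2, 3)` returns 5, while `helper()` stays hidden because it is not annotated; `registry.unload("math")` removes the action again.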

ASTRA supports a broad range of Large Language Models (LLMs) through its integration with LangChain4J, a Java framework for building LLM-powered applications. Because LangChain4J offers a common interface over many model providers, ASTRA agents can be connected to different LLMs without substantial code changes. The integration is demonstrated through the worked examples described later in this article, from a Tic-Tac-Toe player to a multi-agent travel planner, which show the approach working with LLM backends from LangChain4J's supported ecosystem.
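The value of a provider abstraction like LangChain4J's is that agent code depends on one interface rather than on a particular vendor. The sketch below shows that design in miniature. `ChatBackend` is a hypothetical stand-in for LangChain4J's own model interface (whose exact name and methods differ between LangChain4J versions), and the two stub backends exist only so the example runs offline without API keys.

```java
import java.util.Map;
import java.util.function.Supplier;

// Hypothetical stand-in for the chat-model abstraction that LangChain4J provides.
interface ChatBackend {
    String chat(String prompt);
}

// Offline stub backends so the sketch runs without network access or API keys.
class EchoBackend implements ChatBackend {
    public String chat(String prompt) {
        return "echo: " + prompt;
    }
}

class ShoutBackend implements ChatBackend {
    public String chat(String prompt) {
        return prompt.toUpperCase();
    }
}

// Agent-side code depends only on the interface, so swapping providers is a configuration change.
class LlmAgent {
    private final ChatBackend backend;

    LlmAgent(ChatBackend backend) {
        this.backend = backend;
    }

    String ask(String question) {
        return backend.chat(question);
    }
}

// Maps a configured provider name to a backend; real providers would be registered the same way.
class BackendFactory {
    private static final Map<String, Supplier<ChatBackend>> PROVIDERS =
            Map.of("echo", EchoBackend::new, "shout", ShoutBackend::new);

    static ChatBackend create(String name) {
        Supplier<ChatBackend> supplier = PROVIDERS.get(name);
        if (supplier == null) {
            throw new IllegalArgumentException("Unknown backend: " + name);
        }
        return supplier.get();
    }
}
```

Switching `new LlmAgent(BackendFactory.create("echo"))` to `BackendFactory.create("shout")` changes the model behind the agent without touching `LlmAgent` itself.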

Belief Revision: Accounting for the Inherent Unreliability of Data

Prompt engineering, the practice of crafting the inputs given to Large Language Models (LLMs), is a critical determinant of output quality. While LLMs demonstrate impressive capabilities, their performance is highly sensitive to prompt phrasing, structure, and the contextual information included. Good results therefore call for a systematic approach to prompt design, with iterative testing and refinement to reduce ambiguity and make the desired response more likely. In practice this means balancing prompt length and specificity, and adding relevant examples or constraints that guide the model's reasoning and reduce undesirable outputs such as hallucinations or irrelevant information.
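A structured template is one common way to make prompt design systematic: each part of the prompt (role, task, constraints, examples, output format) is kept separate, so parts can be changed and re-tested one at a time. The `PromptTemplate` class below is a hypothetical sketch, not part of astra-langchain4j.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical prompt builder: each part of the prompt is explicit and can be varied independently.
class PromptTemplate {
    private String role = "";
    private String task = "";
    private final List<String> constraints = new ArrayList<>();
    private final List<String> examples = new ArrayList<>();
    private String outputFormat = "";

    PromptTemplate role(String r) { role = r; return this; }
    PromptTemplate task(String t) { task = t; return this; }
    PromptTemplate constraint(String c) { constraints.add(c); return this; }
    PromptTemplate example(String e) { examples.add(e); return this; }
    PromptTemplate outputFormat(String f) { outputFormat = f; return this; }

    // Renders the parts in a fixed order; empty optional parts are omitted.
    String render() {
        StringBuilder sb = new StringBuilder();
        if (!role.isEmpty()) {
            sb.append("You are ").append(role).append(".\n");
        }
        sb.append("Task: ").append(task).append('\n');
        for (String c : constraints) {
            sb.append("- Constraint: ").append(c).append('\n');
        }
        for (int i = 0; i < examples.size(); i++) {
            sb.append("Example ").append(i + 1).append(": ").append(examples.get(i)).append('\n');
        }
        if (!outputFormat.isEmpty()) {
            sb.append("Respond only with: ").append(outputFormat).append('\n');
        }
        return sb.toString();
    }
}
```

Keeping the output format explicit also makes replies easier to check mechanically, which matters once an agent acts on them.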

BeliefRAG enhances Retrieval-Augmented Generation (RAG) by incorporating an agent’s internal ‘Belief Revision’ process. This process allows the agent to actively evaluate the relevance and reliability of retrieved knowledge before integrating it into response generation. Specifically, the agent maintains a belief state representing its current understanding, and incoming retrieved documents are assessed for consistency with this state. Documents contradicting existing beliefs trigger a revision process, potentially updating the agent’s knowledge base or flagging the information as questionable. This dynamic belief management improves the accuracy and coherence of generated responses by ensuring the agent grounds its reasoning in consistently validated information, rather than passively accepting all retrieved content.
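The paper's belief revision runs over ASTRA's own belief base, which this article does not show. As a rough, hypothetical sketch of the filtering step described above, the Java below stores beliefs as key-value facts with an assumed numeric reliability score. A retrieved fact that is new or consistent is admitted to the prompt context; a contradicting fact either revises the belief, if its source is more reliable, or is flagged as questionable and excluded. The `Fact` record, the `BeliefBase` class, and the reliability-comparison rule are all assumptions made for illustration (records require Java 16 or later).

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// A retrieved statement: a key (e.g. "door:state"), a value, and an assumed reliability in [0, 1].
record Fact(String key, String value, double reliability) {}

class BeliefBase {
    private final Map<String, Fact> beliefs = new HashMap<>();
    private final List<Fact> questionable = new ArrayList<>();

    void believe(Fact f) {
        beliefs.put(f.key(), f);
    }

    String valueOf(String key) {
        Fact f = beliefs.get(key);
        return f == null ? null : f.value();
    }

    List<Fact> questionable() {
        return questionable;
    }

    // Filters retrieved facts through the belief base; only admitted facts reach the prompt.
    List<Fact> admit(List<Fact> retrieved) {
        List<Fact> context = new ArrayList<>();
        for (Fact f : retrieved) {
            Fact current = beliefs.get(f.key());
            if (current == null || current.value().equals(f.value())) {
                beliefs.putIfAbsent(f.key(), f); // new fact is adopted; consistent fact keeps the existing belief
                context.add(f);
            } else if (f.reliability() > current.reliability()) {
                beliefs.put(f.key(), f);         // contradiction backed by stronger evidence: revise
                context.add(f);
            } else {
                questionable.add(f);             // contradiction with weaker evidence: flag and exclude
            }
        }
        return context;
    }
}
```

The returned list is what would be placed into the LLM prompt, so contradicted, low-reliability content never reaches the model.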

The net effect is that BeliefRAG grounds agent outputs in retrieved knowledge that has passed through this belief revision step, targeting factual accuracy and contextual coherence. Responses are therefore informed by external sources, checked against the agent's current beliefs, rather than by the LLM's pre-trained knowledge alone. A prototype implementation is described in the paper, which reports that the approach improves the reliability and relevance of generated text.

The Illusion of Control: Demonstrating ASTRA in Action

The Tic-Tac-Toe example shows how ASTRA can keep an LLM's strategic decisions under program control. The example uses ‘JsonNode’, a JSON tree representation, as a standard format for exchanging the game state, available moves, and chosen actions between the framework and the LLM. Because the board, player turns, and potential outcomes are encoded in this structure, ASTRA can parse the LLM's replies and check them against the game rules rather than trusting them blindly, so legal play is enforced by the agent program instead of depending on the model. This goes beyond simple prompting: the agent actively manages how a language model's reasoning is used within a defined environment, a pattern that can carry over to more complex applications.
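A minimal sketch of this kind of rule enforcement follows, under stated assumptions: the model is asked to reply with a JSON object such as `{"move": 4}`, the board is a nine-character string, and illegal or unparseable replies are fed back to the model for a bounded number of retries. To stay dependency-free it extracts the move with a regular expression; a real implementation would parse the reply into a JSON tree (the article's ‘JsonNode’, presumably Jackson's type) instead. `TicTacToeReferee` and its methods are hypothetical, and the LLM is modelled as a plain `Function<String, String>`.

```java
import java.util.Optional;
import java.util.function.Function;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical referee: the agent program, not the LLM, decides whether a move is legal.
class TicTacToeReferee {
    // Matches "move": n with up to nine digits, so Integer.parseInt cannot overflow.
    private static final Pattern MOVE = Pattern.compile("\"move\"\\s*:\\s*(\\d{1,9})\\b");

    private final char[] board; // cells 0..8 in row-major order; ' ' marks an empty cell

    TicTacToeReferee(String cells) {
        if (cells.length() != 9) {
            throw new IllegalArgumentException("Board must have exactly 9 cells");
        }
        board = cells.toCharArray();
    }

    // Stand-in for parsing the reply into a JSON tree and reading its "move" field.
    static Optional<Integer> parseMove(String reply) {
        Matcher m = MOVE.matcher(reply);
        return m.find() ? Optional.of(Integer.parseInt(m.group(1))) : Optional.empty();
    }

    boolean isLegal(int cell) {
        return cell >= 0 && cell < 9 && board[cell] == ' ';
    }

    // Asks the model up to maxAttempts times, feeding each rejected reply back into the prompt.
    Optional<Integer> askForMove(Function<String, String> llm, int maxAttempts) {
        String prompt = "Board (row-major, space = empty): [" + new String(board) + "]\n"
                + "Reply with {\"move\": n} where n is the index 0-8 of an empty cell.";
        for (int attempt = 0; attempt < maxAttempts; attempt++) {
            String reply = llm.apply(prompt);
            Optional<Integer> move = parseMove(reply);
            if (move.isPresent() && isLegal(move.get())) {
                return move;
            }
            prompt += "\nYour reply \"" + reply + "\" was not a legal move. Try again.";
        }
        return Optional.empty(); // caller decides the fallback, e.g. a random legal move
    }
}
```

Returning `Optional.empty()` after the retry budget is spent leaves the fallback policy to the agent, which matches the idea that control stays with the program rather than the model.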

The travel-planning example shows task delegation in a multi-agent system that simulates collaborative problem-solving. Agents use the Foundation for Intelligent Physical Agents (FIPA) Request Interaction Protocol to delegate sub-tasks, such as flight and hotel booking, to specialized peers. The protocol gives the exchange of requests and responses a fixed structure, so each agent knows its role at every step of the planning process. The overall objective is broken into manageable sub-tasks, each handled by an agent with the relevant expertise, and the results are combined into a single travel itinerary. The example therefore demonstrates coordinated action across a distributed system, not just the capabilities of individual agents.
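The FIPA Request Interaction Protocol fixes which messages may follow which: the initiator sends a request; the participant answers with refuse or agree (the agree may be skipped when the action completes quickly); and once agreed, the participant ends the exchange with failure, inform-done, or inform-result. The hypothetical `RequestConversation` class below checks that ordering from the initiator's side. It models only the message sequence, not message transport, timeouts, or the FIPA support actually used by the travel-planning agents.

```java
import java.util.ArrayList;
import java.util.List;

// Message types (performatives) used by the FIPA Request Interaction Protocol.
enum Performative { REQUEST, AGREE, REFUSE, FAILURE, INFORM_DONE, INFORM_RESULT }

// One request conversation, checked from the initiator's side (hypothetical helper).
class RequestConversation {
    enum State { START, REQUESTED, AGREED, FINISHED }

    private State state = State.START;
    private final List<String> transcript = new ArrayList<>();

    private static boolean isOutcome(Performative p) {
        return p == Performative.FAILURE
                || p == Performative.INFORM_DONE
                || p == Performative.INFORM_RESULT;
    }

    // Returns the next state, or throws if the message breaks the protocol.
    private static State next(State s, Performative p) {
        switch (s) {
            case START:
                if (p == Performative.REQUEST) return State.REQUESTED;
                break;
            case REQUESTED:
                if (p == Performative.REFUSE) return State.FINISHED;
                if (p == Performative.AGREE) return State.AGREED;
                if (isOutcome(p)) return State.FINISHED; // agree may be skipped for quick actions
                break;
            case AGREED:
                if (isOutcome(p)) return State.FINISHED;
                break;
            default:
                break;
        }
        throw new IllegalStateException(p + " is not allowed in state " + s);
    }

    // Applies a message; out-of-protocol messages throw and are not recorded.
    void onMessage(Performative p, String content) {
        state = next(state, p);
        transcript.add(p + "(" + content + ")");
    }

    State state() {
        return state;
    }

    List<String> transcript() {
        return transcript;
    }
}
```

A flight-booking delegation would run request, agree, inform-result and end in `FINISHED`; an inform-done arriving after a refuse is rejected with an `IllegalStateException`.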

Taken together, the examples range from the simple logic of Tic-Tac-Toe to collaborative travel-itinerary planning, showing that ASTRA can handle both self-contained tasks and multi-agent ones. They are not merely theoretical: they are implemented and available in the prototype library, where developers can explore ASTRA's functionality directly and use the examples as a starting point for their own agents.

The Inevitable Drift: Towards Collaborative Systems and Unforeseen Consequences

ASTRA's architecture gains considerably from integrating Large Language Models (LLMs) such as OpenAI's models and Google's Gemini, which broaden the range of achievable agent behaviors. The LLMs contribute natural language understanding and generation, letting an agent interpret complex instructions and formulate nuanced responses rather than relying only on pre-programmed actions. Beyond language handling, the integration supports dynamic task planning, in which the agent reasons about goals, adapts to changing circumstances, and can request clarification when needed. As a result, ASTRA agents can take on tasks that demand flexibility and common-sense reasoning, within the limits of the underlying LLM's reasoning, which points toward more versatile and adaptable agent systems, including robotic ones.

The incorporation of advanced language models promises a shift towards genuinely collaborative interactions between humans and robots. Rather than requiring specialized commands or programming knowledge, future robotic systems can potentially understand and respond to natural language instructions, mirroring the ease of communication between people. This intuitive interface fosters a more seamless partnership, allowing individuals to delegate tasks with nuanced requests and receive responses that are contextually aware and readily understandable. Such advancements move beyond simple task execution, enabling robots to anticipate needs, offer suggestions, and adapt to changing circumstances – ultimately creating a more fluid and productive human-robot team dynamic.

Ongoing development centers on translating the capabilities of this agent framework into practical solutions for intricate real-world scenarios. Researchers are concentrating on enhancing the system’s capacity for robust reasoning and meticulous planning, moving beyond controlled environments to address the unpredictable nature of everyday tasks. This refinement builds directly upon the established prototype library and its successful demonstrations, aiming to create agents capable of not just responding to commands, but proactively anticipating needs and formulating effective strategies. The ultimate goal is to deploy these systems in complex domains – from assisting in dynamic manufacturing processes to providing nuanced support in healthcare settings – where reliable and adaptable robotic collaboration is paramount.

The pursuit of agentic AI, as detailed in this work, reveals a humbling truth about complexity. Integrating Large Language Models into a structured agent programming language like ASTRA isn't about building intelligence so much as cultivating an environment in which useful behavior can emerge. A well-known remark usually attributed to Brian Kernighan puts it this way: “Debugging is twice as hard as writing the code in the first place. Therefore, if you write the code as cleverly as possible, you are, by definition, not going to be able to debug it.” This resonates with the challenges presented by LLMs: crafting prompts, the initial conditions, proves far more intricate than anticipated, and reasoning limitations quickly surface. The system doesn't fail because of flawed construction, but because the very act of specifying intent introduces unforeseen consequences, echoing the inherent unpredictability of complex systems.

What Lies Ahead?

The coupling of agent-oriented programming with large language models, as demonstrated, is less a construction and more a precarious balancing act. Architecture is, after all, how one postpones chaos, and this work merely extends the delay. The limitations revealed – the fragility of LLM reasoning, the endless refinement of prompts – are not bugs to be fixed, but fundamental properties of the system. There are no best practices, only survivors, and the emergent behavior of these combined systems will be defined by what breaks first.

Future efforts will not center on achieving ‘perfect’ integration, a chimera in any complex system. Instead, the focus will inevitably shift toward resilience. How does one build agents that gracefully degrade when the oracle falters? How does one design systems that anticipate, and even benefit from, the inherent unpredictability of LLMs? The question isn’t how to make these models reason better, but how to build agents that can function despite imperfect reasoning.

Order is just cache between two outages. The true measure of success in this field will not be the elegance of the architecture, but the robustness of the failure modes. The next phase is not about building intelligence, but about cultivating antifragility – systems that grow stronger from stress, rather than collapsing under it.

Original article: https://arxiv.org/pdf/2601.21879.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/


2026-01-31 00:48