Author: Denis Avetisyan

New research shows how AI agents can raise the predicted effectiveness of messages asking strangers for job referrals.

AI agents that combine Retrieval-Augmented Generation with a reward model can strengthen job referral requests, particularly those initially judged less promising.

Securing employment often depends on personal networks, yet asking strangers for referrals poses a distinct communication challenge. This paper, ‘Building AI Agents to Improve Job Referral Requests to Strangers’, introduces AI agents that refine these requests using large language models and retrieval-augmented generation. The results show that the agents can raise the predicted success rate of weaker requests by up to 14%, without reducing the effectiveness of requests that are already strong. Could this approach offer a scalable, low-cost way to identify promising strategies for improving job seekers’ outcomes before broader deployment?

The Evolving Landscape of Opportunity

The pursuit of employment often depends on the strength of one’s network, which makes effective job referral requests a critical yet surprisingly difficult part of a job search. Many candidates struggle to give a compelling reason why a contact should advocate for them, and fall back on generic templates or stiff, overly formal language that strips out the personal touch. The difficulty stems partly from a common reluctance to ask directly for help, and partly from underestimating how much effort a persuasive request takes to write. As a result, networking opportunities are often wasted: requests fail to resonate with potential advocates or are simply lost in the constant flow of professional messages. A well-crafted request, by contrast, can substantially raise the odds of a positive response and open doors that would otherwise stay closed.

Conventional job referral requests frequently underperform because they rely on generic templates and show no genuine connection. Many applicants treat the request as a purely transactional ask, neglecting rapport and any shared experience with the potential referrer. Highly personalized requests, which explain why the sender chose this particular person and acknowledge their professional background, are widely reported to draw better responses. A bare request for a referral, with no sign of engagement or of understanding the referrer’s position, is easy to ignore: it offers nothing in return and reads as a burden rather than an invitation to mutual benefit. Applicants who send such messages fail to make effective use of their networks, which slows their job search.

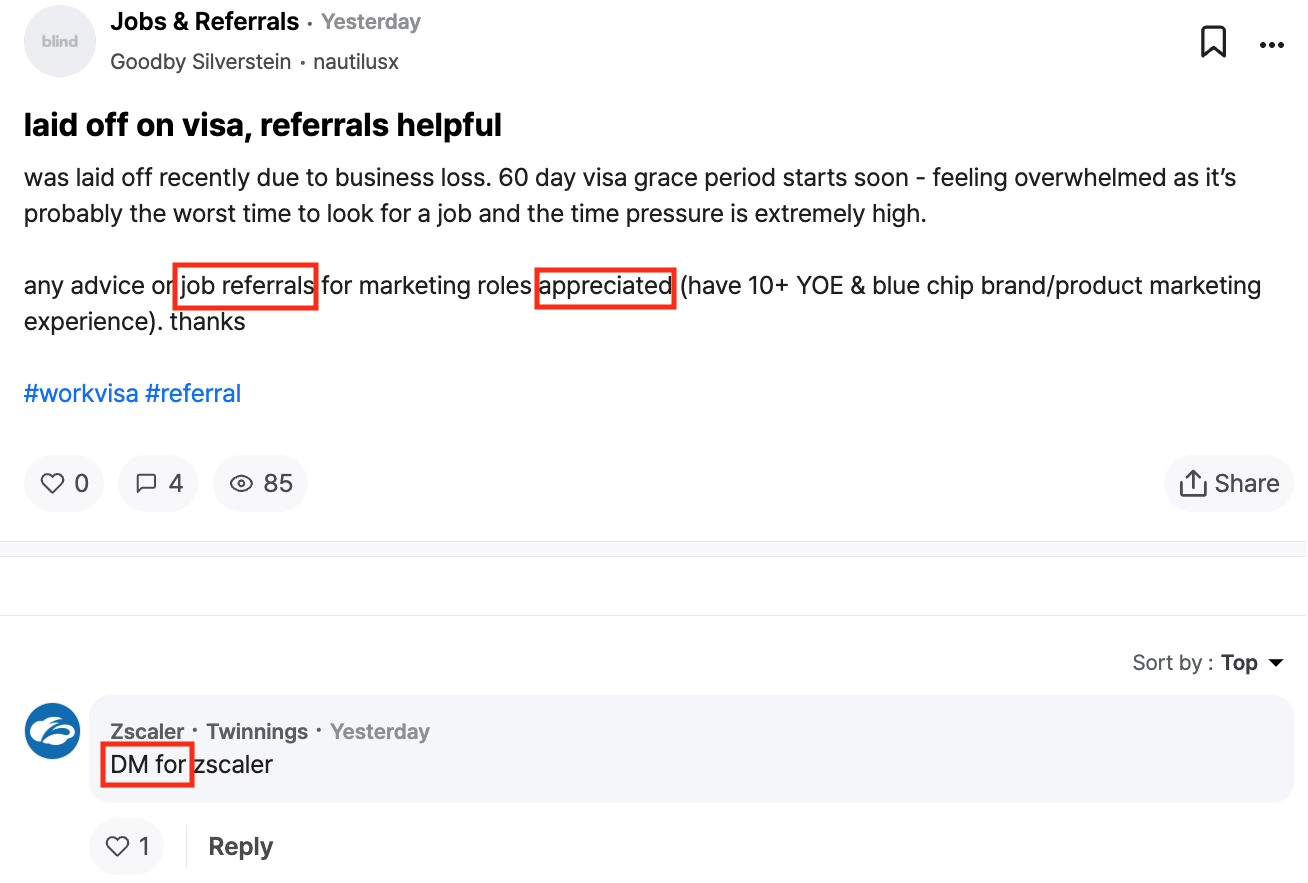

The proliferation of online platforms, notably Blind and similar anonymous professional networks, has reshaped job referrals in ways that cut both ways for job seekers. These spaces offer unprecedented access to people inside target companies and industries, bypassing traditional gatekeepers and enabling direct contact, but they also demand a more careful approach to networking. Anonymity can encourage candid conversation while making trust and credibility harder to establish. Networking successfully now means understanding each platform’s culture, adapting one’s tone to it, and guarding against being misread or ignored, all while staying professional in an often informal setting. Using these tools well requires a shift from broad outreach to targeted engagement, favoring the quality of connections over their number.

Augmenting Connection: AI Agents and the Referral Process

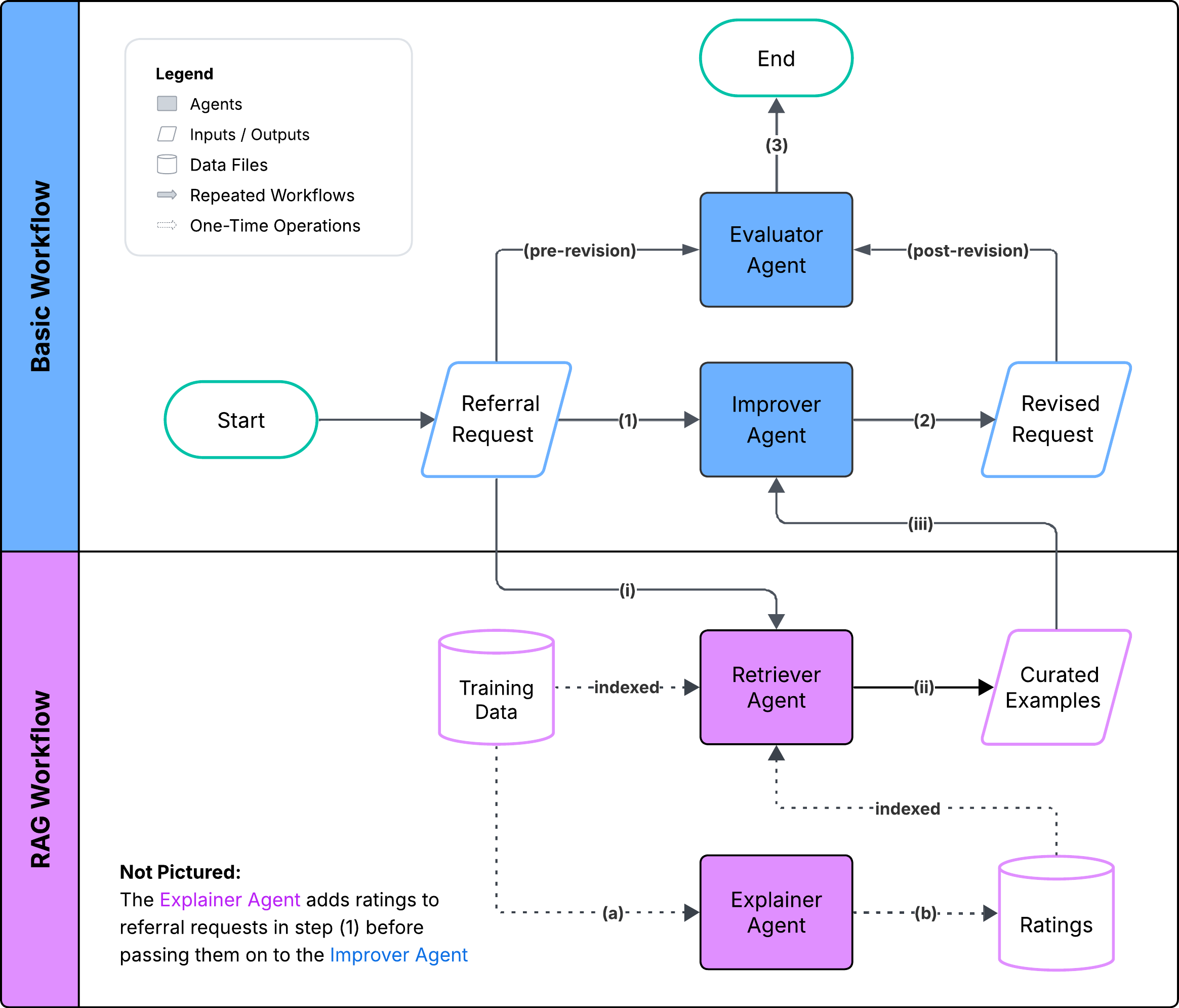

AI agents built on Large Language Models (LLMs) are being developed to help job seekers write referral requests. The agents act as assistive tools: they take a draft request as input and use an LLM to propose an improved version. Their core function is automated refinement of the request’s wording, aiming for more polished and persuasive messages. The intent is to reduce the effort job seekers must invest while making their networking more effective; in principle, such agents could be built into existing platforms as a writing aid during composition and revision.

LLM revision is the agents’ core function. The LLM examines a draft for attributes that affect its effectiveness, such as grammatical clarity, concision, and overall persuasiveness, then restructures phrasing, removes redundancy, and sharpens the central ask. The revisions are meant to be substantive rather than cosmetic, addressing weaknesses that could lower the chance of a positive reply from a potential referrer. The output is a refined request intended to be easier to understand and more favorably received.
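The revision step can be pictured as wrapping the draft in a fixed instruction and handing it to a model. The sketch below is illustrative only: the instruction text, `build_revision_prompt`, and `revise` are hypothetical names, and `llm` stands for any text-in, text-out model call rather than a specific vendor API or the paper’s actual prompt.

```python
from typing import Callable

# Hypothetical instruction text; the paper's actual prompt is not reproduced here.
REVISION_INSTRUCTIONS = (
    "Rewrite the following job referral request to a stranger. "
    "Improve grammatical clarity, remove redundancy, keep it concise, "
    "and strengthen the core ask. Return only the revised request."
)


def build_revision_prompt(draft: str) -> str:
    """Combine the fixed instructions with the job seeker's draft."""
    return f"{REVISION_INSTRUCTIONS}\n\nDraft:\n{draft.strip()}"


def revise(draft: str, llm: Callable[[str], str]) -> str:
    """Ask an LLM (any text-in, text-out callable) for a revised request.

    Falls back to the original draft if the model returns only whitespace.
    """
    revised = llm(build_revision_prompt(draft)).strip()
    return revised if revised else draft
```

Passing the model in as a plain callable keeps the sketch testable with a stub and independent of any particular LLM client.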

Whether a revision actually helps is judged by a Reward Model that scores request quality. The model estimates the probability that a request will receive a referral, which makes it possible to check whether a proposed revision improves on the original draft. Evaluated this way, the revisions help most for weaker requests, those initially predicted to have a low success rate; with the retrieval-augmented workflow described below, the predicted success of these requests rises by 14% in relative terms. In this setup the agent proposes revisions and the Reward Model evaluates them, providing a consistent, quantitative signal of whether each edit makes a positive response more likely.
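One way to combine a reviser with a reward model is to accept a revision only when it scores higher than the current text. This is a minimal sketch under assumptions: `score` stands for any function returning a predicted referral probability, `revise` for any revision function, and the multi-round loop with early stopping is an illustrative choice, not necessarily the paper’s procedure.

```python
from typing import Callable, Tuple

Scorer = Callable[[str], float]   # text -> predicted P(referral)
Reviser = Callable[[str], str]    # text -> revised text


def improve_with_reward_model(
    draft: str, revise: Reviser, score: Scorer, rounds: int = 3
) -> Tuple[str, float]:
    """Propose revisions and keep one only if the reward model scores it higher.

    Returns the best request found and its predicted success probability.
    """
    best_text, best_score = draft, score(draft)
    for _ in range(rounds):
        candidate = revise(best_text)
        candidate_score = score(candidate)
        if candidate_score > best_score:
            best_text, best_score = candidate, candidate_score
        else:
            break  # no improvement: stop rather than accept a worse revision
    return best_text, best_score
```

Rejecting lower-scoring candidates is one simple way to keep revisions from making an already strong request worse.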

Decoding Quality: The Mechanics of the Reward Model

The Reward Model takes a hybrid approach to encoding requests, combining a TF-IDF Model with a Featurized Model. The TF-IDF Model weights each word by how often it appears in a request, discounted by how common it is across the whole corpus. The Featurized Model instead uses engineered features derived from the request, such as its length, complexity metrics, and the presence of specific keywords. Together they aim to capture a fuller picture of each request, so the model can separate requests likely to receive a referral from those that would benefit from revision.
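The hybrid encoding can be sketched in plain Python: TF-IDF weights for the words, plus a handful of engineered features, merged into one feature dictionary. The IDF formula below uses the same smoothing as scikit-learn’s `TfidfVectorizer` default; the specific features and the `KEYWORDS` list are hypothetical stand-ins, since the paper’s exact feature set is not given here.

```python
import math
import re
from collections import Counter
from typing import Dict, List

TOKEN_RE = re.compile(r"[a-z']+")


def tokenize(text: str) -> List[str]:
    return TOKEN_RE.findall(text.lower())


def fit_idf(corpus: List[str]) -> Dict[str, float]:
    """Smoothed inverse document frequency: log((1 + N) / (1 + df)) + 1."""
    n_docs = len(corpus)
    df = Counter()
    for doc in corpus:
        df.update(set(tokenize(doc)))
    return {term: math.log((1 + n_docs) / (1 + count)) + 1 for term, count in df.items()}


def tfidf(text: str, idf: Dict[str, float]) -> Dict[str, float]:
    """Term frequency times IDF, L2-normalised; terms unseen in the corpus are dropped."""
    counts = Counter(t for t in tokenize(text) if t in idf)
    weights = {t: c * idf[t] for t, c in counts.items()}
    norm = math.sqrt(sum(w * w for w in weights.values())) or 1.0
    return {t: w / norm for t, w in weights.items()}


# Hypothetical engineered features; the paper's actual feature set may differ.
KEYWORDS = ("resume", "referral", "experience")


def engineered_features(text: str) -> Dict[str, float]:
    tokens = tokenize(text)
    return {
        "n_words": float(len(tokens)),
        "avg_word_len": sum(map(len, tokens)) / len(tokens) if tokens else 0.0,
        "has_question": float("?" in text),
        **{f"kw_{k}": float(k in tokens) for k in KEYWORDS},
    }


def encode(text: str, idf: Dict[str, float]) -> Dict[str, float]:
    """Hybrid representation: sparse TF-IDF terms plus dense engineered features."""
    features = {f"tfidf:{t}": w for t, w in tfidf(text, idf).items()}
    features.update({f"feat:{k}": v for k, v in engineered_features(text).items()})
    return features
```

A classifier (for example, logistic regression) trained on these dictionaries would then output the predicted success probability.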

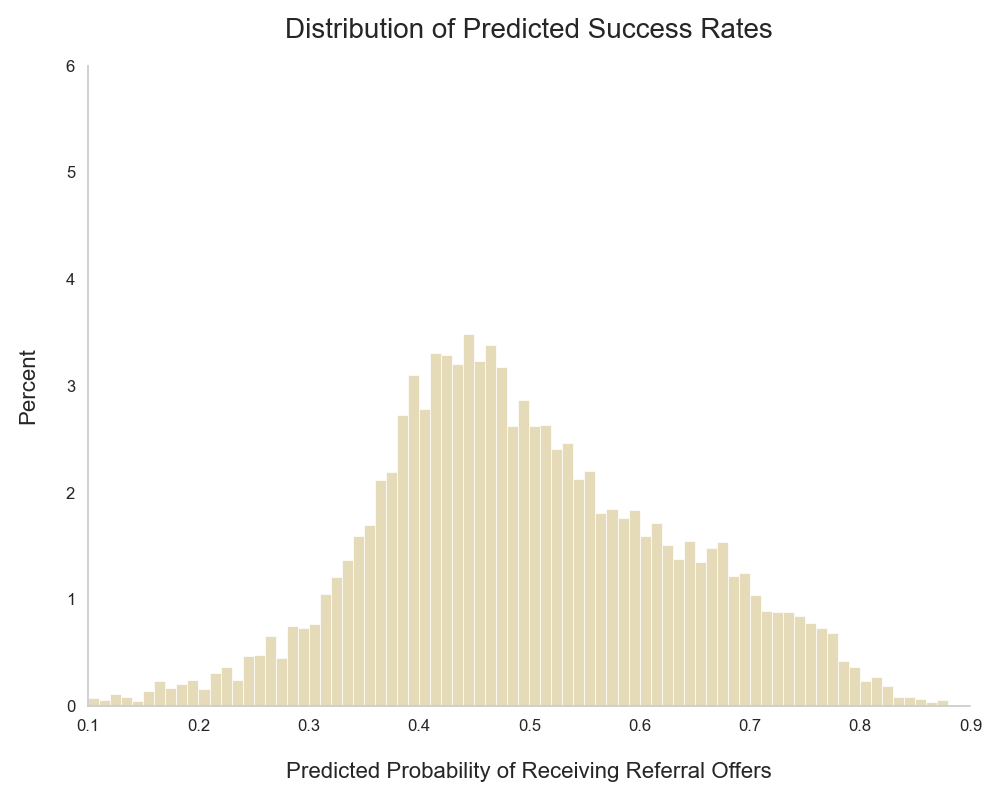

The Reward Model also uses a Sentence Transformer to convert each request into a vector embedding that captures its semantic meaning, so that requests can be compared numerically. Evaluated by the Area Under the Receiver Operating Characteristic curve (AUROC), the model scored 0.681. That is well above the 0.5 expected from random guessing but far from perfect, indicating a moderate ability to distinguish requests likely to succeed from those likely to fail.
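AUROC has a direct interpretation: it is the probability that a randomly chosen successful request receives a higher score than a randomly chosen unsuccessful one, so 0.681 means the model ranks such a pair correctly about 68% of the time. The sketch below computes AUROC from that pairwise definition (the Mann-Whitney formulation, with ties counted as half); it is quadratic in the number of examples and meant only to illustrate the metric, not to reproduce the paper’s evaluation code.

```python
from typing import Sequence


def auroc(labels: Sequence[int], scores: Sequence[float]) -> float:
    """AUROC as the probability that a random positive outranks a random negative.

    Ties count as half a win (the Mann-Whitney U formulation).
    """
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative example")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))
```

For example, with labels `[1, 1, 0, 0]` and scores `[0.8, 0.4, 0.6, 0.2]`, three of the four positive/negative pairs are ranked correctly, giving 0.75.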

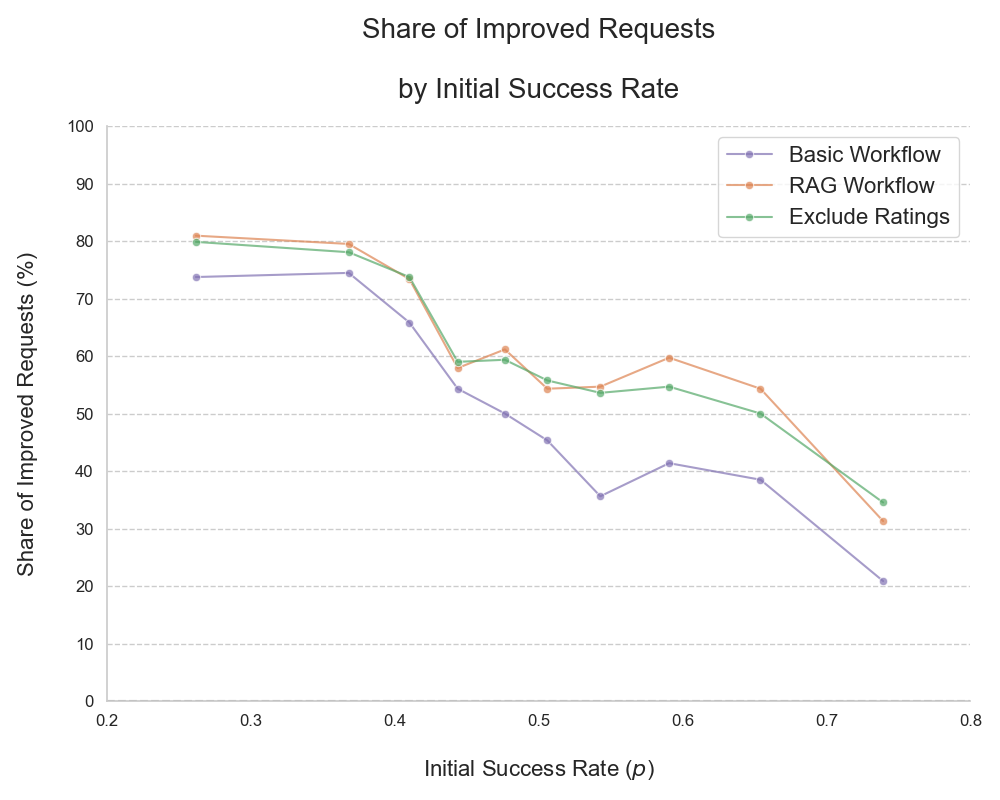

The Predicted Success Rate produced by the Reward Model serves as the yardstick for the LLM revision process. Requests that start with a low predicted probability of success improve measurably under the basic workflow: after revision, their predicted success rate rises by 3.4 percentage points. The Reward Model thus provides a quantitative metric for judging whether, and by how much, revision improves a request.
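A figure such as “+3.4 percentage points for weaker requests” comes from comparing predicted probabilities before and after revision within a subgroup. The sketch assumes a hypothetical split at the median original score to define “weaker” and “stronger”; the paper’s actual grouping may differ.

```python
from statistics import median
from typing import Dict, Sequence


def pp_change_by_group(before: Sequence[float], after: Sequence[float]) -> Dict[str, float]:
    """Mean change in predicted success, in percentage points, split at the median.

    'weaker' = requests whose original predicted probability is below the median
    (a hypothetical split chosen for illustration).
    """
    if len(before) != len(after) or not before:
        raise ValueError("before/after must be non-empty and the same length")
    cut = median(before)
    groups = {"weaker": [], "stronger": []}
    for b, a in zip(before, after):
        groups["weaker" if b < cut else "stronger"].append((a - b) * 100)
    return {g: sum(d) / len(d) for g, d in groups.items() if d}
```

Reporting the change per group, rather than one overall average, keeps gains on weak requests from being masked by losses on strong ones.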

Beyond Revision: Amplifying Potential with RAG

The basic revision process was substantially improved by a Retrieval-Augmented Generation (RAG) workflow. Instead of relying on prompting and the LLM’s internal knowledge alone, RAG retrieves well-written requests from a curated dataset during revision, giving the model concrete examples of the desired output. Grounding revisions in these examples makes the suggested edits more relevant and raises the quality of the final text.
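The retrieval step can be sketched as: keep only stored requests the reward model scores as strong, then rank them by embedding similarity to the draft. Assumptions: embeddings are plain float lists (in the paper’s setting they might come from a sentence-embedding model), and `min_success`, `Example`, and `retrieve_strong_examples` are hypothetical names and values.

```python
import math
from typing import List, NamedTuple, Sequence


class Example(NamedTuple):
    text: str
    predicted_success: float      # reward-model score for this stored request
    embedding: Sequence[float]    # e.g. a sentence-embedding vector


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    dot = sum(a * b for a, b in zip(u, v))
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    return dot / (nu * nv) if nu and nv else 0.0


def retrieve_strong_examples(
    query_embedding: Sequence[float],
    pool: Sequence[Example],
    k: int = 3,
    min_success: float = 0.6,   # hypothetical cut-off for "well-written"
) -> List[Example]:
    """Return the k most similar stored requests among those scored as strong."""
    strong = [ex for ex in pool if ex.predicted_success >= min_success]
    strong.sort(key=lambda ex: cosine(query_embedding, ex.embedding), reverse=True)
    return strong[:k]
```

Filtering by score before ranking by similarity means the model only ever sees examples that are both relevant and judged effective.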

The RAG workflow relies on two specialized agents. A Retriever Agent finds examples of well-crafted requests, which serve as a benchmark for quality and clarity. An Explainer Agent then analyzes these examples and turns them into specific editorial guidance. That guidance is applied to weaker requests, giving targeted suggestions for improvement together with a rationale for changing their phrasing and structure. Together, the two agents go beyond simple correction by grounding each revision in what makes effective requests work.
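The Retriever, Explainer, and improver steps can be chained as two model calls: one that distills the retrieved examples into guidance, and one that revises the draft using that guidance. This is a sketch, not the paper’s prompts: `explain_examples` and `rag_revise` are hypothetical, `llm` is any text-in, text-out callable, and the “make minimal changes” instruction is an assumed way to avoid degrading drafts that are already strong.

```python
from typing import Callable, List

LLM = Callable[[str], str]  # any text-in, text-out model call


def explain_examples(examples: List[str], llm: LLM) -> str:
    """Explainer step: turn strong examples into concrete editing guidance."""
    numbered = "\n".join(f"{i}. {ex}" for i, ex in enumerate(examples, 1))
    prompt = (
        "These job referral requests were rated as likely to succeed:\n"
        f"{numbered}\n"
        "List the specific qualities that make them effective."
    )
    return llm(prompt)


def rag_revise(draft: str, examples: List[str], llm: LLM) -> str:
    """Improver step conditioned on the Explainer's guidance."""
    guidance = explain_examples(examples, llm)
    prompt = (
        f"Editing guidance:\n{guidance}\n\n"
        "Revise this request using the guidance; if it already follows it, "
        f"make minimal changes.\n\nDraft:\n{draft}"
    )
    return llm(prompt)
```

In practice the `examples` list would come from a retrieval step over scored requests, and the revised output would be re-scored by the reward model.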

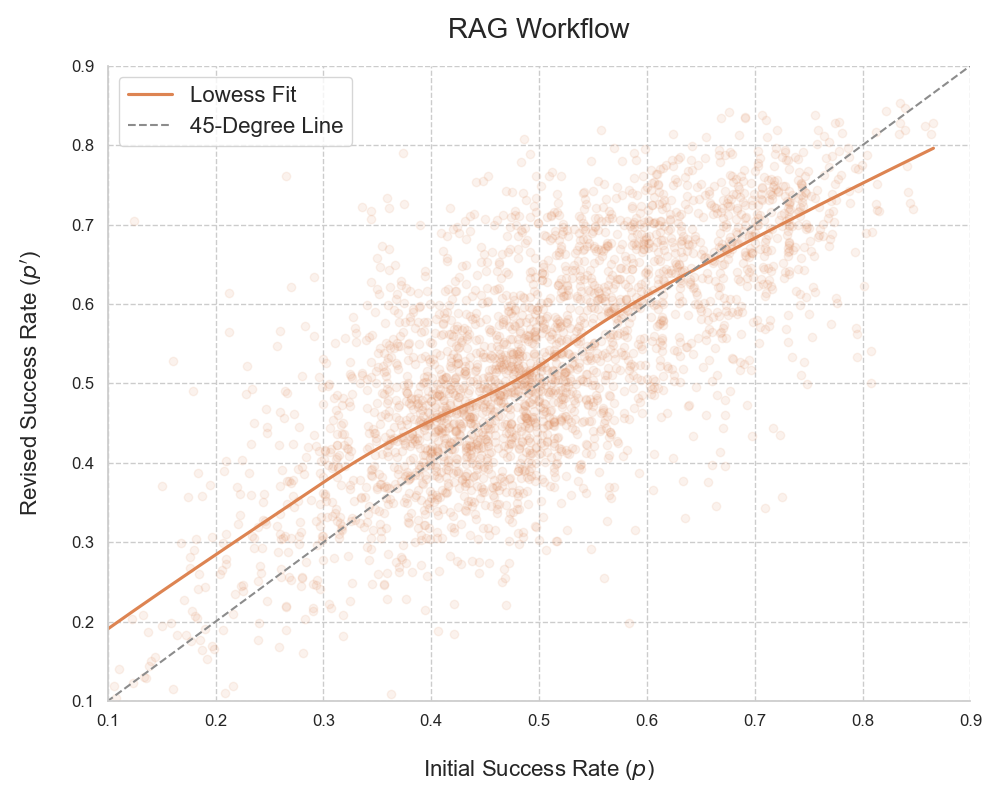

A Lowess (locally weighted scatterplot smoothing) curve was used to visualize how predicted success rates change after revision. It shows a clear positive trend: the RAG workflow substantially improved initially weaker requests. For these requests, the predicted probability of receiving a referral rose by 5.5 percentage points after revision, a 14% relative improvement over their baseline. The RAG workflow therefore does more than polish requests that are already strong; it markedly raises the prospects of those that started out least likely to succeed, which makes it a practical way to improve referral requests overall.
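Lowess smooths a scatterplot by fitting a small weighted linear regression around each point, with nearby points weighted more heavily. The sketch below is a simplified, single-pass version (tricube weights, no robustness iterations) written for illustration; the paper’s plots were presumably made with a standard implementation, and the default `frac=2/3` mirrors a common library default rather than anything stated in the source.

```python
import math
from typing import List, Sequence


def lowess(x: Sequence[float], y: Sequence[float], frac: float = 2 / 3) -> List[float]:
    """Locally weighted linear regression (one pass, no robustness iterations).

    For each x[i], fit a weighted least-squares line to its ceil(frac * n) nearest
    neighbours using tricube weights, and return the fitted value at x[i].
    """
    n = len(x)
    r = min(n, max(2, math.ceil(frac * n)))
    fitted = []
    for xi in x:
        dists = sorted(abs(xj - xi) for xj in x)
        h = dists[r - 1] or 1e-12  # bandwidth: distance to the r-th nearest point
        w = [max(0.0, 1 - (abs(xj - xi) / h) ** 3) ** 3 for xj in x]
        sw = sum(w)
        mx = sum(wj * xj for wj, xj in zip(w, x)) / sw
        my = sum(wj * yj for wj, yj in zip(w, y)) / sw
        sxx = sum(wj * (xj - mx) ** 2 for wj, xj in zip(w, x))
        sxy = sum(wj * (xj - mx) * (yj - my) for wj, xj, yj in zip(w, x, y))
        slope = sxy / sxx if sxx > 0 else 0.0
        fitted.append(my + slope * (xi - mx))
    return fitted
```

Plotting original predicted success on the x-axis against the post-revision change on the y-axis, then overlaying `lowess(x, y)`, shows where along the quality range revisions help. As a side note on the reported numbers, a 5.5-point gain that equals a 14% relative gain implies a mean baseline of roughly 39% for the weaker group (0.055 / 0.14 ≈ 0.39), assuming the relative figure is computed against that mean.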

The effort to improve communication with artificial intelligence, as in this work on job referral requests, echoes a general truth about complex systems. The paper’s success in strengthening weaker requests with Retrieval-Augmented Generation (RAG), without harming stronger ones, reflects a pragmatic approach to building systems that last. As the old aphorism has it, “It is better to be vaguely right than precisely wrong.” The system does not aim for success in every instance but for a generally better outcome, accepting that perfect stability is an illusion. The extra time each revision takes is a cost willingly paid for a better chance of a positive connection, mirroring the trade-offs present in all enduring systems.

What Lies Ahead?

The demonstrated ability of large language models to refine even ostensibly competent requests for professional help points to a subtle truth: efficacy is rarely absolute, only ever approached asymptotically. This work is not about writing perfect requests, which the messiness of human interaction makes impossible, but about nudging the system closer to optimal function. The gains, particularly for weak initial drafts, suggest that such agents can amplify individual agency rather than replace it. Every delay is the price of understanding, and the iterative refinement at the heart of this approach acknowledges that.

However, the architecture behind these agents, retrieval-augmented generation, has its own vulnerabilities. It depends critically on the quality of the retrieved examples, and the long-term stability of those knowledge sources is an open question. Moreover, optimizing for predicted ‘success’ risks conflating correlation with causation: a request that received a referral was not necessarily a good request, and its success may partly reflect fortunate circumstances.

Future work must address these limitations. Investigating dynamic curation of knowledge sources, exploring reward models that value qualities beyond raw success rates, and, crucially, weighing the ethical implications of subtly shaping human communication are not merely next steps but preconditions for a sustainable trajectory. Architecture without history is fragile and ephemeral; a deeper understanding of the underlying mechanisms, and of their potential for unintended consequences, is paramount.

Original article: https://arxiv.org/pdf/2601.10726.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-01-19 17:48