Author: Denis Avetisyan

New research reveals that simply explaining an AI’s reasoning isn’t enough – users need access to supporting evidence to truly evaluate and trust fact-checking claims.

Providing accessible evidence alongside natural language explanations from large language models is crucial for fostering informed reliance and improving accuracy in fact-checking evaluations.

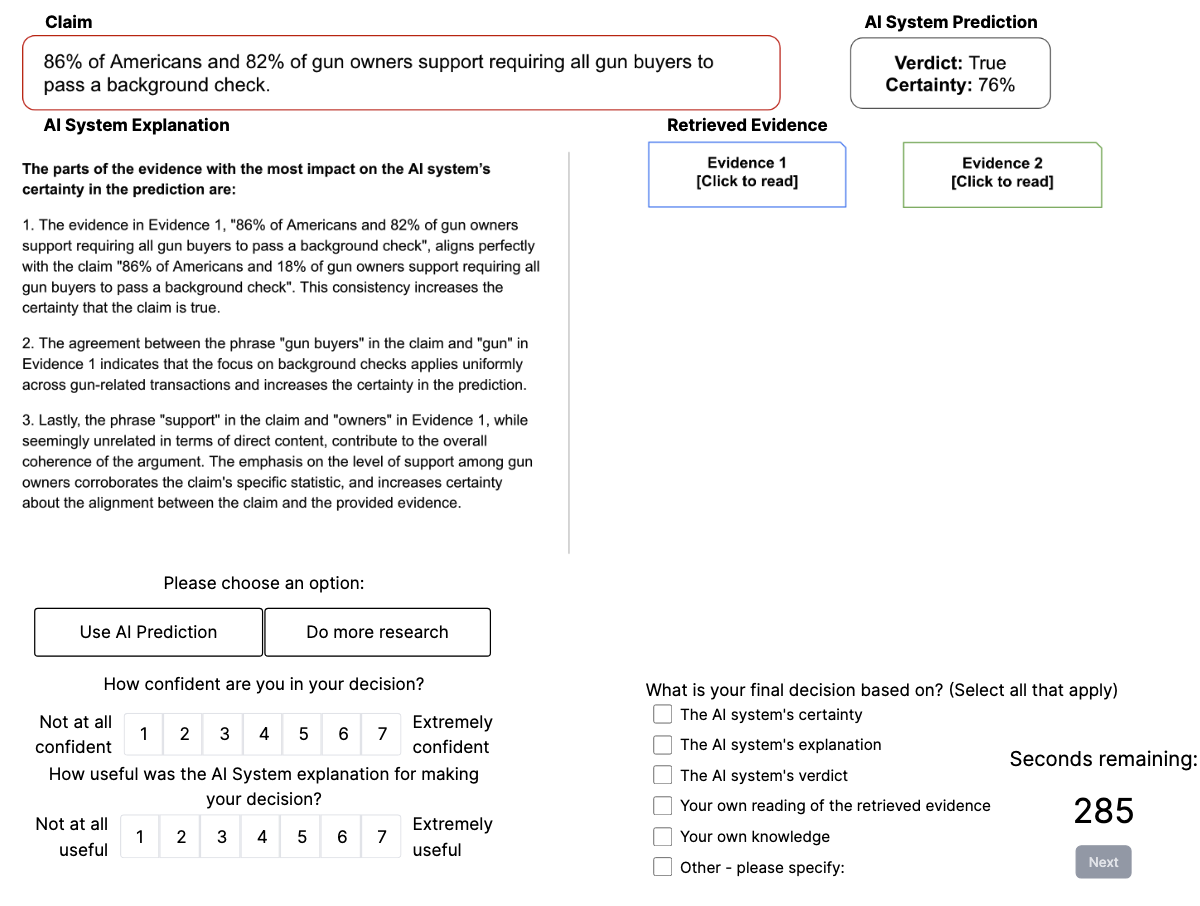

Despite growing interest in explainable AI, the fundamental role of supporting evidence in building trust and facilitating informed decision-making remains surprisingly underexplored. This study, ‘Show me the evidence: Evaluating the role of evidence and natural language explanations in AI-supported fact-checking’, systematically investigates how accessible evidence impacts user reliance on, and accuracy of, AI-supported claims. Our findings demonstrate that individuals consistently prioritize evidence when evaluating information presented by an AI, even when provided with natural language explanations, suggesting evidence is key to fostering appropriate trust. How can we best design evidence presentation to maximize its utility and mitigate potential biases in human-AI collaboration?

The Illusion of Knowing: AI and the Erosion of Critical Thought

The accelerating integration of large language models (LLMs) into fact-checking processes represents a significant shift in information verification. These AI systems offer an unprecedented capacity for rapidly analyzing vast datasets and identifying potential inaccuracies, a task previously limited by human time and resources. This speed and scale are particularly valuable in combating the spread of misinformation online, where content virality often outpaces traditional fact-checking methods. While human fact-checkers traditionally assess source credibility and contextual nuances, LLMs can quickly scan for inconsistencies, verify claims against established databases, and flag potentially false statements. The technology is being deployed by news organizations, social media platforms, and independent verification services, demonstrating its growing role in maintaining informational integrity, though the implications of this increased reliance require careful consideration.

The increasing integration of artificial intelligence into daily workflows presents a significant challenge: the potential for uncritical acceptance of AI-generated recommendations. As algorithms take on tasks previously requiring human judgment, a reliance on their outputs – even when flawed – can subtly erode independent thought and verification. This phenomenon, termed AI overreliance, isn’t necessarily about believing AI is infallible, but rather a cognitive shortcut where recommendations are accepted at face value due to convenience or perceived authority. Studies suggest this can manifest across various domains, from medical diagnoses to financial decisions, leading to errors propagating unchecked and potentially undermining the very foundations of informed decision-making. The danger lies not in the technology itself, but in a diminishing capacity – or willingness – to apply critical evaluation to its outputs, creating a feedback loop where algorithmic suggestions replace human scrutiny.

The increasing integration of artificial intelligence into information validation and decision-making presents a subtle but significant risk to societal trust. As reliance on AI-driven recommendations grows, the human capacity for critical evaluation may diminish, fostering a dependence that accepts outputs without questioning their accuracy or underlying biases. This erosion isn’t necessarily due to malicious intent within the AI itself, but rather a consequence of reduced human oversight and a gradual acceptance of algorithmic authority. Consequently, a decline in independent verification could lead to the widespread dissemination of misinformation and flawed judgments, ultimately undermining confidence in established institutions and the very foundations of informed discourse. Mitigating this requires proactive strategies focused on fostering AI literacy, promoting healthy skepticism, and maintaining human accountability alongside automated systems.

Beyond Black Boxes: The Promise of Evaluative AI

Evaluative AI represents a departure from traditional artificial intelligence systems that primarily deliver conclusions; instead, it prioritizes transparency by providing the evidence and reasoning that support its outputs. This approach fundamentally alters the human-AI interaction, shifting the focus from accepting a result at face value to critically assessing the underlying basis for that result. By explicitly presenting the data and logic used in its decision-making process, Evaluative AI enables users to independently verify information, assess its relevance, and build confidence in the system’s conclusions. This paradigm shift is crucial for applications requiring high levels of trust and accountability, such as legal analysis, medical diagnosis, and financial modeling.

Evidence Presentation is a core component of evaluative AI systems, facilitating independent verification of conclusions by providing access to source materials. A recent study quantified user engagement with this feature, finding that participants opened provided evidence documents in 67% of instances where they were available. This indicates a strong user preference for directly reviewing the underlying basis for AI-generated outputs and supports the implementation of systems that prioritize transparency through readily accessible source documentation.

Maintaining source credibility is essential for trustworthy AI outputs. Systems must explicitly convey the reliability of the information used in their reasoning processes, potentially through indicators like source ranking, publication date, author expertise, or documented bias. This transparency allows users to assess the validity of supporting evidence and understand the potential limitations of the AI’s conclusions. Without clear indication of source quality, users lack the necessary context to critically evaluate the information presented and may misinterpret or inappropriately rely on unreliable data.
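As a rough illustration of the indicators listed above, the sketch below attaches them to each piece of evidence and renders them on one line. The `EvidenceSource` schema, its field names, and the `credibility_summary` helper are assumptions made for this example; the study does not prescribe a particular format.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass(frozen=True)
class EvidenceSource:
    """One supporting document shown next to an AI verdict (hypothetical schema)."""
    title: str
    url: str
    published: Optional[date] = None        # publication date, if known
    author_expertise: Optional[str] = None  # e.g. "peer-reviewed journal"
    known_bias: Optional[str] = None        # documented bias, if any
    rank: Optional[int] = None              # 1 = most reliable under the chosen ranking


def credibility_summary(src: EvidenceSource) -> str:
    """Render the indicators on one line, marking missing ones explicitly."""
    parts = [
        f"rank: {src.rank if src.rank is not None else 'unknown'}",
        f"published: {src.published.isoformat() if src.published else 'unknown'}",
        f"author: {src.author_expertise or 'unknown'}",
        f"bias: {src.known_bias or 'none documented'}",
    ]
    joined = "; ".join(parts)
    return f"{src.title} ({joined})"


if __name__ == "__main__":
    report = EvidenceSource(
        title="Example report",
        url="https://example.org/report",  # placeholder URL
        published=date(2024, 5, 1),
        rank=1,
    )
    print(credibility_summary(report))
```

Showing missing indicators as "unknown" rather than hiding them is a deliberate choice in this sketch: an absent publication date or author is itself information a reader may want when weighing a source.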

The Devil in the Details: Communicating Uncertainty with AI

Effective AI communication necessitates conveying not only the evidence supporting a claim, but also the system’s confidence in that claim, a practice termed uncertainty communication. Presenting data without indicating the degree of certainty can lead to miscalibration of user trust and potentially inappropriate reliance on the AI’s output. This approach recognizes that AI systems, unlike humans, do not inherently express subjective confidence, and therefore require explicit mechanisms to communicate their internal assessment of reliability. Implementing uncertainty communication allows users to appropriately weigh the AI’s advice, particularly in high-stakes decision-making contexts, and fosters a more realistic understanding of the system’s capabilities and limitations.

AI systems can communicate their confidence levels by combining numerical uncertainty and natural language explanations. Numerical uncertainty involves assigning a numerical score to represent the AI’s confidence in a given claim; internal testing demonstrated these scores ranged from 35% to 78% depending on the specific assertion being evaluated. Complementing these scores, natural language explanations provide human-readable justifications for the AI’s assessment, detailing the reasoning behind the confidence score and offering transparency into the decision-making process. This dual approach aims to provide users with both a quantifiable measure of confidence and a qualitative understanding of the AI’s rationale.
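To make the pairing of a score with a justification concrete, here is a minimal sketch of how the two might travel together in one record. The `Assessment` class, its fields, and the `render` layout are hypothetical; the example claim and explanation text are placeholders, not data from the study.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Assessment:
    """An AI verdict carrying both a numeric confidence and a written rationale."""
    claim: str
    verdict: str       # e.g. "true" or "false"
    confidence: float  # probability in [0, 1]
    explanation: str   # natural language justification

    def __post_init__(self) -> None:
        # Reject scores outside the probability range instead of silently clamping.
        if not 0.0 <= self.confidence <= 1.0:
            raise ValueError("confidence must be between 0 and 1")


def render(a: Assessment) -> str:
    """Format the verdict, the confidence as a whole percentage, and the rationale."""
    pct = round(a.confidence * 100)
    return (
        f'Claim: "{a.claim}"\n'
        f"Verdict: {a.verdict} ({pct}% confident)\n"
        f"Why: {a.explanation}"
    )


if __name__ == "__main__":
    example = Assessment(
        claim="Placeholder claim",
        verdict="false",
        confidence=0.35,
        explanation="Placeholder rationale that cites the retrieved evidence.",
    )
    print(render(example))
```

Keeping the score and the explanation in one object makes it harder for an interface to show one without the other, which is the point of the dual approach described above.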

Cognitive forcing, a technique designed to promote critical assessment of AI outputs, involves requiring users to formulate their own predictions or judgments prior to being presented with the AI’s response. Our research indicated that implementing this technique effectively encourages user engagement with the information. However, analysis of study data revealed no statistically significant difference in user reliance on AI advice or in the extent to which provided information was utilized, regardless of whether the AI’s explanation focused on its final verdict or explicitly detailed its uncertainty estimates.
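The ordering constraint behind cognitive forcing can be sketched as a small gate that refuses to reveal the AI's verdict until the user has committed to one of their own. The `CognitiveForcingSession` class and its method names are assumptions for illustration and do not describe the interface used in the study.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class CognitiveForcingSession:
    """Holds one claim and enforces 'predict first, then see the AI verdict'."""
    claim: str
    ai_verdict: str
    user_prediction: Optional[str] = None

    def record_prediction(self, prediction: str) -> None:
        """Store the user's own judgment; blank input does not count."""
        cleaned = prediction.strip()
        if not cleaned:
            raise ValueError("prediction must not be empty")
        self.user_prediction = cleaned

    def reveal_ai_verdict(self) -> str:
        """Return the AI verdict only after a prediction has been recorded."""
        if self.user_prediction is None:
            raise RuntimeError("record a prediction before viewing the AI verdict")
        return self.ai_verdict

    def agrees_with_ai(self) -> bool:
        """Compare the user's prior judgment with the AI verdict."""
        return self.reveal_ai_verdict() == self.user_prediction


if __name__ == "__main__":
    session = CognitiveForcingSession(claim="Placeholder claim", ai_verdict="false")
    session.record_prediction("true")
    print(session.reveal_ai_verdict(), session.agrees_with_ai())
```

Recording the prediction before the reveal also leaves a trace of whether the user changed their mind afterwards, which is one way reliance on AI advice could be measured.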

The Real World Test: Trustworthy AI in Contentious Domains

When artificial intelligence addresses deeply sensitive societal issues – such as the pervasive presence of microplastics in the environment, anxieties surrounding economic stability, or the contentious debate over abortion rights – the foundational principles of evaluative AI and transparent communication become paramount. AI systems operating in these domains must not only deliver information but also clearly articulate the basis for their conclusions, acknowledging the inherent uncertainties and limitations of the data and algorithms employed. This necessitates a move beyond “black box” predictions toward systems that offer explainable reasoning, allowing individuals to assess the validity of the information presented and form their own independent judgements. Without this commitment to transparency and rigorous evaluation, AI risks exacerbating existing societal divisions and eroding public trust, particularly when dealing with topics that evoke strong emotional responses and deeply held beliefs.

Effective communication of artificial intelligence insights hinges on a crucial balance: presenting robust evidence alongside honest acknowledgment of inherent uncertainties. Rather than portraying AI outputs as definitive truths, systems designed with evaluative principles prioritize the delivery of supporting data – allowing individuals to trace the reasoning and assess the validity of conclusions. This approach actively empowers critical thinking, as users are equipped to independently weigh the information, identify potential limitations, and form their own informed perspectives. By openly displaying the confidence levels associated with predictions and explicitly stating what remains unknown, AI can move beyond simply delivering answers and instead facilitate a process of informed judgment and responsible decision-making, ultimately fostering trust and promoting a more nuanced understanding of complex issues.

Artificial intelligence, often viewed with skepticism, holds considerable potential to build public confidence and support sound choices when transparency and verifiability are central to its design. Rather than functioning as a ‘black box’, trustworthy AI systems clearly articulate the data sources, algorithms, and reasoning processes behind their outputs, allowing for independent scrutiny and validation. This approach moves beyond simply presenting information to demonstrating how conclusions are reached, fostering a sense of accountability and enabling users to assess the reliability of the AI’s insights. Consequently, AI transitions from a potential source of misinformation to a valuable instrument for informed decision-making across complex domains, strengthening societal trust and promoting responsible innovation.

The pursuit of AI-supported fact-checking, as this study demonstrates, inevitably highlights the limitations of even the most sophisticated systems. Providing explanations alongside evidence doesn’t magically instill trust; it merely shifts the burden of verification. As Carl Friedrich Gauss observed, “I do not know what I appear to the world, but to myself I seem to be a man who has lived a long life without knowing it.” This rings particularly true when evaluating LLM outputs. The elegance of a natural language explanation is quickly overshadowed by the messiness of production data: the need to verify the evidence itself. The study confirms a predictable truth: accessibility of evidence, not the sophistication of the reasoning, dictates informed decision-making. Anything appearing self-healing hasn’t truly broken yet; the system will eventually be stressed by the sheer volume of claims requiring validation.

The Road Ahead

This exploration into evidence and explanation feels… predictably necessary. The study confirms what production systems have screamed for years: simply saying something is fact-checked isn’t enough. Humans, stubbornly, still require supporting data. The current fascination with large language models will inevitably run headfirst into this wall. It’s easy to generate a convincing rationale; it’s considerably harder to attach verifiable substance. Expect a surge in ‘explainable AI’ research, mostly focused on post-hoc rationalizations for decisions already made, and a continued struggle to build systems that actually reason from evidence rather than confabulate plausible-sounding narratives.

The real challenge isn’t trust, it’s scale. This work demonstrates that accessible evidence improves evaluation in a controlled setting. But what happens when the ‘controlled setting’ is a firehose of information? Any system that claims to ‘scale’ fact-checking will quickly discover that humans are remarkably adept at ignoring inconvenient truths, regardless of how neatly they’re presented. Better one well-vetted source than a thousand confidently incorrect microservices, a lesson history consistently ignores.

Ultimately, this isn’t a problem AI will solve. It’s a problem AI will faithfully reproduce, amplifying existing biases and vulnerabilities. The focus should shift from building ‘trustworthy AI’ to building systems that make it easier for humans to apply their own critical thinking – a task, one suspects, that will remain perpetually underfunded.

Original article: https://arxiv.org/pdf/2601.11387.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

2026-01-19 07:40