Author: Denis Avetisyan

New research explores how AI-powered tools can foster deeper critical thinking in decision-making, moving beyond simple reliance on automated outputs.

This review introduces the AI-Assisted Critical Thinking (AACT) framework and examines how it leverages metacognition and counterfactual reasoning to improve human-AI collaboration in decision support systems.

Despite the increasing prevalence of human-AI collaborative decision-making, performance often lags due to insufficient attention to the human element of reasoning. This paper, ‘Understanding the Effects of AI-Assisted Critical Thinking on Human-AI Decision Making’, introduces the AI-Assisted Critical Thinking (AACT) framework, designed to improve decision robustness by prompting users to critically reflect on their own rationale rather than passively accepting AI outputs. Through a case study on house price prediction, findings demonstrate that AACT reduces over-reliance on AI, though at the cost of increased cognitive load, and is particularly beneficial for users familiar with AI technologies. How can we best leverage AI to facilitate critical thinking and build truly effective human-AI partnerships?

The Fragile Foundation of Choice: Recognizing the Limits of Intuition

Despite access to abundant data, human decision-making remains surprisingly vulnerable to cognitive biases. These aren’t necessarily flaws in reasoning, but rather deeply ingrained mental shortcuts – heuristics – developed to expedite processing in a complex world. Studies reveal consistent patterns of deviation from logical choices, such as confirmation bias, where individuals favor information confirming existing beliefs, or anchoring bias, where initial information unduly influences subsequent judgments. Even experts, equipped with considerable knowledge, are susceptible, demonstrating that biases operate largely outside of conscious awareness and can affect evaluations in fields ranging from medical diagnosis to financial forecasting. The pervasiveness of these biases highlights the limitations of purely intuitive thinking, suggesting that systematic approaches to decision-making are crucial for minimizing errors and improving outcomes.

These shortcuts make quick responses possible in complex situations, but they are not foolproof: because they favor speed over accuracy, they introduce systematic errors. The availability heuristic, for example, leads people to overestimate the likelihood of events that are easy to recall, such as dramatic news stories, while underestimating more common but less sensational risks. Because such biases are ingrained, they can skew judgment even when comprehensive data is available, a reminder that intelligent thought isn’t always rational thought.

The growing complexity of modern challenges calls for a shift in how decisions are approached: rather than trying to eliminate human judgment, the focus is increasingly on tools that enhance it. Systems designed to bypass human cognition entirely can prove brittle when they meet circumstances their designers did not anticipate. More effective strategies develop methods, such as data visualization, predictive modeling, and collaborative platforms, that present information in ways that counter cognitive biases and support better-informed choices. This augmentation approach keeps the strengths of human intuition, namely pattern recognition and contextual understanding, while adding safeguards against systematic errors, with the aim of more robust and reliable outcomes in fields ranging from healthcare and finance to urban planning and environmental management.

Augmenting the Oracle: An AI Framework for Critical Thinking

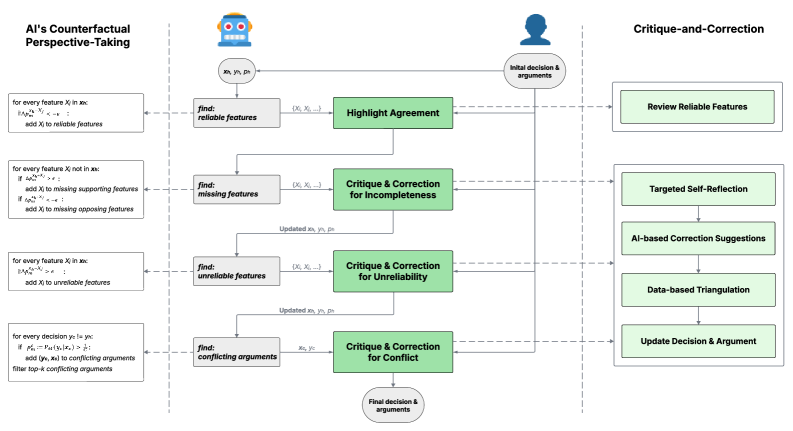

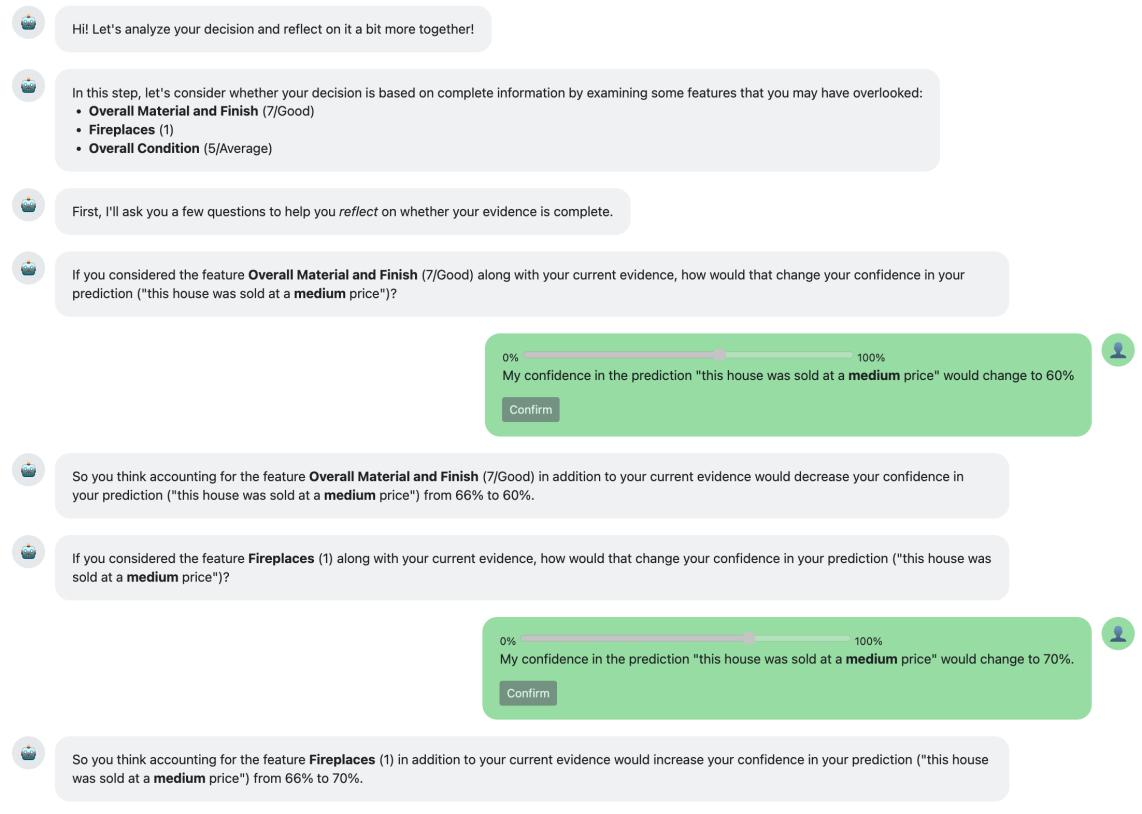

The AI-Assisted Critical Thinking (AACT) framework is designed to augment human decision-making processes by offering both evaluative feedback and alternative viewpoints. This is achieved through an AI system that analyzes the reasoning behind a proposed decision, identifying potential weaknesses or biases in the supporting arguments. Rather than simply providing a ‘yes’ or ‘no’ assessment, AACT delivers constructive critique, highlighting areas for improvement and suggesting alternative perspectives that the decision-maker may not have initially considered. The intention is not to replace human judgment, but to enhance it by providing a more comprehensive and objective evaluation of available information and potential outcomes.

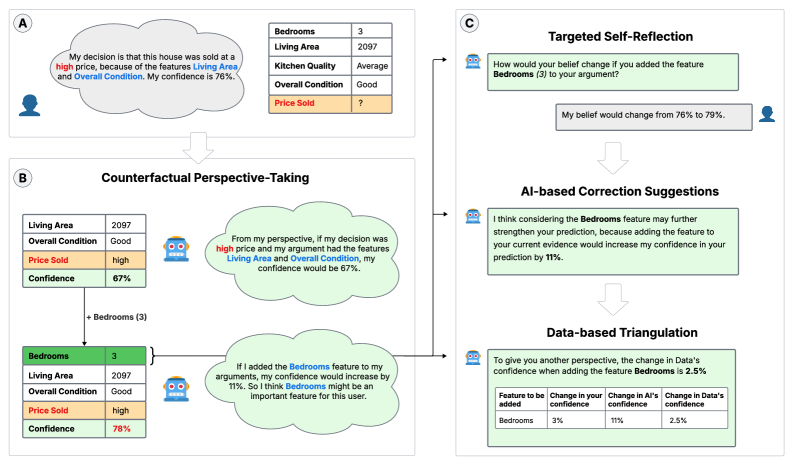

The AI-Assisted Critical Thinking (AACT) framework uses AI tailored to a specific knowledge domain to evaluate the arguments behind a decision. One technique it applies is counterfactual perspective-taking: the AI generates alternative scenarios to test how robust the original decision logic is. By systematically exploring “what if” conditions, it exposes weaknesses or biases in the reasoning. Because the AI is domain-specific, its analysis can account for the contextual factors and established principles of that field, which makes its critique more accurate and relevant. The evaluation therefore goes beyond checking logical validity to assess how a decision holds up under varying conditions.
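Counterfactual perspective-taking can be illustrated with a small what-if probe: change one input at a time and check whether the decision changes. The Python sketch below is a hypothetical illustration, not the paper’s implementation; the integer-weighted scoring model, its weights, the feature names, and the helpers `predicts_above_median` and `what_if_flips` are all assumptions made for the example.

```python
# Hypothetical integer-weighted scoring model standing in for the AI.
# living_area is in hundreds of square feet; weights are illustrative only.
WEIGHTS = {"overall_qual": 2, "living_area": 1, "garage_cars": 1}
BIAS = -28


def predicts_above_median(house: dict[str, int]) -> bool:
    """Return True if the toy model scores the house above the median price."""
    score = BIAS + sum(weight * house[name] for name, weight in WEIGHTS.items())
    return score > 0


def what_if_flips(house: dict[str, int],
                  alternatives: dict[str, list[int]]) -> list[tuple[str, int]]:
    """Change one feature at a time; return the scenarios that flip the decision."""
    baseline = predicts_above_median(house)
    flips = []
    for feature, values in alternatives.items():
        for value in values:
            scenario = {**house, feature: value}  # copy with one feature changed
            if predicts_above_median(scenario) != baseline:
                flips.append((feature, value))
    return flips


house = {"overall_qual": 6, "living_area": 15, "garage_cars": 2}
alternatives = {"overall_qual": [5, 7], "living_area": [12, 18], "garage_cars": [1, 3]}
print(predicts_above_median(house))        # True
print(what_if_flips(house, alternatives))  # [('overall_qual', 5), ('living_area', 12), ('garage_cars', 1)]
```

Scenarios that flip the decision after a small change mark the features on which the original reasoning is fragile; in this toy case, lowering overall quality by one point, shrinking the living area by 300 square feet, or losing one garage space is enough.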

The AACT framework’s operational logic draws on the Recognition/Metacognition model, which holds that effective decision-making requires continuous self-monitoring and adjustment. In AACT, this takes the form of a cycle: the AI first recognizes potential flaws or biases in a proposed decision’s rationale, then generates critical feedback and alternative viewpoints that prompt a reassessment of the initial argument. Repeating this cycle of recognition, critique, and refinement progressively improves the quality and robustness of the final decision, replacing a one-off, static analysis with dynamic, adaptive reasoning.

Deconstructing the Decision: AACT in Practice

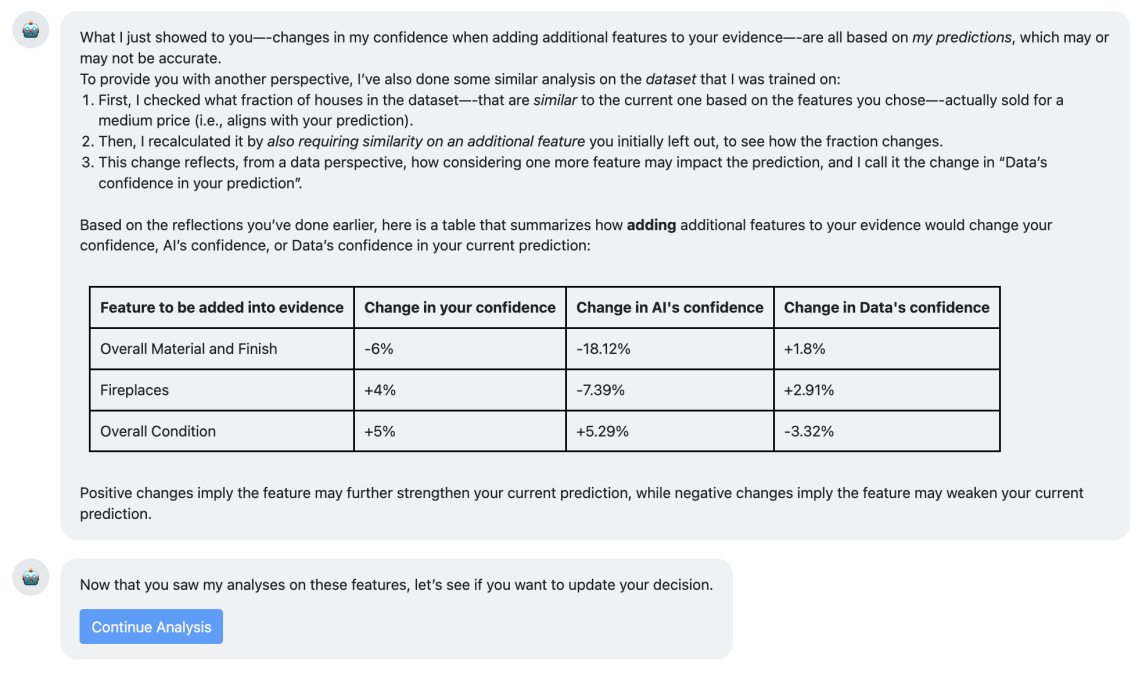

AACT grounds its critiques in two methods: AI explanation and data triangulation. AI explanation interprets the internal logic of the AI model being assessed, revealing which factors drive its decisions. Data triangulation then checks these explanations by cross-referencing the AI’s reasoning against the data used for training and evaluation. Together they go beyond reporting the AI’s output to expose the evidence and reasoning behind it, giving a more robust and verifiable account of the assessment.
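One simple way to picture data triangulation is a sign check: for every feature an explanation cites, confirm that the direction of its claimed effect agrees with the direction of its association in the data. The sketch below assumes this interpretation (the paper’s actual procedure may differ) and uses a hypothetical four-house table, a hypothetical explanation, and illustrative helper names (`pearson`, `triangulate`).

```python
from statistics import mean


def pearson(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation; returns 0.0 if either variable has no variation."""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    if vx == 0 or vy == 0:
        return 0.0
    return cov / (vx * vy) ** 0.5


def triangulate(explanation: dict[str, float], rows: list[dict[str, float]],
                target: str = "price") -> dict[str, bool]:
    """For each cited feature, check that the claimed effect's sign matches
    the sign of the feature's correlation with the target in the data."""
    ys = [row[target] for row in rows]
    report = {}
    for feature, claimed_weight in explanation.items():
        r = pearson([row[feature] for row in rows], ys)
        report[feature] = r != 0 and (claimed_weight > 0) == (r > 0)
    return report


# Hypothetical data (price in thousands of dollars) and a hypothetical explanation.
rows = [
    {"overall_qual": 5, "year_sold": 2008, "price": 140},
    {"overall_qual": 6, "year_sold": 2007, "price": 165},
    {"overall_qual": 7, "year_sold": 2009, "price": 190},
    {"overall_qual": 8, "year_sold": 2006, "price": 240},
]
explanation = {"overall_qual": 1.2, "year_sold": 0.3}
print(triangulate(explanation, rows))  # {'overall_qual': True, 'year_sold': False}
```

A mismatch does not prove the explanation wrong, since a single correlation ignores the other features, but it flags a claim that deserves scrutiny before the user accepts it.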

For counterfactual analysis, the AACT framework uses Local Interpretable Model-agnostic Explanations (LIME) to identify the features that most influence an AI’s assessment. LIME approximates the complex model with a simpler, interpretable model that is valid only locally, around one specific prediction. It samples perturbed versions of the input, observes how the model’s predictions change, and fits the surrogate to those responses, weighting each sample by its proximity to the original input. The surrogate’s coefficients show which features have the greatest influence on this prediction, and therefore which changes to the input are most likely to alter the AI’s decision, pinpointing the driving factors behind the assessment.
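The core of LIME can be sketched in plain Python: sample perturbations around the instance, weight them by proximity, and fit a weighted linear surrogate whose coefficients serve as local feature weights. This is a simplified, continuous-feature variant written for illustration; the `lime` package differs in details such as how it samples perturbations and handles features. The black-box function, its coefficients, the kernel settings, and all helper names are hypothetical.

```python
import math
import random


def black_box(x: list[float]) -> float:
    """Hypothetical opaque model: P(above median price) from three standardized
    features [overall_qual, living_area, house_age], with an interaction term."""
    z = 2.0 * x[0] + 0.5 * x[1] - 1.0 * x[2] + 0.5 * x[0] * x[1]
    return 1.0 / (1.0 + math.exp(-z))


def solve(a: list[list[float]], b: list[float]) -> list[float]:
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def lime_weights(model, x, n_samples=500, sigma=0.5, width=0.75, seed=0):
    """LIME-style local surrogate: sample around x, weight samples by proximity,
    fit a weighted linear model, and return one coefficient per feature."""
    rng = random.Random(seed)
    d = len(x)
    xtwx = [[0.0] * (d + 1) for _ in range(d + 1)]  # accumulates X^T W X
    xtwy = [0.0] * (d + 1)                          # accumulates X^T W y
    for _ in range(n_samples):
        z = [xi + rng.gauss(0.0, sigma) for xi in x]
        dist2 = sum((zi - xi) ** 2 for zi, xi in zip(z, x))
        w = math.exp(-dist2 / width ** 2)           # Gaussian-shaped proximity kernel
        row = [1.0] + z                             # leading 1.0 = intercept column
        y = model(z)
        for i in range(d + 1):
            xtwy[i] += w * row[i] * y
            for j in range(d + 1):
                xtwx[i][j] += w * row[i] * row[j]
    coef = solve(xtwx, xtwy)
    return coef[1:]  # drop the intercept


names = ["overall_qual", "living_area", "house_age"]
for name, weight in zip(names, lime_weights(black_box, [0.2, -0.1, 0.3])):
    print(f"{name:>12}: {weight:+.3f}")
```

The surrogate’s coefficients approximate the black box’s local slopes, so the feature with the largest absolute weight (here `overall_qual`) is the first candidate for a what-if change.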

The AACT framework was validated in a case study using the Ames Housing dataset, which describes residential properties, and a logistic regression model that achieved an accuracy of 0.8737 on the held-out test set. The dataset allowed a quantifiable assessment of how well AACT dissects and explains the model’s predictions. The accuracy describes the AI being critiqued rather than AACT itself: it indicates how often the AI’s recommendations are correct, which is the baseline against which users’ reliance on those recommendations, and the explanations produced by methods such as LIME, can be interpreted.
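An accuracy figure like this is the share of held-out examples the classifier labels correctly. The sketch below shows that computation end to end with a from-scratch logistic regression. It assumes, purely for illustration, a binary above/below-median label; the synthetic data, the two standardized features, the train/test split, and the training settings are hypothetical, so it will not reproduce the paper’s 0.8737.

```python
import math
import random


def make_toy_data(n: int, seed: int = 0):
    """Synthetic stand-in for house data: two standardized features and a
    label of 1 for 'above median price' (hypothetical labeling rule)."""
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        x = [rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)]
        rows.append((x, 1 if 2.0 * x[0] + x[1] > 0 else 0))
    return rows


def predict_proba(w: list[float], x: list[float]) -> float:
    """Logistic model: w[0] is the bias, w[1:] are feature weights."""
    z = w[0] + sum(wi * xi for wi, xi in zip(w[1:], x))
    return 1.0 / (1.0 + math.exp(-z))


def train_logreg(rows, lr: float = 0.5, epochs: int = 300) -> list[float]:
    """Full-batch gradient descent on the mean log-loss."""
    w = [0.0] * (len(rows[0][0]) + 1)
    for _ in range(epochs):
        grad = [0.0] * len(w)
        for x, y in rows:
            err = predict_proba(w, x) - y  # derivative of log-loss w.r.t. z
            grad[0] += err
            for j, xj in enumerate(x):
                grad[j + 1] += err * xj
        w = [wi - lr * g / len(rows) for wi, g in zip(w, grad)]
    return w


def accuracy(w: list[float], rows) -> float:
    """Fraction of rows whose thresholded prediction matches the label."""
    hits = sum((predict_proba(w, x) > 0.5) == (y == 1) for x, y in rows)
    return hits / len(rows)


data = make_toy_data(400)
train, test = data[:300], data[300:]  # the last 100 rows are held out
w = train_logreg(train)
print(f"held-out accuracy: {accuracy(w, test):.4f}")
```

Evaluating on rows the model never saw during training is what makes the figure an estimate of how often the AI’s recommendations will be right for new houses.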

AACT’s emphasis on transparent explanations directly addresses a critical need for user acceptance of AI-driven systems. By detailing the factors influencing an AI’s assessment, AACT moves beyond a “black box” approach, enabling users to comprehend why a particular recommendation or critique was generated. This clarity is not merely about showcasing the AI’s internal logic; it actively builds user confidence in the AI’s reliability and validity, leading to increased adoption and appropriate reliance on its guidance. The provision of these explanations facilitates informed decision-making, allowing users to evaluate the AI’s output in context and identify potential biases or limitations.

Beyond the Algorithm: AACT’s Impact on Human Judgment

The AACT framework is designed not as a substitute for human expertise but as an extension of it, enabling a synergistic partnership between people and AI. Rather than automating decisions, it presents AI insights alongside opportunities for critical self-reflection. This approach treats human judgment, informed by experience and contextual understanding, as central to effective decision-making, with AI serving as a source of information and alternative perspectives. By prompting users to evaluate their assumptions and consider other viewpoints, AACT lets individuals draw on AI’s capabilities without relinquishing control or yielding to undue influence, fostering a more robust and nuanced decision-making process.

The AACT framework cultivates critical thinking through targeted self-reflection, prompting users to examine the foundations of their own judgments. The aim is not just to catch errors but to surface the assumptions and biases that inevitably shape human reasoning. By asking users to articulate why they agree or disagree with an AI suggestion, the system fosters metacognition, or thinking about one’s own thinking. This deliberate pause helps users notice cognitive shortcuts or prior beliefs that may be unduly steering their decisions, leading to more informed and nuanced conclusions. Eliminating bias entirely is impossible; the goal is to make these tendencies visible, enabling a more objective assessment of information and reducing impulsive reliance on either the AI or preconceived notions.

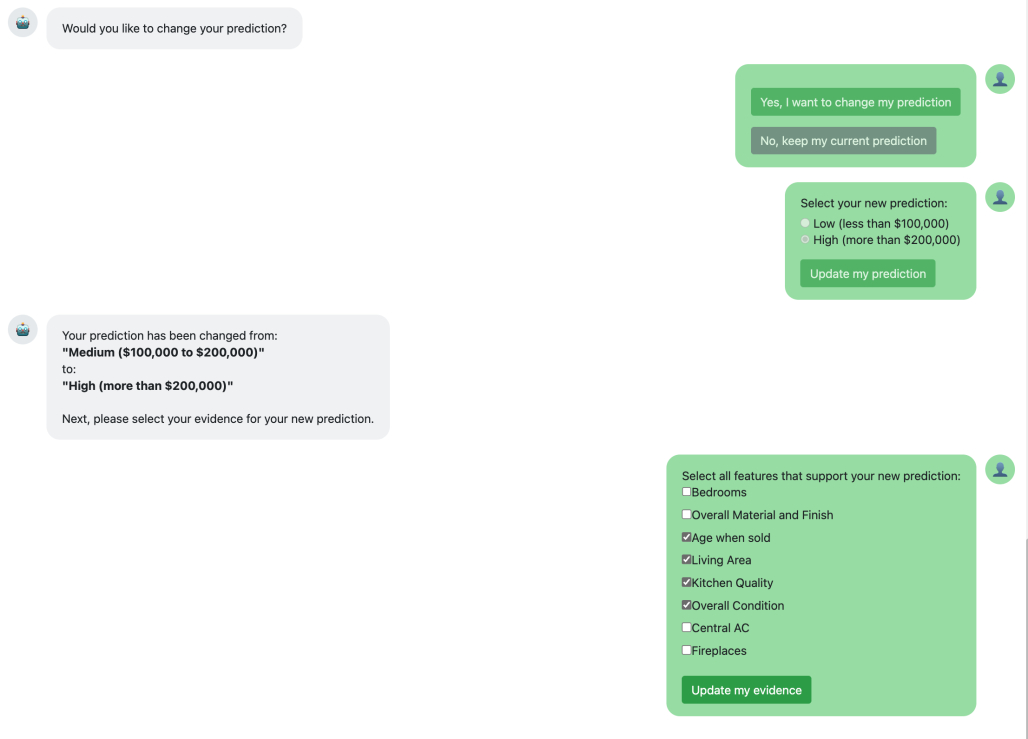

The architecture of the AACT framework is fundamentally designed to preserve human agency in decision-making processes. Rather than automating choices, the system functions as a cognitive partner, presenting information and prompting critical self-reflection, but ultimately leaving the final judgment to the user. This emphasis on decision autonomy is crucial; the system doesn’t dictate outcomes, but instead empowers individuals to make informed choices by surfacing potential biases and encouraging a thorough evaluation of available data. By maintaining this human-in-the-loop approach, AACT seeks to avoid the pitfalls of both blindly accepting AI recommendations and dismissing potentially valuable insights, fostering a collaborative dynamic where human expertise and artificial intelligence complement each other.

The study finds that AACT reduces users’ tendency to over-rely on AI: participants without bachelor’s degrees showed a 0.565 reduction in over-reliance when using AACT compared with a standard recommender system. The intervention also produced a measurable learning gain, a 0.157 increase during the active phase, but this did not carry over into better performance on post-intervention assessments, suggesting that strategies are needed to help users retain the critical evaluation skills AACT promotes.

The AACT framework aims not simply to improve the accuracy of AI-assisted decisions but to cultivate a more nuanced relationship between human judgment and artificial intelligence. By prompting users to reflect on their own reasoning and on their grounds for accepting or rejecting AI suggestions, the framework targets both failure modes: over-reliance, in which people follow an algorithm blindly, and under-reliance, in which unwarranted skepticism leads them to dismiss valuable insights. The goal is for users to integrate AI as a tool that supports, rather than replaces, critical thinking and informed decision-making, resulting in more robust and well-considered outcomes.
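Over- and under-reliance are often operationalized as conditional rates: over-reliance as the share of trials where the AI was wrong yet the user’s final answer followed it, and under-reliance as the share of trials where the AI was right yet the user deviated from it. The sketch below assumes these common definitions, which may not match the paper’s exact metric; the `Trial` record, the `reliance_rates` helper, and the five example trials are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Trial:
    ai_advice: int    # label the AI recommended
    human_final: int  # label the user finally chose
    truth: int        # correct label


def reliance_rates(trials: list[Trial]) -> tuple[float, float]:
    """Return (over_reliance, under_reliance) under the assumed definitions."""
    ai_wrong = [t for t in trials if t.ai_advice != t.truth]
    ai_right = [t for t in trials if t.ai_advice == t.truth]
    # Over-reliance: followed the AI on trials where the AI was wrong.
    over = sum(t.human_final == t.ai_advice for t in ai_wrong) / len(ai_wrong) if ai_wrong else 0.0
    # Under-reliance: deviated from the AI on trials where the AI was right.
    under = sum(t.human_final != t.ai_advice for t in ai_right) / len(ai_right) if ai_right else 0.0
    return over, under


trials = [
    Trial(ai_advice=1, human_final=1, truth=0),  # AI wrong, user followed
    Trial(ai_advice=0, human_final=1, truth=1),  # AI wrong, user corrected it
    Trial(ai_advice=1, human_final=1, truth=1),  # AI right, user followed
    Trial(ai_advice=0, human_final=1, truth=0),  # AI right, user deviated
    Trial(ai_advice=1, human_final=1, truth=1),  # AI right, user followed
]
over, under = reliance_rates(trials)
print(f"over={over:.2f} under={under:.2f}")  # over=0.50 under=0.33
```

Conditioning each rate on whether the AI was right keeps the two failure modes separate, so an intervention can lower over-reliance without simply pushing users toward blanket rejection of the AI.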

The pursuit of increasingly sophisticated decision support systems, as explored within the AACT framework, often feels less like construction and more like tending a garden. One anticipates eventual decay, yet still cultivates with care. Donald Knuth observed, “Premature optimization is the root of all evil.” This rings true; the drive for immediate gains in AI-assisted decision-making, the promise of optimized outcomes, can easily eclipse the need for flexible, adaptable systems. The paper rightly focuses on metacognition (thinking about thinking) because true robustness isn’t achieved through algorithmic perfection, but through a system’s capacity to withstand unforeseen circumstances and encourage deeper human reflection, even when the AI appears certain.

Where Do We Grow From Here?

The framework detailed within doesn’t promise better decisions, but a more thoughtful entanglement with the tools that propose them. It acknowledges a simple truth: a system isn’t a calculator, it’s a garden; cultivate only the seeds of self-awareness, and the yield will be a more resilient form of reasoning. The focus on metacognition isn’t a technical fix, but a subtle shift in responsibility. One doesn’t build trust into an algorithm; one nurtures the capacity to question it.

The true limitations of this work, and indeed the field, lie not in the sophistication of the AI, but in the stubborn opacity of the human mind. Counterfactual reasoning, even when prompted, remains a fragile bloom. The study highlights the potential for improved decision-making, but raises the question: what forms of cognitive scaffolding are most effective in sustaining this deeper reflection? Future research should explore the long-term effects, and the inevitable decay, of these metacognitive prompts.

Resilience lies not in isolating components from failure, but in forgiveness between them. The next generation of decision support systems won’t eliminate error, but embrace it as a necessary condition for growth. Perhaps the most fruitful path lies in abandoning the pursuit of ‘correct’ answers, and instead focusing on the cultivation of robust, self-aware questioners.

Original article: https://arxiv.org/pdf/2602.10222.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/


2026-02-12 19:08