Author: Denis Avetisyan

A new study investigates whether models guided by natural language instructions can effectively support the open-ended process of exploratory search.

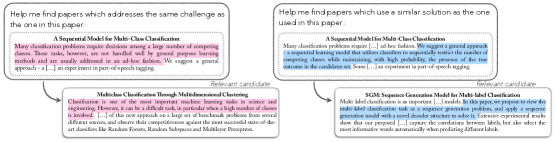

Researchers evaluate the ability of instructed retrievers to balance relevance ranking with nuanced instruction following and aspect-conditional information seeking using benchmarks like CSFCube.

Exploratory search, characterized by evolving information needs, presents a challenge for retrieval systems that demand nuanced understanding of user intent. This work, ‘Can Instructed Retrieval Models Really Support Exploration?’, investigates whether instruction-following retrieval models-designed to adapt results based on natural language guidance-can effectively support aspect-conditional seed-guided exploration. While the evaluation reveals improvements in ranking relevance compared to traditional methods, the study demonstrates that performance in following instructions does not consistently correlate with improved ranking, often exhibiting insensitivity or counterintuitive behavior. This raises the question of whether current instructed retrievers truly enhance the user experience for extended exploratory sessions requiring greater responsiveness to complex, evolving instructions.

The Illusion of Understanding: Why Search Still Struggles

Conventional search engines excel when presented with specific questions, yet frequently stumble when users begin with vague curiosity. These exploratory searches, driven by a desire for broad understanding rather than pinpointed answers, present a unique challenge. The typical keyword-matching approach proves inadequate, often returning results that are either too narrow or irrelevant to the user’s evolving information need. Instead of seeking the answer, the user is attempting to define the question itself, requiring a system capable of interpreting ambiguity and suggesting pathways for discovery. This demands a shift from precision-focused retrieval to a more holistic approach that prioritizes relevance, diversity, and the facilitation of intellectual exploration – a landscape where the journey of learning is as important as the destination.

Traditional information retrieval systems are frequently optimized for known-item finding – locating documents matching specific keywords. However, exploratory search presents a distinct challenge, necessitating models that infer user intent beyond the literal query. This demands a move away from purely lexical matching towards semantic understanding, where the system attempts to grasp the underlying information need. Such nuanced approaches require incorporating techniques like query expansion, topic modeling, and relevance ranking based on conceptual similarity, rather than simply keyword overlap. Ultimately, effective exploratory search relies on the ability to bridge the gap between what a user types and what they truly seek to discover, fostering a dynamic interaction that refines understanding with each retrieved document.

Current exploratory search systems often falter because they inadequately utilize the rich contextual information present within initial, or ‘seed’, documents provided by the user. These systems frequently treat seed documents as simple lists of keywords, overlooking the subtle relationships between concepts and the overall thematic content. This limitation hinders effective exploration, as the system struggles to identify relevant, yet conceptually distant, information that aligns with the user’s underlying information need. Consequently, retrieval becomes overly reliant on surface-level keyword matching, failing to surface the deeper connections and nuanced perspectives crucial for genuine exploratory learning. Improving the capacity to interpret and leverage contextual cues within seed documents represents a significant hurdle in building truly intelligent exploratory search tools.

As information landscapes become increasingly complex, traditional search methods prove inadequate for users who begin without clearly defined needs. These individuals require retrieval systems capable of adapting to evolving information needs – systems that don’t simply match keywords, but instead learn from initial interactions to refine subsequent results. The critical need for adaptable retrieval stems from the inherent uncertainty in exploratory tasks; users often don’t know what they’re looking for until they see it, necessitating a dynamic search process. This demands models that can interpret ambiguous queries, leverage contextual clues from examined documents, and proactively suggest potentially relevant avenues of investigation, ultimately empowering users to efficiently navigate and synthesize knowledge within vast and multifaceted domains.

Instructed Retrieval: A Thin Veneer of Intelligence

Instructed retrieval addresses limitations in traditional information retrieval by directly incorporating natural language instructions into the search process. Unlike keyword-based or semantic search methods, instructed retrievers utilize these instructions to interpret the user’s underlying intent and refine the retrieval strategy accordingly. This is achieved by framing the retrieval task as an instruction-following problem for a Large Language Model (LLM), allowing the model to understand what the user is looking for, not just which keywords are present. The inclusion of instructions enables a more nuanced and context-aware search, improving the precision and relevance of returned results by aligning them with the user’s specific needs and expectations.

Instructed retrieval models utilize Large Language Models (LLMs) as a core component for interpreting natural language instructions provided alongside search queries. These LLMs are not simply used for keyword matching; instead, they analyze the instruction to understand the user’s intent and desired characteristics of the retrieved documents. This understanding then dynamically adjusts the retrieval strategy, influencing factors such as weighting of different document features, re-ranking of initial search results, and the selection of appropriate similarity metrics. The LLM effectively acts as a programmable filter, modifying the retrieval process to prioritize documents that best fulfill the specified instructions, rather than solely relying on lexical similarity to the query.

Pairwise ranking prompting optimizes Large Language Model (LLM)-based document ranking by presenting the LLM with pairs of documents and a query, asking it to identify which document is more relevant to the query. This comparative approach allows the LLM to learn subtle distinctions in relevance without requiring absolute relevance scores. During training or fine-tuning, numerous document pairs are evaluated, effectively creating a preference-based learning signal. The LLM then adjusts its internal parameters to consistently favor the more relevant document in each pair, leading to improved ranking performance as assessed by metrics like Normalized Discounted Cumulative Gain (NDCG) and Mean Reciprocal Rank (MRR). This method is particularly effective in scenarios where defining an absolute relevance score is difficult or subjective.

Instructed retrievers address the challenge of semantic misalignment between user queries and document content by explicitly incorporating natural language guidance into the retrieval process. Traditional information retrieval systems often rely on keyword matching, which can fail to capture the nuanced intent behind a query. By accepting instructions alongside the query – specifying, for example, the desired document characteristics or the type of information sought – these systems utilize Large Language Models to re-rank or refine search results. This allows the retriever to prioritize documents that not only contain relevant keywords but also demonstrably satisfy the specified instructions, effectively aligning the retrieved information with the user’s underlying need and improving precision.

CSFCube: A Carefully Constructed Illusion of Progress

The CSFCube dataset is designed as a benchmark to assess the performance of retrieval models specifically trained to follow instructions during exploratory search. It consists of 10,000 queries, each paired with a seed document and multiple relevant documents, enabling evaluation of a model’s ability to retrieve information based on nuanced instructions. The dataset differentiates itself from traditional retrieval benchmarks by focusing on scenarios where users iteratively refine their information needs, requiring the model to adapt its retrieval strategy based on instruction types that specify aspects of the query or seed document. This allows for a more granular assessment of instruction-following capabilities beyond simple keyword matching and relevance ranking, and provides a controlled environment for testing models designed for interactive search experiences.

The CSFCube dataset enables evaluation of instruction-following capabilities by presenting models with queries and seed documents paired with specific instructions targeting particular facets of the information need. These instructions are designed to test a model’s ability to prioritize and retrieve documents relevant to the instructed aspect, rather than simply returning generally relevant results. Assessment involves measuring whether the retrieved documents align with the instruction’s focus – for example, requesting information specifically about the “history” of a topic when provided with a document about that topic’s “current status.” This granular evaluation allows researchers to determine the extent to which a model can interpret and act upon nuanced instructions within the context of exploratory search.

Evaluation of retrieval performance within the CSFCube dataset utilizes Normalized Discounted Cumulative Gain at rank 20 (NDCG@20) to measure ranking relevance and precision mean reciprocal rank (p-MRR) to assess instruction-following capability. While NDCG@20 provides a standard metric for overall ranking quality, reported improvements based on this metric have lacked consistency across different experimental results. This inconsistency stems from variations in experimental setup and a potential decoupling between ranking relevance and adherence to specific instructions, requiring a combined analysis of both NDCG@20 and p-MRR to obtain a comprehensive evaluation of instructed retrieval systems.

The CSFCube evaluation utilizes three distinct instruction types to assess model adaptability: definition, paraphrase, and aspect subset instructions. Results indicate that the aspect subset strategy, which focuses retrieval on specific facets of a query, achieved the highest scores for instruction following, demonstrating an ability to prioritize instructed criteria. However, this strategy correlated with reduced ranking relevance as measured by standard metrics. Conversely, employing generic, non-specific instructions resulted in consistently low p-MRR values, typically nearing zero, suggesting the model largely ignored the instructions and functioned as an unguided retriever.

The Promise and Peril of Advanced Retrieval Models

Recent advancements in information retrieval are exemplified by models such as Specter2, SciNCL, and otAspire, each designed to push the boundaries of search technology within specific domains. Specter2 leverages contrastive learning to create robust sentence embeddings, improving semantic search capabilities, while SciNCL focuses on scientific literature, offering enhanced precision for complex research queries. otAspire distinguishes itself through aspect-conditional retrieval, allowing searches to be refined by specific facets of a topic, thereby yielding more relevant results. These models represent a shift from keyword-based approaches to those grounded in semantic understanding, enabling more nuanced and effective access to information – a crucial step in managing the ever-increasing volume of digital data and meeting increasingly sophisticated information needs.

Aspect-conditional retrieval represents a refinement in information access, moving beyond simple keyword matching to prioritize the specific facets of a user’s query that truly matter. Rather than treating all parts of a question equally, these systems – exemplified by models like otAspire – dissect the query to identify its core aspects and then retrieve documents most relevant to those specific points. This focused approach allows for a more nuanced search, particularly useful when dealing with complex topics where a broad search might yield a deluge of irrelevant results. By concentrating on the essential elements of the information need, aspect-conditional retrieval delivers more precise and insightful answers, improving the overall user experience and enabling more effective knowledge discovery.

Recent advancements showcase the capabilities of large language models, specifically GritLM-7B and gpt-4o, in the realm of instructed retrieval – a process where models respond to complex queries with nuanced information. These models demonstrate the potential of scaling language model size to improve search relevance, yet quantifying specific performance gains remains a challenge. While initial reports suggest improvements, particularly using metrics like NDCG@20, consistent and substantial gains across diverse datasets haven’t been definitively established. This suggests that while the architecture holds promise, further research is needed to understand the conditions under which these models truly excel and to develop robust evaluation methodologies for instructed retrieval systems.

Despite advancements enabling more precise and nuanced search results, recent analyses reveal that many retrieval models demonstrate unexpectedly counterintuitive behavior. While combined approaches-like those utilizing Specter2, SciNCL, and otAspire-aim to address complex information needs, their performance isn’t always aligned with clear instructions. Evaluations using the p-MRR metric show considerable variability, with values ranging from negative figures to approximately 0.2 under certain conditions. This suggests that, despite improvements in retrieval technology, models can exhibit instruction-agnostic tendencies, failing to consistently prioritize relevant information as directed, and highlighting a need for further refinement in aligning model behavior with user intent.

The study’s findings regarding the inconsistency of instruction following feel…predictable. It seems every attempt to build a ‘smarter’ retrieval system-one capable of nuanced, aspect-conditional retrieval as demonstrated with the CSFCube-eventually reveals a brittle core. As Blaise Pascal observed, “The eloquence of angels is silence.” These models speak volumes with their parameters, yet often fail to grasp the simplest intent. The paper notes improvements in relevance ranking, but that’s merely a temporary reprieve. Production will inevitably expose the gaps where the elegant theory couldn’t account for the messy reality of user queries. Better one carefully tuned, deterministic system than a hundred models claiming to ‘understand’ natural language.

So, Where Does This Leave Us?

The promise of instructed retrieval – a system that understands what a user intends to discover, rather than merely matching keywords – remains compelling. However, this work subtly reinforces a familiar pattern. Improved relevance ranking is, predictably, not the same as genuine exploration support. The models perform well when given blunt directives, but nuance appears to be a casualty. It’s a reminder that elegant architectures often stumble on the messy reality of human language. The CSFCube, for all its dimensions, still feels like a carefully constructed sandbox, not a reflection of the unpredictable queries production will inevitably throw at it.

The current focus on instruction-following as a proxy for exploratory intent is likely a local maximum. True exploration isn’t about obeying commands; it’s about serendipitous discovery. Future work must address the inherent tension between controlled retrieval and the very notion of “exploration”. Perhaps the field should consider techniques that intentionally introduce controlled randomness, or mechanisms that allow the system to ‘forget’ initial instructions mid-search.

Ultimately, this isn’t a failure of the technology, but a reminder of its limitations. Legacy systems weren’t ‘wrong’ – they were merely… persistent. And bugs, of course, are just proof of life. The next iteration will undoubtedly offer incremental improvements, but the fundamental challenge – bridging the gap between algorithmic precision and human curiosity – will remain.

Original article: https://arxiv.org/pdf/2601.10936.pdf

Contact the author: https://www.linkedin.com/in/avetisyan/

See also:

- Clash Royale Best Boss Bandit Champion decks

- Vampire’s Fall 2 redeem codes and how to use them (June 2025)

- Best Arena 9 Decks in Clast Royale

- Country star who vanished from the spotlight 25 years ago resurfaces with viral Jessie James Decker duet

- World Eternal Online promo codes and how to use them (September 2025)

- JJK’s Worst Character Already Created 2026’s Most Viral Anime Moment, & McDonald’s Is Cashing In

- ‘SNL’ host Finn Wolfhard has a ‘Stranger Things’ reunion and spoofs ‘Heated Rivalry’

- Solo Leveling Season 3 release date and details: “It may continue or it may not. Personally, I really hope that it does.”

- M7 Pass Event Guide: All you need to know

- Kingdoms of Desire turns the Three Kingdoms era into an idle RPG power fantasy, now globally available

2026-01-20 17:17